AI: Adding Memory to more AI applications offers promise & peril. RTZ #780

Bigger Picture, Sunday July 12, 2025

As we race to integrate AI into our lives by augmenting our abilities and memories, new issues are fast demanding our attention.

Longtime readers know that my first post here on AI: Reset to Zero, over two years ago, noted that nascent AI chatbots had the ‘memory of a goldfish’. AI systems knew very little about us, and that was a vector for massive improvement going forward.

Fast forward to now in this AI Tech Wave, and memory is being added to every type of AI application and service in Box 6 of the AI tech stack below, far beyond chatbots. And that is the Bigger Picture I’d like to discuss this Sunday.

And it’s starting in earnest with our chatbots, like OpenAI’s ChatGPT, now used by up to a billion folks in any given week.

Axios highlights that part in “ChatGPT keeps having more memories of you”:

“OpenAI continues to build and improve ChatGPT’s memory, making it more robust and available to more users, even on its free tier — adding new value and opening new pitfalls.”

“Why it matters: Not everyone is ready for a chatbot that never forgets.”

“ChatGPT’s memory feature uses context from previous conversations to provide more personalized responses.”

New rules apply to helping your chatbot remember, and not remember, things about your daily habits.

“For example: I once asked ChatGPT for a vegetarian meal plan that didn’t include lentils. Since then, the chatbot has remembered that I don’t care for lentils.”

“If you want ChatGPT to remember what you told it, try telling it, ‘Remember this.’ You could also say, ‘Don’t remember this.’”

ChatGPT’s memory capabilities are improving at an accelerating pace, as are those of AI chatbots, agents and companions at OpenAI and other LLM AI providers:

“The big picture: The first version of ChatGPT memory worked like a personalized notebook that let you jot things down to remember later, OpenAI personalization lead Christina Wadsworth Kaplan told Axios.”

“This year, OpenAI expanded memory to make it more automatic and ‘natural,’ Wadsworth Kaplan says.”

“Zoom in: ‘If you were recently talking to ChatGPT about training for a marathon, for example, ChatGPT should remember that and should be able to help you with that in other conversations,’ she added.”

“Wadsworth Kaplan offered a personal example: using ChatGPT to recommend vaccinations for an upcoming trip based on the bot’s memory of her health history.”

“A nurse suggested four vaccinations. But ChatGPT recommended five — flagging an addition based on prior lab results Wadsworth Kaplan had uploaded. The nurse agreed it was a good idea.”

But these new AI habits can be unsettling at first, particularly since humans do tend to anthropomorphize AIs.

“The other side: It can be unsettling for a chatbot to bring up past conversations. But it also serves as a good reminder that every prompt you make may be stored by AI services.”

“When the memory feature was first announced in February 2024, OpenAI told Axios that it had ‘taken steps to assess and mitigate biases, and steer ChatGPT away from proactively remembering sensitive information, like your health details — unless you explicitly ask it to.’”

And there is a lot of social engineering that still needs to be figured out and executed.

“Between the lines: Beyond privacy issues, using ChatGPT’s persistent memory can lead to awkward or even insensitive results, since you can never be sure exactly what the AI does or doesn’t know about you.”

“I asked ChatGPT to generate an image of me based on what it remembers about me and it gave me a wedding ring, even though I’m not married anymore.”

“Online personalization tools have long been plagued by a proneness to ‘inadvertent algorithmic cruelty.’”

“The term was coined by blogger Eric Meyer a decade ago after Facebook showed him an unasked-for, auto-generated ‘Year in Review’ video containing smiling pictures of his daughter — who had recently died of cancer.”

And just like people, chatbots with memory can create friction with users, despite dials to notch them up or down, as iconically depicted in sci-fi:

“Persistent memory can also make chatbots feel like know-it-alls and reduce user control over LLMs.”

“Yes, but: OpenAI says users have full control over their memories.”

“Your memories are only visible to you,” OpenAI personalization team lead Samir Ahmed told Axios. “We really want to make sure that users, in general, feel like they’re in control.”

“There’s an option to delete any memory in the Settings page, or to delete an associated chat, or to conversationally tell ChatGPT what you do or do not want it to remember, Ahmed said.”

And of course, different types and tiers of memory come at different pricing tiers:

“Different versions of ChatGPT (free, Plus, Pro, Enterprise) have varying levels of memory capabilities, with paid versions offering more expanded short- and long-term memory.”

Applying memory features in the enterprise presents its own set of issues and challenges:

“OpenAI says it’s still figuring out how to add more memory to its enterprise tier of ChatGPT.”

“Enterprise and consumer are just different use cases,” Wadsworth Kaplan says. “We built this for consumers to give ChatGPT a sense of who you are and to help you over many conversations.”

“When we look in the future, we have some exciting things on a roadmap, some of which might be more relevant to enterprise users.”

It’s not just our chatbots that are remembering more about us through advanced use of memory architectures, both in software and hardware.

All of our work software, from Zoom and Microsoft Teams to Notion and much more, now features ‘note taking’ capabilities in online meetings. And these tools record, summarize, transcribe, and most importantly REMEMBER everything that went on, in both work and personal contexts. One current AI startup in the note-taking arena that is a Silicon Valley favorite is Granola, for example.

The Information describes its growing role in what I call ‘group memories’ in a piece titled “How Granola—and AI Note Taking—grabbed Silicon Valley’s Attention”:

“The note-taking apps have quickly changed privacy norms. No one’s unhappy about it.”

“Every few years, a new notes app becomes a darling of Silicon Valley, a place where digital optimization of body, mind and thought is a foremost obsession. Evernote was one, then Notion. More recently, the boom in AI has supercharged what note-taking software can do, making the apps infinitely more searchable, organizable and customizable than previous versions.

Nearly all the major meeting tools, like Google Meet and Zoom, offer an AI notes function, and older notes apps like Notion have also embraced AI. Still, Granola is the hands-down favorite among the tech elite, scooping up more than $70 million in funding from the industry’s top names, including Sequoia Capital, Lightspeed Venture Partners and Nat Friedman, the investor who just became one of Meta Platforms’ AI czars.

Users prize Granola over other options for a number of reasons, including its sophisticated search function, which runs on natural language prompts—just as we might talk to ChatGPT or Claude.

More than anything else, though, people like Granola because it hides itself. After connecting Granola to an email calendar, users can join their meetings directly through Granola, and the app transcribes the conversation without ever visibly notifying the participants that it’s running. (The transcriptions happen live, and Granola doesn’t save the audio.)”

The two-year-old startup is seeing rapid adoption, and is becoming a ‘second nature’ habit for users augmenting their memories:

“The proliferation of Granola and of AI note taking has established a surreal status quo: A sizable portion of Silicon Valley’s population is recording each other all the time, often without telling the other person before doing so, upending privacy norms in a manner that would’ve been somewhat unimaginable even a few years ago.

No one seems too fussed about it. “Transparently, I kind of just assume that everyone is using a meeting note taker of some sort,” said Brett Goldstein, CEO and founder of Micro, a New York–based AI startup. And that’s apparently a universal assumption in the industry, judging by the more than half-dozen Granola devotees I spoke to.”

The problem is that all of this, besides sometimes feeling ‘creepy’, is also a strong example of technology racing ahead of legal rules, not just societal ones. And that will explode as we start using AI Agents of all kinds:

“Undoubtedly, part of what’s making everyone feel comfortable—at least for now—is that there hasn’t been a public horror story of anyone using Granola or another AI note taker in some devious fashion to embarrass someone or leak information, and none of the users I spoke to recounted such an incident or had even heard about one. And as with much of AI, the audio apps exist in a legal gray area, since laws about recording another person vary across the country.”

Over a dozen US states have what are called ‘two-party consent’ rules, including the most populous one, California. And other countries have legal rules of different types as well.

And this picture of AI ‘memories’ via chatbots and note taking apps is going to get even more complicated with the latest AI applications.

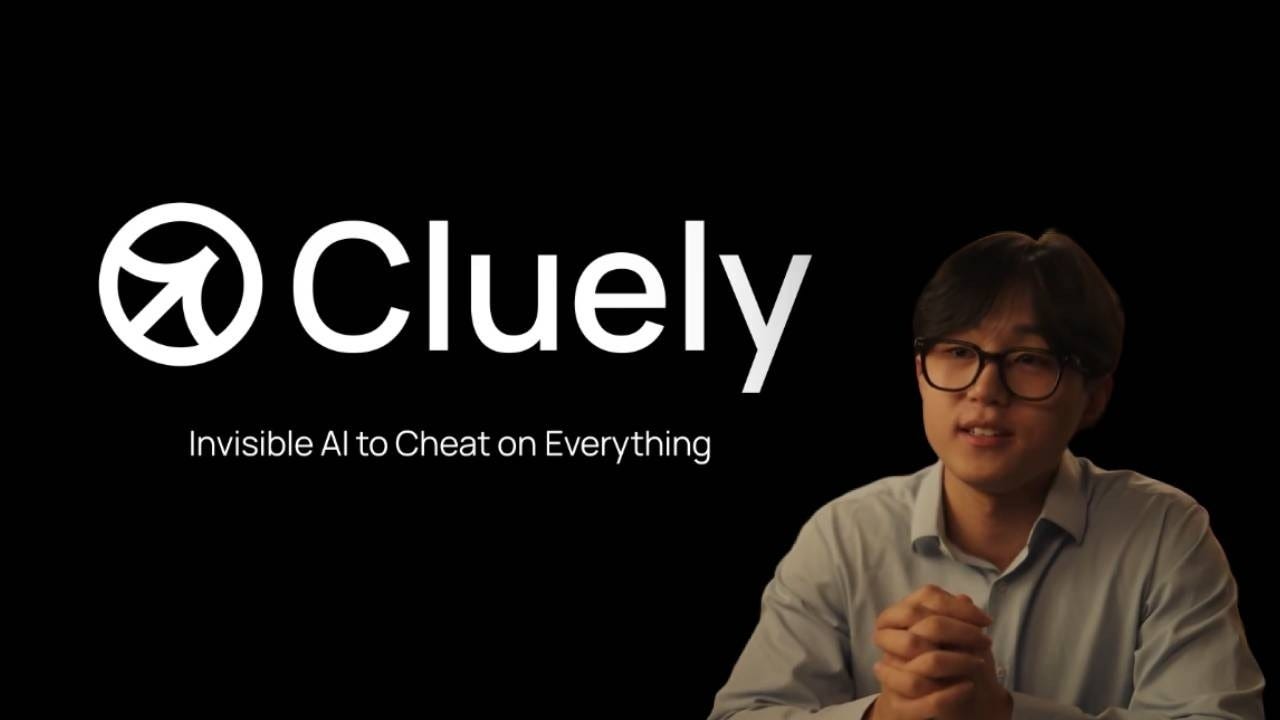

A case in point is AI startup Cluely, which has gained recent notoriety and popularity.

It offers a “cheating” application that layers a translucent window OVER most meeting applications, running the conversations through REAL-TIME transcription, summarization, and ChatGPT lookups. It’s being used for everything from dating to online job interviews. On both sides.

It provides a layer of running information on meeting participants and their backgrounds while suggesting ways to further the conversation. And of course, the software remembers everything for posterity.

All of this becomes AI memory of a whole different type than chatbots just remembering what kinds of food and music we like.

And this is all happening just as our desktop and mobile browsers become more AI-enabled. OpenAI is about to launch an AI browser to compete with Google, which is bringing Gemini into its globally ubiquitous Chrome browser and ChromeOS. Perplexity already offers Comet, and The Browser Company offers Dia.

Thus these browsers will be remembering far more about us and our lives than ever before, and will have the agency to act on those memories on our behalf. AI browsers are a topic I’ve discussed at length in writing and on podcasts.

We are in the earliest stages of an AI memory explosion, where technologies are racing to augment our own mental and attention capabilities. And doing so far ahead of societal and legal norms. Creating new habits in the process.

Forcing us to rapidly evolve new rules, soft and hard, around what AIs can and can’t do with memory.

And that is the Bigger Picture we need to remember most of all in this AI Tech Wave ahead. Massively memorable construction ahead. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here.)