AI: Anthropic's upcoming AI Reasoning in Claude. RTZ #720

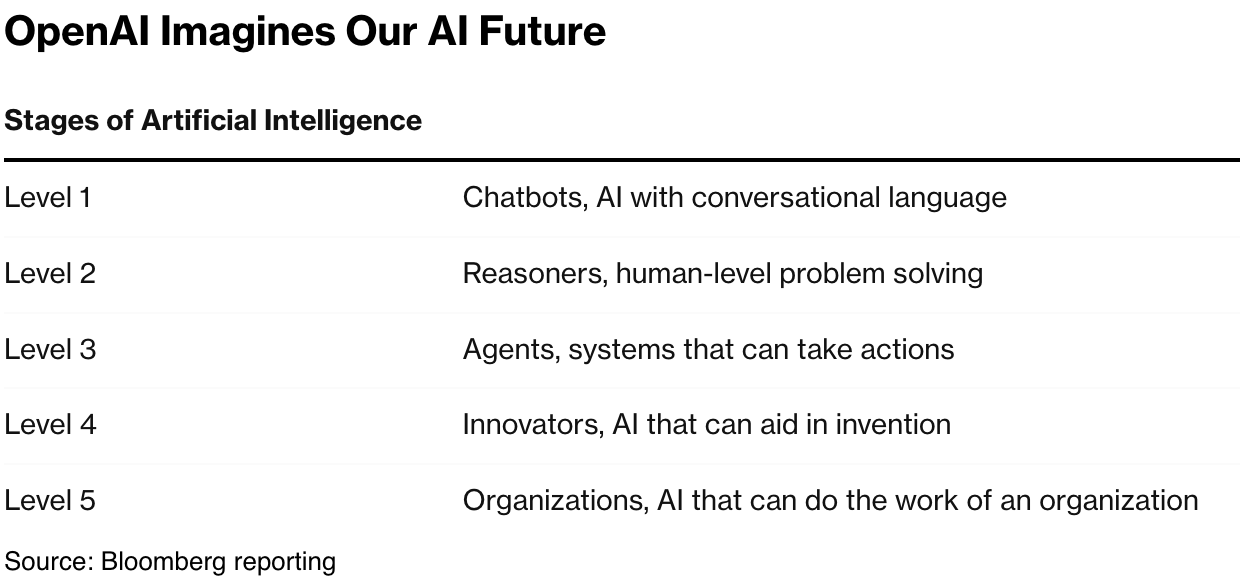

We’ve long discussed the AI roadmap to AGI as a multistep process that goes from AI chatbots to AI reasoning to AI Agents and beyond. It’s one laid out by OpenAI last year, and executed on by the AI industry thus far in this AI Tech Wave.

All of the major LLM AI companies, including OpenAI, Google, Anthropic, Elon Musk’s xAI/Grok and others, are following this roadmap, leapfrogging each other almost weekly in features and capabilities with a steady cadence of updates. All of it is accelerating AI Scaling.

Google will have some things to show on this front with Gemini in a few days at its Google I/O 2025 developer conference.

Also up at bat is Anthropic.

As The Information outlines in “Anthropic’s Upcoming Models Will Think… And Think Some More”:

“The race to develop reasoning models that “think” harder is at full force. At Anthropic, which arrived later than OpenAI and Google to the reasoning race, two upcoming models are taking the concept of “thinking” to the extreme.”

“Anthropic has new versions of its two largest models—Claude Sonnet and Claude Opus—set to come out in the upcoming weeks, according to two people who have used them. What makes these models different from existing reasoning AI is their ability to go back and forth between thinking, or exploring different ways to solve a problem, and “tool use,” the ability to use external tools, applications and databases to find an answer, these people said.”

AI Reasoning is getting embedded into these models, with an ability to go back and forth between reasoning and tool use as needed, based on user queries and prompts:

“The key point: if one of these models is using a tool to try and solve a problem but gets stuck, it can go back to “reasoning” mode to think about what’s going wrong and self-correct, one of the people said.”

“For example, if someone asks one of the new Anthropic models to conduct market research on potential themes for a new coffee shop they’re opening in the East Village in Manhattan, the AI might start by doing a web search for popular coffee shop themes in the U.S. After pulling that information, though, the model could switch to “reasoning” mode and realize that national trends aren’t representative of this neighborhood. Instead, it would decide to pull demographic data about the East Village to understand the average age and income of residents in the neighborhood to create a more relevant theme.”
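To make the back-and-forth in the coffee shop example concrete, here is a minimal toy sketch in Python. It is not Anthropic's implementation or API. The tool names (`web_search`, `demographics`), the `think` function and the placeholder strings are all assumptions invented for illustration; a real model would make these decisions itself rather than follow a hard-coded rule.

```python
from dataclasses import dataclass

# Hypothetical stand-ins for real tools. They return placeholder text,
# not real data, and make no network calls.
TOOLS = {
    "web_search": lambda query: "PLACEHOLDER: national coffee shop trends",
    "demographics": lambda query: "PLACEHOLDER: East Village age and income data",
}

@dataclass
class Step:
    kind: str    # "tool" or "think"
    detail: str  # what happened at this step

def think(last_tool, observation):
    """Toy 'reasoning' step: check whether the last result fits the goal.

    Returns the name of the next tool to call, or None when satisfied.
    """
    if last_tool == "web_search" and "national" in observation:
        # National trends are too broad for a neighborhood-level question,
        # so switch to a more local data source.
        return "demographics"
    return None

def run(goal):
    """Alternate between tool calls and reasoning until reasoning is satisfied."""
    trace = []
    tool = "web_search"
    while tool is not None:
        observation = TOOLS[tool](goal)
        trace.append(Step("tool", f"{tool} -> {observation}"))
        next_tool = think(tool, observation)
        trace.append(Step("think", f"next tool: {next_tool}"))
        tool = next_tool
    return trace

if __name__ == "__main__":
    for step in run("coffee shop themes for the East Village"):
        print(step.kind, "|", step.detail)
```

The point of the sketch is the control flow: each tool result is handed to a reasoning step, and that step decides whether to call another tool or stop.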

AI Coding is one of the core capabilities where AI Reasoning and Agents deeply come into play:

“For people who use the new models to generate code, the models will automatically test the code they created to make sure it’s running correctly. If there’s a mistake, the models can stop to think about what might have gone wrong and then correct it.”

“The new Anthropic models are thus supposed to handle more-complex tasks with less input and corrections from their human customers, the people who have tested the model said. That’s useful in domains like software engineering, where you might want to provide a model with high-level instructions like, “make this app faster,” and let it run on its own to test out various ways of achieving that goal without a lot of hand-holding. (This also reminds me of the “always-on” coding assistants we recently wrote about, which also aim to operate with little instructions from human users.)”
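The generate, test and correct loop described in these quotes can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not how the Anthropic models work internally: the `DRAFTS` list is a hypothetical stand-in for successive model outputs, and `run_tests` and `generate_and_fix` are names made up for this example.

```python
# Hypothetical canned "drafts" standing in for successive model outputs.
DRAFTS = [
    "def add(a, b):\n    return a - b\n",  # first attempt contains a bug
    "def add(a, b):\n    return a + b\n",  # corrected attempt
]

def run_tests(source):
    """Execute candidate code; return an error message, or None if it passes."""
    namespace = {}
    try:
        exec(source, namespace)
        assert namespace["add"](2, 3) == 5, "add(2, 3) should be 5"
    except Exception as exc:
        return f"{type(exc).__name__}: {exc}"
    return None

def generate_and_fix(max_attempts=3):
    """Try drafts until one passes the tests or the attempt budget runs out."""
    feedback = None
    for attempt in range(max_attempts):
        # A real system would feed `feedback` back to the model so it can
        # reason about the failure; here we simply take the next canned draft.
        source = DRAFTS[min(attempt, len(DRAFTS) - 1)]
        feedback = run_tests(source)
        if feedback is None:
            return source, attempt + 1
    raise RuntimeError(f"no passing draft after {max_attempts} attempts: {feedback}")

if __name__ == "__main__":
    source, attempts = generate_and_fix()
    print(f"passed after {attempts} attempt(s):")
    print(source)
```

The attempt cap matters in practice: without it, a loop like this could keep retrying indefinitely when no draft ever passes.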

And it’s a global race, as we’ve seen with companies from China coming out with AI Reasoning products like Manus earlier this year:

“Some of Anthropic’s rivals might argue the new model’s capabilities aren’t all that different from what they offer in existing reasoning models. In a demo of its o3 and o4-mini models, for instance, OpenAI researchers showed how its reasoning models would automatically simplify code they spit out after further reviewing the code and realizing it needed to be simplified.”

It’s going to be an evolution to the revolution:

“It’s hard to know if customers will embrace the new models. Reactions to Claude 3.7 Sonnet, a previously-released Anthropic model that combined reasoning and traditional large language models in a single AI, have been mixed. Some people have complained that the model is more likely to lie and ignore user commands. Others have said that when they don’t give specific-enough instructions to the model, it’s more likely than other AI to get too ambitious and go outside the scope of what it’s supposed to do.”

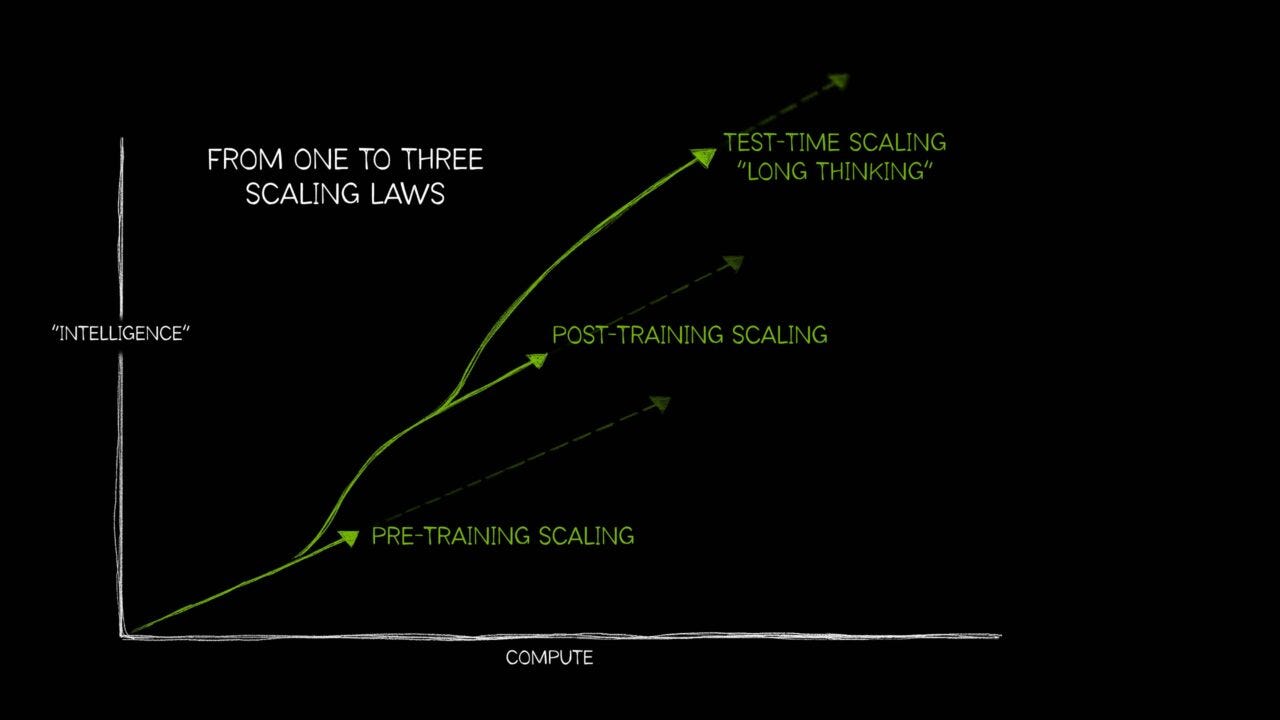

“However, the fact that Anthropic is still doubling down on test-time compute, the technique behind reasoning models, despite mixed feedback shows that it’s still bullish on this approach—at least for now.”

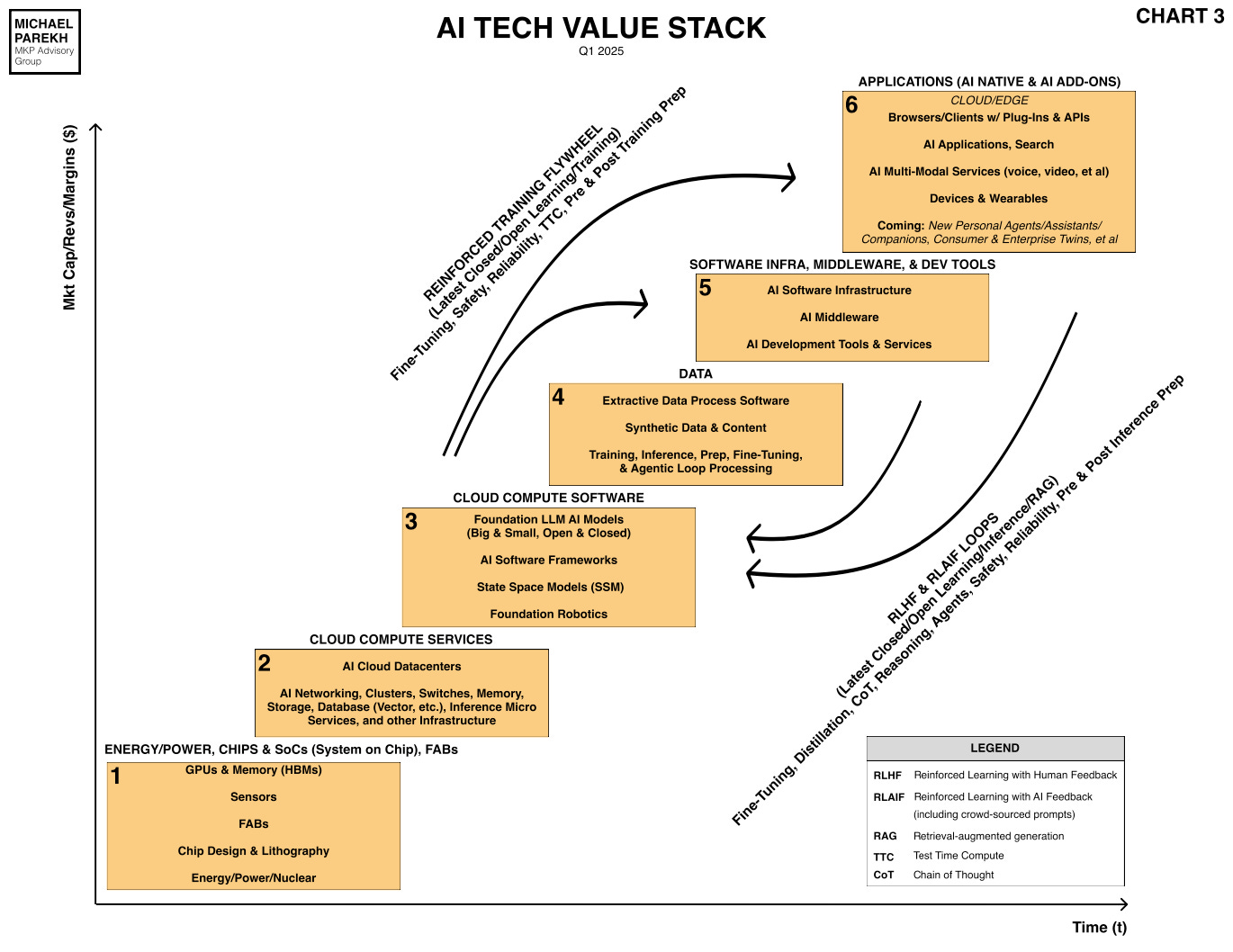

I’ve discussed the promise of AI test-time compute, and it’s incorporated in the latest version of the AI Tech Stack above in the inference and training reinforcement learning loops.

And as discussed in this piece, we’re at the very beginning of these iterations towards AI Scaling in this AI Tech Wave. Anthropic is the latest one to soon show its AI Reasoning chops. Stay tuned.

(NOTE: The discussions here are for informational purposes only, and not meant as investment advice at any time. Thanks for joining us here.)