AI: Apple exploring AI cameras for its device ecosystem. RTZ #668

I’ve long discussed Apple’s distinctive set of bottom-up opportunities to bring unique AI applications and services to life at scale, starting with its Apple Intelligence initiatives and extending across its unparalleled ecosystem of hardware and software platforms to billions of mainstream users.

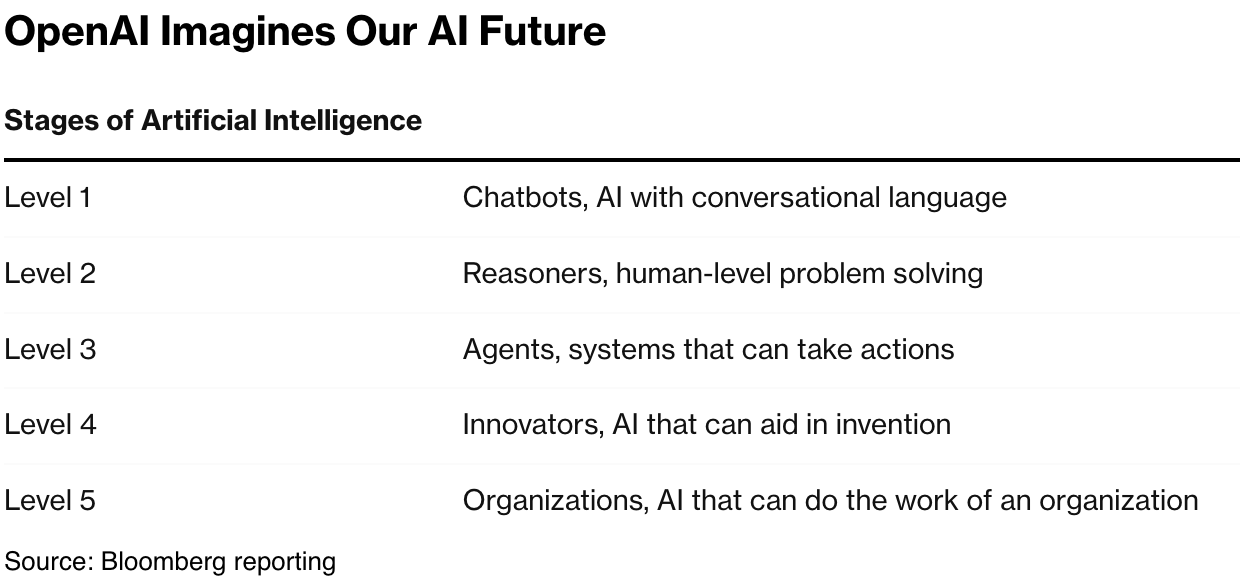

And how that future likely extends beyond one-to-many AI chatbots, AI reasoners, and centralized AI Agent repositories.

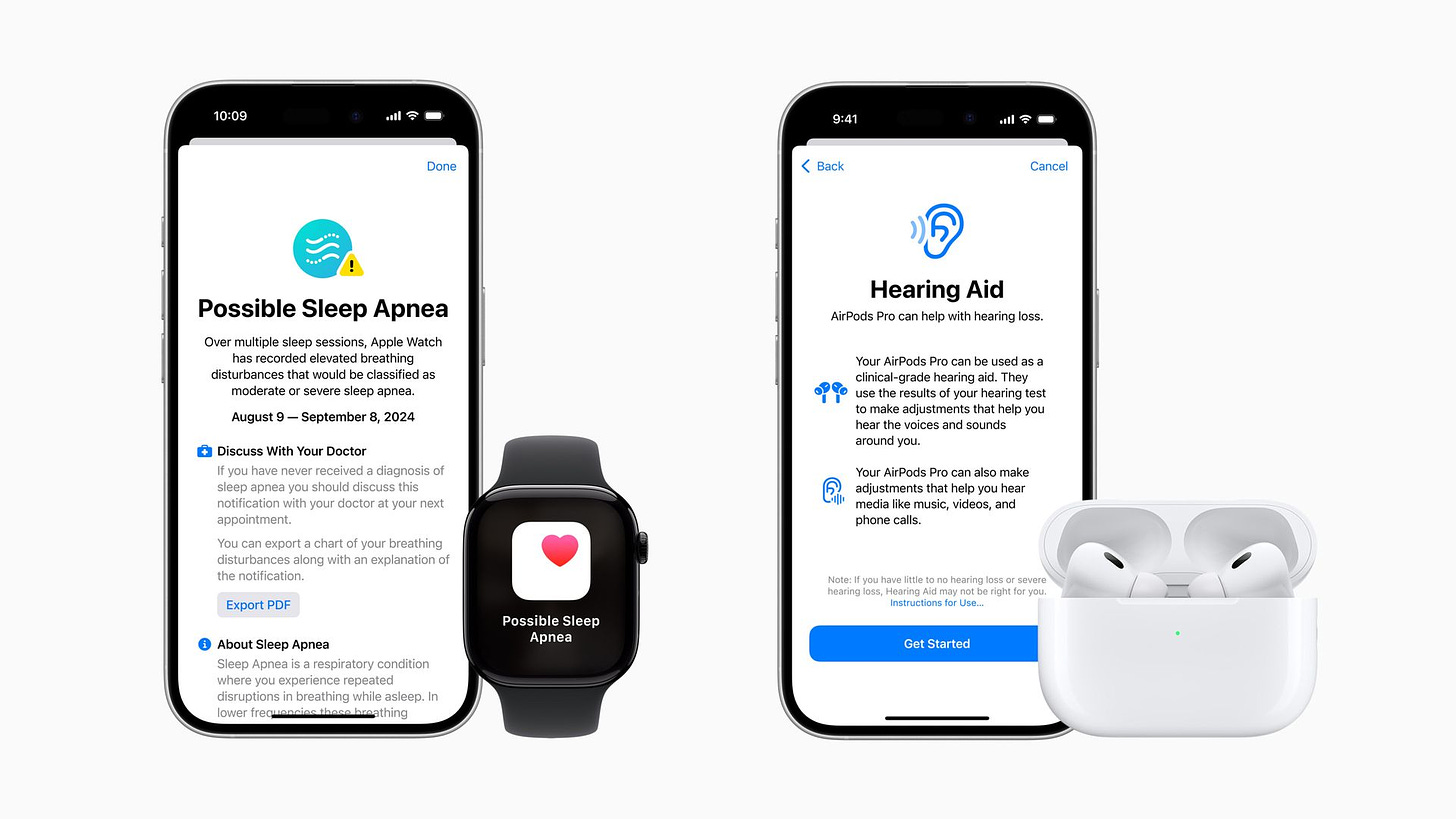

Potentially, that means a plethora of personalized AI services over time, made possible by AI capabilities at the edge. Perhaps starting with Apple’s focus on AI healthcare, delivered in AI-powered devices optimized for high AI personalization, and WITH high levels of AI privacy and trust baked in for each of its billions of users.

It is an aspirational utopia relative to today’s AI chatbot world, but a possibility nevertheless. The industry is trying a wide range of approaches with its grab-bag of AI Science projects and experiments, from AI smart glasses to devices worn up and down our bodies and placed throughout our personal environments.

A new report by Bloomberg outlines a modest step that may signal some progress in that direction.

As Mark Gurman of Bloomberg outlines in “Apple Working on Turning Watches Into AI Devices With Cameras”:

“Apple is exploring the idea of adding cameras and visual intelligence features to its smartwatch, thrusting the company into the AI wearables market. Also: Apple makes major executive changes following its Siri struggles, while the EU is pushing hard to open up iOS.”

“Over the past couple of years, we’ve seen an influx of AI wearables hit the market, ranging from the failed Humane Ai Pin to Meta Platforms Inc.’s popular smart glasses.”

“A central idea behind these devices is using onboard cameras and microphones to support artificial intelligence and provide context on a wearer’s surroundings. With the iPhone 16 launch last year, Apple started to inch into this territory. The new Visual Intelligence feature, which is tied to the Camera Control interface, relies on AI to help users learn more about the world around them.”

Cameras can be a critical AI at Scale input, feeding a fountain of Synthetic Data and Synthetic Content down the road, topics I’ve discussed in recent pieces.

“For those unfamiliar, the feature works like this: If you long-press the camera button, you’re taken to a new interface where you can take a picture of something and have it analyzed by either ChatGPT or Google Search. It can also summarize or translate text and identify certain objects, landmarks or animals.”

“Today, the feature is a bit under-the-radar, partly because it only works with the iPhone 16. It should get a bigger boost with the release of iOS 18.4 next month. That’s when Apple will bring the feature to the iPhone 15 Pro and make it accessible via both the Control Center and Action button for the first time.”

The news here potentially hints at Apple’s broader ambitions in this area:

“Apple’s ultimate plan for Visual Intelligence goes far beyond the iPhone. The company wants to put the feature at the core of future devices, including the camera-equipped AirPods that I’ve been writing about for several months. Along the way, Apple also wants to shift Visual Intelligence toward its own AI models, rather than those from OpenAI and Google.”

“But Apple’s vision for AI wearables goes even further. The company is working on new versions of the Apple Watch that include cameras. As with the future AirPods, this would help the device see the outside world and use AI to deliver relevant information. These models are likely still at least generations away from hitting the market, but they are on the road map.”

“As part of this plan, Apple is considering adding cameras to both its standard Series watches and Ultra models. The current idea is to put the camera inside the display of the Series version, like the front-facing lens on the iPhone. The Ultra will take a different approach, with the camera lens sitting on the side of the watch near the crown and button.”

“Apple is probably considering this approach because the thicker Ultra has more room to work with. It would mean that an Ultra wearer could easily point their wrist at something to scan an object. A Series watch user, meanwhile, would have to flip over their wrist.”

The possibilities will have to be honed and figured out for real-world applications:

“Ideally, I’d love these cameras to also support FaceTime, but that’s probably a far-fetched idea. For one, the screens are too small to provide a good videoconferencing experience, and holding up your wrist for long periods of time can get uncomfortable. The technical challenge of moving the FaceTime app to the watchOS operating system wouldn’t be a major hurdle, though.”

Apple also continues the health-centric focus of its devices, with AI at the fore:

“In other Apple Watch news, the company continues to run into problems while testing its long-planned blood-pressure tracking feature, I’m told. And the redesigned, plastic Apple Watch SE I’ve written about is also in serious jeopardy. The design team doesn’t like the look, and the operations team is finding it difficult to make the casing materially cheaper than the current aluminum chassis.”

And there are more far-out features in the works for its devices, including cameras on its AirPods:

“As for other Apple Watch features in development, I wrote a few months ago that the next Ultra model will get support for satellite texting, as well as the flavor of 5G wireless service known as RedCap. As part of the changes, it’s due to get a new modem chip from MediaTek Inc.”

“More broadly, Apple is following a similar playbook with its next-generation accessories. Rather than invent a new device from scratch (like the doomed Ai Pin), it’s looking to enhance its current lineup. That means sticking with devices that consumers already know and like. And the gradual rollout of features — like Visual Intelligence — will get customers comfortable with the new capabilities. They’ll get used to it on their iPhones and then be excited to see the technology appear in other devices.”

All of this is likely to take time, given Apple’s nascent AI capabilities:

“Of course, Apple will need to seriously upgrade its AI in order to make these new integrations more useful. But that’s something it may be able to pull off by 2027, when both the camera-equipped AirPods and Apple Watches are expected to roll out.”

And the recent Siri developments are still front and center:

“Inside Apple’s big AI, Siri and Vision Pro executive shake-up. The company is making major management changes following months of internal turmoil, delays to both Siri and Apple Intelligence, and a cooler-than-anticipated response to the company’s generative AI work.”

“Here are the highlights:”

- “The Siri organization is being removed from the command of AI head John Giannandrea after Chief Executive Officer Tim Cook lost confidence in his ability to execute on consumer product development.”
- “Mike Rockwell, the creator of the Vision Pro headset and vice president of the Vision Products Group, is taking over as the new head of Siri. He will report to Craig Federighi, who will now have oversight over that team.”
- “Apple is breaking up the Vision Products Group, and Rockwell will no longer oversee hardware engineering for the device. The hardware team will now report to former Rockwell deputy Paul Meade and remain in John Ternus’ group. Rockwell will still lead visionOS and related headset software, which is coming with him to Federighi’s software engineering organization.”
- “Giannandrea isn’t being fired and remains at Apple in his senior vice president role. He’ll still oversee underlying AI technologies, research and operations.”
- “Kim Vorrath, a vice president of program management, is moving from Giannandrea’s group to the software engineering division to work with Rockwell.”

There are high hopes following these organizational changes:

“Internally, there is optimism that Rockwell can finally turn around the long-beleaguered Siri virtual assistant. He has experience shipping major new platforms and is known as an innovator. Having someone of his stature leading Siri is seen as a boon for the company. Still, the bigger picture remains cloudy: The whole of Apple Intelligence needs a lot of work.”

And this, of course, leaves questions about Apple’s recent Vision Pro product launch, a Tim Cook project:

“Another question is where this leaves the Vision Products Group. Employees in that team are worried about potential layoffs and concerned that the company may ultimately give up on the category. Despite the investment of several billion dollars in the Vision Pro, the device has been a commercial flop. Senior executives have indicated that they aren’t going to abandon ship, but the near term remains murky.”

“Plans for next-generation Vision Pro models — including an upgrade of the first version and a cheaper variant — remain in flux. There is work going on, but nothing is full steam ahead right now. On the other hand, the company is committed to eventually bringing true augmented reality glasses to market. It’s going to take a while, but the iPhone maker believes it has the ingredients to be a winner in the category.”

“In the shorter term, Apple is preparing a major visionOS 3.0 upgrade this year. It will follow version 2.4, which adds Apple Intelligence and a new app with additional immersive content.”

There are many more details in the piece on Apple’s plans and progress in these areas, especially in the context of regulatory moves by the EU and others to force more open interaction with third-party developers.

But it’s clear that Apple is executing on its own unique AI roadmap in bringing AI applications and services to its billions of users, even as it readies its next-generation iPhone models.

And that roadmap is very different from those of most of its industry peers. That’s notable at this stage of the AI Tech Wave. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)