AI: Far Smaller AI can go Big too. #536

The Bigger Picture, Sunday, 11/10/24

Much of the focus this year in this AI Tech Wave has been on the trillions in AI Compute (GPUs, data centers, Power, Memory, Networking, Talent) and other inputs needed to keep Scaling AI ever larger, and to do it at multiples of Moore’s Law indefinitely on the way towards AGI. But it’s important not to miss the other side of the spectrum.

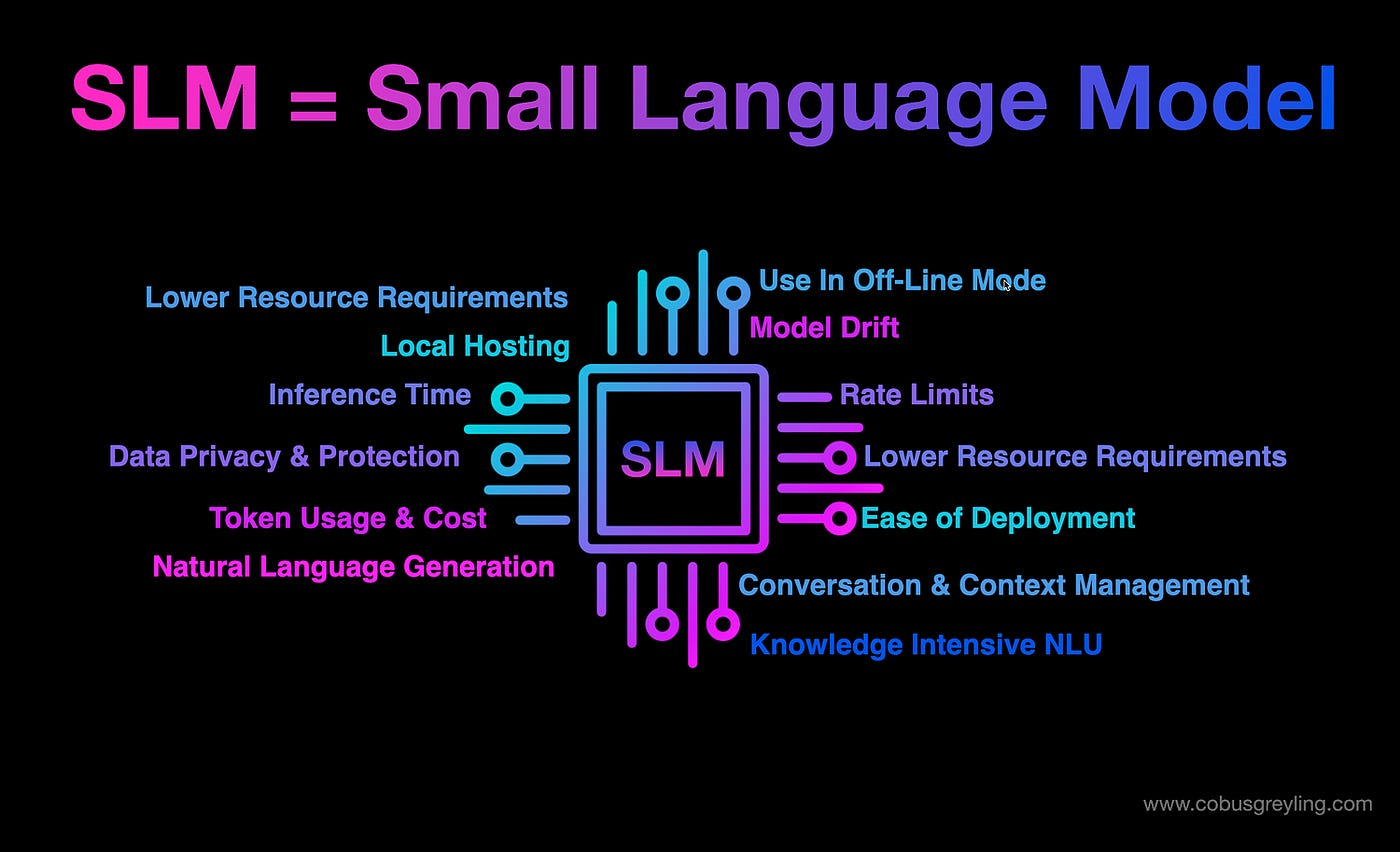

It’s not just Large Language Models (LLMs) driving AI reasoning and Agentic capabilities. As I’ve highlighted before, Small Language Models (SLMs) are a key direction too, and likely even Tiny Language Models (TLMs), also called Micro Language Models (MLMs). Together they will all help to make AI truly Big. That is the Bigger Picture I’d like to cover today.

First, a refresher on SLMs. Here is a good summary in “The Rise of Small Language Models: Efficiency and Customization for AI”:

“Large language models (LLMs) have captured headlines and imaginations with their impressive capabilities in natural language processing. However, their massive size and resource requirements have limited their accessibility and applicability. Enter the small language model (SLM), a compact and efficient alternative poised to democratize AI for diverse needs.”

“SLMs are essentially smaller versions of their LLM counterparts. They have significantly fewer parameters, typically ranging from a few million to a few billion, compared to LLMs with hundreds of billions or even trillions. This difference in size translates to several advantages.”

A BIG one is greater compute and power efficiency, along with being able to run on local devices, not just in big cloud AI data centers:

- “Efficiency: SLMs require less computational power and memory, making them suitable for deployment on smaller devices or even edge computing scenarios. This opens up opportunities for real-world applications like on-device chatbots and personalized mobile assistants.”

- “Accessibility: With lower resource requirements, SLMs are more accessible to a broader range of developers and organizations. This democratizes AI, allowing smaller teams and individual researchers to explore the power of language models without significant infrastructure investments.”

- “Customization: SLMs are easier to fine-tune for specific domains and tasks. This enables the creation of specialized models tailored to niche applications, leading to higher performance and accuracy.”
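To put the efficiency point in rough numbers, here is a minimal back-of-the-envelope sketch in Python. It assumes weight memory is simply parameter count times bytes per parameter, and it ignores activations, caches, and runtime overhead. The model sizes and the helper name `weight_memory_gb` are hypothetical, picked only to span the "few million to hundreds of billions" range quoted above.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# This is an illustrative approximation, not a measurement of any real model.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Approximate gigabytes (10^9 bytes) needed just to hold the weights."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Hypothetical model sizes (assumptions for illustration only).
examples = {
    "tiny (100M params)": 100e6,
    "small (3B params)": 3e9,
    "large (175B params)": 175e9,
}

for name, n in examples.items():
    row = ", ".join(
        f"{dtype}: {weight_memory_gb(n, dtype):.2f} GB" for dtype in BYTES_PER_PARAM
    )
    print(f"{name} -> {row}")
```

Under these assumptions, a few-billion-parameter model at reduced precision lands in the single-digit gigabyte range that phones and laptops can hold, while a model with hundreds of billions of parameters needs hundreds of gigabytes and so stays in the data center.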

These advantages open the door to far more creative AI processes, like using LLMs to train smaller models in volume for specific applications with more contained ‘edge cases’. This means higher reliability and better implicit guardrails on the application set.

Smaller and tinier models can also help with newer uses of LLM AIs I’ve discussed, like creating ‘Synthetic Data’ and ‘Synthetic Content’. Both can help create far more data to then Scale AI even further up the ladder.

Many of these techniques will require using lots of LLMs, SLMs and TLMs together in newer ways that are just now coming out of AI research labs and papers, especially those focused on better ways of creating huge amounts of new AI data and content:

“Like LLMs, SLMs are trained on massive datasets of text and code. However, several techniques are employed to achieve their smaller size and efficiency:”

- “Knowledge Distillation: This involves transferring knowledge from a pre-trained LLM to a smaller model, capturing its core capabilities without the full complexity.”

- “Pruning and Quantization: These techniques remove unnecessary parts of the model and reduce the precision of its weights, respectively, further reducing its size and resource requirements.”

- “Efficient Architectures: Researchers are continually developing novel architectures specifically designed for SLMs, focusing on optimizing both performance and efficiency.”
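For readers who want to see the first two techniques in miniature, here is a hedged toy sketch in plain Python. It is not any lab's actual pipeline. It assumes the commonly used temperature-softened KL divergence as the distillation loss, simple magnitude-based pruning, and symmetric per-tensor int8 quantization. All function names and the example numbers are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on temperature-softened outputs, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


def prune_by_magnitude(weights, fraction):
    """Zero out the `fraction` of weights with the smallest absolute value."""
    k = int(len(weights) * fraction)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned


def quantize_int8(weights):
    """Symmetric int8 quantization: w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map int8 codes back to approximate floating-point weights."""
    return [qi * scale for qi in q]


# Toy values (assumptions for illustration only).
teacher = [2.0, 1.0, 0.0]
student = [0.0, 1.0, 2.0]
weights = [0.5, -0.01, 0.2, -0.9]

print("distillation loss:", distillation_loss(teacher, student))
print("pruned weights:", prune_by_magnitude(weights, 0.5))
codes, scale = quantize_int8(weights)
print("int8 codes:", codes)
print("reconstructed:", dequantize(codes, scale))
```

In a real training loop the distillation loss would be minimized by updating the student's weights, usually alongside a standard loss on labeled data. Pruning and quantization are typically followed by some fine-tuning or calibration to recover accuracy.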

The latest research in LLMs, SLMs, and TLMs is coming out of AI research at places like Google DeepMind, OpenAI, Microsoft, and others.

We may find over the next few years that the road to truly useful AI runs through MANY AI models of ALL SIZES in this AI Tech Wave.

Potentially almost as countless as the transistors in our devices today. Not to mention the countless ‘Teraflops’ and ‘Tokens’ of compute through these models of all sizes. All to answer the biggest and smallest questions by 8 billion plus humans. To get us closer to the ‘smart agents’ and ‘AI Reasoning’ that we’re all craving. Including our robots and cars to come.

It’s important to remember that it’s not just about billions and trillions of dollars for ever-growing AI Compute, but also about billions and trillions of far smaller and tinier AI models on local devices that get us closer to AI applications that truly delight and surprise mainstream users on the upside. That is the Bigger Picture this Sunday. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)