AI: Google TPU sees notable OpenAI win vs Nvidia. RTZ #766

The Bigger Picture, Sunday, June 29, 2025

As the saying goes from Star Wars, there’s a ‘Disturbance in the Force’. Not a great one, but notable all the same.

Google made headway with its Google Cloud TPU* (see below) AI infrastructure by signing OpenAI as a new customer for AI inference compute.

The win builds on Google’s continued AI headway up and down its technology stack, as I’ve highlighted in previous posts.

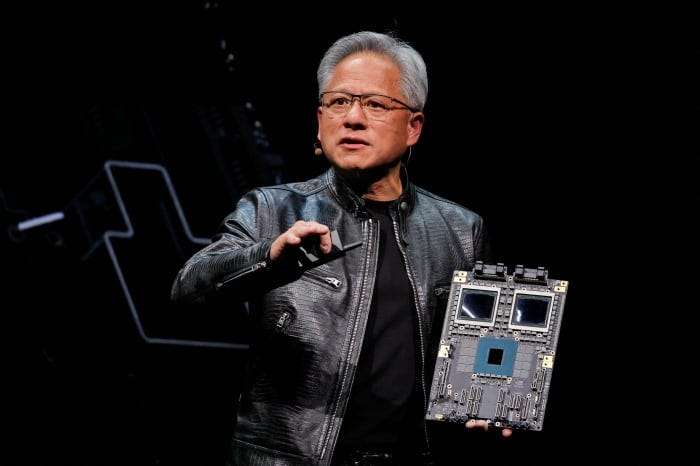

It doesn’t change Nvidia’s dominant global position in AI GPU data center infrastructure, measured in the trillions. But it does establish Google as a solid, if distant, number two among major LLM AI companies using non-Nvidia AI data center infrastructure. It’s an important development in this AI Tech Wave. That is the Bigger Picture I’d like to expand on this Sunday.

The Information lays the groundwork in “Google Convinces OpenAI to Use TPU Chips in Win Against Nvidia”:

“OpenAI, one of the world’s biggest customers of Nvidia artificial intelligence chips, recently began renting Google’s AI chips to power ChatGPT and other products, the first time it has used non-Nvidia chips in a meaningful way, according to a person who is involved in the arrangement.

“The move reflects OpenAI’s broader shift away from relying on Microsoft data centers and could boost Google’s tensor processing units as a cheaper alternative to Nvidia’s graphics processing units, which dominate the AI chip market.”

The move away from Microsoft is a trend I’ve discussed earlier.

“The deal also shows how Google’s longtime strategy of developing technology or businesses in nearly all software and hardware related to AI may be paying off.”

“OpenAI hopes the TPUs, which it rents through Google Cloud, can help lower the cost of inference computing, a term that refers to running AI on servers after the AI has been fully developed, this person said.”

“OpenAI’s computing needs are rising fast: ChatGPT likely has more than 25 million paying subscribers, up from 15 million at the start of the year, and hundreds of millions of people use it for free every week.”

OpenAI for now has been tied to Microsoft Azure data centers and Nvidia chips, even though it recently partnered with Oracle Cloud for its SoftBank-backed Stargate AI infrastructure rollouts.

“OpenAI primarily rents Nvidia server chips through Microsoft and Oracle to develop or train its models and to power ChatGPT. OpenAI spent more than $4 billion to use such servers last year, split nearly evenly between training and inference, and has projected that it will spend nearly $14 billion on AI chip servers in 2025.”

And it’s not clear whether OpenAI wants to use the latest and greatest Google TPU chips for training its AI models, not just for inference.

“Google, which competes fiercely with OpenAI in developing AI models, isn’t renting out its most powerful TPUs to its rival, according to a Google Cloud employee. That suggests that for now, Google is reserving the stronger TPUs primarily for its own AI teams to develop Gemini models. It also isn’t clear whether OpenAI wants to use TPUs for training AI.”

It’s important to note that Apple is another Google Cloud user for AI infrastructure. And Apple of course is also an OpenAI ChatGPT partner.

“Other firms that rent TPUs from Google cloud include Apple, Safe Superintelligence and Cohere, in part because some employees of those firms previously worked at Google and are familiar with how TPUs work.”

Meta is also a possible Google TPU user down the road.

“Meta Platforms, which like OpenAI is one of the world’s biggest customers of AI chips, also recently considered using TPUs, according to a person with direct knowledge of the discussion. Meta says it isn’t using them.”

“Google Cloud also rents out Nvidia-powered servers to its customers, and it still generates significantly more from doing so than from renting out TPUs, as Nvidia chips are an industry standard and developers have more experience using the specialized software that controls those chips. Google previously placed more than $10 billion of orders for Nvidia’s state-of-the-art Blackwell server chips and began making them available to select customers in February.”

Google’s efforts on TPUs predate other AI infrastructure efforts in both hardware and software.

“Google began working on its TPUs around 10 years ago and, starting in 2017, made them available to cloud customers interested in training their own AI models.”

For OpenAI, Google Cloud has gone from a ‘nice to have’ to a ‘must have’ of late for its AI product deployment.

“OpenAI first turned to Google Cloud earlier this year, after its ChatGPT image-generating tool went viral, taxing the inference servers it was using from Microsoft.”

“But the deal is now straining Google Cloud’s own data center capacity.”

“In recent weeks, Google approached other cloud providers, which primarily rent out Nvidia GPUs, to ask whether they would also install TPUs in their data centers for the benefit of an unnamed Google Cloud customer, according to several people with direct knowledge. Google recently held discussions with CoreWeave about leasing space for TPUs in its data centers, for instance.”

It’s important to note that Google TPUs are only deployed on Google Cloud Infrastructure.

“To date, Google has made TPUs available only through its own facilities.”

“Jacinda Mein, a Google Cloud spokesperson, confirmed that Google was ‘looking for space and power’ outside Google data centers ‘to meet the short-term needs of a single Google Cloud customer.’”

“‘These discussions in no way change our strategy,’ she said, referring to its preference to keep TPUs in its own data centers. An OpenAI spokesperson declined to comment.”

And then there’s addressing the elephant in the room.

“Challenging Nvidia”

“While no company can match the performance of Nvidia chips for training AI, a never-ending list of companies have been developing inference chips to lessen their reliance on Nvidia and, hopefully, lower their costs in the long run.”

“Other major cloud providers such as Amazon and Microsoft, as well as big AI developers like OpenAI and Meta, have kicked off efforts to develop their own inference chips, with varying degrees of success.”

Amazon of course has its Trainium AI chips, which have yet to attract big external LLM customers beyond the No. 2 LLM AI company, Anthropic, in which Amazon is a major investor (along with Google).

And options beyond Nvidia are still relatively scarce.

“Cloud providers have struggled to score big customers for their Nvidia-chip alternatives without also offering financial incentives. For instance, Anthropic uses Amazon’s and Google’s AI chips, but it has also received several billions of dollars in funding from each firm. It’s not clear whether Google is providing OpenAI with discounts or credits as an incentive to use TPUs.”

There’s also the issue of Microsoft’s own investments in AI chips.

“OpenAI’s Google chip deal could be a setback for Microsoft, OpenAI’s closest partner and early backer. Microsoft has poured a significant amount of money into developing an AI chip it hoped OpenAI would use. Microsoft has run into problems while developing its AI chip and recently delayed the timeline for releasing the next version, meaning it likely will not be competitive with Nvidia’s chips by the time it is done.”

So overall, the Google win is a dent in the AI chip infrastructure market. But for now only a dent.

Yes, against the Nvidia ‘Force’ and all. And that is the Bigger Picture to keep in mind thus far in this AI Tech Wave. Stay tuned.

*(TPU stands for ‘tensor processing unit’, which is Google’s alternative to the ‘GPUs’ (graphics processing units) made by Nvidia, AMD and others. Both are distinct of course from CPUs (central processing units), which have been the crux of computing for over 70 years. More here on all of these details.)

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here.)