AI: Meta's cool advances across Llama 4. RTZ #682

Meta is making waves on the open source LLM AI front again.

Meta’s founder/CEO Mark Zuckerberg has driven a meaningful upgrade with Llama 4, released in several natively multimodal ‘open weight’ versions, as a meaty response to DeepSeek’s recent open source LLMs and AI Reasoning products. Both companies’ open source initiatives are spurring major players like OpenAI and others to up their ‘open weights’ AI game as well. Let’s unpack Meta’s Llama 4 in all its versions in this AI Tech Wave.

As TechCrunch summarizes in “Meta releases Llama 4, a new crop of flagship AI models”:

“Meta has released a new collection of AI models, Llama 4, in its Llama family — on a Saturday, no less.”

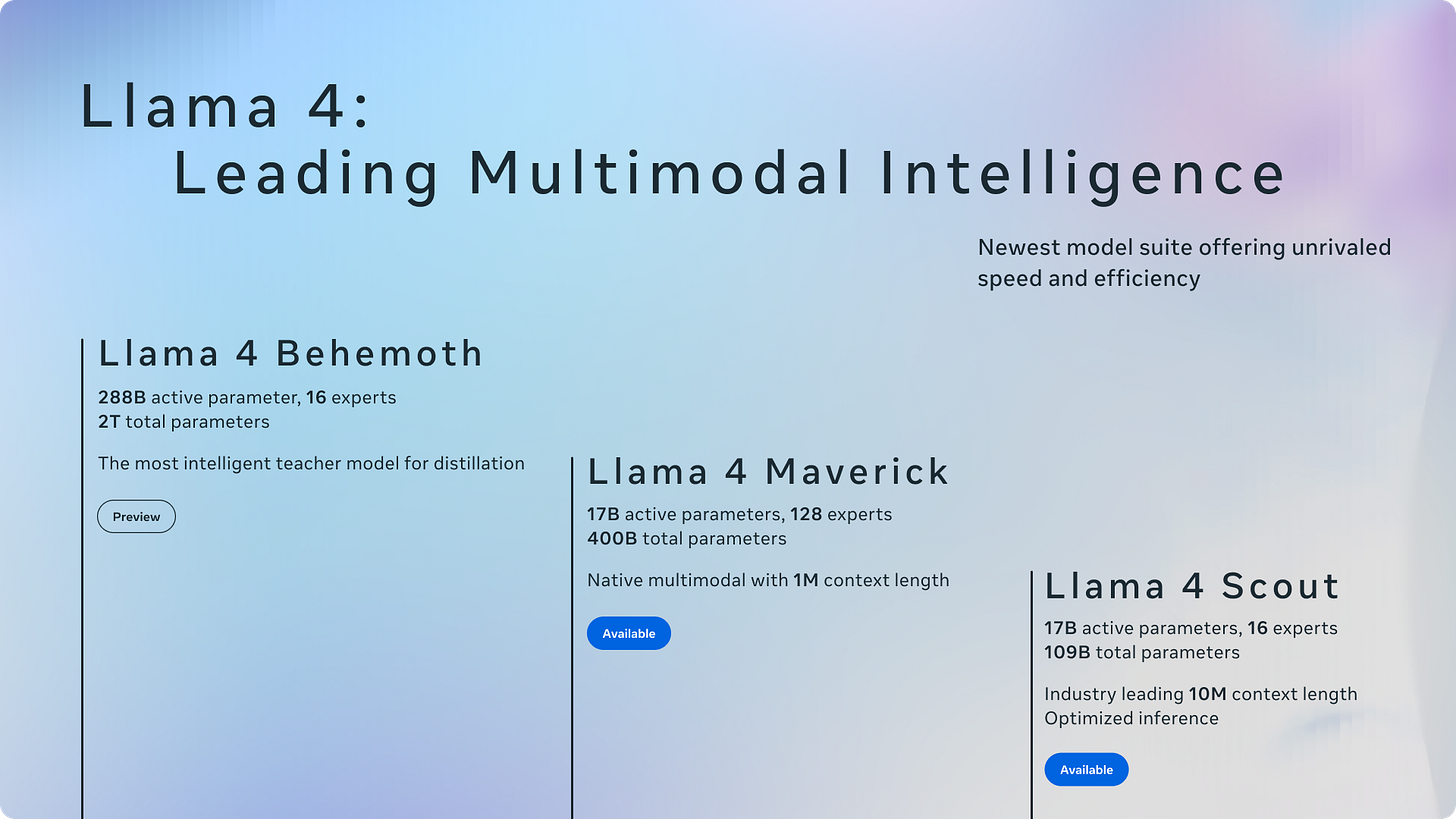

“There are [three] new models in total: Llama 4 Scout, Llama 4 Maverick, and Llama 4 Behemoth. All were trained on “large amounts of unlabeled text, image, and video data” to give them “broad visual understanding,” Meta says.”

All of this requires billions more in Nvidia’s next-gen AI chips and infrastructure:

“The success of open models from Chinese AI lab DeepSeek, which perform on par or better than Meta’s previous flagship Llama models, reportedly kicked Llama development into overdrive. Meta is said to have scrambled war rooms to decipher how DeepSeek lowered the cost of running and deploying models like R1 and V3.”

Another example of how DeepSeek has spurred US LLM AI companies to up their game.

“Scout and Maverick are openly available on Llama.com and from Meta’s partners, including the AI dev platform Hugging Face, while Behemoth is still in training. Meta says that Meta AI, its AI-powered assistant across apps including WhatsApp, Messenger, and Instagram, has been updated to use Llama 4 in 40 countries. Multimodal features are limited to the U.S. in English for now.”

And Meta is likely to leverage these technologies in its AI ad tech efforts as well.

There are relevant nuances in Meta’s open source license:

“Some developers may take issue with the Llama 4 license.”

“Users and companies “domiciled” or with a “principal place of business” in the EU are prohibited from using or distributing the models, likely the result of governance requirements imposed by the region’s AI and data privacy laws. (In the past, Meta has decried these laws as overly burdensome.) In addition, as with previous Llama releases, companies with more than 700 million monthly active users must request a special license from Meta, which Meta can grant or deny at its sole discretion.”

Licensing issues aside, the models are optimized to be more efficient:

“These Llama 4 models mark the beginning of a new era for the Llama ecosystem,” Meta wrote in a blog post. “This is just the beginning for the Llama 4 collection.”

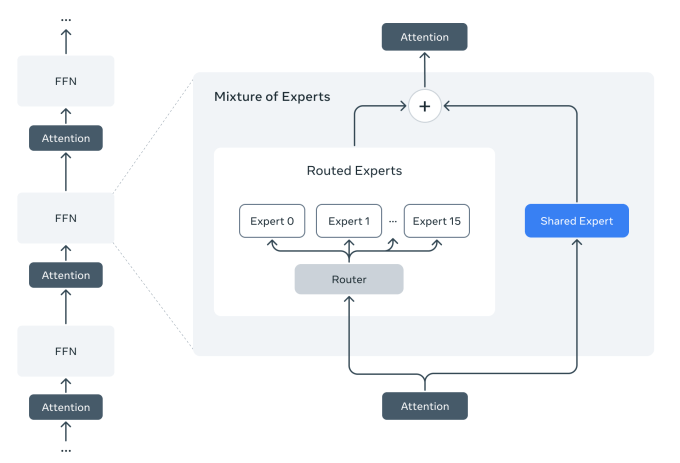

“Meta says that Llama 4 is its first cohort of models to use a mixture of experts (MoE) architecture, which is more computationally efficient for training and answering queries. MoE architectures basically break down data processing tasks into subtasks and then delegate them to smaller, specialized “expert” models.”
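To make the "delegate to experts" idea concrete, here is a toy sketch of top-k routing, a common way MoE layers pick which experts handle an input. This is an illustration only and not Meta's implementation: the quote does not describe Llama 4's router, and the names `route_top_k`, `moe_layer`, and the scaling "experts" are hypothetical.

```python
import math
import random

def softmax(scores):
    """Turn raw router scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(router_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)  # renormalize over the chosen experts
    return [(i, probs[i] / norm) for i in top]

def moe_layer(x, experts, router_scores, k=1):
    """Run only the selected experts and blend their outputs by router weight."""
    chosen = route_top_k(router_scores, k)
    output = sum(weight * experts[i](x) for i, weight in chosen)
    return output, [i for i, _ in chosen]

# Toy "experts": each one just scales its input by a different factor.
NUM_EXPERTS = 16  # hypothetical size for the demo
experts = [lambda x, scale=i + 1: x * scale for i in range(NUM_EXPERTS)]

random.seed(0)
scores = [random.gauss(0, 1) for _ in range(NUM_EXPERTS)]  # stand-in for a learned router
output, used = moe_layer(2.0, experts, scores, k=2)
print(f"experts used: {used} of {NUM_EXPERTS}, output: {output:.3f}")
```

The point of the design is that the other experts are never run for this input, which is where the compute savings come from.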

“Maverick, for example, has 400 billion total parameters, but only 17 billion active parameters across 128 “experts.” (Parameters roughly correspond to a model’s problem-solving skills.) Scout has 17 billion active parameters, 16 experts, and 109 billion total parameters.”
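A quick back-of-the-envelope check shows how small the active slice is. The sketch below only uses the parameter counts quoted above; the helper name `active_share` is hypothetical.

```python
# Parameter counts in billions, taken from the figures quoted above.
MODELS = {
    "Scout": {"active_b": 17, "total_b": 109, "experts": 16},
    "Maverick": {"active_b": 17, "total_b": 400, "experts": 128},
}

def active_share(active_b, total_b):
    """Fraction of the model's parameters that are active at once."""
    return active_b / total_b

for name, m in MODELS.items():
    share = active_share(m["active_b"], m["total_b"])
    print(f"{name}: {m['active_b']}B of {m['total_b']}B parameters active "
          f"({share:.1%}) across {m['experts']} experts")
```

So Maverick touches roughly 4% of its parameters at a time and Scout roughly 16%, even though both have the same 17 billion active parameters.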

And Llama 4 is optimized for both ‘right brain’ and ‘left brain’ AI activities.

“According to Meta’s internal testing, Maverick, which the company says is best for “general assistant and chat” use cases like creative writing, exceeds models such as OpenAI’s GPT-4o and Google’s Gemini 2.0 on certain coding, reasoning, multilingual, long-context, and image benchmarks. However, Maverick doesn’t quite measure up to more capable recent models like Google’s Gemini 2.5 Pro, Anthropic’s Claude 3.7 Sonnet, and OpenAI’s GPT-4.5.”

“Scout’s strengths lie in tasks like document summarization and reasoning over large codebases. Uniquely, it has a very large context window: 10 million tokens. (“Tokens” represent bits of raw text — e.g. the word “fantastic” split into “fan,” “tas” and “tic.”) In plain English, Scout can take in images and up to millions of words, allowing it to process and work with extremely lengthy documents.”

“Scout can run on a single Nvidia H100 GPU, while Maverick requires an Nvidia H100 DGX system or equivalent, according to Meta’s calculations.”
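For a rough sense of why the hardware needs differ, here is a weights-only memory estimate using the total parameter counts quoted earlier (109 billion for Scout, 400 billion for Maverick). The assumptions are mine, not from the quote: an 80 GB H100, eight GPUs in a DGX H100 system, and the listed precisions. It also ignores activations and the memory used for long contexts, so treat it as a sketch and not as Meta's calculation.

```python
# Bytes needed to store one parameter at each precision (assumed options).
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "int4": 0.5}

H100_MEMORY_GB = 80      # assumption: 80 GB H100 variant
DGX_H100_GPU_COUNT = 8   # assumption: eight H100s per DGX system

def weight_footprint_gb(total_params_billions, precision):
    """Approximate size of the weights alone, in GB (1 GB = 10^9 bytes)."""
    return total_params_billions * BYTES_PER_PARAM[precision]

def fits(total_params_billions, precision, gpu_count):
    """True if the weights alone fit in the combined GPU memory."""
    budget_gb = gpu_count * H100_MEMORY_GB
    return weight_footprint_gb(total_params_billions, precision) <= budget_gb

for name, params_b, gpus in [("Scout", 109, 1), ("Maverick", 400, DGX_H100_GPU_COUNT)]:
    for precision in BYTES_PER_PARAM:
        size = weight_footprint_gb(params_b, precision)
        verdict = "fits" if fits(params_b, precision, gpus) else "does not fit"
        print(f"{name} @ {precision}: ~{size:.0f} GB of weights, {verdict} in {gpus} x H100")
```

Under these assumptions, Scout's weights fit on one H100 only at 4-bit precision, and Maverick's fit in an eight-GPU system at 8-bit or lower but not at 16-bit.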

“Meta’s unreleased Behemoth will need even beefier hardware. According to the company, Behemoth has 288 billion active parameters, 16 experts, and nearly two trillion total parameters. Meta’s internal benchmarking has Behemoth outperforming GPT-4.5, Claude 3.7 Sonnet, and Gemini 2.0 Pro (but not 2.5 Pro) on several evaluations measuring STEM skills like math problem solving.”

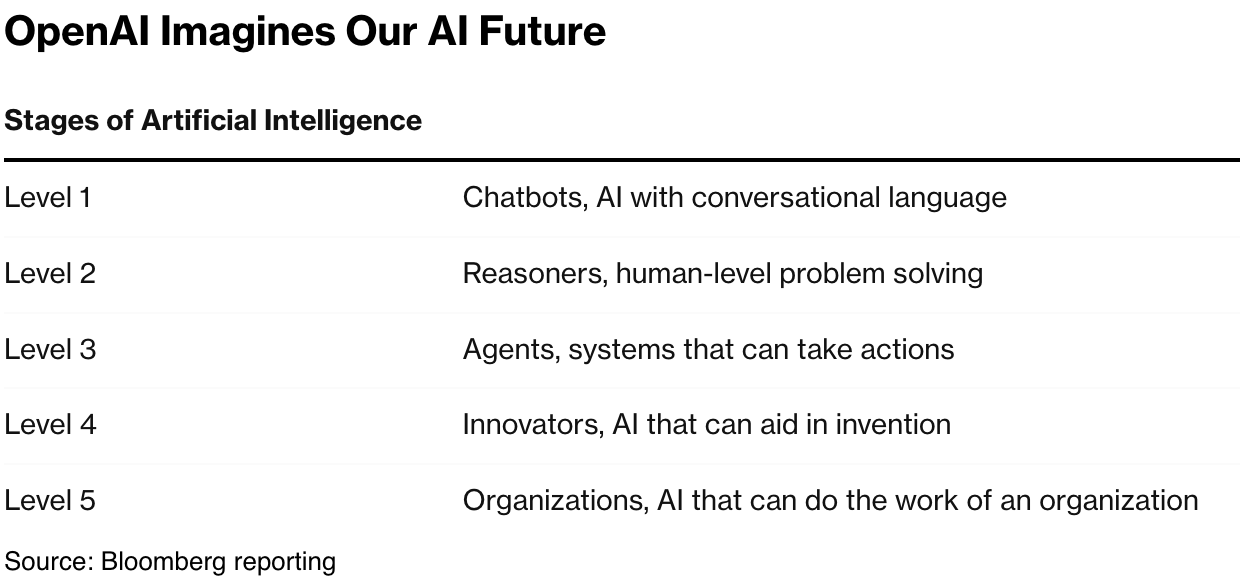

“Of note, none of the Llama 4 models is a proper “reasoning” model along the lines of OpenAI’s o1 and o3-mini. Reasoning models fact-check their answers and generally respond to questions more reliably, but as a consequence take longer than traditional, “non-reasoning” models to deliver answers.”

Meta has also adjusted Llama’s guardrails on the types of queries it will handle:

“Interestingly, Meta says that it tuned all of its Llama 4 models to refuse to answer “contentious” questions less often. According to the company, Llama 4 responds to “debated” political and social topics that the previous crop of Llama models wouldn’t. In addition, the company says, Llama 4 is “dramatically more balanced” with which prompts it flat-out won’t entertain.”

“[Y]ou can count on [Llama 4] to provide helpful, factual responses without judgment,” a Meta spokesperson told TechCrunch. “[W]e’re continuing to make Llama more responsive so that it answers more questions, can respond to a variety of different viewpoints […] and doesn’t favor some views over others.”

This is an issue of focus for other LLM AI companies as well:

“In actuality, bias in AI is an intractable technical problem. [Elon] Musk’s own AI company, xAI, has struggled to create a chatbot that doesn’t endorse some political views over others.”

“That hasn’t stopped companies including OpenAI from adjusting their AI models to answer more questions than they would have previously, in particular questions relating to controversial subjects.”

Overall, this weekend release of Llama 4 is a good sign that Meta remains on track to do its bit on the road to AGI and to improve its open weight LLMs against DeepSeek and others. That is a good thing for the industry.

This AI Tech Wave has a lot to work with going into the second quarter and beyond. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)