AI: Microsoft, a Case Study of an Nvidia 'Frenemy'. RTZ #771

A key reality for Nvidia, the market-leading provider of AI GPU infrastructure in this AI Tech Wave, has been its ‘Frenemies’ position with its top customers. The four largest AI Cloud data center providers, including the emerging ‘NeoClouds’ like CoreWeave, make up over half of Nvidia’s business worldwide. That holds despite the growth of other markets like enterprises and, of course, Sovereign AI customers globally. Then there is OpenAI, Microsoft’s core Cloud partner and itself an Nvidia customer, both through Microsoft and on its own.

The core cloud companies, ordered by size from Amazon AWS to Microsoft Azure to Google Cloud and Oracle Cloud, as well as Meta, which is not a cloud provider, all have ongoing efforts to develop AI chips as alternatives to Nvidia’s core offerings along its roadmap.

This remains a key issue for Wall Street, even as Nvidia now ranks among the top three companies by market capitalization, each worth over $3 trillion, leading the US and global stock markets. On any given day, the top spot rotates among Nvidia, Apple and Microsoft, depending on market volatility.

I continue to maintain that Nvidia’s moat in terms of its hardware and open source software leadership, led by its CUDA library frameworks across every imaginable industry and market segment, provides Nvidia market advantages that are relatively unassailable for the next few years.

One reason is that developing AI chips at scale is hard. The investments run into the billions of dollars, and the timelines span years.

That point was driven home by the latest news on Microsoft’s AI chip efforts.

As The Information lays it out in “Microsoft Scales Back Ambitions for AI Chips to Overcome Delays”:

“Microsoft is revising its roadmap for its internally developed artificial intelligence server chips and will focus on releasing less ambitious designs through 2028, hoping to overcome problems that have caused delays in development, according to two people with direct knowledge of the situation.”

“Microsoft hopes that by scaling back some of the designs and pushing out the schedule for other AI chips it is already working on, it can develop them more easily. The hope is that when these chips are released over the next three years, they will still remain competitive with Nvidia’s AI chips.”

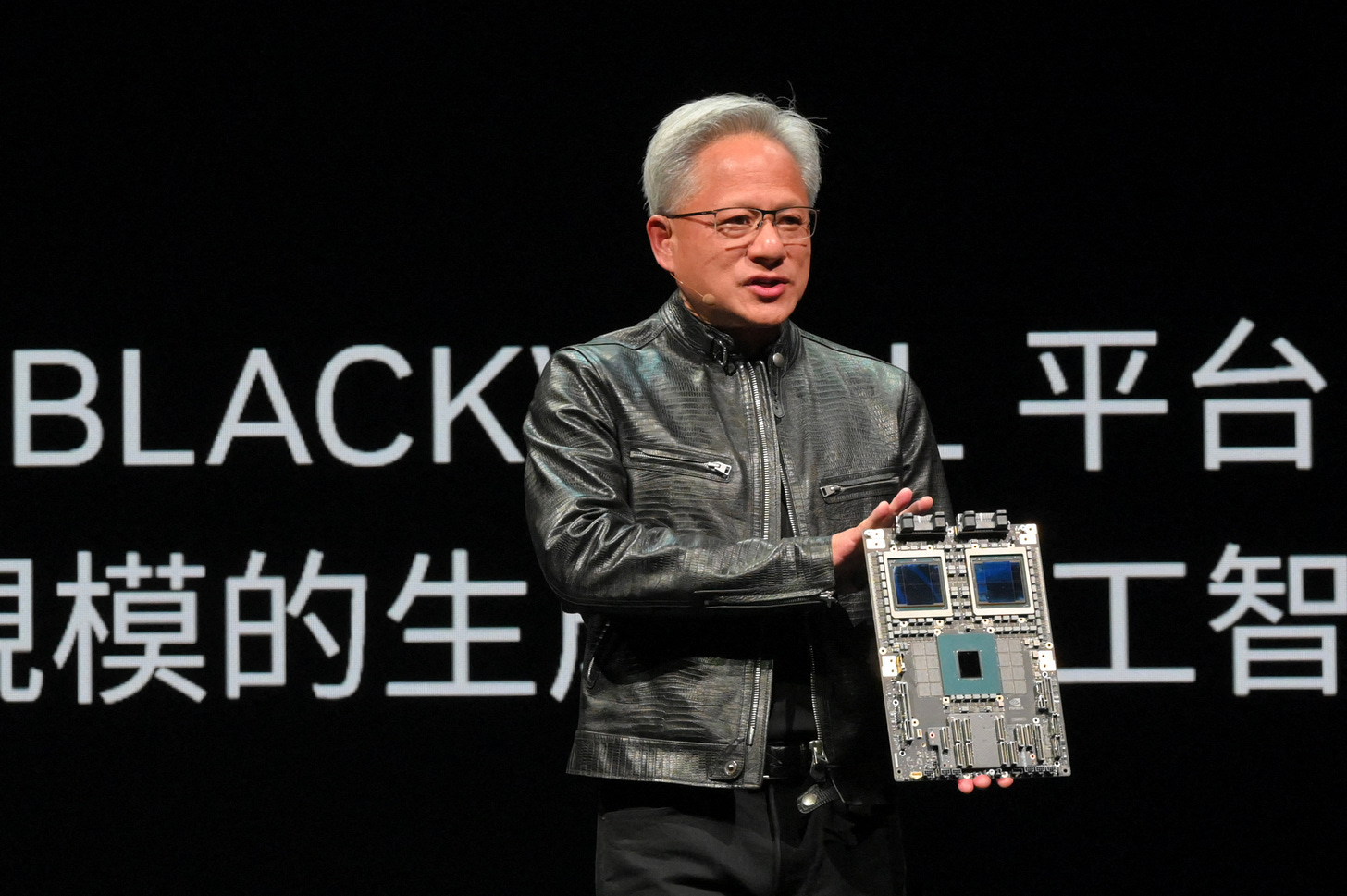

Remember, Nvidia has a roadmap for its AI GPUs for training and inference stretching over the next few years. It runs from the earlier Hopper generation to today’s Blackwell, followed by Rubin and then Feynman. Each generation will likely see upgrades over a roughly two-year span. That is quite a roadmap, years and billions of dollars in the making.

Microsoft and others have to make their chip plans accordingly.

“Microsoft executives told engineers in its silicon team about the new plans in a meeting last week. The decision comes after Microsoft had to push back the release of its latest-generation AI chip, Maia 200, to 2026 from 2025, as The Information previously reported.”

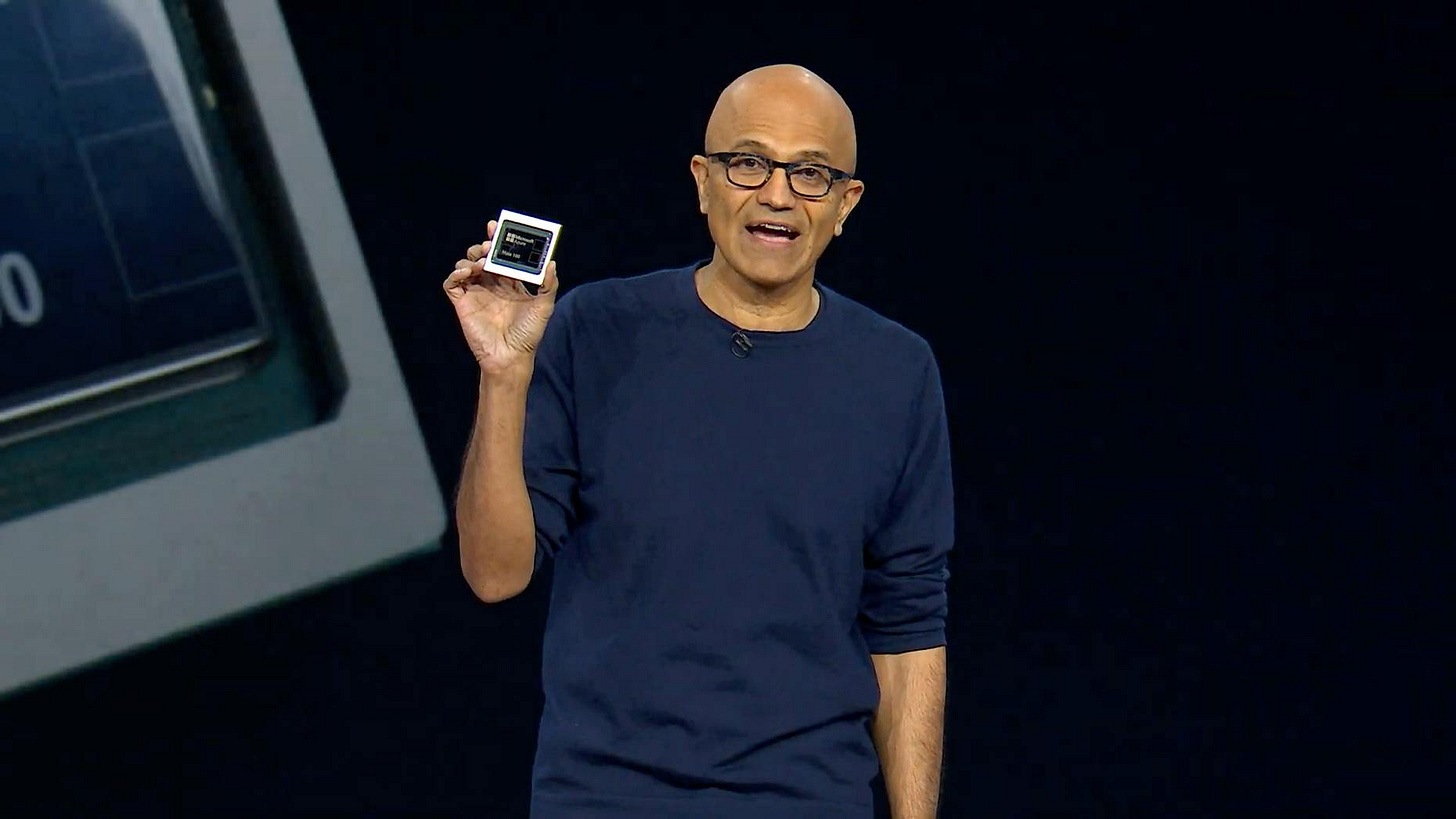

“Like both Google and Amazon, Microsoft designs its own chips meant to power AI services such as OpenAI’s ChatGPT in hopes of creating an alternative to Nvidia’s chips, which dominate the market. Nvidia tightly controls the prices and supply of its own chips.”

None of this changes Microsoft’s close customer relationship with Nvidia:

“Microsoft last year was Nvidia’s largest customer by revenue, according to multiple Nvidia employees, and spends billions of dollars a year buying Nvidia AI chips for its Azure cloud service.”

“Microsoft launched its first AI chip, dubbed Maia 100, in 2024 and immediately began working on three successors—codenamed Braga, Braga-R and Clea—due for release in 2025, 2026 and 2027, respectively. However, both Braga and Clea were based on entirely new designs, making their development especially challenging.”

But chip plans take time, and delays happen. Hence the need to stay close to Nvidia while working on alternative chips:

“The Braga chip’s design was only completed in June, missing a year-end deadline by around six months. Braga’s delay raised concerns internally within Microsoft that the chips due in 2026 and 2027 also could be delayed, making them even less competitive with Nvidia’s chips by the time they were released, the people said.”

“As a result, Microsoft executives told engineers last week that the company is considering developing an intermediary chip for release in 2027 that will sit between Braga and Braga-R in terms of performance. This chip will likely be known as Maia 280 and is still largely based on Braga’s design. However, the Maia 280 consists of at least two Braga chips linked together so that they can work together as a single, more powerful chip.”

None of this changes Microsoft’s chip plans in the longer term:

“A Microsoft spokesperson didn’t comment directly on the development of its Maia chips but said the company ‘remains committed’ to developing in-house hardware based on its customers’ and its own computing needs while continuing to work with ‘close silicon partners.’”

And these things are hard to do, as the contrast with Nvidia shows:

“That’s a different approach than is used by chip makers like Nvidia, which link two finished chips to increase performance. The new approach to improve on Braga’s performance reflects an acknowledgment by Microsoft’s leaders that designing a new chip from scratch each year wasn’t feasible.”

Other Microsoft chips are affected too:

“The release of Microsoft’s third-generation AI chip, Clea, has been pushed beyond 2028, and its future remains unclear. Clea was intended to be the first AI chip that would be competitive with Nvidia’s chips in terms of performance per watt.”

There are also implications for other chip vendors, in this instance Marvell. Broadcom is another company involved in second sourcing for the cloud companies, as I’ve discussed earlier.

“Microsoft’s revised roadmap has negative implications for Marvell, a specialized chip designer that Microsoft hired to work on some of the chiplets in Braga-R, according to two people with direct knowledge of the matter. Marvell’s shares surged last year due to partnerships with major tech firms including Amazon, which relies on Marvell to help build their AI chips, and Marvell had anticipated revenue from Microsoft much sooner, the people said. However, its stock price has fallen this year amid delays in chip projects from its customers, a slowing global economy and trade tensions between the U.S. and other countries.”

All this shows the challenge that even Nvidia’s largest and most committed customers face in trying to ‘thread the needle’ between short-term dependence on Nvidia and the promise of long-term independence in AI chip supply.

And that isn’t likely to change much in this AI Tech Wave in the near term. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)