AI: Nvidia and Chips still the crux of things in 2026. RTZ #953

We’re ending 2025 with the most extraordinary buildup of semiconductor-chip-powered AI Data Centers, with unprecedented amounts of Power to rev them up. So much of this year has been dominated by that AI buildup, with the big US tech companies spending in the $400 billion-plus range.

It puts this AI Tech Wave in a different league, even at this early stage, vs. all prior tech waves. That includes the Internet wave, with its trillion-dollar fiber backbone and bandwidth buildout by the telecoms over a decade.

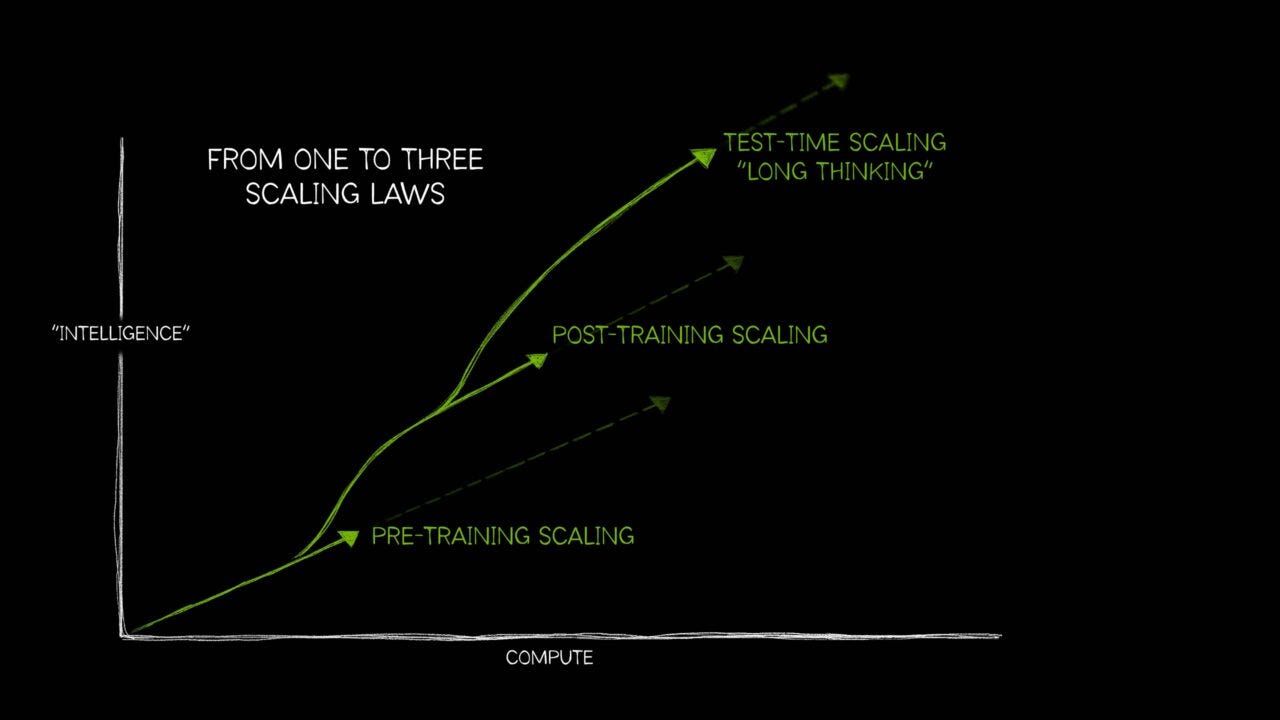

That buildup runs up and down the tech stack, with exponential complexity in Scaling the training and inference in the ‘reinforcement loops’ depicted in the chart.

This AI wave is a far bigger one, as I’ve discussed often in these pages. And 2026 is shaping up to be an even bigger run. That point needs to sink in as we say goodbye to 2025 and usher in 2026.

Fittingly, the WSJ underlines this well in “After a Year of Blistering Growth, AI Chip Makers Get Ready for Bigger 2026”:

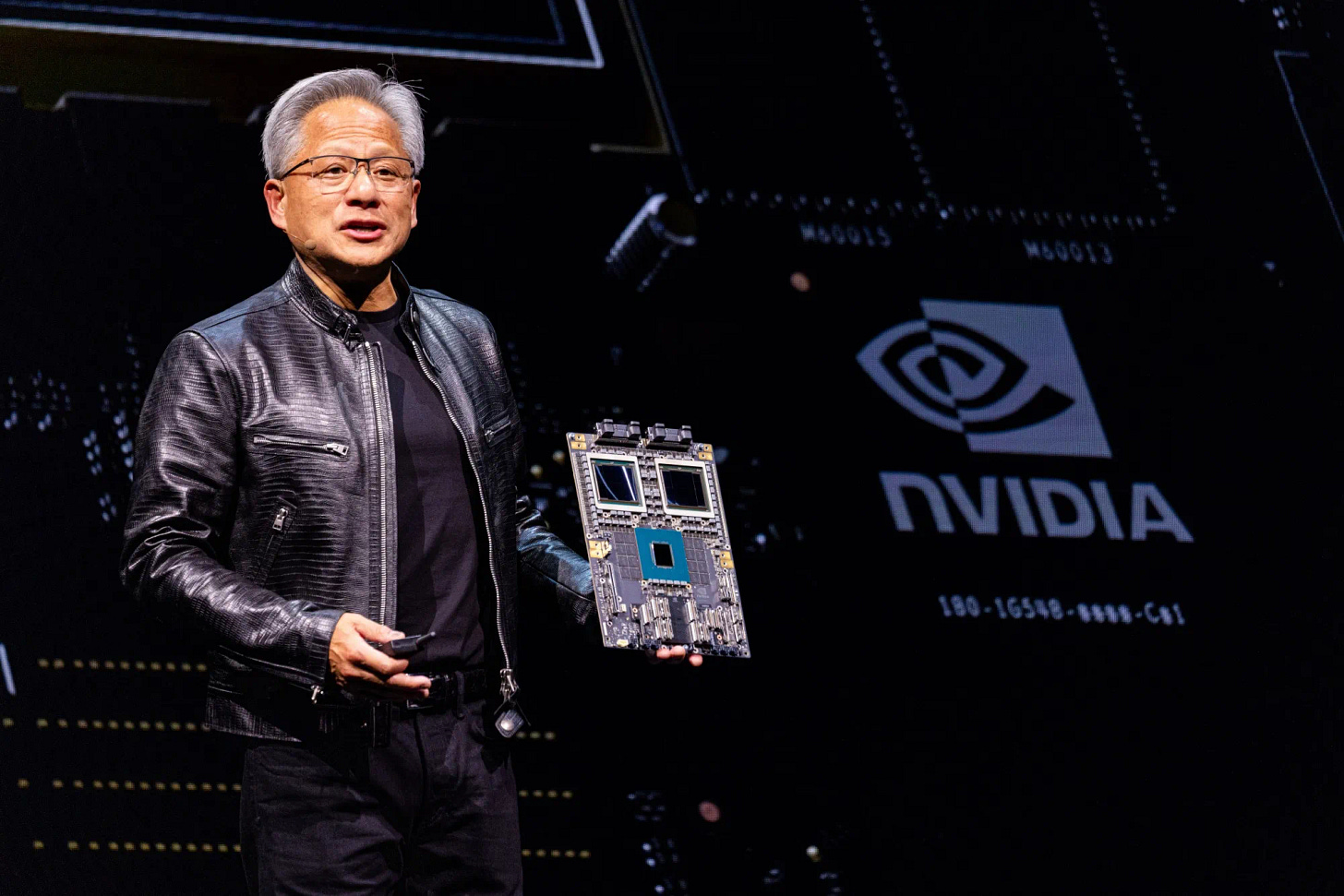

“Nvidia leads the pack, but faces increasing competition and supply-chain challenges.”

“Semiconductor companies achieved over $400 billion in combined sales in 2025, driven by AI growth, with 2026 projected to be even larger.”

“Nvidia faces increasing competition from Google and Amazon, and signed a $20 billion licensing deal with Groq for AI inference acceleration.”

“Challenges include component shortages for data centers, securing electrical power, and questions about the sustainability of AI company financing.”

I discussed the importance of the $20 billion Groq deal just a few days ago, as well as the ‘balancing act’ Nvidia founder/CEO Jensen Huang has to perform between the US and China in their geopolitical, trade- and tariff-driven Kabuki set piece through 2026.

Yet Nvidia remains central to all things AI in 2026 as the WSJ explains:

“Driven by the explosive growth of artificial intelligence, the largest semiconductor companies in the world recorded more than $400 billion in combined sales in 2025, by far the biggest year for chips on record. Next year promises to be even bigger.”

“Yet the blistering pace of growth, fed by what CEOs and analysts describe as ‘insatiable demand’ for computing power, has created a host of challenges, from shortages of vital components to questions about how and when AI companies will be able to generate reliable enough profits to keep buying chips.”

“Hardware designers such as Nvidia, which more than doubled its revenue year-over-year, are the main suppliers of the picks and shovels behind this new digital gold rush. But Nvidia faces growing competition from the likes of Alphabet’s Google and Amazon.com, while the battleground shifts under its feet.”

“Last week, Nvidia signed a $20 billion licensing deal with the chip startup Groq, which designs chips and software that help accelerate AI inference, the process whereby trained AI models serve up answers to prompts. Where the last leg of the AI race was defined by training, tech giants are now competing to deliver the fastest and most cost-efficient inference.”

“‘Inference workloads are more diversified and may open up new areas for competition,’ analysts at Bernstein wrote after Nvidia’s recent deal was announced.”

Here is that competition, in spades:

“Data-center operators, AI labs and business customers have clamored for Nvidia’s advanced H200 and B200 graphics processing units. Google’s increasingly sophisticated custom chips, known as TPUs, and Amazon’s Trainium and Inferentia chips, both of which compete with Nvidia’s GPUs, are also scooping up customers, while software developers such as OpenAI are joining with custom designers such as Broadcom to design their own chips.”

The punchline being:

“All of it points to another record year coming for chips. Goldman Sachs estimates that Nvidia alone will sell $383 billion in GPUs and other hardware in the 2026 calendar year, an increase of 78% over the prior year. Analysts polled by FactSet estimate that the combined sales from Nvidia, Intel, Broadcom, AMD and Qualcomm will top $538 billion. That doesn’t include revenues from Google’s TPU business or Amazon’s custom chips sales, neither of which are broken out by their parent companies.”
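A quick back-of-envelope check on the Goldman figure: assuming the 78% increase is measured against Nvidia’s comparable 2025 calendar-year sales of GPUs and other hardware (the quote doesn’t spell out the base), the implied 2025 figure works out to roughly $215 billion:

$$
\text{Implied 2025 base} \approx \frac{\$383\ \text{billion}}{1 + 0.78} = \frac{\$383\ \text{billion}}{1.78} \approx \$215\ \text{billion}
$$

Put differently, under that assumption Goldman expects Nvidia to add roughly $168 billion in hardware sales in a single year.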

All of this despite the politics, power, and memory chip challenges ahead:

“Yet 2026 could also bring unprecedented challenges. A shortage of components such as electrical transformers and gas turbines hampers data-center construction, and operators struggle to secure the immense amounts of electrical power required to run computing clusters.”

“Another major challenge: a global shortage of components that go into AI data-center servers. Items in short supply include the ultrathin layers of silicon substrate some chips require and memory chips, the semiconductors that feed data to AI processors and help store the results of computations. As data-center construction has ramped up and demand has risen for inference, the need for more high-bandwidth memory chips has surged.”

I point this all out on the last day of 2025 to emphasize that we’re only at the beginning of a long ultra-marathon in this AI Tech Wave. The New Year of 2026 is just the next steep, early mile.

Enjoy all the ‘HAPPY NEW YEAR’ toasts and celebrations tonight. On Thursday, January 1, 2026, the clock starts all over again for AI Acceleration.

The hard stuff has barely begun for the participants: Nvidia, its core global customers, and beyond. Stay tuned.

And of course, a HAPPY NEW YEAR!!

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)