AI: Nvidia moves into AI Cloud Services market. RTZ #672

I’ve discussed before how Nvidia is increasingly focused on the AI Data Center opportunity with DGX and other AI data center cloud services. It’s a direction far beyond AI GPU chips, which is what most folks have viewed as Nvidia’s core business for over three decades.

All as the world rushes to invest hundreds of billions a year for the next few years in AI Infrastructure. Aka ‘AI factories’ for ‘accelerated computing’, as Nvidia founder/CEO Jensen Huang puts it in public presentations.

He laid out a technically impressive multi-year roadmap for Nvidia at their GTC conference a few days ago, calling the shots as it were for the next few years, Michael Jordan style, buttressing their formidable moat.

And as I’ve discussed in the context of the global AI capex boom, Nvidia is also building up its product/services roadmap with well-chosen acquisitions. This week saw their acquisition in the ‘Synthetic Data’ space.

Recent reports indicate a possible acquisition in the fast-growing ‘Neocloud’ space, which supplements the AI data centers being built by the four largest ‘CSPs’ (aka cloud services providers), led by Amazon AWS, Microsoft Azure, Google Cloud and Oracle Cloud. And as described earlier, Nvidia is an early investor in Neocloud leader CoreWeave, which is executing its IPO.

The Information reports “Nvidia Nears Deal to Buy GPU Reseller for Several Hundred Million Dollars”:

“Nvidia is in advanced talks to buy Lepton AI, a two-year-old startup that rents out servers powered by Nvidia’s artificial intelligence chips, in a deal worth several hundred million dollars, according to a person close to the company.”

“The move is part of Nvidia’s push into the cloud and enterprise software market, in competition with major cloud providers like Amazon and Google. Nvidia has felt pressure to diversify from hardware because the cloud providers, which are its biggest customers, are trying to undercut it by developing and renting out alternative chips for a low price.”

This is the ‘Frenemies’ coopetition environment I’ve discussed at length. Nvidia is building up its AI data center capabilities, much as its top customers are building up their AI GPU chip capabilities.

“Lepton, based in Cupertino, Calif., competes with startups like Together AI, in renting out Nvidia GPU servers. Together and Lepton don’t manage the data centers or servers themselves: After renting them from cloud providers, they turn around and rent them out to their own customers. Together generates more than $150 million in annualized revenue—or $12.5 million per month, according to an investor in the company.”

“Such firms are known as inference providers or GPU resellers. They also develop their own software to help customers—typically other startups or software firms—build and manage their AI apps in the cloud.”

Lepton itself is an early-stage startup, with founders who have meaningful chops in AI infrastructure software and hardware.

“Lepton, which has around 20 employees, likely isn’t generating much revenue compared to Together. Lepton says customers of its “AI cloud” include gaming startup Latitude.io and SciSpace, a scientific research startup. Latitude says it uses Lepton to run AI models for a service used by hundreds of thousands of monthly active users. SciSpace says it uses the service to power a search engine for academic papers.”

“Lepton, a term referring to an elementary particle of matter, has kept a much lower profile than Together and another rival, Fireworks. Lepton raised $11 million in 2023 from investors CRV and Fusion Fund.”

The founders’ Meta experience is relevant, given Meta’s leadership role in open source Llama LLM AIs, and software infrastructure like PyTorch, which is popular amongst AI developers worldwide. PyTorch works well with Nvidia’s CUDA software libraries, which are also widely used by millions of developers worldwide.

“Lepton’s co-founders, Yangqing Jia and Junjie Bai, previously worked together as AI researchers at Meta Platforms, where they helped develop PyTorch, a popular set of tools for developing AI models.”

“Jia started his career as a research scientist at Google Brain and helped build part of the software that powers Kubernetes, a tool for managing large-scale cloud applications that originated at Google.”

“Nvidia’s cloud and software business is nascent, but it rents out servers powered by its chips to businesses directly, and it also provides software to help companies develop AI models and applications, as well as manage clusters of graphics processing units that train the AI. Nvidia says this business could generate $150 billion in revenue someday.”

“The company has periodically raised expectations about generating revenue from that business, but it avoided the topic entirely during its last quarterly earnings call this month.”

“Three months earlier, Nvidia said its software, service and support revenue was generating $1.5 billion in annualized revenue—implying $125 million of revenue per month—and the company expected that figure to climb to $2 billion by the end of 2024. (For comparison, Nvidia’s AI chip business generated $35.6 billion in the fiscal quarter that ended Jan. 26.)”
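
For readers who want to check the run-rate math in these quotes, here is a minimal Python sketch. The function names are my own and purely illustrative, and annualizing the chip business by multiplying one quarter by four is a simplifying assumption on my part, not a figure from Nvidia or The Information.

```python
# Back-of-the-envelope run-rate arithmetic for the figures quoted in this post.
# Function names are illustrative; multiplying one quarter by 4 is an assumption.

def monthly_from_annualized(annualized_usd: float) -> float:
    """Convert an annualized revenue run rate into an implied monthly figure."""
    return annualized_usd / 12


def annualized_from_quarter(quarterly_usd: float) -> float:
    """Naively annualize a single quarter (assumes a flat run rate)."""
    return quarterly_usd * 4


# Figures as quoted: Together AI at $150M annualized, Nvidia software/services
# at $1.5B annualized, Nvidia AI chips at $35.6B for the quarter ended Jan. 26.
together_monthly = monthly_from_annualized(150e6)
nvidia_software_monthly = monthly_from_annualized(1.5e9)
chip_annualized = annualized_from_quarter(35.6e9)
software_share = 1.5e9 / chip_annualized

print(f"Together AI implied monthly revenue: ${together_monthly / 1e6:.1f}M")
print(f"Nvidia software implied monthly revenue: ${nvidia_software_monthly / 1e6:.1f}M")
print(f"Software run rate vs. annualized chip revenue: {software_share:.1%}")
```

Under those assumptions, the software and services run rate works out to roughly 1% of the chip business, which is a useful reminder of just how nascent this side of Nvidia still is.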

“In recent months, Nvidia has advertised the enterprise software product on NPR and elsewhere.”

“Cloud providers have purchased about half of Nvidia’s AI server chips in the last couple of years, the company has said. But CEO Jensen Huang said over time, he expects Nvidia’s sales to other types of businesses will be “far larger” as a percentage of sales than its sales to cloud providers.”

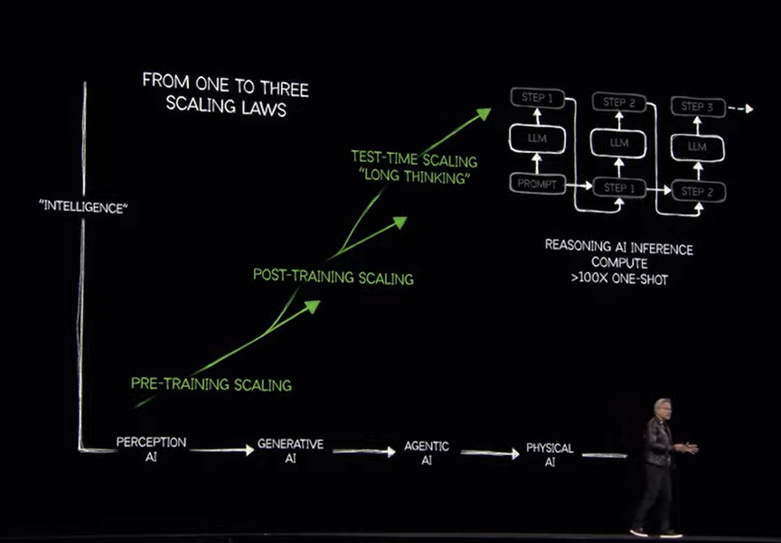

It’s all about building the Nvidia roadmap in the context of the ‘trifurcating’ AI Scaling Laws I’ve discussed.

“He has suggested that industrial firms including automakers would purchase AI chips directly someday rather than renting them from cloud providers.”

“Nvidia has been buying small AI and cloud startups to make it less expensive and easier for developers to run AI models using its chips. One criticism of the chips is that because they are expensive and have been in high demand, it has been difficult for companies to scale AI apps at a reasonable price. Recently, though, AI models have gotten more efficient, as evidenced by the rise of DeepSeek and other cheap yet powerful models.”

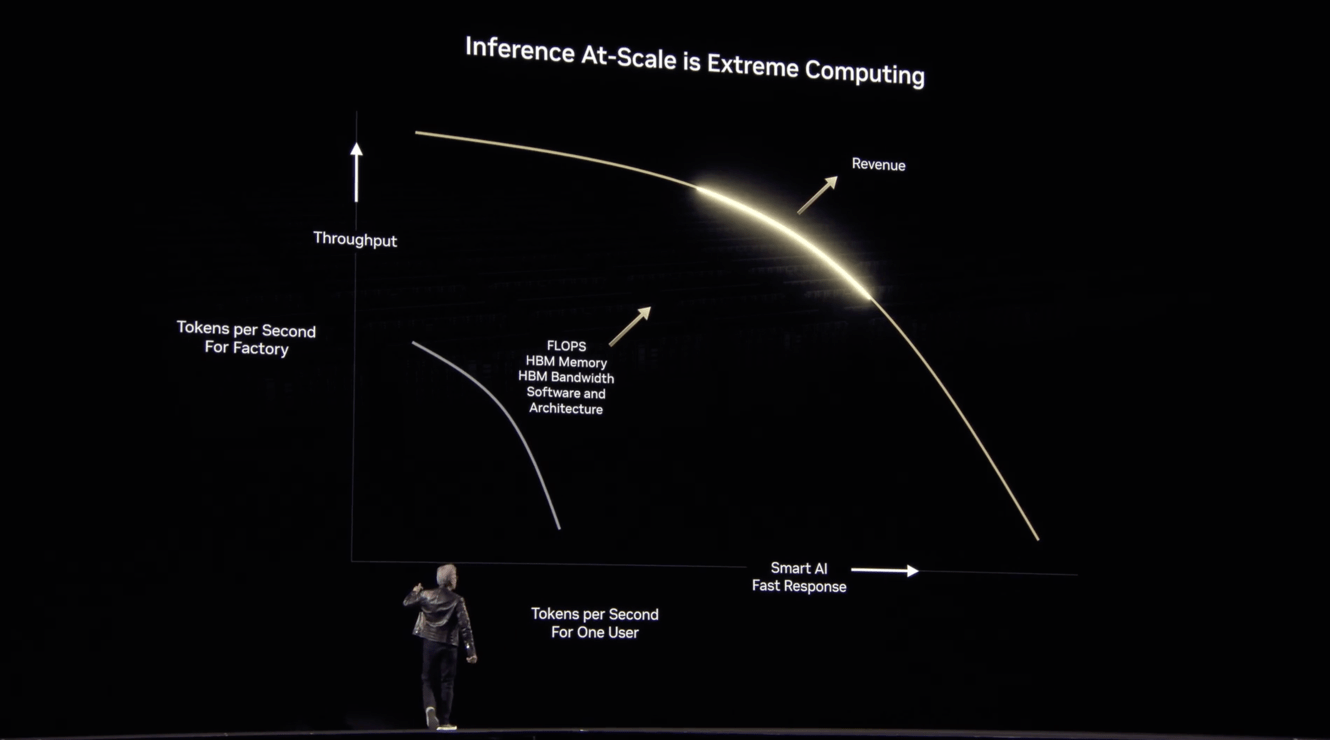

All these moves serve the broader picture of building Nvidia’s capabilities to serve AI Inference at scale in what they call ‘Extreme Computing’.

“A year ago, Nvidia likely paid upward of $1 billion for two Israeli AI startups, Run.ai and Deci, whose software aimed to lower the cost of developing or running AI models powered by Nvidia server chips. And last fall it bought Seattle-based OctoAI, another firm that developed such software.”

“More recently, Nvidia bought a startup, Gretel, that helps customers create and use AI-generated data to develop and test AI applications.”

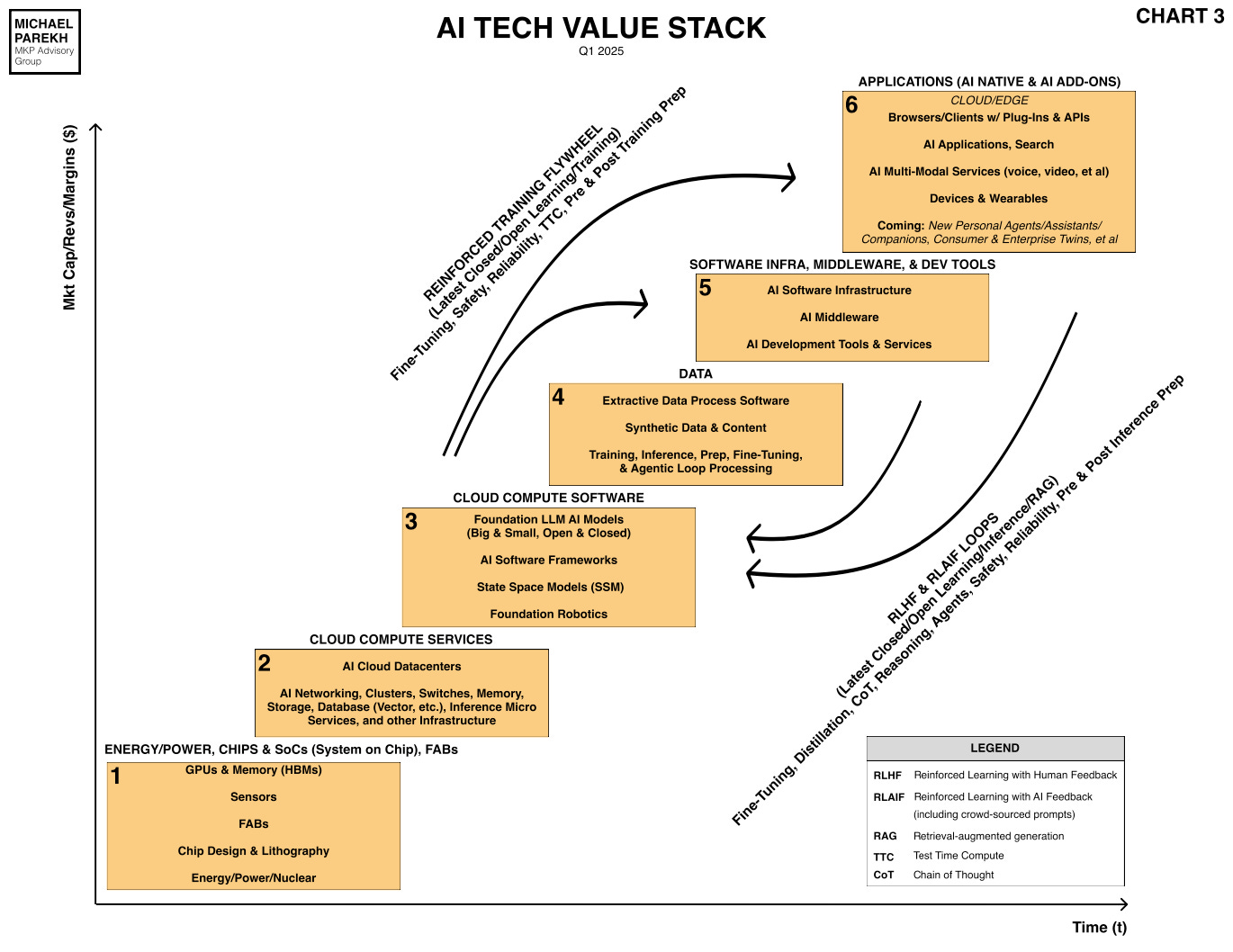

Nvidia remains laser-focused on its ‘accelerated computing’ roadmap, which for now is outpacing Moore’s Law. It all requires wide and deep technical innovation and execution, across the hardware and software layers in the AI Tech Stack I’ve discussed.

Nvidia is increasingly integrated as an ‘AI Systems’ provider up and down this tech stack, from Box 1 through 5 and possibly spilling into the ‘Holy Grail’ Box 6 for AI end user applications and services.

So for now the execution on this Nvidia roadmap continues in this AI Tech Wave at an impressive pace. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here.)