AI: Nvidia strikes a big AI Acqui-hire deal with Groq. RTZ #947

The AI deals in this AI Tech Wave didn’t even slow down for Christmas. Nvidia announced its own multi-billion dollar AI ‘acqui-hire’ of AI inference company Groq, echoing Meta with Scale AI, Google with Character AI, Microsoft with Inflection and so many others thus far.

It’s not Elon Musk’s Grok of xAI fame, but the AI semiconductor infrastructure and services firm ending with a ‘q’. And the details are worth noting for their implications next year and beyond.

The Information outlines it all in “Why Nvidia Struck a $20 Billion Megadeal with Groq”:

“Nvidia stunned Silicon Valley on Wednesday by agreeing to pay about $20 billion to license technology from Groq, one of the best-funded startups trying to challenge Nvidia’s dominance in chips for powering AI applications, known as inference computing, according to a person involved in the deal. Nvidia is also hiring Groq’s founders and other leaders, according to the startup, which didn’t disclose the financial details.”

The financial arrangements echo earlier deals by other companies mentioned above:

“It isn’t clear whether the $20 billion figure includes future payouts from Nvidia based on performance milestones involving the Groq hires. Still, it is about three times Groq’s $6.9 billion valuation in a financing just a few months ago.”

“The deal is structured as a nonexclusive licensing deal, a type of transaction Microsoft, Google and Amazon have used over the last two years to hire key AI talent and license technology from several high profile startups without formally acquiring them and triggering regulatory reviews.”

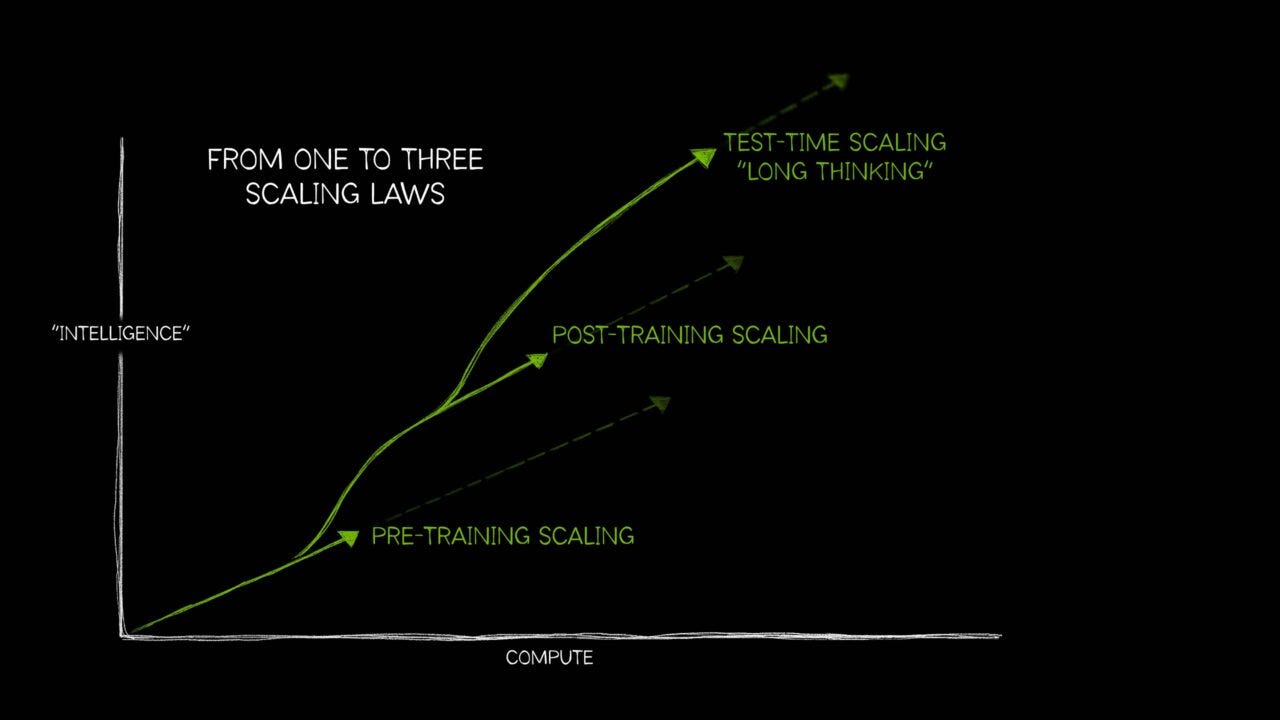

Nvidia’s goal is to beef up its capabilities in AI inference, a key AI infrastructure application of its chips beyond AI training, and something critical as AI scales to billions in 2026 and beyond.

“The arrangement could help Nvidia, the world’s most valuable company, design server chips that are potentially cheaper and faster at running AI applications, compared to Nvidia’s current line of chips.”

“While Nvidia’s chips and tightly integrated software are widely considered to be the most powerful and effective on the market for developing new AI models, applications such as chatbots may not need such powerful chips to run those models. Many AI providers have been hoping that less-expensive chip alternatives, such as Groq’s, would become reliable enough to use.”

This is despite Nvidia’s expanding AI moat to date that I’ve discussed:

“But Nvidia’s hold over application developers has been tough to beat, in part because they have gotten used to running AI using Nvidia’s proprietary Cuda programming language and because the Nvidia chips have reliably powered AI services such as ChatGPT and Claude.”

“The licensing arrangement gives Nvidia access to Groq’s intellectual property, which the startup says can produce chips that process data faster for specific tasks involving AI apps. Nvidia’s chips are much larger and take longer to process data, but the chips also have more flexibility to handle different types of operations well.”

“‘We plan to integrate Groq’s low-latency processors into the Nvidia AI factory architecture, extending the platform to serve an even broader range of AI inference and real-time workloads,’ Huang said in an internal email sent to employees. The AI factory architecture refers to the combined hardware and software system Nvidia offers to AI customers.”

Groq has an impressive pedigree in its founder and history:

“Groq was started in 2016 by Jonathan Ross, who worked on an early version of Google’s in-house AI chips known as tensor processing units. Groq started a cloud business last year that lets small developers run open-source AI models using its chips, called language processing units. That business will remain at Groq following the deal with Nvidia, Groq said Wednesday.”

“Ross, Groq president Sunny Madra and other staff will join Nvidia to “advance and scale” the licensed technology, Groq said. Simon Edwards, who joined Groq as chief financial officer in September, will become the new CEO. Edwards declined to comment.”

Groq has been an AI Startup to watch thus far:

“Groq has raised about $1.8 billion from investors including Blackrock and Tiger Global Management. As a result of the licensing deal, investors in the company will get a payout that includes earnouts based on future performance, according to two people familiar with the matter. The startup’s key executives will also get payouts, said the same people and an additional person with knowledge of the matter.”

The challenge for even successful AI startups to date is getting to global scale in the shortest time:

“Despite billions of dollars in venture funding, Nvidia challengers including Groq have struggled to break the company’s tight grip on the market for advanced AI chips. Nvidia chips have maintained a lead in performance and are widely available on major cloud services, which have been reluctant to offer alternatives from such startups.”

“Groq recently cut its 2025 revenue projections by about three-quarters. A Groq spokesperson at the time said the company shifted some revenue projections to next year because of a lack of data center capacity in a region where it planned to install more chips.”

“In July, Groq projected the cloud business would make more than $40 million of revenue this year and projected more than $500 million in overall sales.”

Groq’s success to date has leaned on AI sales in the Middle East:

“The company has had some success selling its chips in Saudi Arabia, where the state-backed AI company Humain is using them to power a local cloud service for running open-source models. Groq and Humain said last month they planned to triple the amount of Groq chips available in Saudi Arabia, without specifying a timeline.”

And as mentioned before, the Nvidia deal echoes other AI acqui-hire deals thus far:

“The licensing-and-hiring deal Nvidia struck with Groq has a similar structure to deals Microsoft, Amazon and Google used to hire founders of AI startups without officially acquiring the firms. Google’s roughly $3 billion deal with Character.ai last year nevertheless triggered a review from the U.S. Department of Justice, but no action has been taken. Although Nvidia isn’t currently facing an antitrust review in the U.S., it has been careful to avoid describing itself as holding an outsized share of the AI chip market.”

Nvidia of course has a history of notable acquisitions in its AI journey thus far:

“Nvidia has periodically made large acquisitions, though nothing on the order of the Groq deal. In 2019, it paid $6.9 billion for Mellanox, which specialized in high-performance networking for data centers. Nvidia’s networking segment, largely driven by technologies derived from Mellanox’s products, comprised around 14% of its revenue, or $20 billion, in the nine months ending in October.”

“Nvidia also has been using its ever-growing cash pile, which reached $60 billion as of the end of October, to help cement its business by funding dozens of cloud providers and startups that exclusively buy or rent Nvidia chips.”

“Nvidia also struck a Groq-style deal three months ago when it spent more than $900 million to hire the CEO of networking startup Enfabrica and a number of engineering employees, as well as pay for the firm’s technology in a nonexclusive license, according to two people with direct knowledge. Enfabrica’s technology connects GPUs so all the chips can process large amounts of data quickly.”

In particular, this deal drills down on a key AI market need going forward:

“Huang appears to have recognized the growing demand for more specialized chips for inference workloads, or handling applications running AI models. Nvidia in September released a specialized chip, the Rubin CPX, aimed at handling such workloads better than its other chips. However, the chip was still based on its more general purpose graphics processing units rather than the more specialized chips its competitors including Groq are designing.”

“Groq’s first-generation chips were not competitive [with Nvidia’s chips], but there are two [more] generations coming back-to-back soon,” said Dylan Patel, chief analyst at chip consultancy SemiAnalysis. “Nvidia likely saw something they were scared of in those.”

And it shores up Nvidia’s flank vs. AI chip challengers Google, AMD and others:

“Nvidia faces additional competition from Google’s TPUs that can be used for developing AI models as well as inference workloads. Major companies such as Apple have used TPUs rather than Nvidia GPUs to train their largest AI models, and Anthropic has also become a major TPU buyer.”

And of course there is the ‘Frenemies’ nature of the AI chip landscape today:

“Other large customers of Nvidia chips, including Meta and OpenAI, are also working on their own specialized inference chips for running AI models as a way to reduce the stranglehold Nvidia has on their technologies.”

“Challenging Nvidia directly has been difficult for other startups besides Groq. Such startups have increasingly sought to be acquired. Intel, for instance, is in advanced talks to acquire AI chip startup SambaNova and the deal could be announced as soon as next month, according to a person with knowledge of the discussions. And in October, Meta acquired AI chip startup Rivos to boost its internal chip development. In June, Advanced Micro Devices hired the staff behind Untether AI, which also develops chips for running AI models.”

The frenetic pace of the AI industry to date and its fluid changes highlight the need for chess-like deals such as this one.

Even if it’s during Christmas week in this AI Tech Wave in 2025. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)