AI: OpenAI adds 'open weight' AI models to the mix. RTZ #677

Well, it finally happened. OpenAI, as explained by co-founder/CEO Sam Altman, has joined the ‘open’ side of the ‘open vs. closed’ spectrum in AI software. As discussed before, this has been a long-running debate in software going back decades, and a lively one in the AI context throughout this AI Tech Wave.

Of the big LLM AI companies, only Meta has had a full-fledged focus on open models, with its open-source Llama series of LLM AI models. DeepSeek in China has of course followed suit strongly, with global impact over the past few months.

Now OpenAI has thrown its hat into the ring with some of its models, adding AI reasoning to the capabilities mix. As Sam Altman explains it, in his customary all-lowercase style:

“we are excited to release a powerful new open-weight language model with reasoning in the coming months, and we want to talk to devs about how to make it maximally useful: https://openai.com/open-model-feedback… “

His comments build on his ‘Intelligence Age’ essay I discussed last year. He went on in the same post:

“we are excited to make this a very, very good model! __ we are planning to release our first open-weight language model since GPT-2. we’ve been thinking about this for a long time but other priorities took precedence. now it feels important to do. before release, we will evaluate this model according out our preparedness framework, like we would for any other model. and we will do extra work given that we know this model will be modified post-release.”

“we still have some decisions to make, so we are hosting developer events to gather feedback and later play with early prototypes. we’ll start in SF in a couple of weeks followed by sessions in europe and APAC. if you are interested in joining, please sign up at the link above. we’re excited to see what developers build and how large companies and governments use it where they prefer to run a model themselves.”

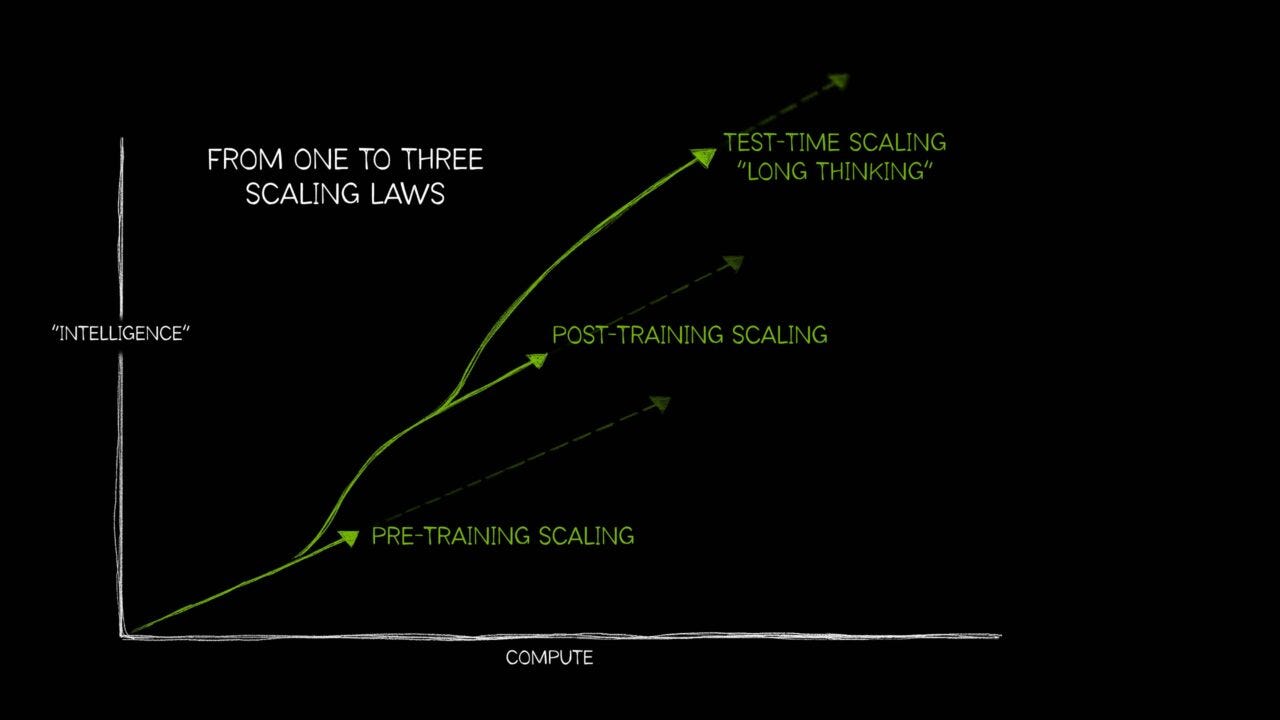

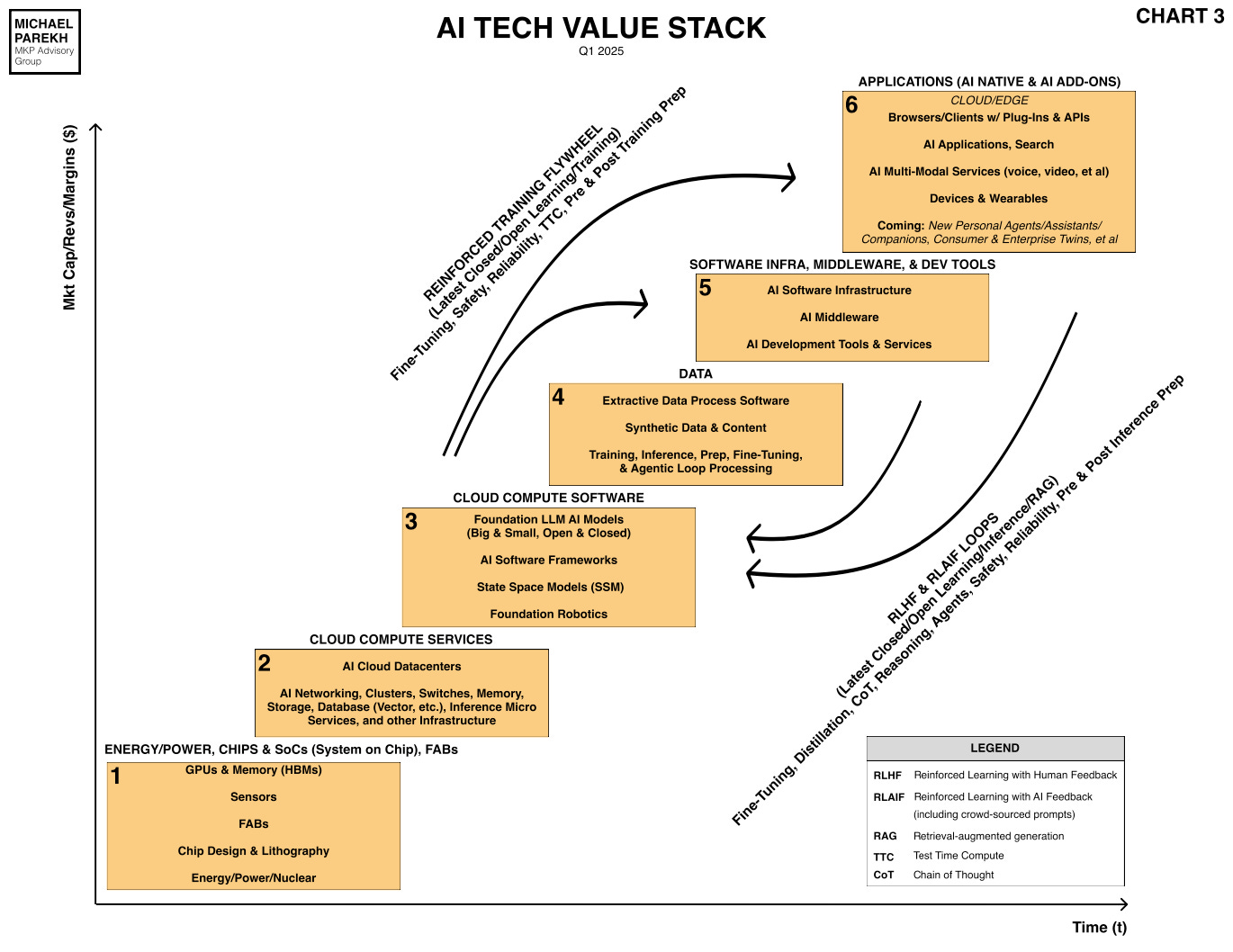

It all helps developers build faster and more cheaply, scaling AI along all of its ‘trifurcated’ growth curves ahead.

Wired provides more context in “Sam Altman Says OpenAI Will Release an ‘Open Weight’ AI Model This Summer”:

“The move is partly a response to the runaway success of the R1 model from Chinese company DeepSeek, as well as the popularity of Meta’s Llama models.”

“Shortly after DeepSeek’s model was released in January, Altman said that OpenAI was ‘on the wrong side of history’ regarding open models, signaling a likely shift in direction. On Monday he said that the company has been thinking about releasing an open-weight model for some time, adding ‘now it feels important to do.’”

Initial reaction from the AI community was of course positive:

“This is amazing news,” Clement Delangue, cofounder and CEO of HuggingFace, a company that specializes in hosting open AI models, told WIRED. “With DeepSeek, everyone’s realizing the power of open weights.”

“OpenAI currently makes its AI available through a chatbot and through the cloud. R1, Llama and other open-weight models can be downloaded for free and modified. A model’s weights refers to the values inside a large neural network—something that is set during training. Open-weight models are cheaper to use and can also be tailored for sensitive use cases, like handling highly confidential information.”

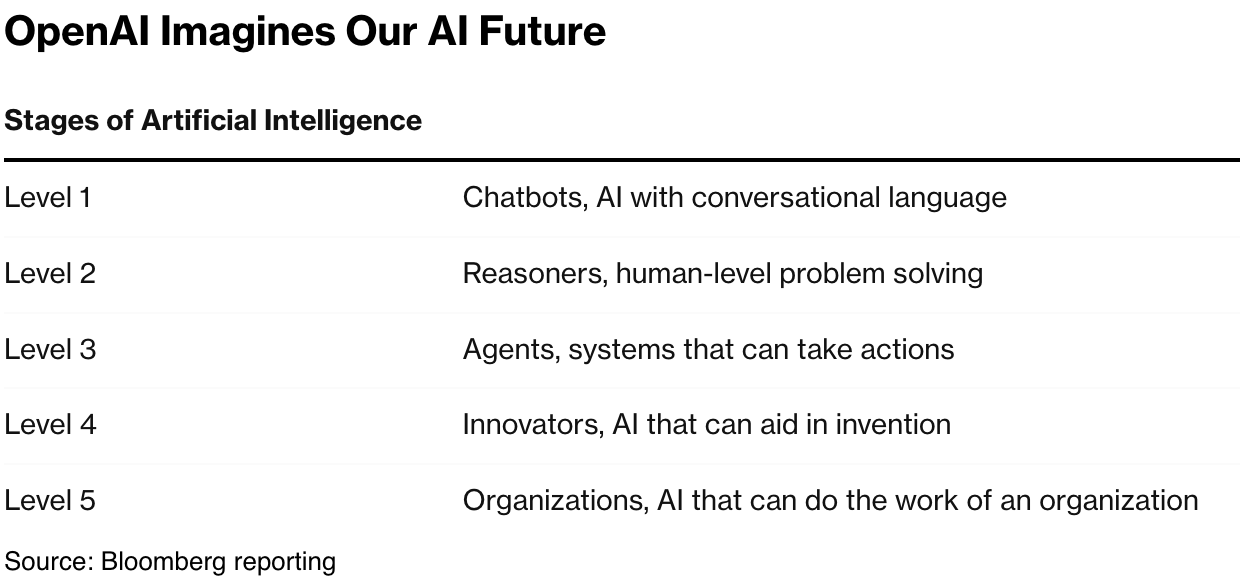

These moves also help the AI community build more AI Reasoning and Agent applications and services, leveraging OpenAI and other technologies.

More color on the ‘open weights’ moves from OpenAI followed:

“Steven Heidel, a member of the technical staff at OpenAI, reposted Altman’s announcement and added, ‘We’re releasing a model this year that you can run on your own hardware.’”

“Johannes Heidecke, a researcher working on AI safety at OpenAI, also reposted the message on X, adding that the company would conduct rigorous testing to ensure the open-weight model could not easily be misused. Some AI researchers worry that open-weight models could help criminals launch cyberattacks or even develop biological or chemical weapons. ‘While open models bring unique challenges, we’re guided by our Preparedness Framework and will not release models we believe pose catastrophic risks,’ Heidecke wrote.”

“OpenAI today also posted a webpage inviting developers to apply for early access to the forthcoming model. Altman said in his post that the company would host events for developers with early prototypes of the new model in the coming weeks.”

So OpenAI now joins Meta, part way, in the open-source, open-weight release of some of its technology:

“Meta was the first major AI company to pursue a more open approach, releasing the first version of Llama in July 2023. A growing number of open-weight AI models are now available. Some researchers note that Llama and some other models are not as transparent as they could be, because the training data and other details are still kept secret. Meta also imposes a license that limits other companies’ ability to profit from applications and tools built using Llama.”

All this means general acceleration of AI software and technologies up and down the AI tech stack, with both open and closed source and weights adding more Lego pieces for everyone to scale AI faster and more cheaply.

Not to mention the ongoing acceleration of OpenAI’s core ChatGPT business, via both subscriptions and APIs. As The Information notes in “ChatGPT Revenue Surges 30%—in Just Three Months”:

“ChatGPT has hit 20 million paid subscribers, according to a spokesperson.”

“That’s up from 15.5 million at the end of last year, as we previously reported.”

“It turns out a lot of people are willing to pay for a chatbot that can code, write, give personalized health advice and medical diagnoses and cook up detailed financial plans, among countless other tasks.”

“The strong growth rate suggests ChatGPT is currently generating at least $415 million in revenue per month (a pace of $5 billion a year), up 30% from at least $333 million per month ($4 billion annually) at the end of last year.”
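A quick back-of-the-envelope check of the run-rate math above, as a minimal Python sketch using only the quoted figures. Both monthly numbers are stated as lower bounds (“at least”), so the outputs are floors too; the helper names are my own, for illustration only. Note that the ratio of the two stated floors works out to roughly 25%; since both are minimums, the underlying growth figure may differ.

```python
# Monthly ChatGPT revenue figures as quoted (USD); both are lower bounds.
MONTHLY_REVENUE_NOW = 415e6   # "at least $415 million per month"
MONTHLY_REVENUE_PREV = 333e6  # "at least $333 million per month", end of 2024


def annualize(monthly: float) -> float:
    """Convert a monthly revenue figure into an annual run rate."""
    return monthly * 12


def pct_change(new: float, old: float) -> float:
    """Percentage change from old to new."""
    return (new / old - 1) * 100


annual_now = annualize(MONTHLY_REVENUE_NOW)
annual_prev = annualize(MONTHLY_REVENUE_PREV)
growth = pct_change(MONTHLY_REVENUE_NOW, MONTHLY_REVENUE_PREV)

print(f"Current run rate:  ${annual_now / 1e9:.2f}B per year")   # $4.98B
print(f"Previous run rate: ${annual_prev / 1e9:.2f}B per year")  # $4.00B
print(f"Change between the two monthly floors: {growth:.1f}%")   # 24.6%
```

The annualized figures line up with the quoted ~$5 billion and ~$4 billion paces.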

“The actual figure could be somewhat higher, given that corporate ChatGPT plans are more expensive and the company has had early success selling $200-a-month Pro plans, which are 10 times more expensive than basic ChatGPT Plus plans.”

“ChatGPT revenue is separate from OpenAI sales of AI models through an application programming interface, which the company previously projected would generate about $2 billion this year.”

“If OpenAI keeps up this growth rate or anything like it, its overall revenue projection of $12.7 billion in 2025, up from about $4 billion in 2024, seems well within reach. (Revenue projections through 2029 can be found here, and the company’s very high cash burn projections are here.)”
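To put the $12.7 billion projection in perspective, here is a small Python sketch of what it implies per month, compared with ChatGPT’s quoted floor of $415 million per month. It assumes revenue is spread evenly across the year, which is a simplifying assumption on my part, and uses the approximate “about $4 billion” 2024 figure as quoted.

```python
# Figures as quoted (USD); the 2024 number is approximate ("about $4 billion").
PROJECTED_2025 = 12.7e9
ACTUAL_2024 = 4e9
CHATGPT_MONTHLY_FLOOR = 415e6

# How many times larger the 2025 projection is than 2024 revenue.
growth_multiple = PROJECTED_2025 / ACTUAL_2024

# Simplifying assumption: revenue is spread evenly over 12 months.
avg_monthly_needed = PROJECTED_2025 / 12

# ChatGPT's current monthly floor as a share of that monthly average.
chatgpt_share_of_needed = CHATGPT_MONTHLY_FLOOR / avg_monthly_needed * 100

print(f"2025 vs. 2024: {growth_multiple:.1f}x")                           # 3.2x
print(f"Average monthly revenue needed: ${avg_monthly_needed / 1e9:.2f}B")  # $1.06B
print(f"ChatGPT floor as share of that: {chatgpt_share_of_needed:.0f}%")    # 39%
```

In other words, hitting the projection means roughly tripling revenue, with API and other sales covering whatever ChatGPT subscriptions do not.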

“The overall number of ChatGPT users grew faster than revenue: OpenAI said Monday it has 500 million weekly users, up from 350 million at the end of last year, a growth rate of 43% in three months. It means 4% of users pay a subscription, down from 5% of users three months ago.”
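The user-versus-subscriber math can be checked with a minimal Python sketch using only the quoted counts (in millions); the helper names are illustrative. Computed directly from those counts, the end-of-2024 paying share comes out nearer 4.4% than the 5% quoted, so those percentages are best read as rough approximations.

```python
# Weekly users and paid subscribers, in millions, as quoted.
WEEKLY_USERS_NOW, WEEKLY_USERS_PREV = 500.0, 350.0
PAID_SUBS_NOW, PAID_SUBS_PREV = 20.0, 15.5


def pct_change(new: float, old: float) -> float:
    """Percentage change from old to new."""
    return (new / old - 1) * 100


def paying_share(subs: float, users: float) -> float:
    """Paid subscribers as a percentage of weekly users."""
    return subs / users * 100


user_growth = pct_change(WEEKLY_USERS_NOW, WEEKLY_USERS_PREV)
sub_growth = pct_change(PAID_SUBS_NOW, PAID_SUBS_PREV)
share_now = paying_share(PAID_SUBS_NOW, WEEKLY_USERS_NOW)
share_prev = paying_share(PAID_SUBS_PREV, WEEKLY_USERS_PREV)

print(f"Weekly user growth:        {user_growth:.1f}%")  # 42.9%
print(f"Paid subscriber growth:    {sub_growth:.1f}%")   # 29.0%
print(f"Paying share now:          {share_now:.1f}%")    # 4.0%
print(f"Paying share, end of 2024: {share_prev:.1f}%")   # 4.4%
```

Users grew faster than subscribers, which is why the paying share slipped even as subscription revenue rose.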

So the core business is doing well ahead of these ‘open weight’ developments.

Sam Altman is also hinting at AI hardware down the road, perhaps through his and OpenAI’s dalliance with former Apple design chief Jony Ive. As Axios explains:

“Over the weekend, Altman also offered a tantalizing tease of the company’s long-brewing hardware plans.”

“Responding to an X engineer who said that computers should be cute, Altman wrote, ‘We are gonna make a rly cute one,’ without offering further details.”

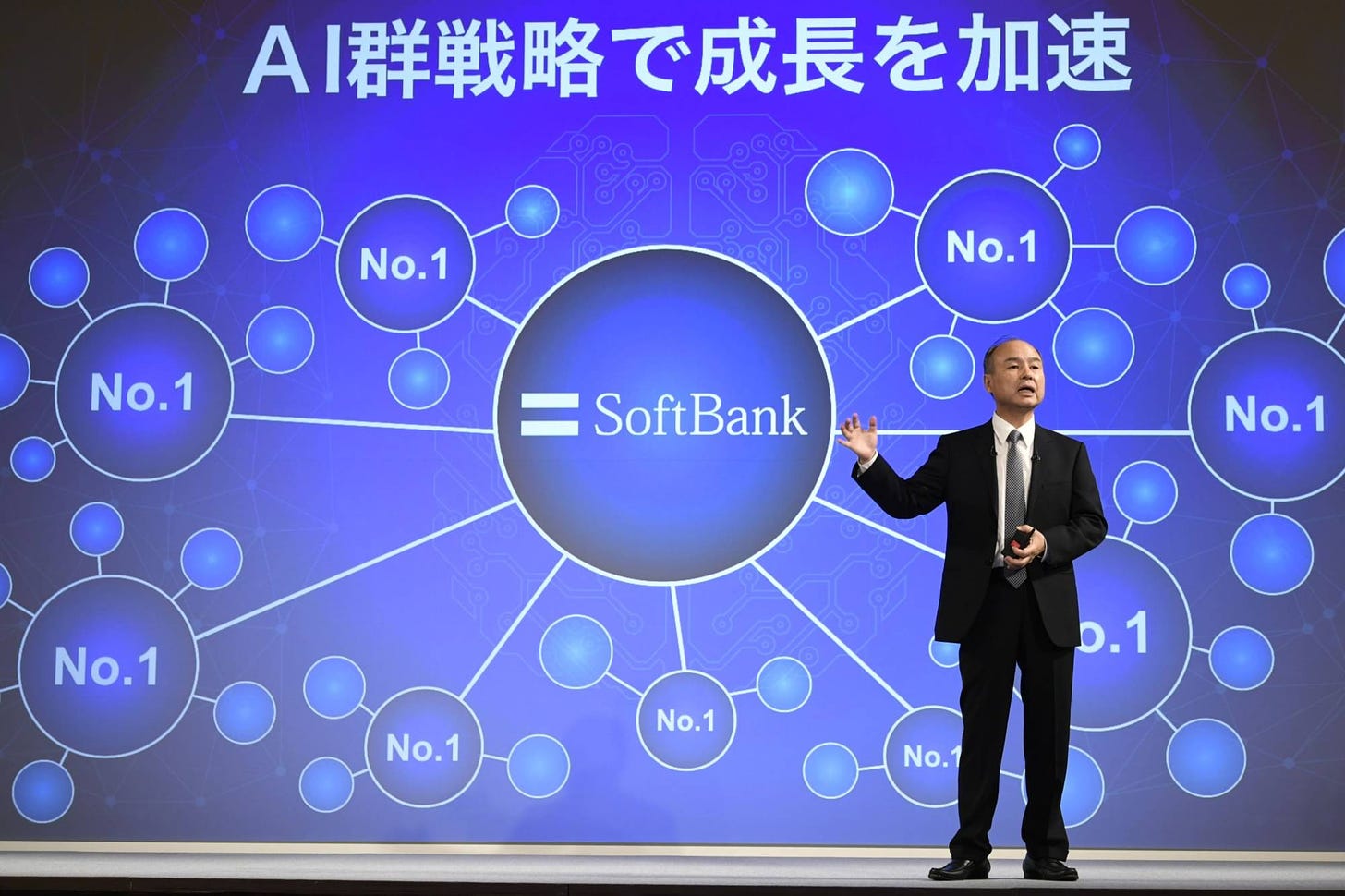

That project, of course, has SoftBank’s Masayoshi Son in with a billion-dollar investment.

Speaking of Masa and SoftBank, OpenAI also closed its next big follow-on round of $40 billion with SoftBank and other investors, at a record-breaking $300 billion valuation. That adds to its resources for building Stargate AI data centers with SoftBank and others.

With all of the above, plus the recent ‘Ghibli’-powered success of its image generation launch in ChatGPT/GPT-4o, OpenAI is rolling into the second quarter with some AI momentum. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)