AI: OpenAI leans into Healthcare with ChatGPT. RTZ #963

The Bigger Picture, Sunday, January 11, 2026

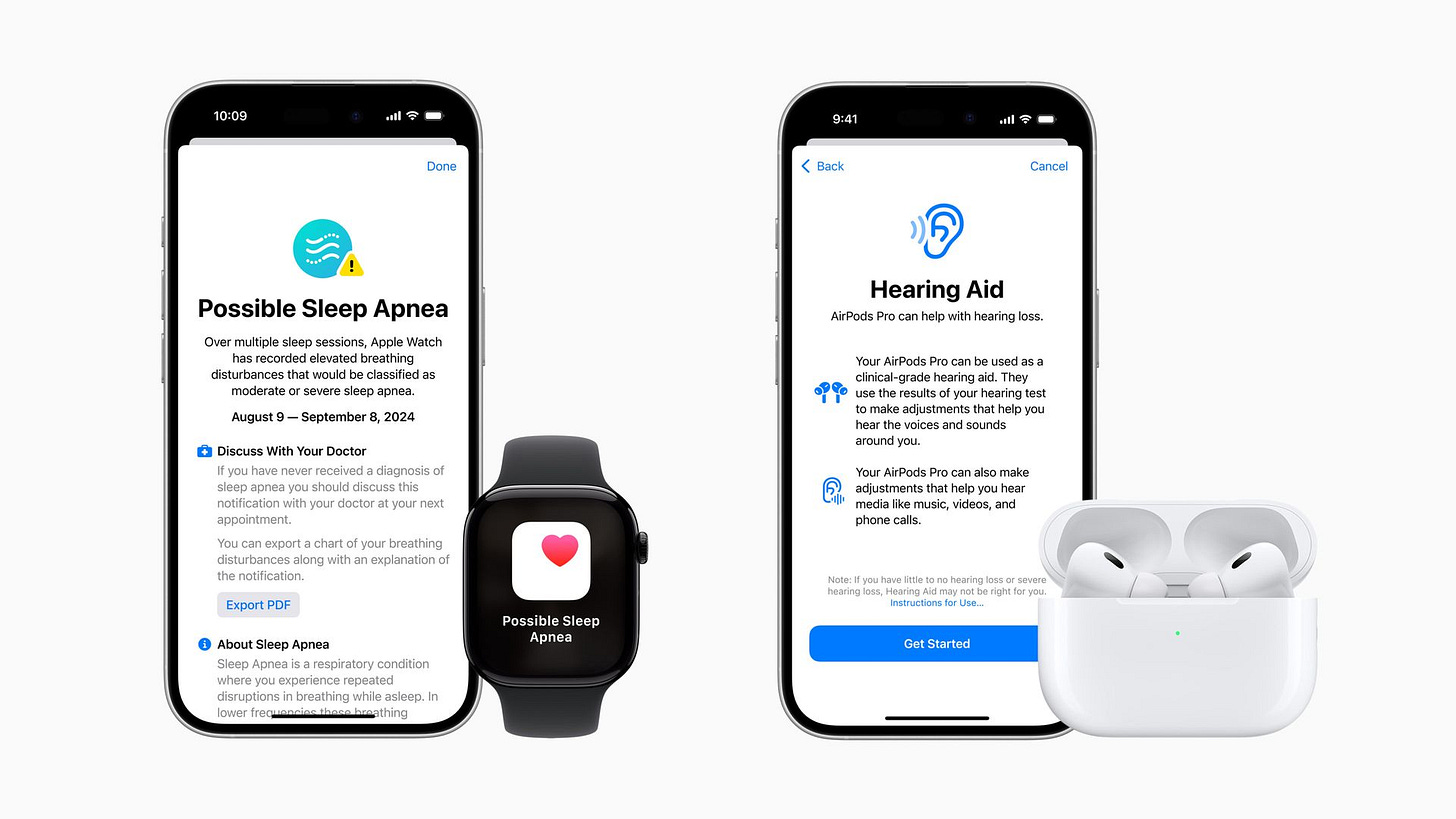

To date in this AI Tech Wave, Apple has been the most visible in stressing Health applications and services across its many platforms, from the Apple Watch and other wearables to iPhones.

And it is leaning in with its evolving Apple Intelligence to enhance healthcare AI features, from hearing tests to monitoring and measuring body vitals.

Other tech companies like Google have also been focused on AI Healthcare Science, in particular with the AlphaFold initiatives at DeepMind. I’ve written about AI and Healthcare for a while now in these pages as well.

Now OpenAI is leaning into AI driven healthcare via ChatGPT as well. It’s something that has been building for a couple of years at least. And that is a Bigger Picture worth a discussion this Sunday.

Axios has run a number of pieces on this OpenAI initiative in recent days, including some on broader concerns around AI in Healthcare. Axios notes in “OpenAI’s ChatGPT Health tools spark safety debate”:

“Mixed reactions to OpenAI’s new ChatGPT Health feature highlight demand for more personalized medical help tempered by chatbot safety and privacy concerns.”

“Why it matters: Health has become a go-to topic for chatbot queries, with more than 40 million people consulting ChatGPT daily for health advice and hundreds of millions doing so each week.”

“Catch up quick: OpenAI is adding a new health tab within ChatGPT that includes the ability to upload electronic medical records and connect with Apple Health, MyFitnessPal and other fitness apps.”

“The new features formalize how many people already use the chatbot: uploading test results, asking questions about symptoms and trying to navigate the complex health care ecosystem.”

“OpenAI says it will keep the new health information separate from other types of chats and not train its models on this data.”

As I’ve outlined before, both Trust and Privacy in AI solutions are fundamental inputs at scale, which has given companies like Apple a bit of a head start vs tech companies like Meta, Elon’s xAI/Grok, and others. They matter particularly in healthcare, of course.

“Yes, but: Health information shared with ChatGPT doesn’t have the same protections as medical data shared with a health provider, and even those protections vary by country.”

““The U.S. doesn’t have a general-purpose privacy law, and HIPAA only protects data held by certain people like healthcare providers and insurance companies,” Andrew Crawford, senior counsel for privacy and data at the Center for Democracy and Technology, told Axios in a statement.”

““And since it’s up to each company to set the rules for how health data is collected, used, shared, and stored, inadequate data protections and policies can put sensitive health information in real danger,” he says.”

“OpenAI is starting with a small group of early testers, notably not those in the European Economic Area, Switzerland and the United Kingdom where local regulations require additional compliance measures.”

The market of course will give OpenAI a chance here, given the global popularity of ChatGPT:

“The big picture: Many AI enthusiasts on social media welcomed the tools, pointing to how ChatGPT already helps them.”

“Yana Welinder, head of AI for Amplitude, has been using ChatGPT “constantly” for health queries in recent months, both for herself and family members. “The only downside was that all of this lived alongside my very heavy other usage of ChatGPT,” she wrote on X. “Projects helped a bit, but I really wanted a dedicated space … So excited about this.””

“Chatbots aren’t a replacement for doctors, OpenAI says, at the same time highlighting what the chatbot can provide.”

““It’s great at synthesizing large amounts of information,” Fidji Simo, CEO of applications at OpenAI, said Wednesday on a call with reporters. “It has infinite time to research and explain things. It can put every question in the context of your entire medical history.””

But there are big technical problems to solve here as we rush into the popular AI tools in front of us, especially given mainstream users’ propensity to humanize and anthropomorphize AI technologies so easily:

“The other side: AI skeptics question giving medical information to a chatbot that has shown a propensity to reinforce delusions and even encourage suicide.”

““What could go wrong when an LLM trained to confirm, support, and encourage user bias meets a hypochondriac with a headache?” Aidan Moher wrote on BlueSky.”

“Anil Dash, advocate for more humane technology, agreed on BlueSky that it “isn’t a good idea,” but also wrote that “it’s vastly more understandable than most medical jargon, far more accessible than 99% of people’s healthcare that they can afford, and very often pretty accurate in broad strokes, especially compared to WebMD or Reddit.””

The thorniest question, of course, is the fungibility of Data, the key ingredient for making AI do anything useful at all, especially in the ‘Physical AI’ world. It’s Box no. 4 in the AI Tech Stack below.

“Between the lines: Like other information shared with ChatGPT, health information could potentially be made available to litigants or government agencies via a subpoena or other court order.”

“That seems particularly noteworthy at a time when access to reproductive health care and gender-affirming care are under threat at both the state and federal levels.”

“User data could get swept up in other ways, too. As part of their copyright battle against OpenAI, news organizations have obtained access to millions of ChatGPT logs, including from temporary chats that were meant to be deleted after 30 days.”

“Sam Altman has called for some sort of legal privilege to protect sensitive health and legal information.”

“What to watch: OpenAI said it has more health features on its road map and will talk soon about additional work with various health care systems.”

Of course, the above discussion centers on OpenAI and other LLM AI chatbots being used by end users for their personal health.

The other side of the coin is the use of AI by pharma companies, working with LLM AI companies and Transformer-based AI technologies, to discover and construct better treatments faster and cheaper.

Here, a must-read piece by The Economist, “An AI revolution in drugmaking is under way”, provides positive data on how this is actually proceeding in these early AI days:

“It will transform how medicines are created—and the industry itself”.

“Insilico Medicine, a biotech firm in Boston, seems to have been the first to apply the new generation of AI, based on so-called transformer models, to the business of finding drugs. Back in 2019 its researchers wondered whether they could use these to invent new drugs from biological and chemical data. Their first quarry was idiopathic pulmonary fibrosis, a lung disease.”

“They began by training an AI on datasets related to this condition and found a promising target protein. A second AI then suggested molecules that would latch onto this protein and change its behaviour, but were not too toxic or unstable. After that human chemists took over, creating and testing the shortlisted molecules. They called the result rentosertib, and it has recently completed successful mid-stage clinical trials. The firm says it took 18 months to arrive at a candidate for development—compared with a usual timeline of four and a half years.”

Fast forward to today, and the numbers are promising indeed:

“Insilico now has a pipeline of more than 40 AI-developed drugs it is assessing for conditions such as cancers and diseases of the bowels and kidneys. And its approach is spreading. One projection suggests annual investment in the field will rise from $3.8bn in 2025 to $15.2bn in 2030.”
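That projection implies a steep compound growth rate. As a quick check of the arithmetic, using only the two figures quoted and assuming smooth compounding over the five years from 2025 to 2030, quadrupling in five years works out to roughly 32% a year:

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate implied by two endpoint values."""
    return (end_value / start_value) ** (1 / years) - 1

# Figures from the projection quoted above, in billions of US dollars.
rate = cagr(3.8, 15.2, 2030 - 2025)
print(f"Implied annual growth: {rate:.1%}")  # → Implied annual growth: 32.0%
```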

“Tie-ups between pharma companies and AI firms are also becoming common. In 2024 a dozen deals were announced, with a combined value of $10bn according to IQVIA, a health-intelligence company.”

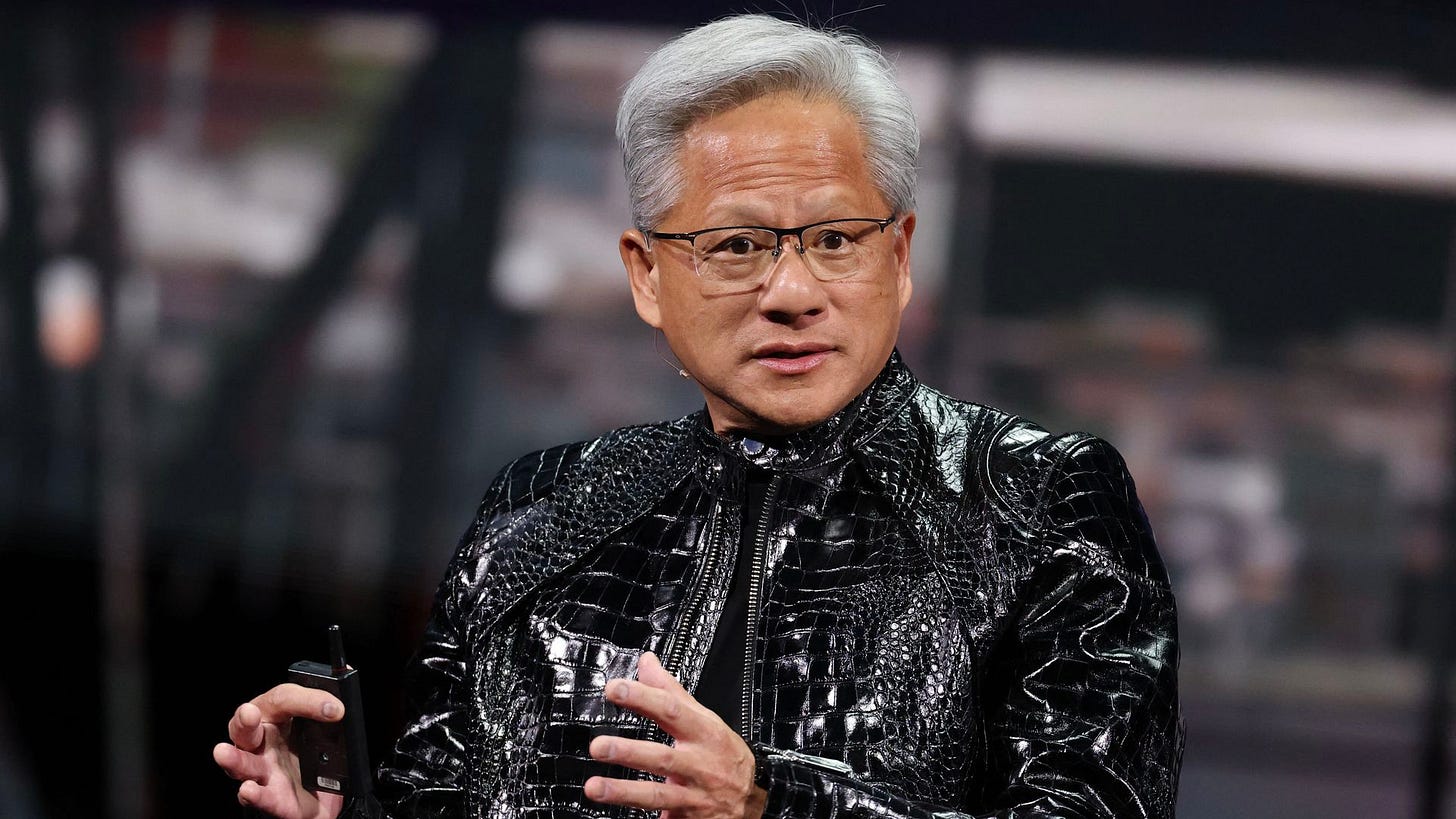

Nvidia is also a key company working with big pharma in these new healthcare AI arenas:

“And last October Eli Lilly, another pharma giant, announced a collaboration with Nvidia, the firm whose chips are widely used to train and run transformer-based AI models, to build the industry’s most powerful supercomputer, and thus speed up drug discovery and development.”

And both the pharma and AI industries are figuring out how to make the new economics work within the healthcare industry’s long-established business models and regulatory regimes:

“Given the pharmaceutical industry’s weird economics—candidate drugs entering clinical trials have a 90% failure rate, bringing the cost of developing a successful one to a whopping $2.8bn—even marginal improvements in efficiency would offer big gains. Reports from across the industry suggest that AI has begun to deliver these. AI-designed drugs are whizzing through the preclinical phase (that before human trials begin) in only 12-18 months, compared with three to five years previously. And the success of AI-designed drugs in safety trials is better too. A study published in 2024, of their performance in such trials, found an 80-90% success rate. This compares with historical averages of 40-65%. That, in turn, boosts the overall rate of getting drugs successfully through the entire pipeline to 9-18%, up from 5-10%.”
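The reasoning in that passage rests on two simple relationships: a candidate’s chance of making it all the way through is the product of its chances at each stage, and the expected cost per approved drug scales inversely with that overall chance. Here is a minimal Python sketch under loudly stated assumptions: stages are treated as independent, everything after the safety (Phase I) stage is lumped into one hypothetical, fixed success rate (`LATER_STAGES`), and every candidate is assumed to cost the same (a hypothetical $280m, chosen only because it reproduces the quoted $2.8bn at a 10% success rate). The output illustrates the mechanism; it does not reproduce the study’s 9-18% estimate, whose inputs aren’t given here.

```python
from math import prod

def overall_success(stage_rates):
    """Chance a candidate clears every stage, treating each rate as conditional
    on having passed the previous stage (stages assumed independent)."""
    return prod(stage_rates)

def cost_per_approval(cost_per_candidate, p_overall):
    """Expected spend per approved drug if every candidate costs the same
    (a deliberate simplification): total spend divided by expected approvals."""
    return cost_per_candidate / p_overall

# Hypothetical combined success rate for all stages after Phase I, held fixed.
LATER_STAGES = 0.15

# Phase I (safety) success ranges quoted above: historical vs AI-designed drugs.
for label, phase1 in [("historical", 0.40), ("historical", 0.65),
                      ("AI-designed", 0.80), ("AI-designed", 0.90)]:
    p = overall_success([phase1, LATER_STAGES])
    print(f"{label:12s} Phase I {phase1:.0%} -> overall {p:.1%}")

# Hypothetical $280m per candidate: at 10% overall success (the quoted 90%
# failure rate) this gives $2.8bn per approval; doubling the rate halves it.
for p in (0.10, 0.20):
    print(f"success {p:.0%}: ${cost_per_approval(280e6, p) / 1e9:.1f}bn per approved drug")
```

Holding the later stages fixed, doubling the Phase I pass rate doubles the end-to-end rate, and because the cost of failures is spread over every approval, it also halves the expected cost per approved drug in this simplified model.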

Add in the context of the ‘digital twins’ I’ve discussed before, and of synthetic patients, and the AI/Healthcare aperture opens up further:

“The most intriguing use of AI to improve trials, though, is the creation of synthetic patients (sometimes called digital twins) to act as matched controls for real participants. To do this an AI goes through data from past trials and learns to predict what might happen to a participant if they follow the natural course of their condition rather than being treated. Then, when a volunteer is enrolled in a trial and given a drug, the AI creates a “patient” with the same set of characteristics, such as age, weight, existing conditions and disease stage. The drug’s efficacy in the real patient can thus be measured against the progress of this virtual alternative.”

“If adopted, the use of synthetic patients would reduce the size of trials’ control arms and could, potentially, eliminate them entirely in some cases. Their use might also appeal to participants, since the chance of receiving the treatment under test rather than being put into a control group without it would rise.”
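The matched-control idea in that passage can be illustrated with a deliberately simple stand-in for the AI model: a k-nearest-neighbours predictor that estimates each enrolled patient’s untreated outcome from the most similar untreated patients in past trials, then measures the drug’s effect as the gap between the real and synthetic outcomes. This is a minimal sketch, not how any particular company builds digital twins; the `Patient` fields, the distance scales, `k`, and all the data below are hypothetical.

```python
from dataclasses import dataclass
from math import sqrt
from statistics import mean

@dataclass
class Patient:
    age: float
    weight_kg: float
    disease_stage: int
    outcome: float  # hypothetical change in a symptom score over the trial period

def distance(a, b, scales=(10.0, 15.0, 1.0)):
    """Scaled Euclidean distance over the matching characteristics
    (age, weight, disease stage); the scales are illustrative guesses."""
    return sqrt(((a.age - b.age) / scales[0]) ** 2
                + ((a.weight_kg - b.weight_kg) / scales[1]) ** 2
                + ((a.disease_stage - b.disease_stage) / scales[2]) ** 2)

def synthetic_outcome(participant, past_controls, k=3):
    """Predict the untreated ("natural course") outcome for a participant as the
    mean outcome of the k most similar untreated patients from past trials."""
    nearest = sorted(past_controls, key=lambda h: distance(participant, h))[:k]
    return mean(h.outcome for h in nearest)

def estimated_effect(treated, past_controls, k=3):
    """Average gap between each treated participant's real outcome and the
    outcome predicted for their synthetic twin."""
    return mean(p.outcome - synthetic_outcome(p, past_controls, k) for p in treated)

# Hypothetical untreated patients from earlier trials.
past_controls = [
    Patient(60, 80, 2, -1.0), Patient(62, 82, 2, -2.0), Patient(58, 78, 2, -3.0),
    Patient(40, 60, 1, 5.0), Patient(42, 62, 1, 4.0), Patient(38, 58, 1, 3.0),
]
# Hypothetical participants who received the drug under test.
treated = [Patient(61, 80, 2, 4.0), Patient(40, 60, 1, 7.0)]

print(estimated_effect(treated, past_controls))  # → 4.5
```

Because each synthetic twin is built from past data, fewer new volunteers need to be assigned to the control arm, which is the saving the passage describes; a real system would need far richer models and careful validation than nearest neighbours.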

Zoom out a bit, and one can see positive opportunities for OpenAI and the pharma industry on this end of the AI Healthcare ecosystem.

“Companies such as OpenAI, which led development of the transformers known as large language models, and Isomorphic Labs, a drug-discovery startup spun out of Google DeepMind, are already training systems to reason and make discoveries in the life sciences, hoping these tools will become capable biologists. For now, drug firms have the advantage of a wealth of data and the context to understand and use it, so collaboration is the order of the day. OpenAI, for example, is working with Moderna, a pioneer of RNA vaccines, to speed the development of personalised cancer vaccines. But as the new models make biology more predictable the balance of advantage in the industry may change.”

“Regardless of that, AI has already improved things greatly. If it can wring from late-stage trials the sorts of improvement it has brought to the earlier part of the process, the number of drugs arriving on the market should rise significantly. In the longer run, the possibilities for enhancing human health are enormous.”

The entire piece is worth reading in detail to get a fuller sense of the possibilities around AI and pharma processes to discover and develop new drugs.

This whole area of AI and Healthcare is just beginning to sort out the thorny details at this early point in the AI Tech Wave.

That applies at both ends of the AI and Healthcare pipeline: the creation of drugs and treatments, and how billions of mainstream users interact with the healthcare industry to access those faster-developed drugs and treatments. All with the necessary trust and privacy safeguards in place, and guardrails against end users anthropomorphizing AI.

And that is the Bigger Picture to keep in mind before we all rush in with AI on our personal health in 2026 and beyond. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)