AI: Progress not always in a straight line. RTZ #549

The Bigger Picture, November 24, 2024

Progress comes at many different paces.

In 1492, Columbus found the Americas while trying to reach India by what he thought was a short, straight line west. Historians agree he grossly underestimated the length of that ‘straight line’, by over 10,000 miles.

Over the next few hundred years, through many twists and turns, that voyage led to the USA, which celebrates its 250th anniversary in a couple of years.

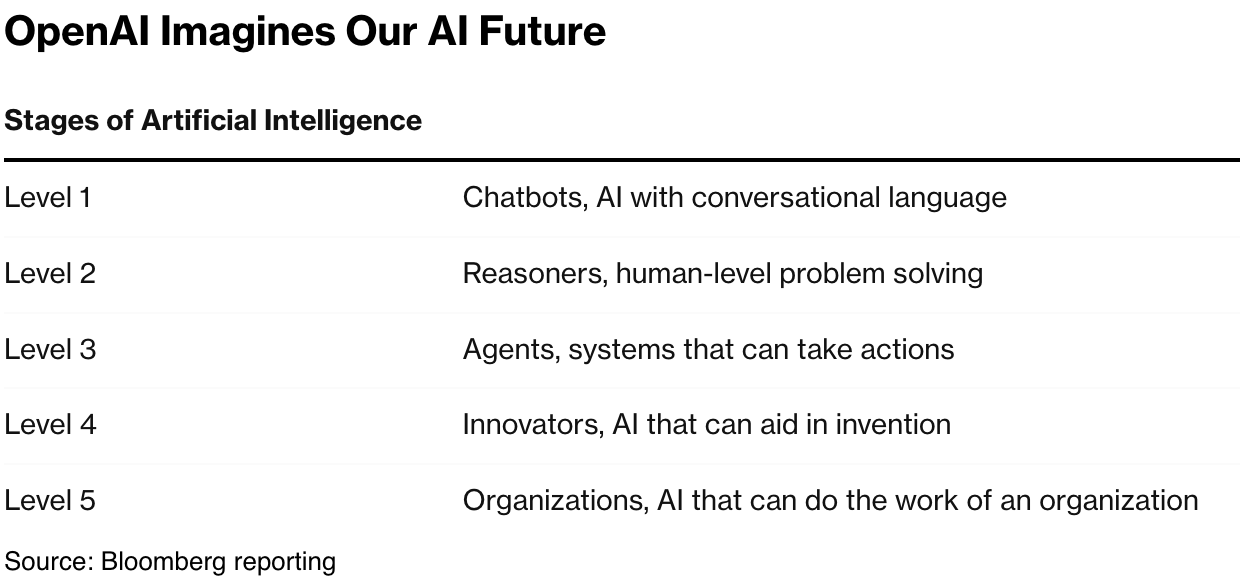

The road to AGI, or at least to truly delightful and USEFUL AI applications and services, will likely run through unexpected steps along the way, much like the non-linear path to AI Scaling I’ve discussed before. Those unexpected catalysts are the Bigger Picture I’d like to explore this Sunday.

A good illustration of ‘AI progress not always in a straight line’ is this 18-minute review of Apple’s ‘Apple Intelligence’ by tech reviewer MKBHD. He offers mixed, lukewarm praise for the actual Apple Intelligence features in various Apple apps.

But the real punch line comes at the end.

For those disinclined to watch the whole thing, here is the key point (spoiler alert), from my perspective. At the 17 minute mark, MKBHD says (links mine):

“Honestly, the best thing I think we’ve gotten from Apple Intelligence, because all this stuff is running on device, is bumping up the base memory in everything. There’s more RAM in every iPhone now, and the base memory on every Mac is 16 gigabytes (GB) now, which made the base Mac Mini an incredible deal.”

It’s true: iPhones now start at 8GB and Macs/iPads at 16GB. That is all good for local, on-device AI, which I’ve been talking about for a long time.

The point is not the specific capabilities and features of Apple Intelligence, which, as MKBHD and others have chronicled so far, are (in my paraphrase) ‘nice-to-haves’ but not yet ‘must-haves’.

It’s that our base devices are being set up to run AI apps, when they arrive, far more easily than before. And Apple’s moves on base memory will of course be emulated by its competitors, from Google Android smartphones to Microsoft Windows AI PCs built by hundreds of OEM vendors.

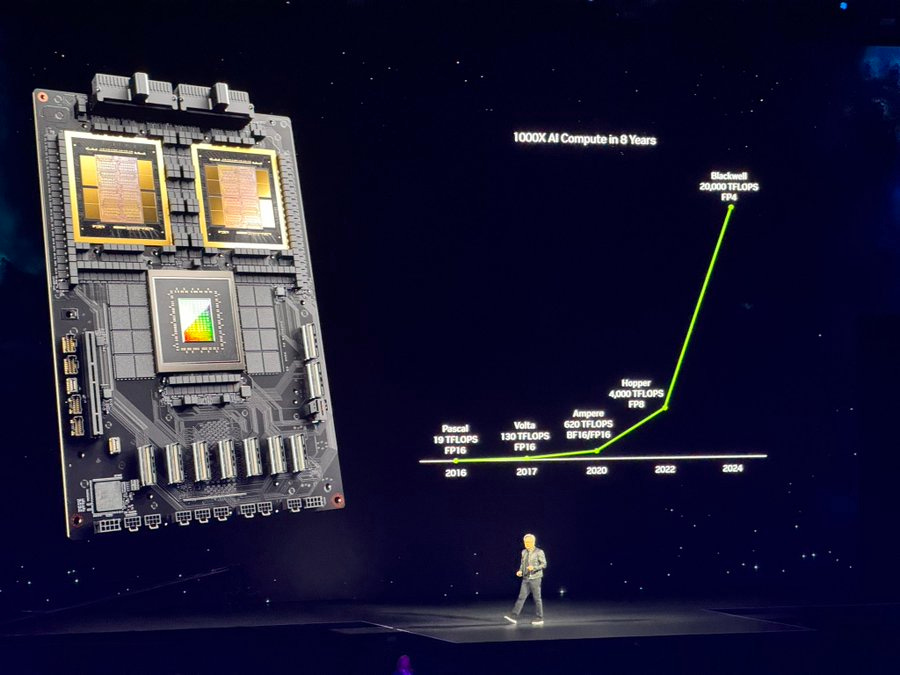

A bigger reason this matters is that AI has variable costs (aka ‘inference computing costs’) that today mostly run in multi-billion-dollar AI data centers, largely powered by Nvidia GPU chips, and that are multiple times more expensive per unit of compute than traditional computing has been over the past 70 years.

That will change as billions of local devices get upgraded with sufficient hardware, and smaller AI models, to do more inference computing locally. The global smartphone market is 2-4x bigger than AI capex this year and next.

As Apple and others upgrade the hardware in these devices for AI, it’s like finding entire computing continents where we didn’t think any existed. It will of course also help with the seemingly impossible Power demands of today’s AI data center infrastructure.

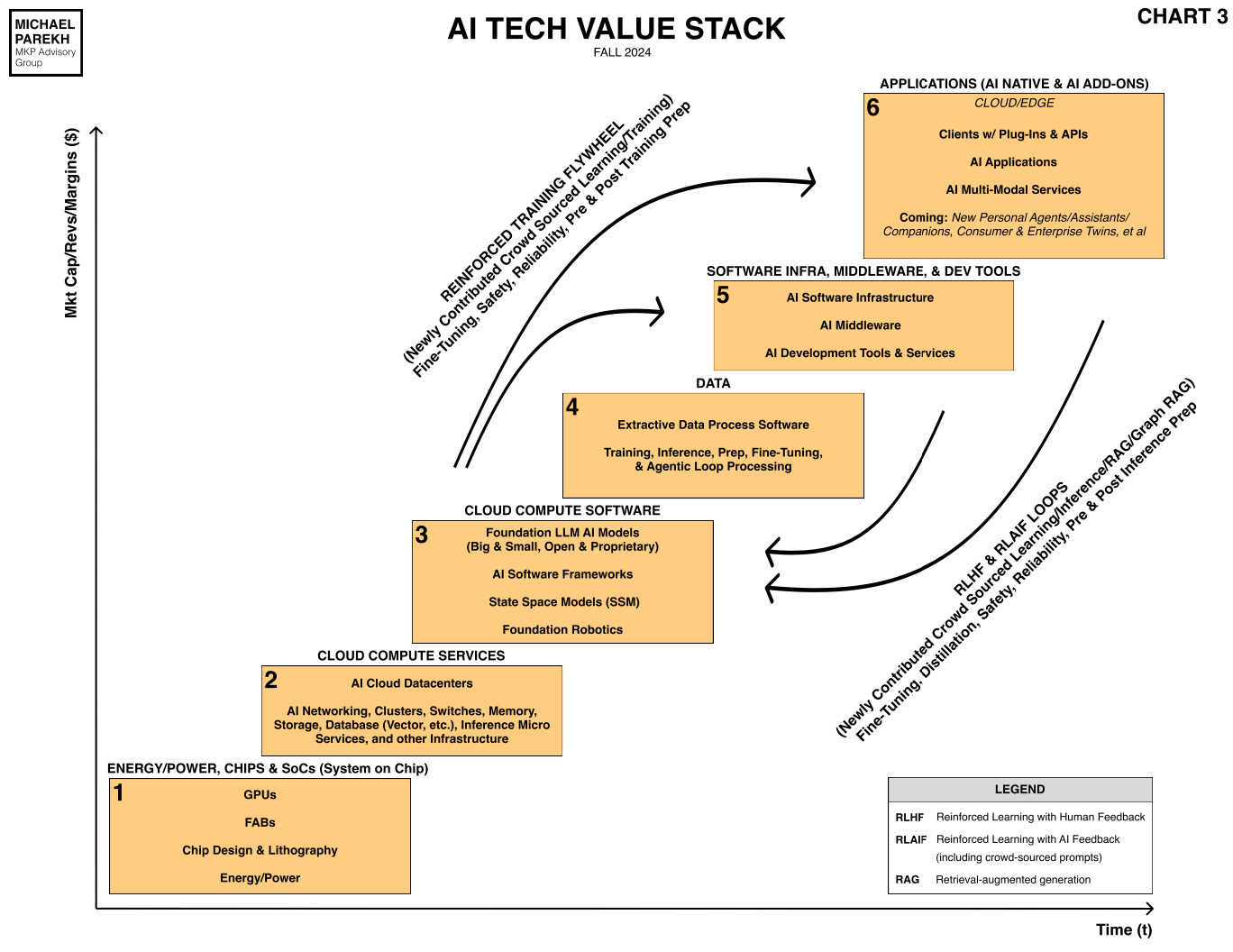

And all that local compute is as important as what the core AI Applications and services themselves turn out to be, in the all-important Box 6 of the AI Tech Stack chart above.

And as I’ve said many times before, those applications and services take their own good time to be invented and made available at scale. That doesn’t always happen in a straight line either.

AI in this AI Tech Wave will find its own Americas while on the quest for AI, or AGI in this case, backed by almost incomprehensible AI capex investments. That is the short ‘Bigger Picture’ for this Sunday. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here.)