AI: Sakana AI's new open source approach to making the best LLM AIs better. RTZ #775

Sakana AI, a cool Japanese AI startup named for ‘fish’, just published and released some AI work that could help the AI world learn how to fish a lot better. And it’s a company whose founders have already earned attention (pun intended, as explained below).

In a post almost two years ago titled “Attention/Transformer Authors go Fishing”, I wrote about a ‘First Principles’ LLM AI startup by two of the eight authors of the seminal Google “Attention is all you need” AI paper that launched the current global LLM AI Tech Wave.

It introduced the world to Transformers and helped a little company called OpenAI run with that ball to give us GPT and ChatGPT (Hint: the ‘T’ in both is for ‘Transformer’). The two founders are Llion Jones and David Ha, part of that original Google team.

Coming back to those two authors and their startup, it was called ‘Sakana AI’ for ‘fish’ in Japanese, and it became yet another AI unicorn this time last year.

The company’s ambition was to take different directions in LLM AI from OpenAI, Anthropic, Google and others. Two years later, they have something new and cool to report, presented here as an important AI paper.

I thought it’d be useful to discuss Sakana’s work, given its broader implications for LLM AIs. It builds on their earlier, ongoing work.

VentureBeat tells us more in “Sakana AI’s TreeQuest: Deploy multi-model teams that outperform individual LLMs by 30%”:

“Japanese AI lab Sakana AI has introduced a new technique that allows multiple large language models (LLMs) to cooperate on a single task, effectively creating a “dream team” of AI agents. The method, called Multi-LLM AB-MCTS, enables models to perform trial-and-error and combine their unique strengths to solve problems that are too complex for any individual model.”

“For enterprises, this approach provides a means to develop more robust and capable AI systems. Instead of being locked into a single provider or model, businesses could dynamically leverage the best aspects of different frontier models, assigning the right AI for the right part of a task to achieve superior results.”

This ‘united we stand’ approach seems to be a fruitful one for a number of AI companies globally, and Sakana AI has found some successful pathways to it.

“The power of collective intelligence”

“Frontier AI models are evolving rapidly. However, each model has its own distinct strengths and weaknesses derived from its unique training data and architecture. One might excel at coding, while another excels at creative writing. Sakana AI’s researchers argue that these differences are not a bug, but a feature.”

“We see these biases and varied aptitudes not as limitations, but as precious resources for creating collective intelligence,” the researchers state in their blog post. They believe that just as humanity’s greatest achievements come from diverse teams, AI systems can also achieve more by working together. “By pooling their intelligence, AI systems can solve problems that are insurmountable for any single model.”

One difference is spending more time at a critical stage in the inference loops, depicted in my AI Tech stack below (see legend):

“Thinking longer at inference time”

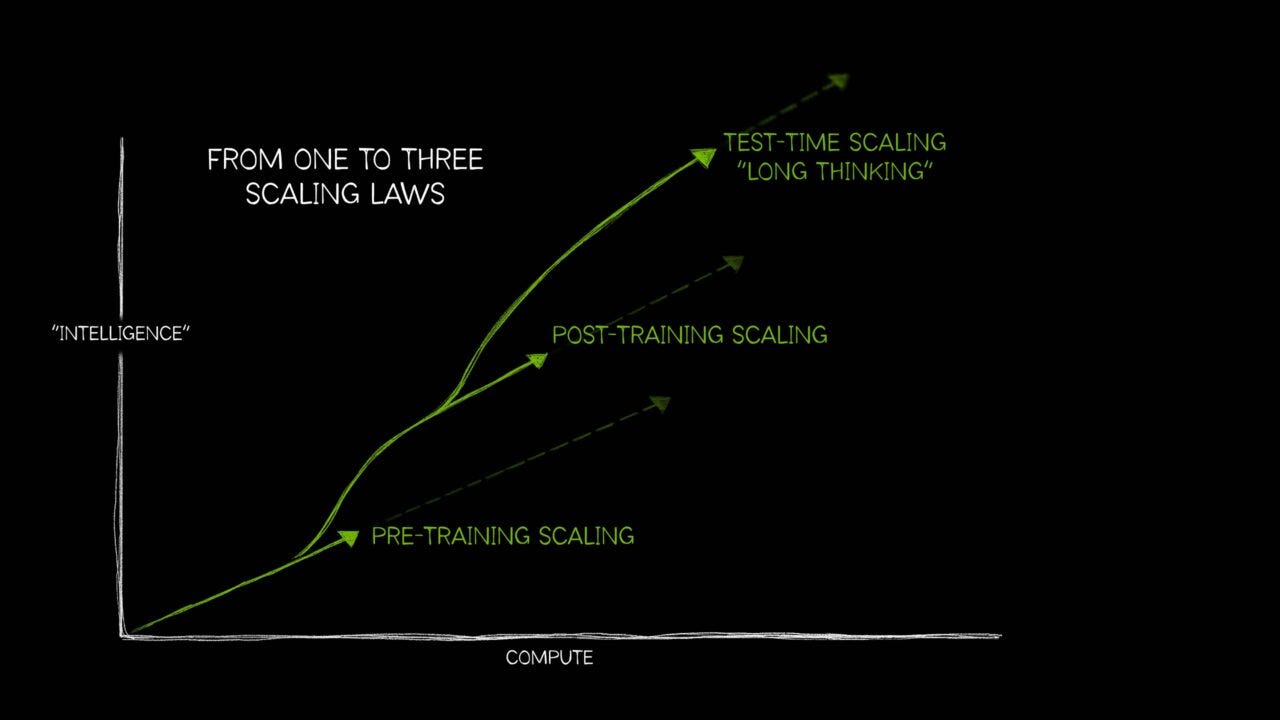

“Sakana AI’s new algorithm is an “inference-time scaling” technique (also referred to as “test-time scaling”), an area of research that has become very popular in the past year. While most of the focus in AI has been on “training-time scaling” (making models bigger and training them on larger datasets), inference-time scaling improves performance by allocating more computational resources after a model is already trained.”

“One common approach involves using reinforcement learning to prompt models to generate longer, more detailed chain-of-thought (CoT) sequences, as seen in popular models such as OpenAI o3 and DeepSeek-R1. Another, simpler method is repeated sampling, where the model is given the same prompt multiple times to generate a variety of potential solutions, similar to a brainstorming session. Sakana AI’s work combines and advances these ideas.”

Here’s how they explain it:

“Our framework offers a smarter, more strategic version of Best-of-N (aka repeated sampling),” Takuya Akiba, research scientist at Sakana AI and co-author of the paper, told VentureBeat. “It complements reasoning techniques like long CoT through RL. By dynamically selecting the search strategy and the appropriate LLM, this approach maximizes performance within a limited number of LLM calls, delivering better results on complex tasks.”
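For readers who want to see the baseline being improved on, here is a minimal sketch of plain Best-of-N (repeated sampling) as described above. It is not Sakana AI's code: `toy_generate` and `toy_score` are hypothetical stand-ins for an LLM call and an answer checker, and the number-guessing task is invented purely for illustration.

```python
import random

def best_of_n(generate, score, prompt, n, rng):
    """Ask for n independent answers to the same prompt and keep the best-scoring one."""
    candidates = [generate(prompt, rng) for _ in range(n)]
    return max(candidates, key=score)

# Hypothetical stand-ins for an LLM call and an answer checker (assumptions).
def toy_generate(prompt, rng):
    return rng.randint(0, 100)   # the "model" guesses a number

def toy_score(answer):
    return -abs(answer - 42)     # closer to the hidden target is better

# Same seed for both runs, so the 50-sample run sees the single-sample answer first.
single = best_of_n(toy_generate, toy_score, "guess the number", 1, random.Random(0))
many = best_of_n(toy_generate, toy_score, "guess the number", 50, random.Random(0))
print(single, many)
```

Every sample here is drawn independently and nothing is ever refined, which is the limitation the "smarter, more strategic" search described in the quote is meant to address.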

A little more technical detail illuminates their approach:

“How adaptive branching search works”

“The core of the new method is an algorithm called Adaptive Branching Monte Carlo Tree Search (AB-MCTS). It enables an LLM to effectively perform trial-and-error by intelligently balancing two different search strategies: “searching deeper” and “searching wider.” Searching deeper involves taking a promising answer and repeatedly refining it, while searching wider means generating completely new solutions from scratch. AB-MCTS combines these approaches, allowing the system to improve a good idea but also to pivot and try something new if it hits a dead end or discovers another promising direction.”

“To accomplish this, the system uses Monte Carlo Tree Search (MCTS), a decision-making algorithm famously used by DeepMind’s AlphaGo. At each step, AB-MCTS uses probability models to decide whether it’s more strategic to refine an existing solution or generate a new one.”
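To make the "deeper vs. wider" decision concrete, here is a heavily simplified sketch of the idea, assuming Thompson sampling over Beta distributions as the "probability model". It is an illustration only, not the AB-MCTS algorithm from the paper and not the TreeQuest API. The toy task (find a number near a hidden `TARGET`), the `Node` fields, and the `propose` helper are all assumptions made for this example.

```python
import random
from dataclasses import dataclass, field
from typing import List, Optional

TARGET = 0.7  # hidden optimum of the toy task (assumption for illustration)

def score(x: float) -> float:
    """Toy score in [0, 1]; higher is better."""
    return 1.0 - abs(x - TARGET)

@dataclass
class Node:
    answer: Optional[float]                 # None at the root (no answer yet)
    children: List["Node"] = field(default_factory=list)
    alpha: float = 1.0                      # Beta pseudo-counts: scores in this subtree
    beta: float = 1.0
    gen_alpha: float = 1.0                  # Beta pseudo-counts: scores of new children
    gen_beta: float = 1.0

def propose(parent: Node, rng: random.Random) -> float:
    """From the root, start from scratch; elsewhere, refine the parent's answer."""
    if parent.answer is None:
        return rng.random()
    return min(1.0, max(0.0, parent.answer + rng.uniform(-0.1, 0.1)))

def step(root: Node, rng: random.Random) -> Node:
    """One search step: walk down the tree, then add exactly one new node."""
    node, path = root, [root]
    while True:
        gen_draw = rng.betavariate(node.gen_alpha, node.gen_beta)
        child_draws = [rng.betavariate(c.alpha, c.beta) for c in node.children]
        if not child_draws or gen_draw >= max(child_draws):
            # "Wider" at this node: generate a new child and record its score.
            child = Node(answer=propose(node, rng))
            s = score(child.answer)
            node.children.append(child)
            node.gen_alpha += s
            node.gen_beta += 1.0 - s
            for n in path + [child]:
                n.alpha += s
                n.beta += 1.0 - s
            return child
        # "Deeper": follow the child whose sampled value looks most promising.
        node = node.children[child_draws.index(max(child_draws))]
        path.append(node)

def count_nodes(node: Node) -> int:
    return sum(1 + count_nodes(c) for c in node.children)

rng = random.Random(0)
root = Node(answer=None)
best = max((step(root, rng) for _ in range(30)), key=lambda n: score(n.answer))
print(count_nodes(root), round(best.answer, 3))
```

Each call to `step` spends one unit of budget (one simulated LLM call), so a budget of 30 produces exactly 30 candidate answers, split between fresh attempts at the root and refinements further down.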

“The researchers took this a step further with Multi-LLM AB-MCTS, which not only decides “what” to do (refine vs. generate) but also “which” LLM should do it. At the start of a task, the system doesn’t know which model is best suited for the problem. It begins by trying a balanced mix of available LLMs and, as it progresses, learns which models are more effective, allocating more of the workload to them over time.”
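The "start balanced, then favor what works" behavior resembles a multi-armed bandit. Below is a small sketch of that idea using Thompson sampling. It is a guess at the flavor of the mechanism, not Sakana AI's implementation; the model names and success rates are made up, and the outcomes are simulated rather than produced by real LLM calls.

```python
import random

def pick_model(stats, rng):
    """Thompson sampling: draw a plausible success rate per model, pick the highest."""
    draws = {name: rng.betavariate(a, b) for name, (a, b) in stats.items()}
    return max(draws, key=draws.get)

def allocate_calls(success_rates, budget, seed=0):
    """Simulate `budget` calls and return how many went to each model."""
    rng = random.Random(seed)
    # Uniform Beta(1, 1) prior: no model is favored at the start.
    stats = {name: (1.0, 1.0) for name in success_rates}
    calls = {name: 0 for name in success_rates}
    for _ in range(budget):
        name = pick_model(stats, rng)
        calls[name] += 1
        solved = rng.random() < success_rates[name]   # simulated outcome
        a, b = stats[name]
        stats[name] = (a + solved, b + (not solved))  # update that model's record
    return calls

# Hypothetical models with invented success rates on some task.
rates = {"model_a": 0.2, "model_b": 0.5, "model_c": 0.8}
calls = allocate_calls(rates, budget=200)
print(calls)
```

Over many calls, the model with the stronger track record tends to be sampled more often, while weaker models still get an occasional try in case the early results were unlucky.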

What’s particularly notable is how they leverage the best of the existing models in this exercise:

“Putting the AI ‘dream team’ to the test”

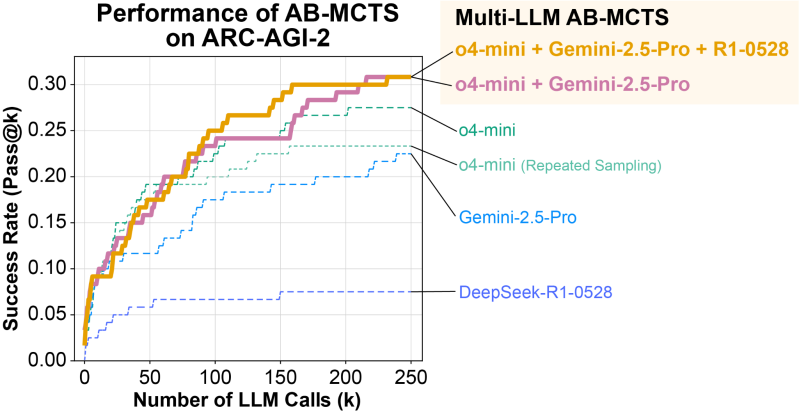

“The researchers tested their Multi-LLM AB-MCTS system on the ARC-AGI-2 benchmark. ARC (Abstraction and Reasoning Corpus) is designed to test a human-like ability to solve novel visual reasoning problems, making it notoriously difficult for AI.”

“The team used a combination of frontier models, including o4-mini, Gemini 2.5 Pro, and DeepSeek-R1.”

“The collective of models was able to find correct solutions for over 30% of the 120 test problems, a score that significantly outperformed any of the models working alone. The system demonstrated the ability to dynamically assign the best model for a given problem. On tasks where a clear path to a solution existed, the algorithm quickly identified the most effective LLM and used it more frequently.”

In particular, the approach lets the models do together what none of them can do alone:

“More impressively, the team observed instances where the models solved problems that were previously impossible for any single one of them. In one case, a solution generated by the o4-mini model was incorrect. However, the system passed this flawed attempt to DeepSeek-R1 and Gemini-2.5 Pro, which were able to analyze the error, correct it, and ultimately produce the right answer.”

Emulating a pattern in nature, the models combine forces to do the task at hand:

“This demonstrates that Multi-LLM AB-MCTS can flexibly combine frontier models to solve previously unsolvable problems, pushing the limits of what is achievable by using LLMs as a collective intelligence,” the researchers write.

“In addition to the individual pros and cons of each model, the tendency to hallucinate can vary significantly among them,” Akiba said. “By creating an ensemble with a model that is less likely to hallucinate, it could be possible to achieve the best of both worlds: powerful logical capabilities and strong groundedness. Since hallucination is a major issue in a business context, this approach could be valuable for its mitigation.”

That last bit on hallucinations is important, given how that is the current ‘Forever Problem’ in LLM AIs.

It’s also useful that their approach potentially makes both AI Reasoning and AI Agents better, on the path to AGI and beyond.

And they’re going open source with their approach.

“From research to real-world applications”

“To help developers and businesses apply this technique, Sakana AI has released the underlying algorithm as an open-source framework called TreeQuest, available under an Apache 2.0 license (usable for commercial purposes). TreeQuest provides a flexible API, allowing users to implement Multi-LLM AB-MCTS for their own tasks with custom scoring and logic.”

“AB-MCTS could also be highly effective for problems that require iterative trial-and-error, such as optimizing performance metrics of existing software,” Akiba said. “For example, it could be used to automatically find ways to improve the response latency of a web service.”

“The release of a practical, open-source tool could pave the way for a new class of more powerful and reliable enterprise AI applications.”

The whole piece is worth reading for those interested in more technical detail on how their approach works.

Their work helps the AI industry globally as it tackles the task of Scaling AI across its many branches in this AI Tech Wave.

It could also provide useful iterations of AI processes for businesses and consumers, leveraging the best models all together.

So in all those ways, Sakana AI’s efforts are teaching the AI world to fish better, not just giving it more AI fish. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)