AI: The enthusiasm for AI 'Smart Glasses'. RTZ #487

The Bigger Picture, September 22, 2024

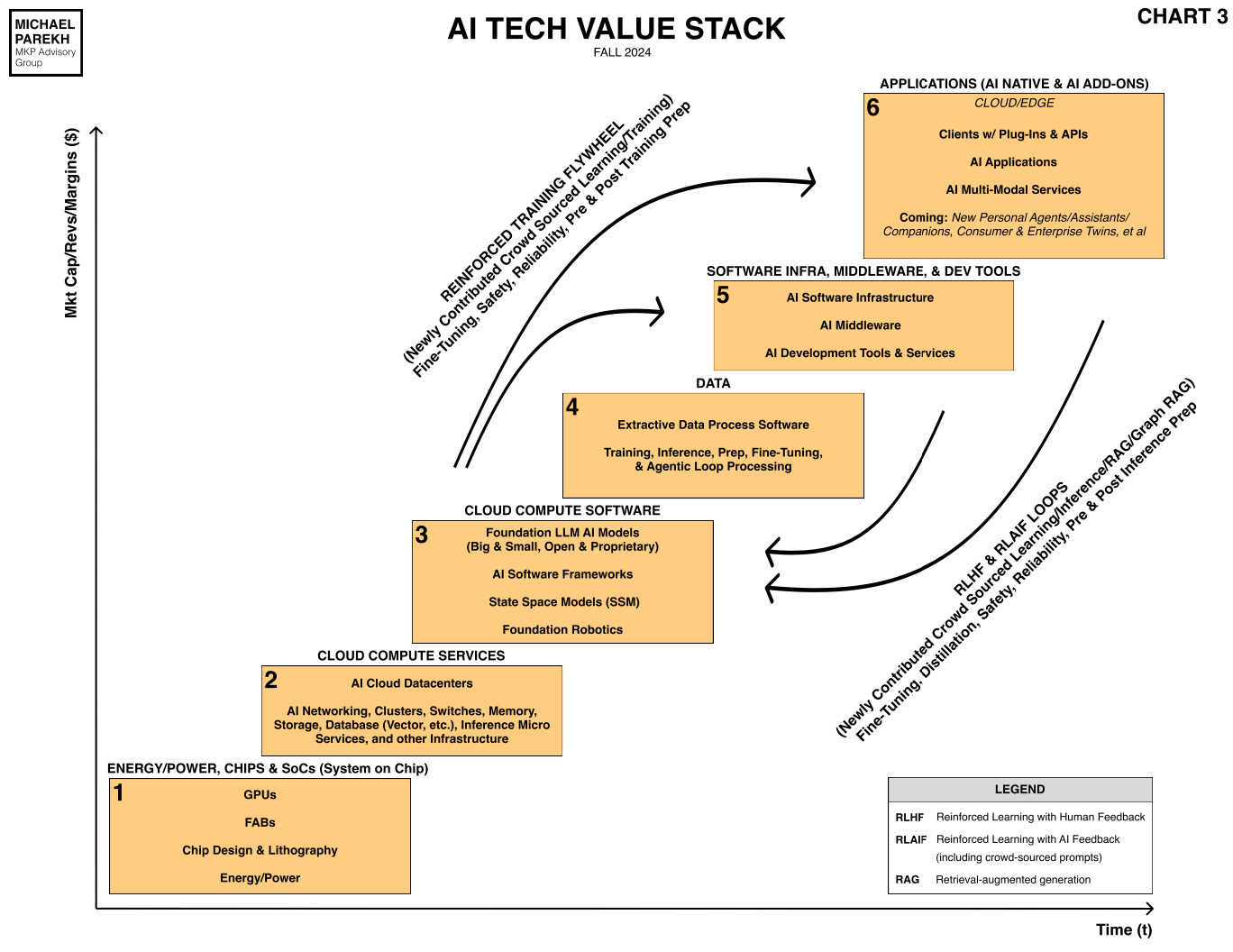

I’ve said before that we’re just in the early rush to build sufficient AI infrastructure in this AI Tech Wave. We need far, far more to provide the massive amounts of ‘variable compute’ as AI users grow from today’s hundreds of millions to billions soon.

This ‘Compute’ will drive a plethora of AI applications and services beyond today’s ‘level 1 Chatbots’, which are barely two years old. We will soon see ‘reasoning’ and ‘agentic’ AI being used by billions of mainstream users via all kinds of forms and factors. Glasses are one major device of focus in ‘AI hardware’, with Meta having an early head start.

AI is going to be a blend of services driven by text, voice, cameras and video via a variety of devices over the next couple of years. Those are the ‘holy grail’ Box 6 in the AI Tech Stack chart below. And Meta, along with Apple, Google and others, is in the race. That’s the AI ‘Bigger Picture’ I’d like to discuss this Sunday.

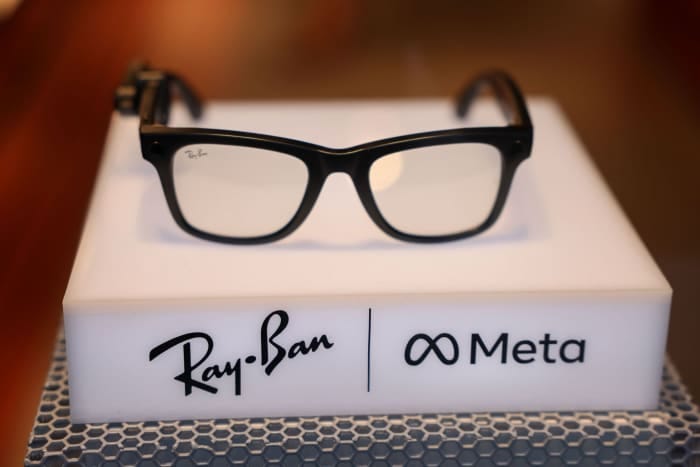

AI ‘smart glasses’ have been a ‘work in progress’ for over a decade, from Google Glass in 2012 to Meta’s partnership with EssilorLuxottica’s Ray-Ban, which delivers Meta AI-driven ‘smart glasses’ today.

And this week we’re likely to see another iteration of the latter at Meta’s annual Connect conference. Meta founder/CEO Mark Zuckerberg is laser-focused on delivering services driven by open-source LLM AI to Meta’s two-plus billion daily users, and on Meta’s renewed commitment to its ‘Ray-Ban smart glasses’ with parent EssilorLuxottica.

In ‘AI: Soon in all shapes and sizes’ this January, I said:

“All of those interactions will likely seem tame compared to what’s coming next. As multimodal AI gets baked into many devices old and new. Google, Apple, Meta, Amazon amongst the big tech companies are already racing to introduce multimodal AI features into Google Nest devices, Apple Siri, and Amazon Echo/Alexa devices, and of course Meta’s ‘Meta AI’ via Ray-ban ‘Smart Glasses’. And every other tech company large and small will have their versions out this year as well.”

Meta’s smart glasses have been a slow-burning ‘success’ of sorts this year, as the Verge’s Victoria Song points out in “Meta has a major opportunity to win the AI hardware race”:

“The Ray-Ban Meta smart glasses exceeded expectations in a year when AI gadgets flopped. But can it keep the momentum going?”

“AI wearables have had a cruddy year.”

“Just a few short months ago, the tech world was convinced AI hardware could be the next big thing. It was a heady vision, bolstered by futuristic demos and sleek hardware. At the center of the buzz were the Humane AI Pin and the Rabbit R1. Both promised a grandiose future. Neither delivered the goods.”

“It’s an old story in the gadget world. Smart glasses and augmented reality headsets went through a similar hype cycle a decade ago. Google Glass infamously promised a future where reality was overlaid with helpful information. In the years since, Magic Leap, Focals By North, Microsoft’s HoloLens, Apple’s Vision Pro, and most recently, the new Snapchat Spectacles have tried to keep the vision alive but to no real commercial success.”

“So, all things considered, it’s a bit ironic that the best shot at a workable AI wearable is a pair of smart glasses — specifically, the Ray-Ban Meta smart glasses.”

“The funny thing about the Meta smart glasses is nobody expected them to be as successful as they are. Partly because the first iteration, the Ray-Ban Stories, categorically flopped. Partly because they weren’t smart glasses offering up new ideas. Bose had already made stylish audio sunglasses and then shuttered the whole operation. Snap Spectacles already tried recording short videos for social, and that clearly wasn’t good enough, either. On paper, there was no compelling reason why the Ray-Ban Meta smart glasses ought to resonate with people.”

“And yet, they have succeeded where other AI wearables and smart glasses haven’t. Notably, beyond even Meta’s own expectations.”

“At $299, they’re expensive but are affordable compared to a $3,500 Vision Pro or a $699 Humane pin. Audio quality is good. Call quality is surprisingly excellent thanks to a well-positioned mic in the nose bridge. Unlike the Stories or Snap’s earlier Spectacles, video and photo quality is good enough to post to Instagram without feeling embarrassed — especially in the era of content creators, where POV-style Instagram Reels and TikToks do numbers.”

And the Verge is more forgiving of the nascent ‘Meta AI’ chatbot capabilities:

“In practice, these [Meta AI] features are a bit wonky and inelegant. Meta AI has yet to write me a good Instagram caption and often it can’t hear me well in loud environments. But unlike the Rabbit R1, it works. Unlike Humane, it doesn’t overheat, and there’s no latency because it uses your phone for processing. Crucially, unlike either of these devices, if the AI shits the bed, it can still do other things very well.”

This enthusiasm is uncharacteristic of the Verge, which is typically more hard-nosed about tech features not yet delivered (aka ‘vaporware’). That is evident from the recent review by its editor-in-chief Nilay Patel, who held the line on Apple’s iPhone 16 not delivering the ‘Apple Intelligence’ features at launch:

“The hard rule of reviews at The Verge is that we always review what’s in the box — the thing you can buy right now. We never review products based on potential or the promise of software updates to come, even if a company is putting up billboards advertising those features, and even if people are playing with those features in developer betas right now. When Apple Intelligence ships to the public, we’ll review it, and we’ll see if it makes the iPhone 16 Pro a different kind of phone.”

But Mark Zuckerberg’s Meta AI driven ‘Smart-glasses’ have dented this steadfast pragmatism on ‘no review before its time’, as evidenced in their aforementioned enthusiasm and ‘dreams’ for the Meta Smart Glasses to come:

“This is good enough. For now.”

“They’ve proved the first part of the equation. But if the latter is going to come true, the AI can’t be okay or serviceable. It has to be genuinely good. It has to make the jump from “Oh, this is kind of convenient when it works” to “I wear smart glasses all day because my life is so much easier with them than without.” Right now, a lot of the Meta glasses’ AI features are neat but essentially party tricks.”

“It’s a tall order, but of everyone out there right now, Meta seems to be the best positioned to succeed. Style and wearability aren’t a problem. It just inked a deal with EssilorLuxottica to extend its smart glasses partnership beyond 2030. Now that it has a general blueprint for the hardware, iterative improvements like better battery and lighter fits are achievable. All that’s left to see is whether Meta can make good on the rest of it.”

“It’ll get the chance to prove it can next week at its Meta Connect event. It’s a prime time. Humane’s daily returns are outpacing sales. Critics accuse Rabbit of being little more than a scam. Experts aren’t convinced Apple’s big AI-inspired “supercycle” with the iPhone 16 will even happen. A win here wouldn’t just solidify Meta’s lead — it’d help keep the dream of AI hardware alive.”

I point out this contrast on reviewing AI ‘before its time’, because this is something we’re going to see not just from the Verge, but the media and early adopters at large. Enthusiasm for what’s around the corner often clashes with what’s being delivered now vs tomorrow. And at what price.

As I’ve said before, technology always takes longer than we think, especially when it comes to blending software and hardware into practical and affordable products and services delivered at global scale by companies large and small.

We already saw the big push with ‘smart glasses’ on the high end with Apple’s Vision Pro. That is a multi-year exercise in product iteration until it finds its ‘product-market fit’.

Apple, smartly, also has a concurrent multi-year investment in other AI-powered wearables like the Apple Watch and AirPods, which are addressing mainstream Health & Fitness opportunities. And there are rumblings of cameras in the next iteration of AirPods. But for now, Apple is the other big tech with a horse in the ‘AI smart glasses’ race.

Another example is Snap, which, driven by founder/CEO Evan Spiegel, has tried its hand at smart glasses for years. It is now on its fifth generation ‘AR Spectacles’, just rolled out for developers.

AI being delivered via wearable and ‘on-prem’ local devices is the next such roller coaster of expectations. The journey will ultimately be rewarding, but bumpy in the early days of this AI Tech Wave. We’ll see next week how Meta continues to iterate on their ‘Meta AI’ driven smart glasses. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)