AI: Weekly Summary. RTZ #311

Welcome to the Saturday weekly AI summary here on ‘AI: Reset to Zero’.

-

Microsoft & OpenAI’s $100 Billion ‘Stargate’ Supercomputer Data Center plans: The Information has a detailed story on a multi-phase, $100 billion-plus Microsoft-OpenAI investment plan for their next generation of AI data centers. Dubbed ‘Stargate’, the plans are to be rolled out through 2030, with scaled-up power infrastructure running OpenAI’s next-generation LLM AI models and services, all powering both OpenAI’s products and Microsoft’s Copilot family of AI products and services. The piece also details active efforts to minimize and/or avoid using Nvidia’s next-gen AI infrastructure, critical to their AI strategy, including their InfiniBand networking switches and cable infrastructure. Overall, the plans add more ‘meat on the bones’ to OpenAI CEO Sam Altman’s trillion-plus dollar ambitions to ramp up AI GPU chip and power infrastructure investments over the next few years.

-

Amazon completes $4 Billion Anthropic Investment: Amazon topped up its earlier investment in LLM AI powerhouse Anthropic, putting its ante ahead of Google’s $2 Billion investment in the company last fall. Anthropic remains the second largest independent LLM AI company after OpenAI, with a host of others in the race (see next item). The WSJ has additional details on the investment and partnership with Anthropic. All of these moves, of course, both compete with and complement Nvidia’s roadmap for 2024 and beyond.

-

Databricks’ Open Source LLM AI rollout: Databricks announced DBRX, their open source LLM AI models, designed to go head to head with competing open source and proprietary LLM AI models from Meta, Mistral, Elon Musk’s Grok, and others. Their announcement highlights DBRX surpassing Meta’s Llama 2, Mistral’s Mixtral, and Elon’s Grok-1 specifically. Wired has a detailed story on how Databricks ramped up DBRX and created ‘The World’s Most Powerful Open Source AI Model’. Meanwhile, Elon Musk announced Grok 1.5, ‘nearing OpenAI GPT-4 performance’, so the ‘eval’ races continue. Until, of course, OpenAI releases GPT-5 soon. Deeper take on Open Source LLM AI momentum here.

-

AI Talent Battles continue at all levels: Multiple pieces this week on the bottom-up and top-down race for AI talent. The bottom-up picture on AI compensation is in this WSJ piece. The Information highlights the race for AI experts at the CEO level of the major tech companies. Finally, the NY Times discusses how China is competing top-down with the US on AI talent. A broader take on the talent and skill race this time vs other eras here.

-

White House AI Executive Order Implementation Stage: The White House announced the execution strategy for its Fall 2023 AI Executive Order via the OMB (Office of Management and Budget). The rollout will require ‘Chief AI Officers’ across government agencies, and sets out a wide array of execution priorities. My earlier dive on the AI Executive Order here.

Other AI Readings for the weekend:

-

OpenAI releases Voice Engine, rapidly building on its multimodal AI initiatives like Sora for video, which is being shown around in Hollywood for commercial opportunities. Competing AI startups like HeyGen and others are racing to productize these types of multimodal AI advances.

-

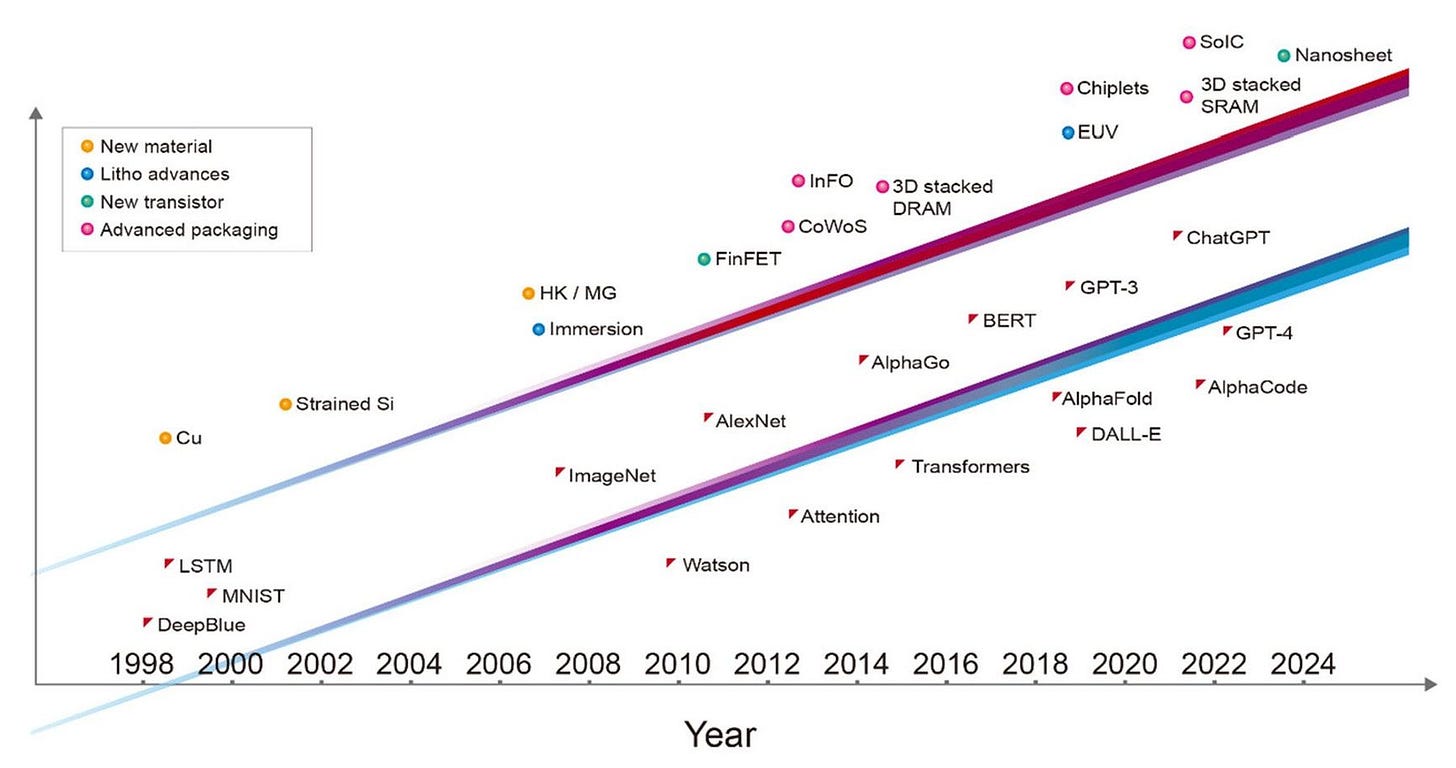

Timely piece in the IEEE Spectrum on the AI Semiconductor roadmap towards a trillion-transistor GPU. That contrasts with the 200-plus billion transistors in Nvidia’s latest Blackwell AI GPUs.

Thanks for joining this Saturday with your beverage of choice.

Up next, the Sunday ‘The Bigger Picture’ tomorrow. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)