AI: Weekly Summary. RTZ #562

-

Amazon AWS announces host of AI products & partners: Amazon AWS had a packed array of AI announcements at its re:Invent conference, ranging from upgraded ‘Trainium’ AI chips and ‘supersized’ AI data centers to new Nova LLM AI models, as well as a host of AI products and services around its enterprise-facing Bedrock and SageMaker services. The video showcase is also worth a watch. The WSJ explains the supercomputer clusters being rolled out with Amazon’s homegrown AI chips, as well as joint offerings via its Anthropic LLM AI partnership. Apple, an important AWS customer, also showed up on stage to outline its AI partnerships with Amazon. More here.

-

OpenAI’s ‘12 Days of AI Shipmas’: OpenAI is staging ‘12 Days of OpenAI Shipmas’, with a series of large and small product initiatives being rolled out daily over the coming days. So far we’ve seen a new $200/month ChatGPT Pro pricing option for ChatGPT and OpenAI’s reasoning models, along with the imminent launch of its text-to-video Sora models. Google announced Veo for businesses ahead of the Sora release. We may get hints of OpenAI’s GPT-5 Orion as well as AI reasoning tools. The OpenAI announcements are also likely to draw responses from other LLM AI companies over the coming weeks. More on OpenAI’s possible AI pipeline here and here.

-

AI Neoclouds rise to build AI Data Centers: Wall Street’s ramp-up to finance trillion-dollar AI capex is fueling a rising crop of AI neocloud companies emerging behind the big Cloud Service Providers like Amazon AWS, Microsoft Azure, Google Cloud, Oracle Cloud and others. Companies like CoreWeave, backed by Nvidia, and a plethora of data center builders around the world are rapidly expanding their capacity to build bigger and bigger AI data centers, with access to gigawatts of adjacent power. Crusoe, Lambda and others are following a similar trail. The beneficiaries, of course, continue to be AI infrastructure providers like Nvidia, TSMC and others. More here.

-

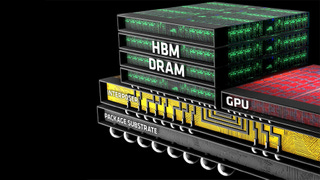

SK Hynix is the Nvidia of ‘high bandwidth memory’ (HBM): AI-scale data center memory, known as HBM, is the other scarce input besides AI GPU chips from Nvidia and others. The leading supplier is SK Hynix of South Korea. The demand ramp extends far beyond training AI-scale LLMs to AI inference, particularly as hundreds of millions of daily LLM AI users grow to billions of users with an unending stream of multimodal prompts and queries, and as AI services get more personalized with reasoning and agent capabilities. While US suppliers like Micron and others are ramping to become big suppliers as well, SK Hynix currently leads the HBM space, much like Nvidia with AI GPUs. More here.

-

AI and Tech companies position for incoming new Administration and Congress: As President Biden’s administration winds down, big changes are ahead for his AI executive orders under the new administration. The changes could run across the spectrum from a ‘rebrand more than a repeal’ to broader and deeper changes in the new White House’s approach to AI, particularly in the context of a more crypto-friendly approach from a regulatory perspective. President-elect Trump has appointed SF VC David Sacks as AI & Crypto Czar. Big tech CEOs are positioning themselves for the changes ahead. The range of issues includes everything from big tech antitrust initiatives to US/China AI/Tech trade and geopolitical issues. Also different this time is the influence of Elon Musk as the new President’s close advisor. More here.

Other AI Readings for the weekend:

-

US Appeals Court upholds US ban of ByteDance’s TikTok, which faces a January 19 sale deadline. Positive, of course, for big tech companies like Meta, Google and others.

-

New Wired Profile on Apple CEO Tim Cook and Apple Intelligence. Broader context here.

Up next, the Sunday ‘The Bigger Picture’ tomorrow. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here.)