AI: Weekly Summary. RTZ #941

-

Google Gemini 3 leverages the new Flash model: Google extended its lead over OpenAI with Gemini 3 Flash, a model optimized for speed that brings its latest frontier model capabilities to a faster tier. It will be offered globally in the Gemini app and made available across Google’s services, with benchmark scores competitive against peers. The rollout leverages Google’s unique TPU AI Infrastructure advantage, which matters because AI Compute remains the key supply constraint for most of its competitors. Despite the tens of billions those competitors are pouring into AI Data Centers (see below), Google has a head start on this front going into 2026. Gemini 3 Flash is also multimodal, so it will deliver Google’s latest voice, image, and video capabilities as well. More here.

-

OpenAI races to catch up with Google et al: OpenAI is heads-down on its ‘Code Red’, improving its GPT models and ChatGPT while continuing to raise more capital going into the New Year. The latest valuation jump seems to take the private company from $500 billion to over $830 billion. It is also managing its organizational priorities as it focuses on both AI Research around its models AND a plethora of AI apps under ‘CEO of Applications’ Fidji Simo. One of the challenges is balancing the demands of its core users against the need to compete in the broader AI Research race for AI Frontier models, Agents and Reasoning against Google, Anthropic and other well-funded competitors. Not to mention rising open source competition from China and beyond. More here.

-

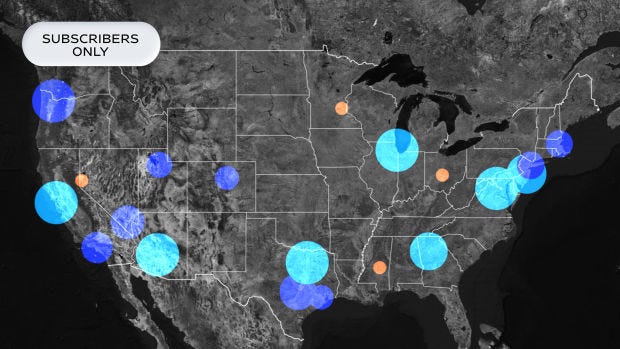

Update on AI ‘Braggawatt’ Data Center Race: The accelerating global multi-Gigawatt AI Data Center Race has been one of the key drivers of AI spending this year, and it shows no signs of slowing down. If anything, the race is expanding into space, as well as intensifying across the fifty states in the US (see below). The key companies remain OpenAI, Google, Microsoft, Meta, xAI/Tesla and Amazon, as well as, of course, Nvidia. A key constraint is Power and its regulatory issues. Another component increasingly in short supply is memory for these data centers, with current providers SK Hynix, Micron and others at capacity. Memory prices are rising as a result, and the effect is spilling over to consumer computers and smartphones, which will likely see higher prices going into next year. More here and here.

-

Regulatory Tussles over AI Data Centers & Power: The race for AI Data Center buildouts is spread across the fifty states in the US, with Texas holding the leading share for now. Texas has over 40% of the builds, with over 440 projects, as tech companies large and small race to build ‘Gigawatt’ AI Data Centers that each field a million-plus AI GPUs, mostly from Nvidia of course. Note that a single Gigawatt AI Data Center is likely to cost over $50 billion this year and take at least 2-3 years to build out. The industry is targeting many multiples of that gigawatt scale, with a collective price tag in the trillions over this decade alone. OpenAI of course has the higher profile here with its financing deals, but others aren’t far behind. Add to that the regulatory tussles across state and federal politics to get these data centers and their infrastructure connected to Power at affordable prices. That regulatory overlay is currently being hashed out between the Executive branch and Congress, with most of the state governors chiming in. More here.

-

A Cautionary Robot story with AI Robots ahead: A multi-decade US Robotics success story, iRobot, maker of the category-defining Roomba, took a cautionary turn. After a sale to Amazon for almost $2 billion fell through, the company filed for bankruptcy, with its key assets likely going to its Chinese creditors. iRobot will continue to operate post bankruptcy. The tale illustrates how hard it is to develop and maintain product-market fit for early AI consumer devices. That is important to note as the current AI enthusiasm spills over into a new global race for AI-driven humanoid and industrial robots, both in the US and China. The AI humanoid robotics wave is further complicated by the need for synthetic data to train the robots, and of course by the need to find truly mainstream applications for these robots as the underlying technologies are developed and improved at scale, on both the hardware and software fronts. It’s also relevant to keep in mind the coming investment boom in AI devices beyond robots. More here.

Other AI Readings for the weekend:

-

AI may be creating more jobs than expected. More here.

-

A16Z updates its take on the Consumer AI Race among key AI Chatbots. More here.

(Additional Note: For more weekend AI listening, we have a new podcast series on AI, from a Gen Z to Boomer perspective. It’s called AI Ramblings, now at 33 weekly episodes and counting. More in the latest, AI Ramblings Episode 33, on AI issues of the day, as well as our latest ‘Reads’ and ‘Obsessions’ of the Week. Co-hosted with my Gen Z nephew Neal Makwana.)

Up next, the Sunday ‘The Bigger Picture’ tomorrow. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here.)