AI: Weekly Summary. RTZ #962

-

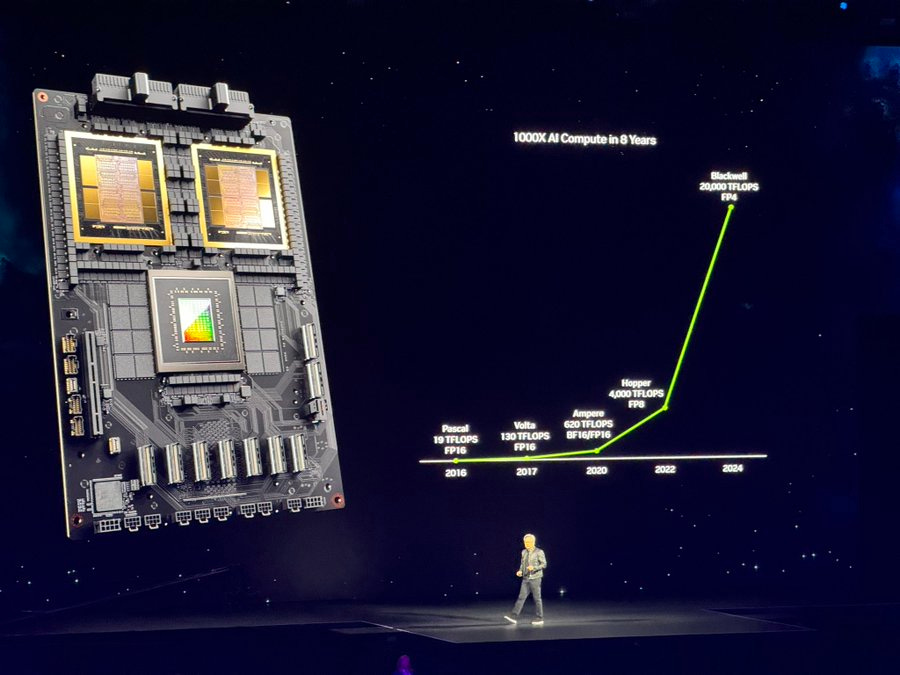

Nvidia at CES 2026, Vera Rubin & Alpamayo: Nvidia founder/CEO Jensen Huang focused his annual CES 2026 keynote on the company’s next generation Vera Rubin AI Computing Infrastructure racks, complete with new sets of supporting chips for the Vera CPUs and Rubin GPUs. A highlight among them is Nvidia’s new BlueField chips, which provide a novel, high efficiency ‘context storage’ technology for the ‘KV cache’ used in AI inference computing. Overall, the new Vera Rubin architecture offers multiples of the training and inference compute of the current Blackwell generation, with commensurate gains in energy efficiency. A separate focus of the keynote was Nvidia’s Alpamayo autonomous vehicle (AV) AI hardware and software platform for self driving, based on its Orin hardware today, with Thor hardware to follow. In particular, Nvidia got good reviews for the demos of its L2++ (level 2) self-driving systems with partner Mercedes’s CLA cars. The key takeaway here is that Nvidia is offering a full AV self driving tech stack to auto makers globally, vs the vertical approaches taken by Tesla and Google’s Waymo. This includes Nvidia customers in China like BYD. Nvidia also showed off its full stack AI solutions for the humanoid robot market. More here.

-

AI Memory Chip Sticker Shock at CES 2026: A big takeaway from CES 2026 is the accelerating shortage of memory chips across local PCs, smartphones, and gadgets of all types, and the impact of the resulting price increases on mainstream customer affordability. As discussed earlier, this has been a rising concern over recent months, and the evidence is discernible across product categories at CES. The key driver remains the dramatic acceleration in AI Infrastructure investments in Data and Power, over the last couple of years in particular. Other drivers are the dramatic moves by key memory providers like SK Hynix, Micron and others to shift their capacity to the AI Data Center market, away from the consumer side of the market, as well as the trade and tariff issues between the US and China. This is likely to be a reality beyond 2026 for now. And it likely impacts the adoption of AI products and services, as the main hyperscalers build up the supply of AI Intelligence tokens. More here and here.

-

Nvidia’s 3 year AI run over 30 years: Nvidia has indeed come a long way financially over three decades, from its origins as a gaming GPU company to the pivotal provider of ‘Accelerated AI Compute’ infrastructure globally over the last three years. The company’s AI hardware/software offerings have been a bookend match to ChatGPT, which marked its three year anniversary at OpenAI this past November. Looking at the mix of the company’s technical and financial momentum in 2026 vs 2025 in particular highlights the ‘early days’ nature of these markets for AI intelligence token production worldwide. Nvidia under founder/CEO Jensen Huang remains laser focused on meticulous execution against those opportunities, while also balancing the complex geopolitical trade and tariff realities between the US and China. Nvidia is also tightly executing on its ‘Frenemies’ business relationships with core customers, as those customers build proprietary AI Infrastructure options away from Nvidia’s AI products and services. More here.

-

Google Gemini Distribution w/ Samsung & Apple: Samsung announced its plans to double the footprint of its global AI smartphone franchise to almost 800 million units across its Galaxy smartphone line, including bi-folds and tri-folds, leveraging its relationship with Google and its Gemini AI offerings. This is noteworthy as Google also expands its Gemini distribution with Apple, as that company rolls out its AI revamped Siri Apple Intelligence efforts later this year. Between the Samsung and Apple platforms, Google has core external distribution mechanisms for its Gemini AI offerings. This is of course supplemented by Google’s core Search distribution to billions globally, and by its direct offerings like Gmail, Google Drive, Docs, and of course YouTube. All of these distribution mechanisms enable Google to expand Gemini distribution to 2 billion plus mainstream users over 2026, vs OpenAI’s 900 million plus weekly users of ChatGPT 5.2 and other AI products and services. More here.

-

Anthropic’s Claude Code moment: Anthropic continues to enjoy broad success with its Claude Code AI coding tools among ‘vibe coding’ developers and businesses worldwide, via its core investor and distribution partners Amazon, Google, Microsoft, Nvidia and others. This is despite concentrated competition from AI coding applications by OpenAI, Microsoft, Cursor and many others. Anthropic’s momentum in Claude Code highlights the company’s innovation around deeper and wider utility of its AI coding applications and tools, as well as their efficiency vs competing offerings. Its tight integration with Anthropic’s Claude Opus 4.5 models is a key driver, as is Claude Code’s focus on simplifying and automating coding across disparate platforms. An added driver has been Anthropic’s focus on open sourced interoperability frameworks like MCP (Model Context Protocol), which accelerate developer efforts with AI Agents and Reasoning in their AI applications across industry domains. More here.

Other AI Readings for the weekend:

-

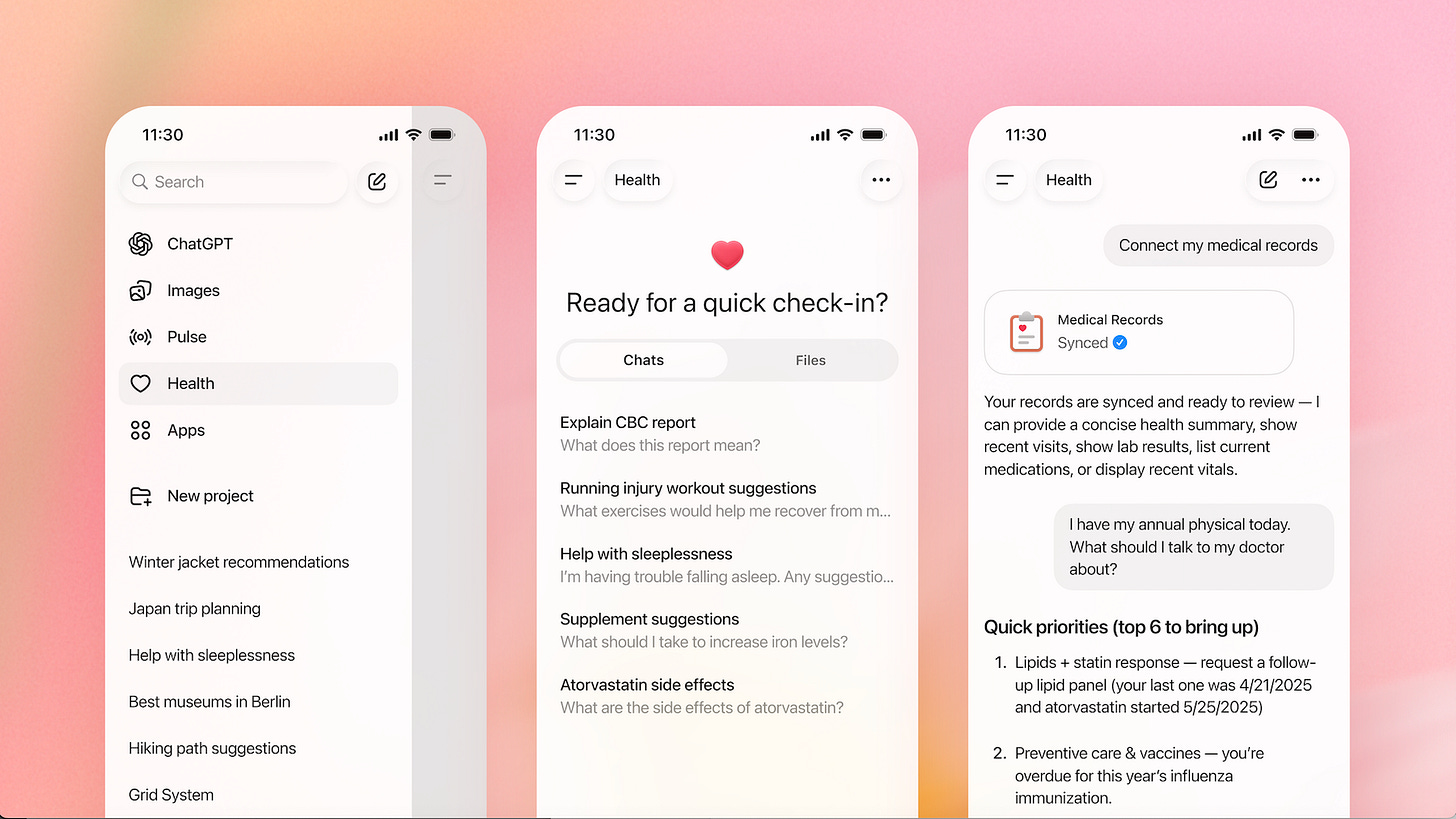

OpenAI’s big ChatGPT healthcare audience and growing focus. More here.

-

Elon Musk’s Grok/xAI under fire for explicit AI images. More here and here.

(Additional Note: For more weekend AI listening, I have a new podcast series on AI, from a Gen Z to Boomer perspective. It’s called AI Ramblings. Now 36 weekly Episodes and counting. More with the latest AI Ramblings Episode 36 on our ‘Out of the Box’ Predictions for 2026. Co-hosted with my Gen Z nephew Neal Makwna.)

Up next, the Sunday ‘The Bigger Picture’ tomorrow. Stay tuned.

(NOTE: The discussions here are for informational purposes only, and not meant as investment advice at any time. Thanks for joining us here.)