AI: Widening the apertures of current LLM AI Research. RTZ #932

I’ve been discussing for a while the growing schism in this AI Tech Wave between the AI Researchers that believe AI Scaling can get us to AGI, in any form. And the increasing community on the other side that say a different path may be needed. These including AI heavyweights like Yann LeCun, Andrej Karpathy and many others as I’ve outlined earlier.

This debate is seeing growing discussion and its worth taking a closer look as we start to focus on 2026 and AI.

The Information frames the debate well in “At AI’s Biggest Event, Some Researchers Said the Field Needs an Overhaul”:

“A small but growing number of artificial intelligence developers at OpenAI, Google and other companies say they’re skeptical that today’s technical approaches to AI will achieve major breakthroughs in biology, medicine and other fields while also managing to avoid making silly mistakes.”

“That was the buzz last week among numerous researchers at the Neural Information Processing Systems conference, a key meetup for people in the field. Some of them believe that developers must create AI that continues gaining new capabilities after it’s developed, mirroring the way humans learn. This type of continual learning hasn’t yet been discovered in the AI field.”

““I will guarantee that the way we’re training models today will not last,” said David Luan, who heads an AI research unit at Amazon.

“Other researchers who attended NeurIPS in San Diego last week also voiced that sentiment, arguing that humanlike AI, often referred to as artificial general intelligence, may require a new development technique.”

“Some of them echoed Ilya Sutskever, a co-founder and former chief scientist of OpenAI, who said last month that some of today’s most advanced AI training methods aren’t helping the models generalize, or handle a wide variety of tasks, including topics they haven’t encountered before.”

I’ve discussed Ilya Sutskever’s views on AI Data directions in particular in recent months. He’s been arguing recently that we’re going back to an age of AI Research from AI Scaling. A view that’s been music to the ears of AI Bears.

But then there are the believers in AI Scaling, like Anthropic founder Dario Amodei:

“Sutskever’s views contrast with recent pronouncements from other AI leaders. Anthropic CEO Dario Amodei said at the New York Times DealBook Summit last week that scaling existing training techniques will achieve AGI. He previously said such a system could be developed as soon as next year. OpenAI CEO Sam Altman believes that a little over two years from now, AI would be able to conduct research to improve itself—a kind of holy grail for the industry.”

“But if the skeptics of current methods, like Luan and Sutskever, are right, that could cast doubt on the billions of dollars developers including OpenAI and Anthropic will spend next year on popular techniques to improve models, such as reinforcement learning, including paying firms such as Scale AI, Surge AI and Turing that help with such work. (Tom Channick, a spokesperson for Scale, disagreed, saying AI that uses continual learning will still need to learn from human-generated data as well as the kind of reinforcement learning products Scale offers.)”

“That said, AI developers seem poised to generate plenty of revenue even without new breakthroughs, as current forms of AI are well suited for helping people with tasks such as writing, creating designs, shopping for products and analyzing data. OpenAI recently projected its revenue will more than triple to around $13 billion this year, while Anthropic projected its revenue will rise more than 10 times to around $4 billion from sales of chatbots and AI models to app developers. These companies had little to no revenue three years ago.”

That’s not to say that current AI approaches are limited in their financial opportunities/ Far beupmd AI Reasoning and Agents:

“Other startups developing AI apps, such as coding assistant Cursor, are on track to collectively generate more than $3 billion in sales over the next year.”

“The technology’s limitations, however, have slowed corporate customers’ purchases of newer AI products known as agents. Models continue to make mistakes on simple questions, and AI agents often fall short without substantial legwork from AI providers to ensure they perform correctly.”

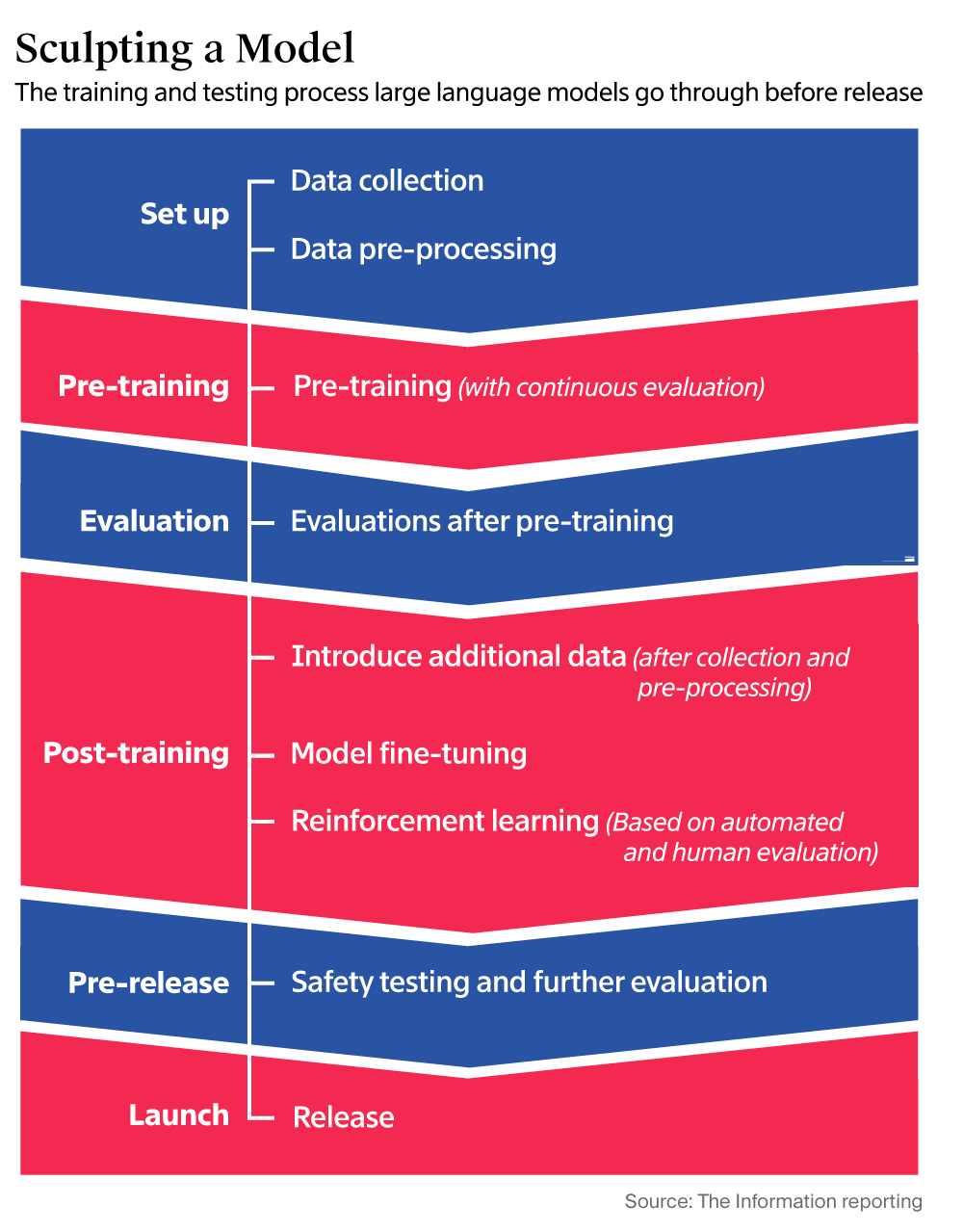

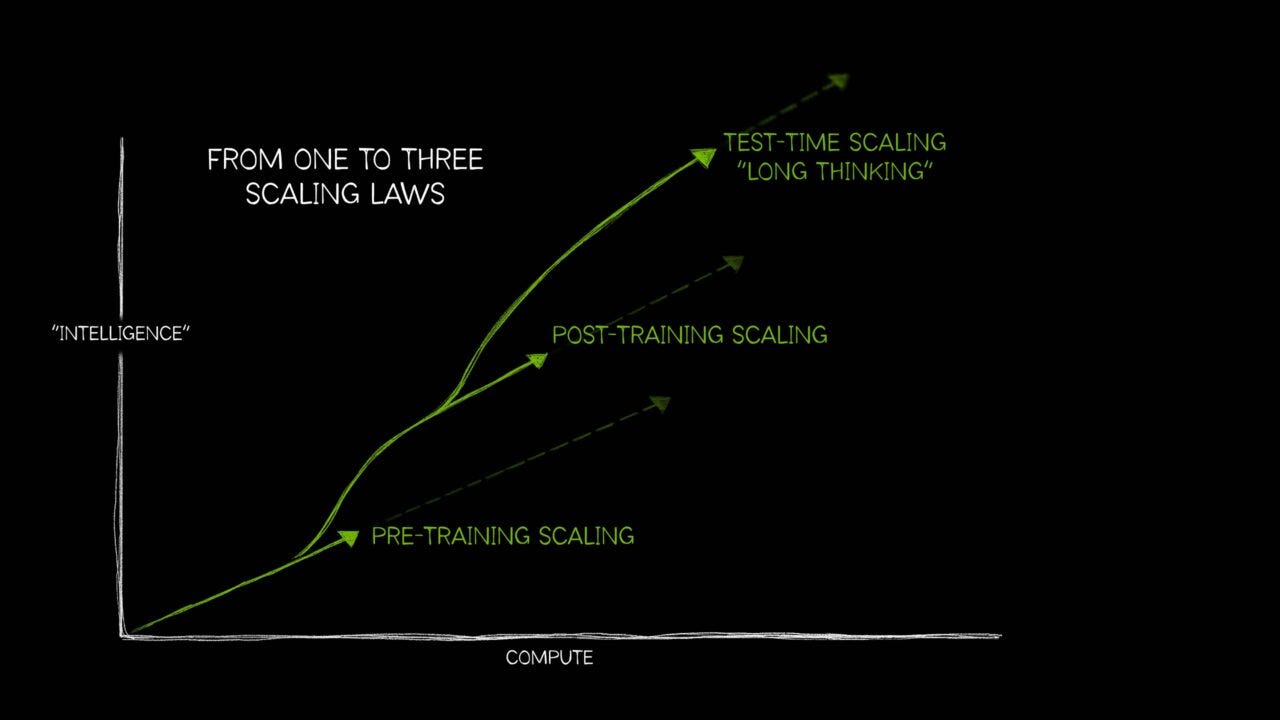

“Until recently, a majority of improvements to AI came from increasing the amount of data, from scientific to literary texts, as well as the computational resources AI models process to make connections between concepts, a practice known as pretraining. According to the scaling law, feeding more data and server power to the models should produce better AI.”

And the LLM AI companies are broadening their AI focus across industries:

“In the last year, developers like OpenAI also added intelligence to their AI by increasing the amount of computing power that AI models used when they answered questions, known as test-time compute.”

“OpenAI and its rivals also pay experts in fields like medicine, biology and software engineering to write examples of answers to tough questions in their domain, which improve models’ performance in those areas, a process known as reinforcement learning.”

There are specific areas of AI Scaling Research that could be promising to improve:

“A major problem researchers at the conference discussed is how today’s advanced AI isn’t able to update its knowledge outside the aforementioned training processes.”

“A number of marquee research papers presented at NeurIPS addressed this topic. One from researchers from the Massachusetts Institute of Technology and OpenAI introduced a new technique, self-adapting language models, in which a large language model—a common type of AI that powers answers for ChatGPT—uses information it encounters in the real world to either gain new knowledge or improve its performance on new tasks.”

“For instance, if a ChatGPT customer asks the chatbot to analyze a medical journal article it hasn’t seen before, the model might rewrite the article as a series of questions and answers, using it to train itself. The next time someone asks about that topic, it’ll be able to give an answer with the new information in mind. Some researchers say this kind of continual self-updating is essential for AI that can come up with scientific breakthroughs, as it would make the AI act more like human scientists who apply new information to old theories.”

A notable discussion here of late is a discussion with AI guru Richard Sutton:

“In a much-discussed keynote talk at the conference, University of Alberta Professor Richard Sutton, commonly referred to as the father of reinforcement learning, similarly said models should be able to learn from experience and researchers shouldn’t be trying to improve models’ knowledge in various fields by training them on lots of specialized data created by human experts because such approaches don’t scale, meaning they don’t lead to long-lasting gains.”

Richard Sutton, right, with Dwarkesh Patel. Screenshot via Dwarkesh.com

“The AI’s progress is “eventually held up” when the human experts reach the limits of their knowledge, he said. Instead, he argues, researchers should focus on inventing new AI that can learn from new information after it starts handling real-world tasks. (Sutton is also a research scientist at Keen Technologies, a startup aiming to develop AGI.)”

“Sutton’s argument runs counter to the way OpenAI and Anthropic have lately used reinforcement learning techniques to add more smarts to their AI.”

And as highlighted by OpenAI’s recent internal ‘Code Red’, AI Research is getting a closer, deeper look again:

“Researchers also discussed the AI race among large developers, as Google’s technology has pulled ahead of rivals by some measures, and OpenAI’s Altman has told the company to prepare for “rough vibes” and “temporary economic headwinds” as a result of Google’s resurgence.”

“During a question-and-answer session with members of Google’s AI team, a number of attendees asked how the team managed to improve its pretraining process, something OpenAI struggled with for much of this year.”

“Vahab Mirrokni, a Google Research vice president, said the company improved the mixture of data it uses for pretraining. He said it also has figured out how to better manage the thousands of specialized Google-designed chips—known as tensor processing units—that it uses to develop its flagship AI model. The result is fewer hardware failures interrupting Google’s model development process. (Read more about TPUs and how they compare to Nvidia’s AI chips.)”

“More recently, OpenAI leaders said that they’ve been able to similarly improve their pretraining process, leading to the creation of a new model, code-named Garlic, that they believe will be able to challenge Google’s in the coming months.”

The full piece is worth a closer read for more nuance and detail.

But the broader debate around LLM AI companies and their current approaches is something to keep a close eye going into next year. This AI Tech Wave is likely to see many more new promising areas of AI improvements and directions. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)