AI: AI Hallucinations the 'Forever Problem'. RTZ #741

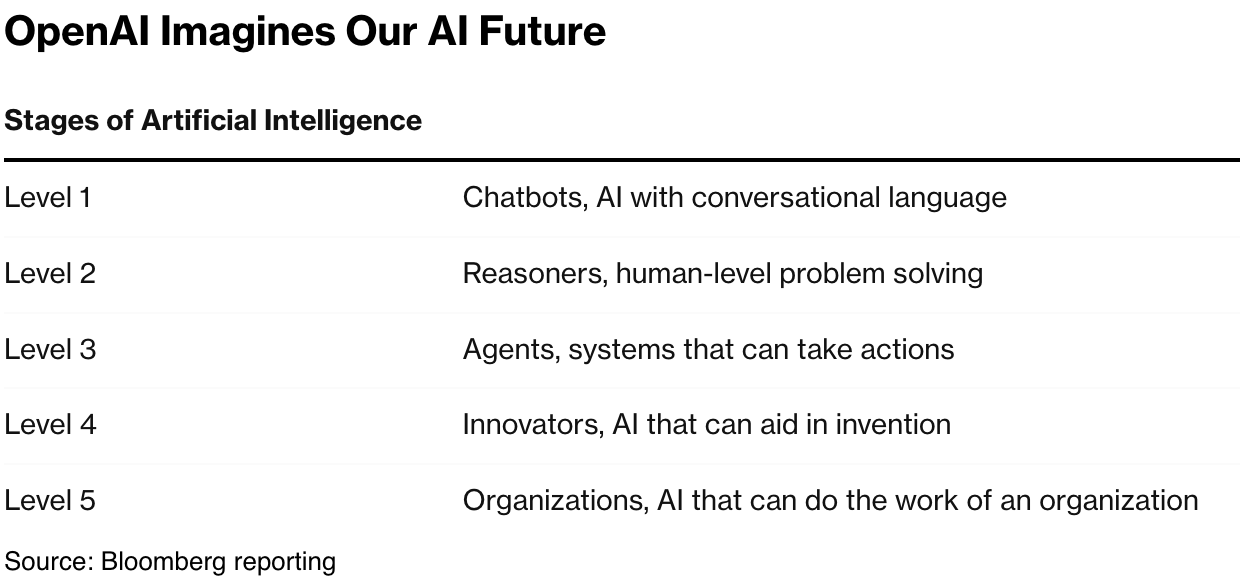

LLM AIs and chatbots may be the best thing since sliced bread, but AI hallucinations remain a perennial challenge for all the AI vendors. While every LLM AI company has its own ways to try to reduce hallucinations, they remain a reality in this AI Tech Wave, for both businesses and consumers, with no easy fixes in sight despite billions expended to swat them away on the way to AGI.

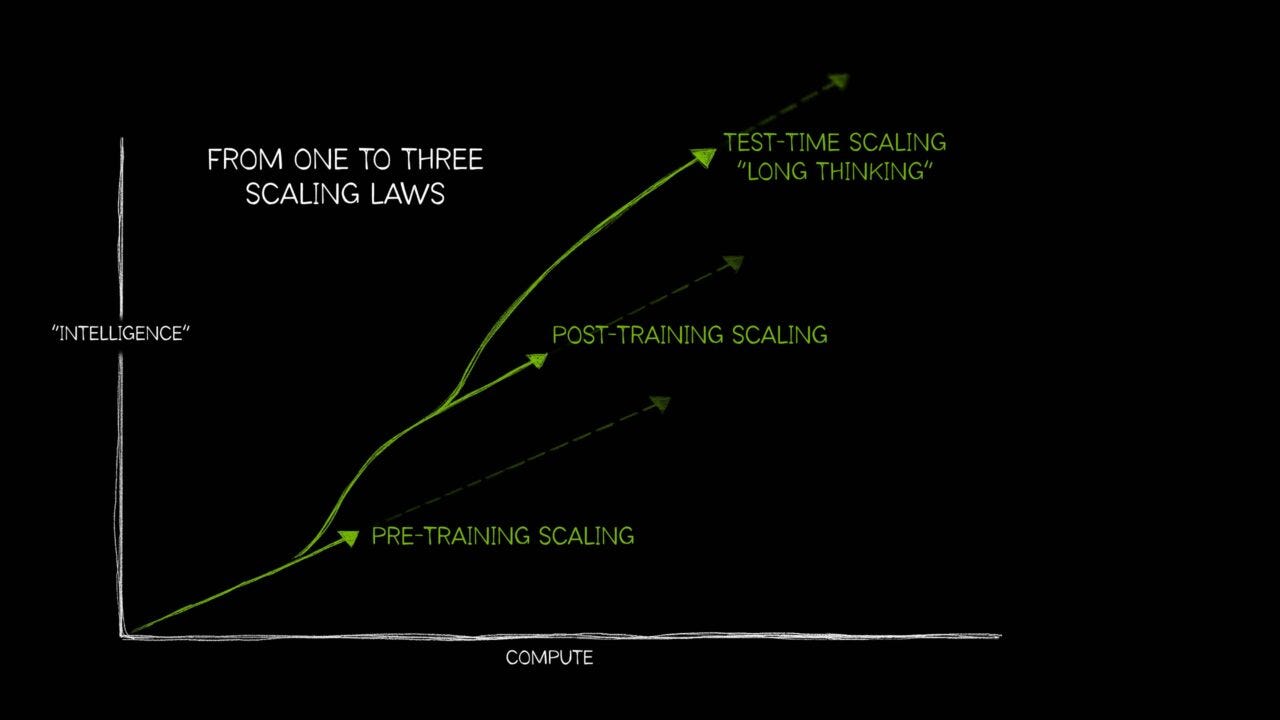

And as the underlying AI innovations around scaling Inference expand with techniques like ‘test time compute’, distillation, ‘chain of thought’ AI Reasoning and Agents, the underlying issue of hallucinations grows in complexity, especially as the AI technologies trifurcate in their scaling, as discussed previously.

Axios discusses this in “Why AI is still making things up”:

“AI makers could do more to limit chatbots’ penchant for “hallucinating,” or making stuff up — but they’re prioritizing speed and scale instead.”

“Why it matters: High-profile AI-induced gaffes keep embarrassing users, and the technology’s unreliability continues to cloud its adoption.”

“The big picture: Hallucinations aren’t quirks — they’re a foundational feature of generative AI that some researchers say will never be fully fixed.”

For many AI users in the ‘right brain/left brain’ creative fields, hallucinations are as much a feature as they are a bug for others.

“AI models predict the next word based on patterns in their training data and the prompts a user provides. They’re built to try to satisfy users, and if they don’t “know” the answer they guess.”
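
To make the 'guessing' point concrete, here is a toy sketch of next-word prediction. It is a simple bigram counter, not how any production LLM actually works, and the names `train_bigrams`, `predict_next` and the `allow_abstain` switch are made up for illustration. The idea it shows: when the model has never seen the context, it still returns a word unless it is explicitly allowed to say it doesn't know.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count which word follows which in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows, Counter(words)

def predict_next(follows, overall, prev_word, allow_abstain=False):
    """Return the most likely next word; guess if the context was never seen."""
    seen = follows.get(prev_word.lower())
    if seen:
        return seen.most_common(1)[0][0]
    if allow_abstain:
        return "I don't know"
    # No evidence for this context: fall back to the most frequent word overall.
    return overall.most_common(1)[0][0]

# Hypothetical one-line "training data" for illustration only.
corpus = "the cat sat on the mat and the cat slept"
follows, overall = train_bigrams(corpus)

print(predict_next(follows, overall, "the"))                      # seen context
print(predict_next(follows, overall, "dog"))                      # unseen: a guess
print(predict_next(follows, overall, "dog", allow_abstain=True))  # unseen: abstains
```

The second call is the hallucination in miniature: a confident-looking answer with nothing behind it.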

“Chatbots’ fabrications were a problem when OpenAI CEO Sam Altman introduced ChatGPT in 2022, and the industry continues to remind users that they can’t trust every fact a chatbot asserts.”

But they are embarrassing as AI products get used in many business applications:

“Every week brings painful new evidence that users are not listening to these warnings.”

“Last week it was a report from Robert F. Kennedy Jr.’s Health and Human Services Department citing studies that don’t exist. Experts found evidence suggesting OpenAI’s tools were involved.”

“A week earlier, the Chicago Sun-Times published a print supplement with a summer reading list full of real authors, but hallucinated book titles.”

“AI legal expert Damien Charlotin tracks legal decisions in which lawyers have used evidence that featured AI hallucinations. His database details more than 30 instances in May 2025. Legal observers fear the total number is likely much higher.”

Embarrassing, but still something the top AI companies can barrel through for now:

“Yes, but: AI makers are locked in fierce competition to top benchmarks, capture users and wow the media. They’d love to tamp down hallucinations, but not at the expense of speed.”

“Chatbots could note how confident the language model is in the accuracy of the response, but they have no real incentive for that, Tim Sanders, executive fellow at Harvard Business School and VP at software marketplace G2, told Axios. “That’s the dirty little secret. Accuracy costs money. Being helpful drives adoption.”

“Between the lines: AI companies are making efforts to reduce hallucinations, mainly by trying to fill in gaps in training data.”

“Retrieval augmentation generation (RAG) is one process for grounding answers in contextually relevant documents or data.”

“RAG connects the model to trusted data so it can retrieve relevant information before generating a response, producing more accurate answers.”
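
A minimal sketch of the retrieve-then-generate idea described above, under loud assumptions: the two `DOCS` entries are invented, retrieval is crude word overlap rather than the embedding search real systems typically use, and no actual model is called. The `retrieve` and `build_prompt` helpers are hypothetical names, and the code only builds the grounded prompt a model would receive.

```python
import re

# Hypothetical "trusted data" for illustration only.
DOCS = {
    "refund_policy": "A refund is available within 30 days of purchase with a receipt.",
    "shipping": "Standard shipping takes five business days.",
}

def tokenize(text):
    """Lowercase the text and return its set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, docs, k=1):
    """Rank documents by word overlap with the question; drop zero-overlap ones."""
    q = tokenize(question)
    ranked = sorted(docs.items(),
                    key=lambda item: len(q & tokenize(item[1])),
                    reverse=True)
    return [(name, text) for name, text in ranked[:k] if q & tokenize(text)]

def build_prompt(question, docs):
    """Build a prompt grounded in retrieved sources, or None if nothing matches."""
    hits = retrieve(question, docs)
    if not hits:
        return None  # nothing trusted to ground on; better to decline than guess
    context = "\n".join(f"[{name}] {text}" for name, text in hits)
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say \"I don't know\".\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )

print(build_prompt("How many days do I have to request a refund?", DOCS))
print(build_prompt("Who won the chess tournament?", DOCS))
```

Grounding narrows what the model can make up, but it does not guarantee the model will stick to the sources, which is part of why this remains a partial fix.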

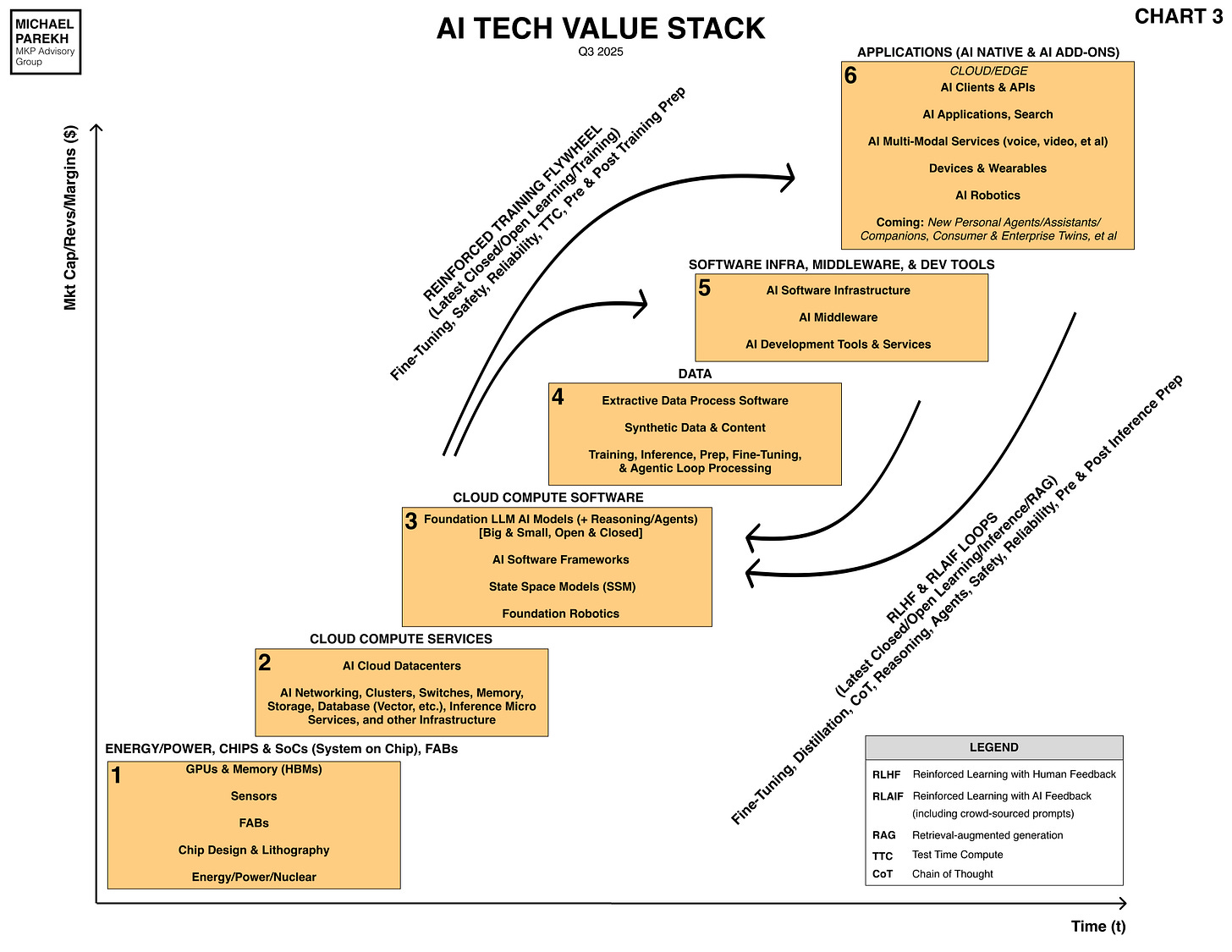

This is the stuff in the reinforcement learning loops in the AI Tech Stack below:

The best cloud providers providing LLM AI services still can’t get rid of the 20-25% hallucination rates, even with the latest models:

“AWS offers Amazon Bedrock, a cloud service that allows customers to use various AI providers and responsible AI capabilities (including reducing hallucinations) to build generative AI applications.”

“AWS says its Bedrock Guardrails can filter over 75% of hallucinated responses.”

“Researchers from Google’s DeepMind, along with Stanford University and the University of Illinois at Urbana-Champaign, are working on a Search-Augmented Factuality Evaluator (SAFE), which uses AI to fact-check AI.”

“Anthropic offers a guide to help developers limit hallucinations — including allowing the model to answer, “I don’t know.”

“OpenAI’s developer guide includes a section on how much accuracy is “good enough” for production, from both business and technical perspectives.”

“Researchers inside AI companies have raised alarms about hallucinations, but those warnings aren’t always front of mind in organizations hell-bent on a quest for “superintelligence.””

That is especially true as the venture-driven AI arms race accelerates, and as investors and users continue to roll the dice:

“Raising money for building the next big model means companies have to keep making bigger promises about chatbots replacing search engines and AI agents replacing workers. Focusing on the technology’s unreliability only undercuts those efforts.”

“The other side: Some AI researchers insist that the hallucination problem is overblown or at least misunderstood, and that it shouldn’t discourage speedy adoption of AI to boost productivity.”

“We should be using [genAI] twice as much as we’re using it right now,” Sanders told Axios.”

“Sanders disputes a recent New York Times article suggesting that as AI models get smarter, their hallucination rates get worse.”

“OpenAI’s o3 and similar reasoning models are designed to solve more complex problems than regular chatbots. “It reasons. It iterates. It guesses over and over until it gets a satisfactory answer based on the prompt or goal,” Sanders told Axios. “It’s going to hallucinate more because, frankly, it’s going to take more swings at the plate.”
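
The 'more swings at the plate' arithmetic can be sketched in a few lines. This is a back-of-the-envelope illustration, not a measurement: the 2% per-step error rate is a made-up number, and treating each reasoning step as independent is a simplifying assumption. The function name `chance_of_any_error` is hypothetical.

```python
def chance_of_any_error(per_step_error, steps):
    """Probability that at least one of `steps` independent steps goes wrong."""
    return 1 - (1 - per_step_error) ** steps

# Hypothetical 2% chance of a fabrication at each step, for illustration only.
for steps in (1, 5, 20):
    print(steps, "steps:", round(chance_of_any_error(0.02, steps), 3))
```

Under these assumptions, even a small per-step error rate compounds as a model iterates, which is the intuition behind the quote rather than proof of it.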

“Hallucinations would be less of a concern, Sanders says, if users understood that genAI is designed to make predictions, not verify facts. He urges users to take a “trust, but verify” approach.”

“The bottom line: Hallucination-free AI may be a forever problem, but for now users can choose the best model for the job and keep a human somewhere in the loop.”

For now, it’s important for users of all types to be aware that these models and products, at this stage of the AI Tech Wave, are fallible indeed, and that this may be a ‘forever problem’.

But they’re useful nevertheless, so it’s best not to throw out the AI baby with the hallucination-prone bathwater. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here.)