AI: Amazon AWS's API Services Scaling Issues. RTZ #696

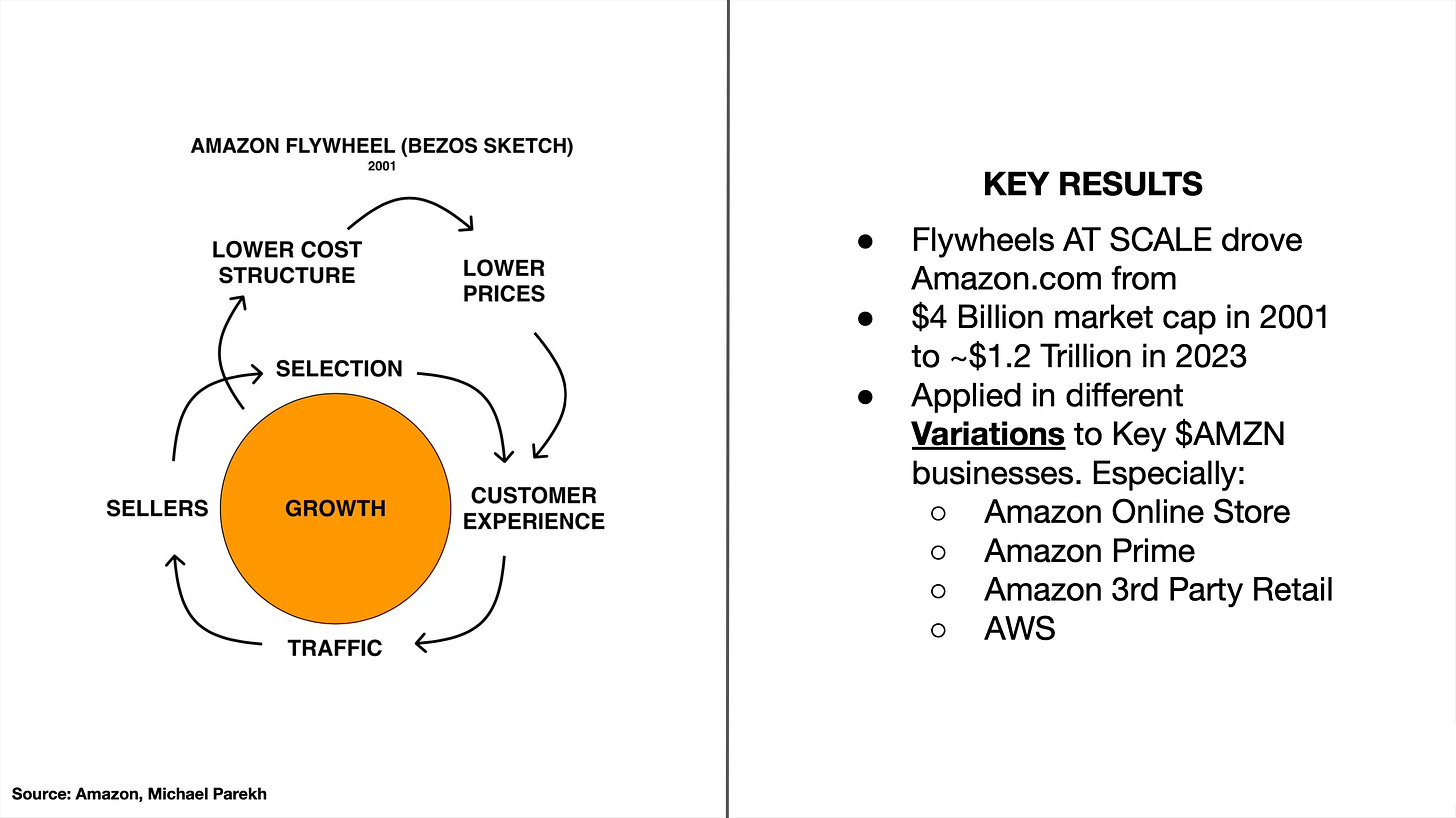

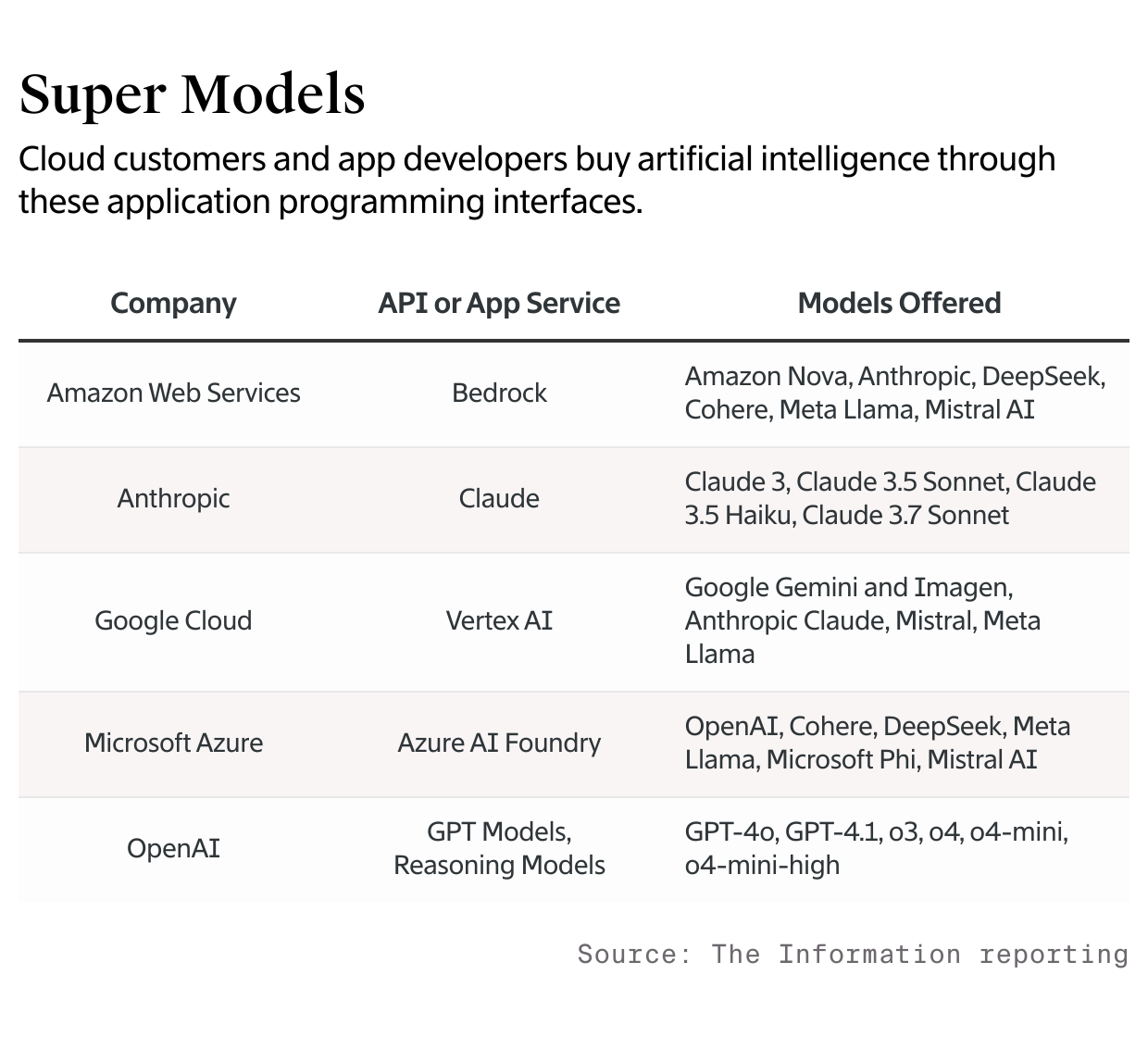

Amazon AWS, the company that pioneered scaling APIs (application programming interfaces) into a global revenue and profit flywheel for cloud data center applications for developers and companies large and small, is ironically facing API scaling issues with its AI products from partner Anthropic. Much of this has to do with the current global scarcity of AI data center GPU chips and infrastructure, and with the variable costs of AI training and inference compute and the related flywheels I’ve written about.

But it’s a notable scaling speed bump in this AI Tech Wave that companies like Google, OpenAI and others are going through as well. Let’s discuss.

As The Information explains in “AWS Faces Backlash Over Limits on Anthropic’s AI”:

“Amazon has hitched its artificial intelligence wagon to Anthropic, investing $8 billion in the startup and heavily promoting its AI to customers of the Amazon Web Services cloud unit.”

“But AWS has fumbled Bedrock, a key service its customers use to build applications with Anthropic AI models. Customers who have used Bedrock say its application programming interface puts arbitrary limits on how much they can use Anthropic’s models and lacks features they want, according to founders and executives from four AWS customers, as well as two consulting firms whose clients use AWS.”

There are many possible causes here:

“AWS representatives say such usage limits are common in the industry. However, the problem suggests AWS doesn’t have enough server capacity for Anthropic usage or is reserving an outsize amount of it for certain large customers.”

“Inside AWS, some senior leaders recently discussed the Bedrock capacity problem as a “disaster,” according to a person who was part of the conversation.”

“If the issues persist, AWS risks losing its standing with startups that might later become large consumers of cloud applications, said one of the consulting firm executives.”

That’d be bad, since Amazon is the leading provider of cloud data services, ahead of Microsoft Azure, Google Cloud and Oracle Cloud, even before we count AI ‘neoclouds’ like Nvidia-backed CoreWeave and others.

Google, of course, is also an investor in and partner of Anthropic, alongside Amazon.

“Google, a major AWS rival that has also invested in Anthropic, stands to benefit from Bedrock’s problems.”

“Several customers said that because of the Bedrock issues, they now go to Anthropic’s website to get access to models through the startup’s own API.”

“Anthropic has used Google’s servers in addition to those of AWS to power its API business, according to an AWS executive. Anthropic’s ability to tap two cloud providers may explain the capacity gap between Bedrock, which can only access Amazon’s servers, and Anthropic’s API. It also means Google could be capturing revenue that might otherwise have gone to AWS.”

For now it’s a ‘high-class’ problem of demand meeting limited supply:

“AWS spokesperson Kate Vorys said in a statement via email that AWS has “tens of thousands of customers” using Anthropic models through Bedrock and the service is experiencing “unprecedented demand” as more customers add generative AI to the cloud applications they use to run their businesses.”

“AWS uses rate limits in Bedrock to ensure that all of its customers can get “fair access” to popular AI models, and in services like EC2, its cloud server business, “to protect the security and continuity of our technology,” said Vorys.”

“‘The Information’s suggestion that rate limits are a response to capacity constraints, or that Amazon Bedrock is not equipped to support customers’ needs, is false,’ Vorys said in the statement.”
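Rate limits of the kind Vorys describes are often built as a per-customer “token bucket”: each customer’s bucket refills at a steady rate, and every request spends one token. Below is a minimal Python sketch of that general technique, assuming hypothetical capacity and refill values; it is not AWS’s actual Bedrock implementation, which the reporting doesn’t describe.

```python
from typing import Dict, Iterable


class TokenBucket:
    """Minimal token-bucket rate limiter (illustrative sketch, not AWS's code)."""

    def __init__(self, capacity: float, refill_per_sec: float, now: float = 0.0) -> None:
        self.capacity = capacity              # maximum burst, in requests
        self.refill_per_sec = refill_per_sec  # sustained rate, in requests per second
        self.tokens = capacity                # start with a full bucket
        self.last = now                       # timestamp of the last refill

    def allow(self, now: float) -> bool:
        """Refill based on elapsed time, then spend one token if one is available."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False  # a real service would answer with a throttling error here


def make_buckets(customers: Iterable[str], requests_per_minute: float) -> Dict[str, TokenBucket]:
    """One bucket per customer (hypothetical setup), so a heavy user can't drain others' quota."""
    return {
        c: TokenBucket(capacity=requests_per_minute, refill_per_sec=requests_per_minute / 60.0)
        for c in customers
    }
```

In real use `now` would come from `time.monotonic()`. Keeping a separate bucket per customer is one simple way to express a “fair access” goal: a customer who exhausts its own bucket gets throttled without affecting anyone else.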

Again, this has to do with the variable cost nature of AI training and inference I’ve discussed.

And the shortage in supply is causing other problems:

“The Bedrock capacity shortage has existed since AWS launched the service a year and a half ago. But the frequency and severity of error messages customers encounter while using it have increased in the past few months, according to the customers, who include the founders of AI coding and education startups.”
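When customers do hit those errors, the usual defensive pattern on the client side is to retry throttled requests with exponential backoff plus random jitter, rather than hammering the endpoint. Here is a minimal sketch, assuming a hypothetical `ThrottledError` exception and a caller-supplied `call` function; real cloud and AI SDKs have their own error types and retry settings, which this does not reproduce.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class ThrottledError(Exception):
    """Hypothetical stand-in for a provider's rate-limit / throttling error."""


def call_with_backoff(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 20.0,
    sleep: Callable[[float], None] = time.sleep,
    rng: Optional[random.Random] = None,
) -> T:
    """Retry `call` on ThrottledError using exponential backoff with full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    rng = rng or random.Random()
    for attempt in range(max_attempts):
        try:
            return call()
        except ThrottledError:
            if attempt == max_attempts - 1:
                raise  # out of retries: surface the error to the caller
            # The wait ceiling doubles each attempt (capped at max_delay);
            # sleeping a random fraction of it spreads retries out.
            cap = min(max_delay, base_delay * (2 ** attempt))
            sleep(rng.uniform(0, cap))
    raise RuntimeError("unreachable")
```

Backoff only smooths over short spikes; if the underlying quota is too low for the workload, retries just stretch out the same limited throughput.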

APIs, again, are the key pricing and distribution mechanism for these services, and they underpin businesses with revenues in the tens of billions.

It’s reminiscent of AOL’s problem in the nineties, when too many consumers were trying to dial into the internet via modems to get online. This is the enterprise version of that consumer service problem, for Amazon and others.

“In one instance of Bedrock’s capacity limits this month, AWS told a customer they would be able to tap Anthropic’s Claude Sonnet 3.7 model just five times per minute, according to a consulting firm that works with the customer. For the same price as Bedrock, the lowest-price usage tier of Anthropic’s own API gives customers up to 50 requests per minute. (AWS customers can sometimes get access to higher request limits by asking their AWS support teams, said a person who works at AWS and an executive from one of the consulting firms.)”
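To put the two quoted limits in perspective, a quick back-of-the-envelope calculation. The 1,000-request batch is a hypothetical workload; the 5 and 50 requests-per-minute figures are the ones from the report.

```python
import math


def minutes_needed(total_requests: int, requests_per_minute: int) -> int:
    """Whole minutes needed to send `total_requests` under a fixed per-minute cap."""
    return math.ceil(total_requests / requests_per_minute)


BEDROCK_RPM = 5          # limit one Bedrock customer was quoted, per the report
ANTHROPIC_TIER_RPM = 50  # lowest-price tier of Anthropic's own API, per the same report

batch = 1_000  # hypothetical workload
for name, rpm in [("Bedrock (quoted)", BEDROCK_RPM), ("Anthropic API (lowest tier)", ANTHROPIC_TIER_RPM)]:
    print(f"{name}: {rpm * 60} requests/hour max, {minutes_needed(batch, rpm)} min for {batch} requests")
# Bedrock (quoted): 300 requests/hour max, 200 min for 1000 requests
# Anthropic API (lowest tier): 3000 requests/hour max, 20 min for 1000 requests
```

At the same price, that is a tenfold difference in how fast a customer can push work through the model.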

“Michael Gerstenhaber, vice president of product at Anthropic, said AWS and Google are investing in more servers for Anthropic and whatever problems exist with Bedrock could be temporary.”

In August, Anthropic launched prompt caching, which ensures developers don’t have to provide AI models with the same background information over and over again. Amazon didn’t make the same feature available in Bedrock until earlier this month.
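Conceptually, prompt caching lets a provider avoid redoing work on a long, repeated chunk of a prompt (instructions, reference documents) when it shows up again at the start of a new request. The toy model below illustrates only that idea, under stated assumptions: it keys a cache on a hash of the shared prefix and counts characters as a stand-in for tokens processed. Real implementations cache internal model state, and details such as expiry and pricing are not modeled here.

```python
import hashlib
from typing import Set


class ToyPrefixCache:
    """Toy model of prompt caching: skip reprocessing a prompt prefix seen before.

    Illustrative only; 'work' is counted in characters as a stand-in for tokens.
    """

    def __init__(self) -> None:
        self._seen: Set[str] = set()  # hashes of prefixes already processed
        self.processed = 0            # total characters "processed" so far

    def run(self, prefix: str, question: str) -> None:
        key = hashlib.sha256(prefix.encode("utf-8")).hexdigest()
        if key not in self._seen:
            # Cache miss: the long background prefix is processed in full once.
            self.processed += len(prefix)
            self._seen.add(key)
        # The new, per-request part is always processed.
        self.processed += len(question)
```

With a 1,000-character background prefix and two short questions, the prefix is processed once instead of twice, which is where the cost and latency savings come from.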

“AWS also uses Bedrock to distribute its own AI model series, Amazon Nova, which it first unveiled in December and which doesn’t seem to be experiencing the same capacity problems as the Bedrock Anthropic models.”

And Bedrock is a meaningful business for Amazon AWS:

“As of last fall, AWS’ AI business, which includes Bedrock, was on pace to generate more than $2 billion a year and “continues to grow at a triple-digit year-over-year percentage,” Amazon CEO Andy Jassy said on an earnings call in October. (AWS generated $107.6 billion in revenue in 2024.)”

“Amazon isn’t the only major cloud provider that has struggled to attract or serve some customers of APIs for AI. As of last year, more customers were using OpenAI’s API than Microsoft’s Azure cloud API to access the OpenAI models, in part because customers said OpenAI helped them tailor the AI to their needs better than Microsoft could.”

Its competitors, like Google, have had similar issues with API scaling, as I’ve also written about.

“And Google last year struggled to attract customers to its Gemini AI models because developers found them too difficult to use compared to rivals’ technology.”

“But AWS leaders seem to be aware of what’s at stake.”

“In a recent management reorganization, AWS moved Bedrock under David Brown, chief of its EC2 cloud server business, which has long been the cloud provider’s largest revenue generator. Brown joined Amazon in 2007 and has deep experience in addressing the capacity needs of AWS’ largest customers.”

Its partner Anthropic is also working hard to scale supply:

“‘Anthropic’s API has improved substantially over the past year or so,’ said Travis Rehl, chief technology officer and chief product officer at Innovative Solutions, which works with AWS and Anthropic customers.”

The whole episode highlights the growing pains of matching AI service supply to demand, for Amazon and others alike, which is characteristic of most tech waves. This AI Tech Wave is no exception.

The problems will get resolved sooner rather than later, but the process is notable in the meantime, even for leaders like Amazon AWS. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)