AI: Amazon AWS's 'No Expense Spared' AI Builds. RTZ #999

I’ve written for a while now about how Amazon is poised to be a strong big tech beneficiary of the early AI Tech Wave, in particular with its crown jewel, the Amazon Web Services (aka AWS) Cloud business.

Amazon under CEO Andy Jassy is ramping up its AWS Cloud business to offer cutting-edge AI enterprise services at scale. As the character John Hammond in Jurassic Park (1993) was fond of saying, “We spared no expense.”

For context, Amazon AWS, the biggest cloud services company with nearly $130 billion in 2025 revenues and over 60% of Amazon’s profits, is in danger of losing AI Cloud share to #2 Microsoft Azure over the next three years. It’s one of the reasons CEO Andy Jassy has committed to about $200 billion in AI Capex this year, the largest sum vs its ‘Mag 7’ peers.

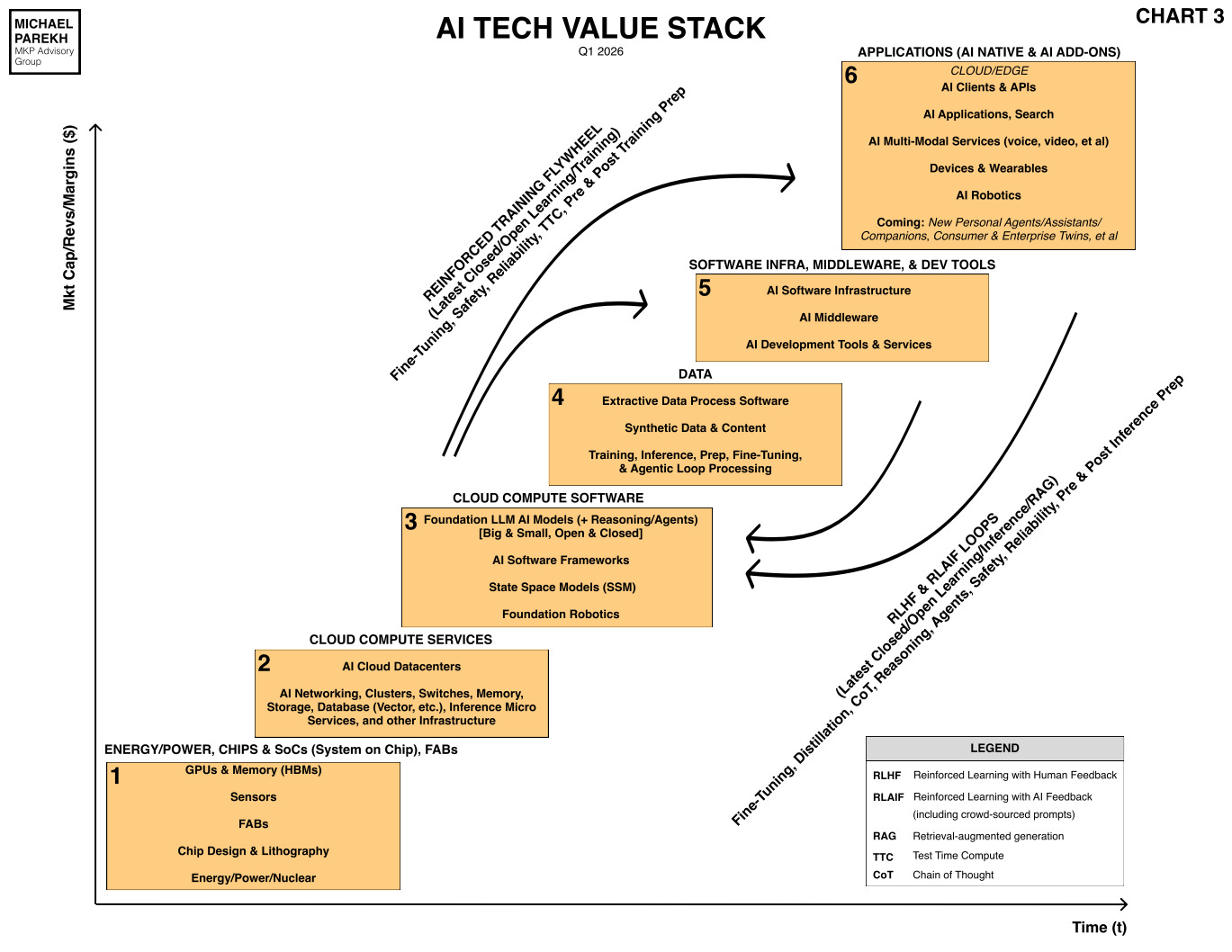

This dynamic is important to watch, as the three top Cloud providers, Amazon, Microsoft and of course Google Cloud, form the core foundation of AI applications and services in this AI Tech Wave for the rest of this decade. It’s Box #2 below, upon which the LLM AIs from OpenAI, Anthropic, Google Gemini and others lay the next layer in Box #3. It all leads to the critical Data (#4), Software Infra (#5), and the holy grail, #6, which is AI Applications and Services.

The ‘Neoclouds’ like CoreWeave and others, of course, help build additional fleets of AI Data Centers, mostly using Nvidia AI chips and infrastructure. But the three big ones, along with Oracle as a fast-growing fourth major Cloud provider, are the core of the AI Cloud Data Center foundation.

The FT lays out Amazon AWS’s critical moment in “Amazon’s Andy Jassy bets on $200bn AI spending drive to revive AWS”:

“Amazon is embarking on the largest capital spending programme in its history, seeking to regain momentum against AI rivals by expanding data centres, developing chips and building models. The group is undergoing a strategic shake-up amid fears its cloud arm, AWS, is losing ground to competitors in securing corporate AI contracts, according to more than a dozen current and former senior employees.”

“Chief executive Andy Jassy last week announced Amazon’s capital expenditure would rise to $200bn this year, exceeding that of Google and Microsoft, with the outlay focused on computing infrastructure.”

AWS also saw a reorg, in addition to the infusion of a far bigger annual budget:

“In December, he consolidated the group’s chip, model and advanced research teams under a single leadership structure, a move intended to align its AI plans. The Amazon chief has also cut costs, including jobs — eliminating some 30,000 of about 350,000 corporate roles.”

“We have deep experience understanding demand signals in the AWS business and then turning that capacity into a strong return on invested capital,” Jassy said earlier this month. “We’re confident this will be the case here as well.”

The driver is as much concern about competitive dynamics among peers as it is enterprise market demand for AI applications and services.

“AWS employees said the company’s moves also reflected internal concern that it had failed fully to capitalise on its lead in cloud computing, in particular by being slower than rivals to secure major contracts with AI providers after OpenAI launched ChatGPT in 2022.”

Things have changed fast now that we are in the fourth year of OpenAI’s ChatGPT, especially as OpenAI comes out of Microsoft’s shadow:

“We were just not fully prepared for how fast things would unfold,” said one former senior AWS employee. AWS remains the world’s largest cloud provider, generating nearly $130bn in sales last year and more than 60 per cent of Amazon’s overall profits.

“But analysts forecast that demand for AI-powered cloud services will lead Microsoft’s cloud business to overtake AWS over the next three years. Amazon said other providers did not report ‘true cloud figures’ and that this prevented an accurate comparison between different cloud businesses.”

Microsoft has the unique advantage of full access to OpenAI’s AI IP for the rest of the decade and beyond, and AWS is in the cross-hairs as the current Cloud market leader. So it is spending heavily on data center capacity, with oodles of AI chips from Nvidia:

“Roughly three-quarters of Amazon’s planned $200bn capital expenditure is allocated to AWS, according to public filings. Microsoft, Google and Oracle are on course to collectively spend nearly $400bn this year. Jassy last week said Amazon was planning to add a meaningful amount of data centre capacity this year.”

“In 2025, it added nearly 4 gigawatts of capacity — equivalent to the annual energy consumption of more than 3.2mn US homes. The group plans to double capacity by 2027. Investors are uneasy about the scale of Amazon’s bet.”

The markets are digesting this AWS dynamic, with Amazon shares now well into correction territory:

“Shares are down more than 20 per cent from their November peak amid concerns about how quickly the spending will translate into returns. Employees said Amazon was under pressure to secure more deals with leading AI groups, among the main drivers of new cloud business.”

Amazon, of course, does have the benefit of an early investment in fast-growing Anthropic, the #2 in LLM AIs vs OpenAI, but so does #3 Cloud provider Google:

“It has invested $8bn in Anthropic and is building vast data centres for the group. But its initial equity investment came after Google backed the start-up.”

“Meanwhile, as one of OpenAI’s earliest investors, Microsoft secured exclusive cloud computing contracts with the ChatGPT maker. Only after Microsoft allowed OpenAI to undergo a corporate restructure did Amazon sign a $38bn cloud computing deal with the start-up last year. That agreement is dwarfed by OpenAI’s $250bn contract with Microsoft and its $300bn worth of deals with Oracle.”

Amazon, of course, is also banking on its own custom AI chips to reduce its dependence on Nvidia and others, just as Google is doing with its TPU AI chips, which are made by TSMC in Taiwan, just like Nvidia’s chips.

“It has touted the uptake of the group’s Graviton and Trainium chips, used for conventional cloud computing and AI training respectively. Sales of these chips are on course to generate more than $10bn in combined annual revenue.”

“Amazon debuted its latest generation of Trainium chips in December, promising a significant increase in performance. It is holding talks to join OpenAI’s latest multibillion-dollar funding round in a move partly designed to ensure the ChatGPT maker adopts its semiconductors, said people familiar with the matter. The chips should also help Amazon reduce its reliance on Nvidia’s products, helping to expand AWS’s profit margins from renting out data-centre capacity to corporate customers.”

Google’s TPU strategy and direct access to Taiwan Semiconductor (TSMC) fabs for manufacturing at scale remain a key advantage vs Amazon and others:

“However, Google has attracted interest in its ‘tensor processing unit’ (TPUs), using its custom chips to advance its Gemini AI models. The search giant sold Anthropic 1mn TPUs in a deal worth tens of billions of dollars. Ben Bajarin of tech consultancy Creative Strategies questioned whether leading AI start-ups would adopt Amazon’s chips for their core products even if they proved cheaper to run than Nvidia.”

Amazon is also developing its own AI models, dubbed Nova, with limited success so far:

“Amazon talks specifically about price performance but the problem is that some users need outright performance,” he said. Amazon is also spending to advance its own “Nova” AI models, marketing them as a low-cost alternative to rival models. Nova underperforms the most advanced models made by OpenAI, Google, Meta and Anthropic, according to independent benchmarks.

“Executives have been irked by some AWS employees describing Nova as ‘Amazon Basics’, a term used for the group’s generic household products, according to three people familiar with the matter. Staff said the company was pushing its own AI tools such as developer platform Kiro, while setting a target for 80 per cent of developers to use AI for coding tasks at least once a week.”

“But several company engineers said they preferred using Anthropic’s Claude over Nova for coding work, with one AWS engineer saying: ‘I didn’t even know we had a model.’”

Amazon, of course, is defending its progress with Nova, and with Anthropic models running on its Trainium chips:

“Amazon said its Nova models were used by ‘tens of thousands of AWS customers’ and performed comparably to some leading models. It noted AI labs such as Anthropic were also deploying a significant number of its Trainium chips.”

And the top-down laser focus on AI leadership is ramping up:

“Jassy last year said: ‘We’re going to keep pushing to operate like the world’s largest start-up — customer-obsessed, inventive, fast-moving, lean, scrappy, and full of missionaries trying to build something better for customers.’”

“The pressure to regain ground in the AI race is weighing on employees. Some said they feared Amazon could slip closer to ‘day two’ — a term used by founder Jeff Bezos in 2018 to describe a business in ‘stasis’, followed by an ‘excruciating, painful decline’.”

“The culture has shifted, but so has the world around us,” said one senior AWS engineer of the company’s strategic pivot. “We’re going to have to prove our worth.”

The whole piece is worth a closer read, particularly for its comparative charts and graphs.

But it highlights that big tech incumbents have deeply learned the lessons of past tech waves: failing to focus on new tech waves with ‘Founder Mode’ intensity is increasingly not an option. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here.)