AI: Enterprises like convenience too. RTZ #596

I’ve spent a fair bit of time in these pages discussing how enterprises large and small are trying to assess AI for their businesses, evaluating AI products and APIs (application programming interfaces) from OpenAI, Microsoft, Salesforce and many more AI vendors.

And the best ways to build, test and deploy AI reasoning agents, applications and services for their customers, using their own data, intermixed with external data as needed.

This is the BIG opportunity that every major LLM AI and AI data center company is focused on in this AI Tech Wave. And investors are waiting with bated breath to understand the rate at which these customers deploy AI products and services at scale.

Also of interest to investors has been the split between the open and closed/proprietary LLM AI services that businesses will end up using. This is another area I've gone into at length. New data this week seems to support the view that, for now, many businesses are skewing toward closed rather than open LLM AI models, for a variety of reasons. It's worth diving into the details a bit.

The Information lays it out in “Why Businesses are Skipping Open-Source Models”:

“If there’s one thing artificial intelligence sellers learned in 2024, it’s that customers want AI to be a cinch to set up. Businesses are even willing to pay a premium and take their chances with AI models or chatbots like ChatGPT that have fewer built-in security features than they’re used to—as long as they just work out of the box.”

“That explains why OpenAI and Anthropic each grew revenue 500% or more last year despite a proliferation of free, open-source alternatives from Meta Platforms, Mistral AI and others.”

I've discussed Meta's success and opportunity with its open-source Llama models of various sizes against the traditional AI data center companies, and how Llama remains an attractive option for businesses and developers despite the trend above.

And the open-source LLM AI model strategy is being emulated by a host of other software companies, especially on the enterprise database side:

“A year ago, some enterprise software providers, including Databricks and Snowflake, were betting many of their customers would prefer open-source models because they were easier to tweak and secure, and they performed nearly as well as proprietary models such as GPT-4. A customer would fine-tune a model to understand its specific business vocabulary so the model could better understand employees’ commands and give better answers, for instance.”

“But it turned out that customizing open-source models—or any kind of large language model, for that matter—isn’t something most AI customers want to bother with.”

The reasons vary, but they come down to one word: convenience. The closed/proprietary LLM AI companies offer relatively easy-to-use tools and APIs, something OpenAI, Microsoft and Google in particular have been focused on, as I've outlined.

As The Information continues, ease of use plays a big role in preferences today:

“It’s a little bit too hard” for a company to tweak AI models, said Naveen Rao, who runs Databricks’ generative AI business. “You have to do a lot of data engineering, data cleaning, in order to get a model that really works well. And I think that’s just a big step for most customers to take.”

“Databricks’ archrival, Snowflake, is now singing the same tune. Both companies, which primarily sell databases and other software for developing AI apps, say they have mostly stopped developing their own open-source generative AI models to offer to customers.”

For now, the open-source models need better tools and packaging:

“In other words, businesses aren’t taking advantage of one of the biggest potential advantages of using open-source models. Instead, they are using techniques like retrieval augmented generation to improve a model’s performance for their specific needs, without changing the model itself. RAG can work for both proprietary and open-source models.”

But there are still a large number of enterprises and developers getting their hands dirty with open source:

“Meta spokesperson Ashley Gabriel said in a statement that startups and enterprises are widely using Llama, with over 770 million downloads. ‘The success of Llama showcases the transformative impact of open-source innovation,’ Gabriel said.”

“Still, our reporting shows a mixed record for Llama. It has struggled to gain traction with customers of Amazon Web Services, and a recent edition of Llama saw fewer downloads shortly after its release than for older versions. For many companies, it’s just easier to tap OpenAI.”

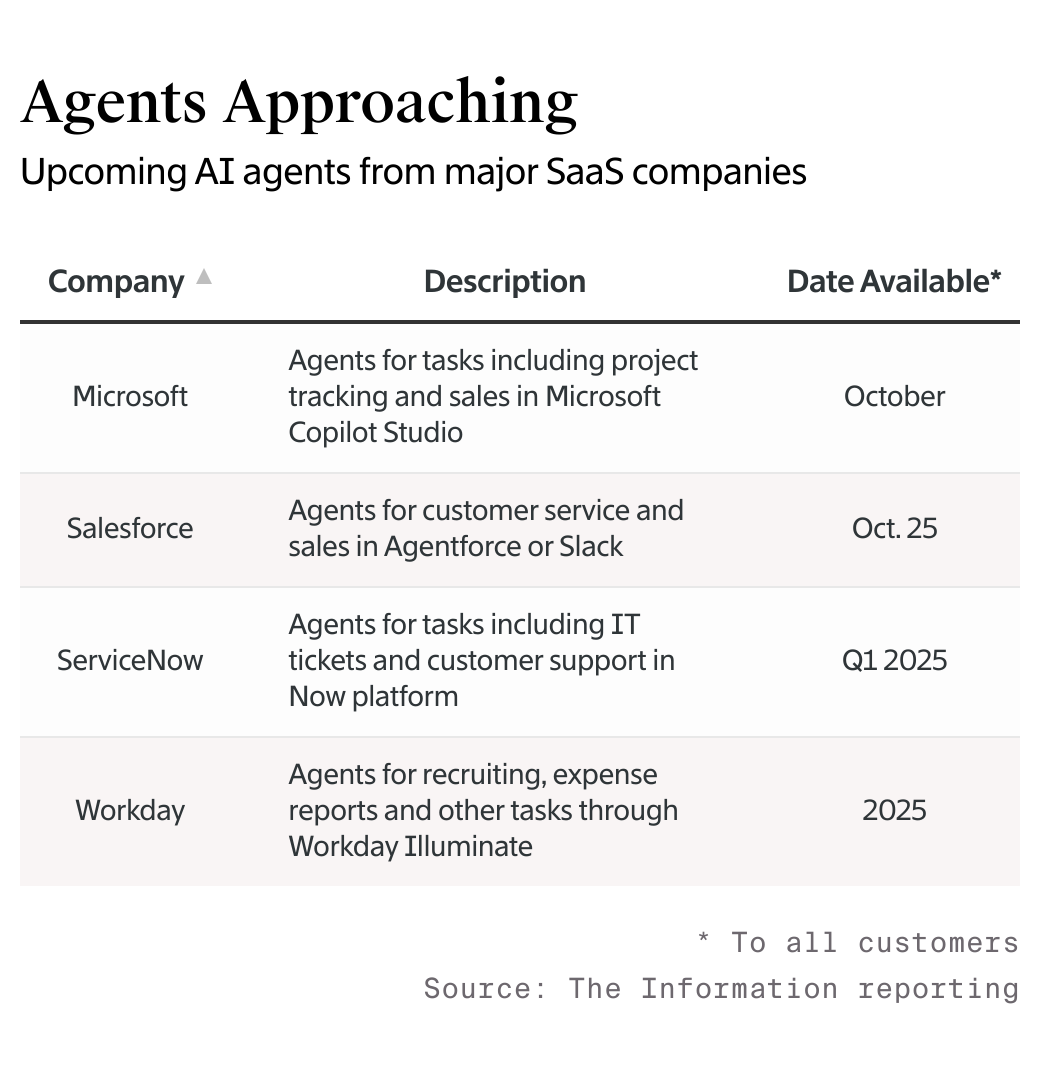

Traditional SaaS (software as a service) companies like Salesforce and others, of course, benefit in the interim:

“The relatively slow corporate adoption of open-source LLMs is also potentially good news for enterprise application developers such as Salesforce and Oracle. Those incumbents are weaving AI features into existing apps and hope businesses will embrace them rather than spend the time and effort to replicate and replace such apps with the help of AI. (To be sure, some businesses are willing to go that distance, as we have shown.)”

“As Snowflake’s head of AI, Baris Gultekin, told me, ‘We’re seeing the market go more and more towards packaged, ready-to-go solutions.’”

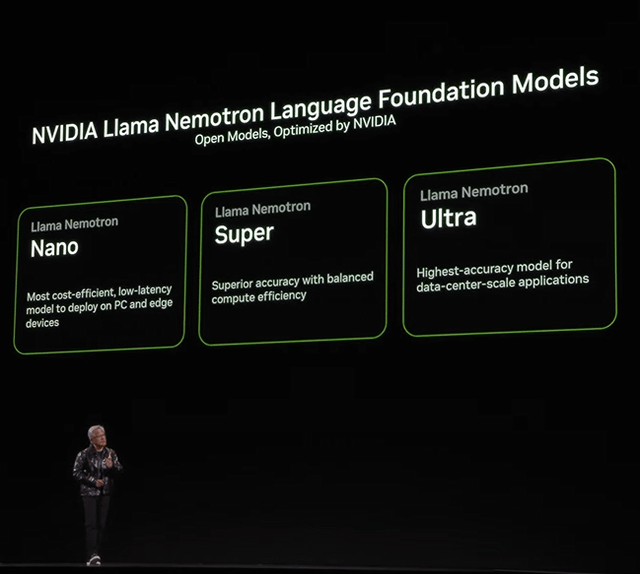

Better, easier-to-use open-source LLM AI models and services, along with the hardware and software to run them, are coming. The most recent example is Nvidia's announcement this week of its range of open-source Llama Nemotron models, in sizes from small to large. Founder/CEO Jensen Huang laid it out at CES 2025, which I discussed a few days ago.

We’re going to see the convenience factor go up on the open-source side as vendors ramp up on this enterprise opportunity in this AI Tech Wave.

I've seen this movie before, having helped Red Hat go public with its open-source business in the 1990s. Its winning proposition over time was bundling open-source software and services for businesses large and small. We're likely to see similar opportunities with AI companies this time as well.

For now, it's notable to see businesses swayed by the same factors that sway consumers in most products and services: convenience and packaging over additional DIY (do-it-yourself) effort. The market will adjust accordingly on both the open and closed sides. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)