AI: Google jumps to Gemini 3 Flash vs OpenAI. RTZ #943

The competitive cadence between Google and OpenAI brings to mind the Rolling Stones/Mick Jagger classic ‘Jumpin’ Jack Flash’:

“I was born in a crossfire hurricane

And I howled at the morning drivin’ rain

But it’s all right now, in fact it’s a gas

But it’s all right, I’m jumpin’ jack flash

It’s a gas, gas, gas”.

As I highlighted on Saturday, Google is pressing its Gemini 3 lead vs OpenAI and other AI peers with Gemini 3 Flash, released this week. The release is scoring well on benchmarks, and offers class-leading efficiency and compute token metrics. Gemini will rapidly replace Google Assistant across most Android phones globally next year. The timeline is a few months, not weeks.

That is important since the near-term race in this AI Tech Wave between Google and OpenAI, with its recent ‘Code Red’, is about getting to a billion-plus weekly users worldwide. OpenAI currently is at 800 million vs 650 million for Google.

Axios outlines the details in “Google’s new Gemini 3 Flash is fast, cheap and everywhere”:

“Google released its new Gemini 3 Flash model on Wednesday, aiming to strike a decisive blow against OpenAI and other competitors by pushing a faster, cheaper model throughout its sprawling ecosystem.”

“Why it matters: The AI race is quickly becoming a standoff between Google and OpenAI, with huge implications not just for artificial intelligence technology, but for the entire economy.”

The economy piece is important given the impact of the AI Data Center buildout on US quarterly GDP growth numbers this year, as I’ve highlighted.

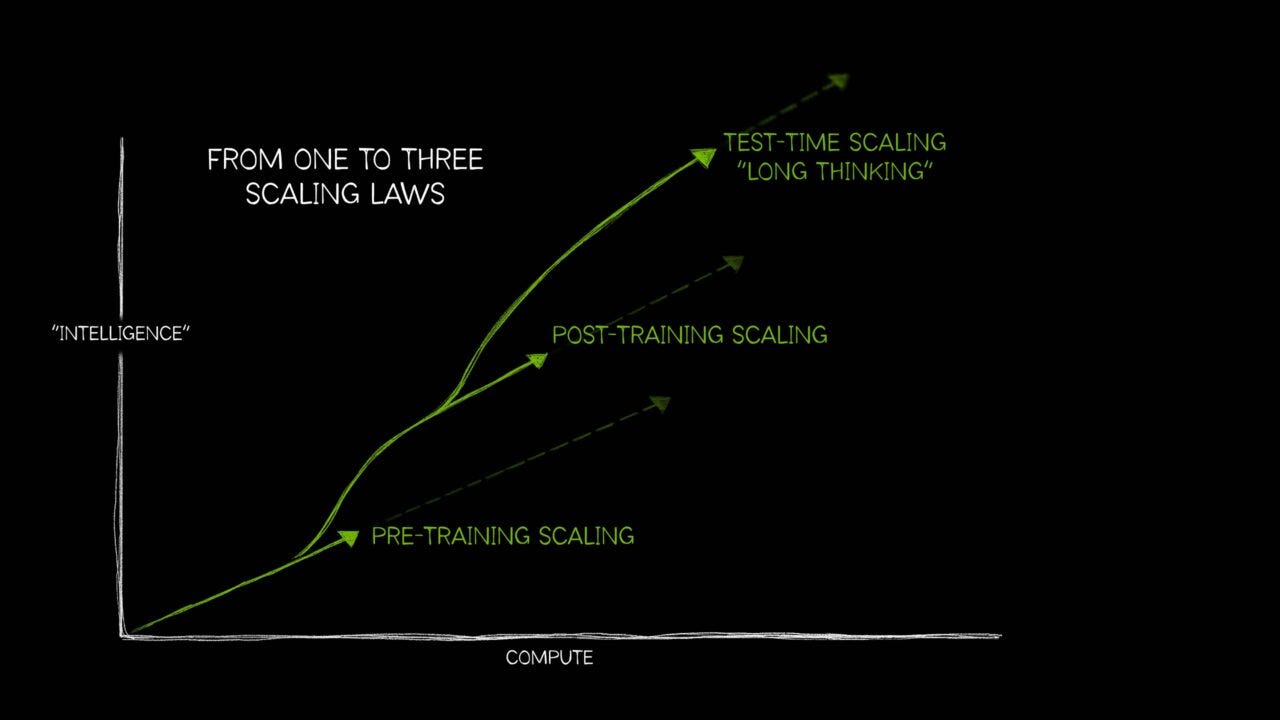

“Driving the news: Gemini 3 Flash includes Gemini 3 Pro’s reasoning capabilities in a model that Google says is faster, more efficient and cheaper to run.”

“‘This is about bringing the strength and the foundation of Gemini 3 to everyone,’ Tulsee Doshi, senior director and Gemini product lead, told Axios. The release is all about giving more people access to the most powerful AI tools, Doshi says.”

“As of Wednesday, Gemini 3 Flash will be the default model in the Gemini app, replacing Gemini 2.5 Flash for everyday tasks.”

“It will also be the default model for AI Mode in search, meaning everyday Google users worldwide will be exposed to it.”

“Salesforce, Workday and Figma are already using Gemini 3 Flash.”

“The launch comes less than a week after OpenAI launched GPT-5.2, and a day after OpenAI launched ChatGPT Images.”

AI compute efficiency is the key variable for all the big companies. Google and others are ruthlessly prioritizing the availability of their AI data centers internally across many demands.

Gemini 3 Flash is a ‘jack of most trades’ with relative compute efficiency:

“Between the lines: More efficient AI models can put the power of machine learning into the hands of more people, including consumers and small businesses.”

“Google says Gemini 3 Flash excels at tasks like planning last-minute trips or learning complex educational concepts quickly.”

“Multimodal reasoning capabilities in the new model allow users to ask Gemini to watch videos, look at images, listen to audio or read text and turn those answers into content.”

It also performs better on some benchmarks than its bigger siblings and external competitors:

“The intrigue: Gemini 3 Flash performs better than Gemini 3 Pro on SWE-bench Verified, a benchmark for evaluating coding agent capabilities, Doshi says.”

“That makes the new model more attractive for business clients, an area where Anthropic’s Claude has made heavy inroads and where OpenAI is trying to catch up.”

“Catch up quick: Google released its Gemini 3 Pro model on Nov. 18, lighting a fire under OpenAI and forcing the company into a ‘code red’ mode to catch up.”

Efficiency in running the models is the hard and fast race between the AI companies. As The Information outlines in “OpenAI is getting more efficient at running its AI”:

“The AI giant is increasing the revenue it generates relative to the costs of running its models for paid users.”

“As OpenAI discusses raising an unprecedented investment round of up to $100 billion, it can crow about some key improvements to how it runs its business. While its total computing costs are still high as a percentage of revenue, it is wringing more revenue out of every dollar it spends to run the servers that power ChatGPT’s subscription business and selling access to models to corporate customers.”

“The company’s compute margin, its share of revenue after the cost of running AI models for paying users, has jumped to about 70% in October from about 52% at the end of last year and roughly 35% in January 2024, according to a person with knowledge of the company’s financials.”
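To make the compute margin figures concrete, here is a minimal sketch of the arithmetic. It assumes the margin is defined as (revenue minus the cost of running models for paying users) divided by revenue, which matches the description quoted above but is my reading rather than OpenAI's published formula. The function names are hypothetical, and only the three reported percentages come from the article.

```python
def compute_margin(revenue: float, serving_cost: float) -> float:
    """Share of revenue left after the cost of running models for paying users.

    Assumed definition: (revenue - serving_cost) / revenue.
    """
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return (revenue - serving_cost) / revenue


def revenue_per_compute_dollar(margin: float) -> float:
    """Revenue generated per dollar of serving cost at a given compute margin.

    Follows from the assumed definition: cost = revenue * (1 - margin).
    """
    if not 0 <= margin < 1:
        raise ValueError("margin must be in [0, 1)")
    return 1.0 / (1.0 - margin)


if __name__ == "__main__":
    # Margins as reported by The Information; everything else is derived.
    reported = {
        "January 2024": 0.35,
        "End of 2024": 0.52,
        "October 2025": 0.70,
    }
    for period, margin in reported.items():
        dollars = revenue_per_compute_dollar(margin)
        print(f"{period}: {margin:.0%} margin, about ${dollars:.2f} revenue per $1 of compute")
```

Under that assumed definition, the move from roughly 35% to 52% to 70% means each dollar spent serving paying users supports about $1.54, then $2.08, then $3.33 of revenue. That is the sense in which OpenAI is wringing more revenue out of every dollar of compute.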

It’s the same challenge and opportunity at Anthropic, Google and others at the scales involved.

All this is worth noting, especially for the unending cadence of AI product improvement in this AI Tech Wave.

That cadence continues despite the holiday season and year-end 2025. It is a harbinger of an even faster pace next year, as I discussed yesterday.

It’ll all go in a flash, as everyone steps on the gas. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)