AI: Google ramping up Intelligence Tokens for Gemini AI everywhere. RTZ #727

Google knocked it out of the park, as I posited two years ago that they could.

They got all their wood behind the AI Arrow, aimed, and hit the target. Especially with Google ‘AI Mode’, which is where Google Search is going.

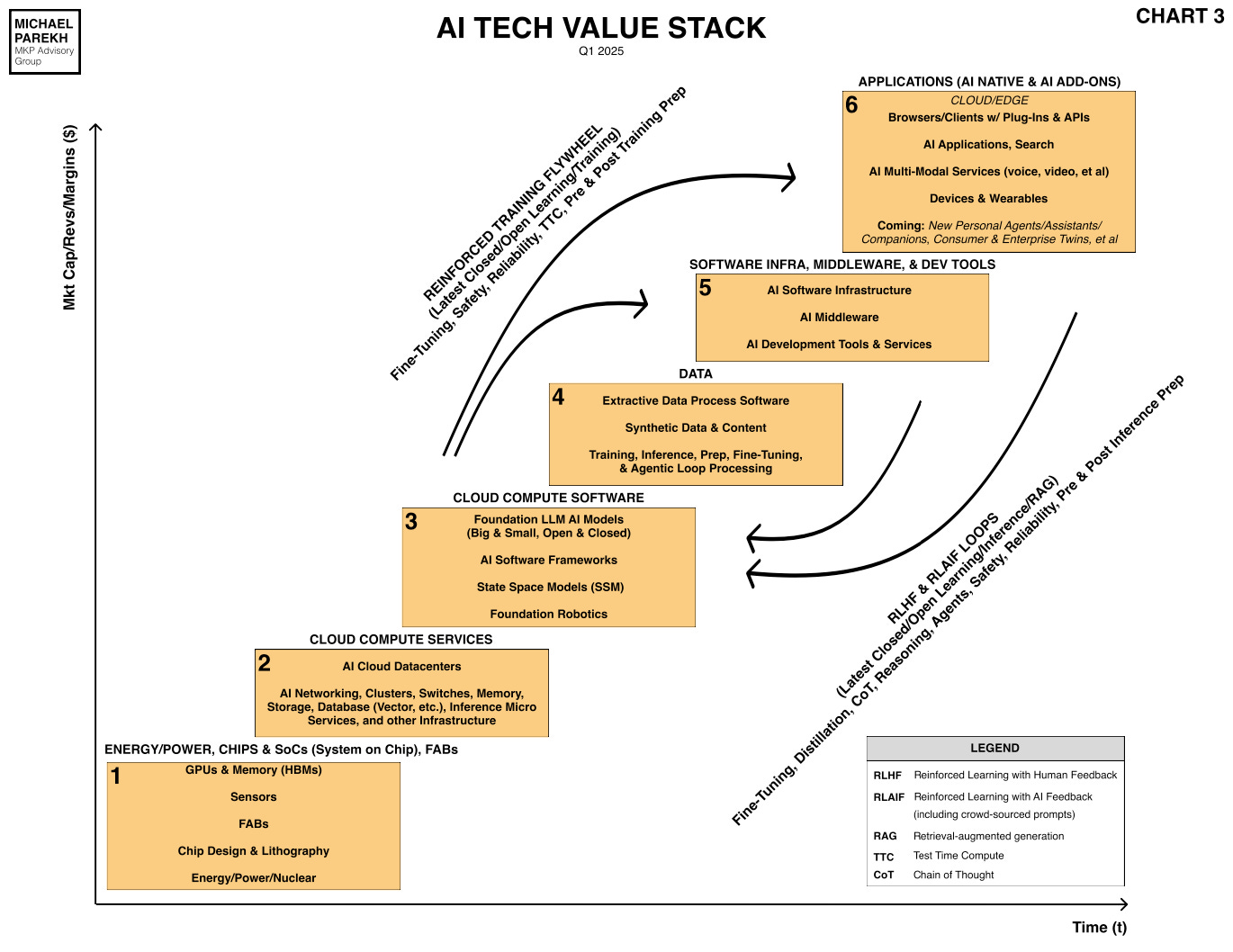

CEO Sundar Pichai’s keynote at the Google I/O developer conference, though long in waves of content, conveyed a concise message. Google, with its vertically integrated AI Tech Stack of Compute, Software, and Applications, has both ‘AI Add On’ and ‘AI Native’ applications and services integrated into current and upcoming AI products. All the stuff in Box 6 of my AI Tech Stack chart below.

And none of it would be possible at Google Scale, making it all available to billions ahead of peers, without Google’s AI TPUs (their in-house counterpart to Nvidia GPUs), Cloud Infrastructure, and the deep waters of its application software/services ecosystem. None of the Mag 7, including Apple, which has the closest comparable vertically integrated tech stack, is quite ready with the AI products and services Google announced and is rolling out in their latest versions as of this week.

The details are wide and deep. All about Google Googling with AI. From Google ‘AI Mode’ being integrated and rolled out with Google Gemini 2.5 Search, to Google text to video, audio, graphics, creative content, AI coding, AI translation in Meet, AI notebooks, AI Gmail and Drive, AI video conferencing with the delightful Google Beam, AI XR Smart Glasses with eyewear partners, AI powered Chrome, and more. The product names are dizzying in their scope and capabilities. The almost two-hour keynote covers it in detail, and yet still leaves one wanting a lot more details.

And yes, the Gemini app is now being used by over 400 million users monthly, close to the half-billion number from their uber-competitor OpenAI.

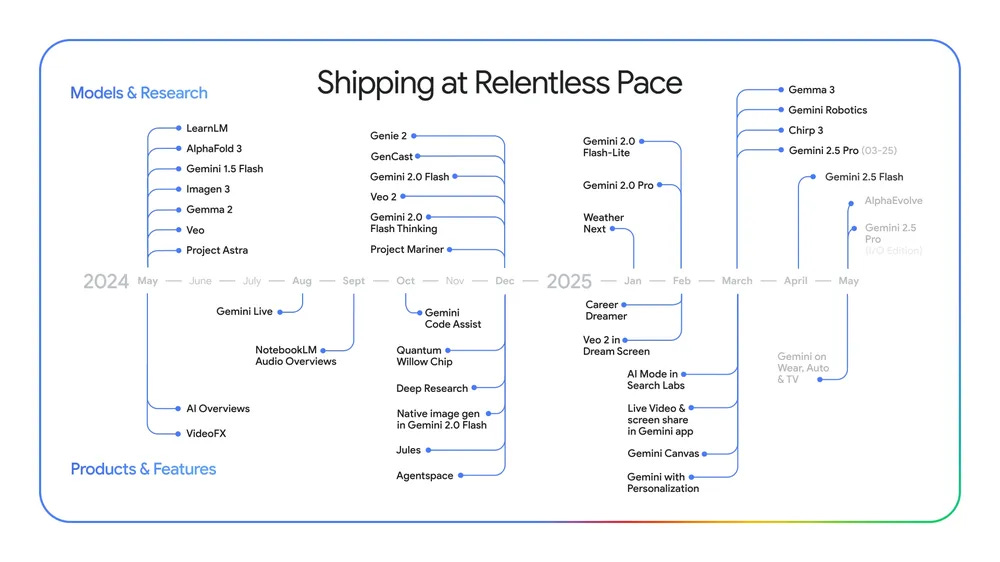

But the most impressive metric is the ability of Google to leverage their AI data center infrastructure to grow the Intelligence Tokens year to year. And at a cost-efficient pace that peers would envy.

From the keynote transcript:

“More intelligence is available, for everyone, everywhere. And the world is responding, adopting AI faster than ever before. Some important markers of progress:”

- “This time last year, we were processing 9.7 trillion tokens a month across our products and APIs. Now, we’re processing over 480 trillion — that’s 50 times more.”

- “Over 7 million developers are building with Gemini, five times more than this time last year, and Gemini usage on Vertex AI is up 40 times.”

- “The Gemini app now has over 400 million monthly active users. We are seeing strong growth and engagement particularly with the 2.5 series of models. For those using 2.5 Pro in the Gemini app, usage has gone up 45%.”
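As a quick sanity check on the token numbers in the first marker, here is a back-of-the-envelope sketch in Python. The two token figures come straight from the keynote quote. The monthly growth rate is my own illustration and assumes the growth compounded evenly over twelve months, which Google did not state.

```python
# Figures quoted in the keynote (tokens processed per month).
tokens_last_year = 9.7e12   # 9.7 trillion
tokens_now = 480e12         # "over 480 trillion", treated here as exactly 480

# Year-over-year multiple implied by the two figures.
growth_multiple = tokens_now / tokens_last_year

# Assumption (mine, not Google's): growth compounded evenly over 12 months.
implied_monthly_growth = growth_multiple ** (1 / 12) - 1

print(f"Year-over-year multiple: {growth_multiple:.1f}x")
print(f"Implied monthly growth:  {implied_monthly_growth:.1%}")
```

The multiple works out to a bit under 50x, so the keynote's "50 times" is a fair rounding given the "over 480 trillion" wording. Under the even-compounding assumption, that is roughly 38% more tokens every month for a year.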

The main story here is that they have the Compute at scale today, versus the Compute their peers hope to have tomorrow.

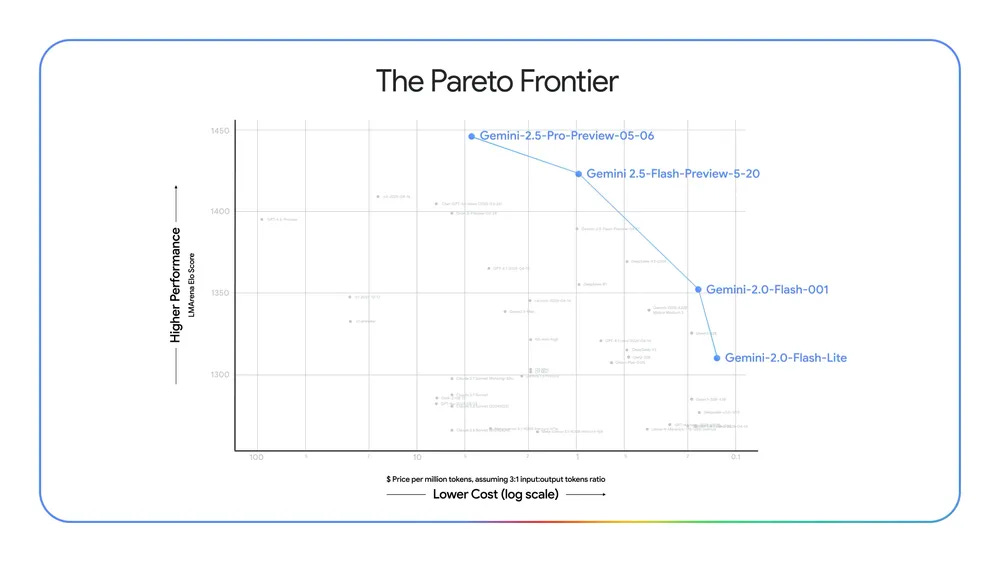

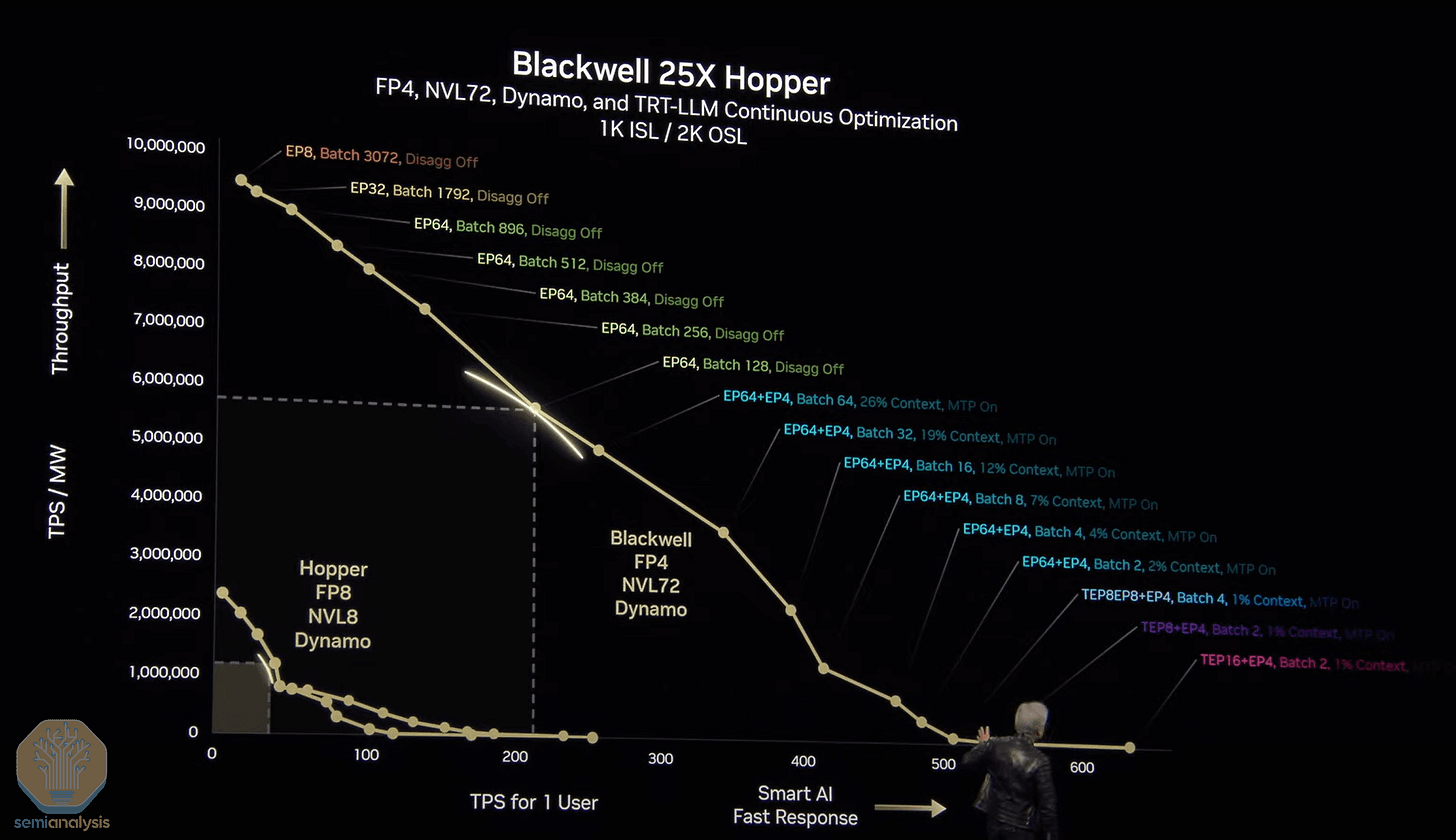

Producing intelligence tokens at scale with ever decreasing costs is the current holy grail for the AI industry. It’s something Nvidia founder/CEO Jensen Huang has focused on for a while when discussing ‘AI Factories’. His recent chart from Nvidia’s GTC conference a few months ago is a key example.

It’s Nvidia’s Hopper/Blackwell/Rubin/Feynman+ product roadmap ‘Pareto Frontier’ that highlights their AI GPU leadership for years ahead. Google is in that game as well with its comparable TPU architecture, the latest incarnation being the Ironwood TPU. This, of course, while Google is also a big Nvidia customer on its Google Cloud business side.

For Google, this ability to scale their own AI data center infrastructure with their AI TPU chip platforms, and to cost efficiently serve this range of intelligence tokens, is a unique advantage. And it’s something that all its rivals from OpenAI/Microsoft to Meta to Amazon/Anthropic to Elon’s xAI/Grok et al are spending hundreds of billions to build and serve.

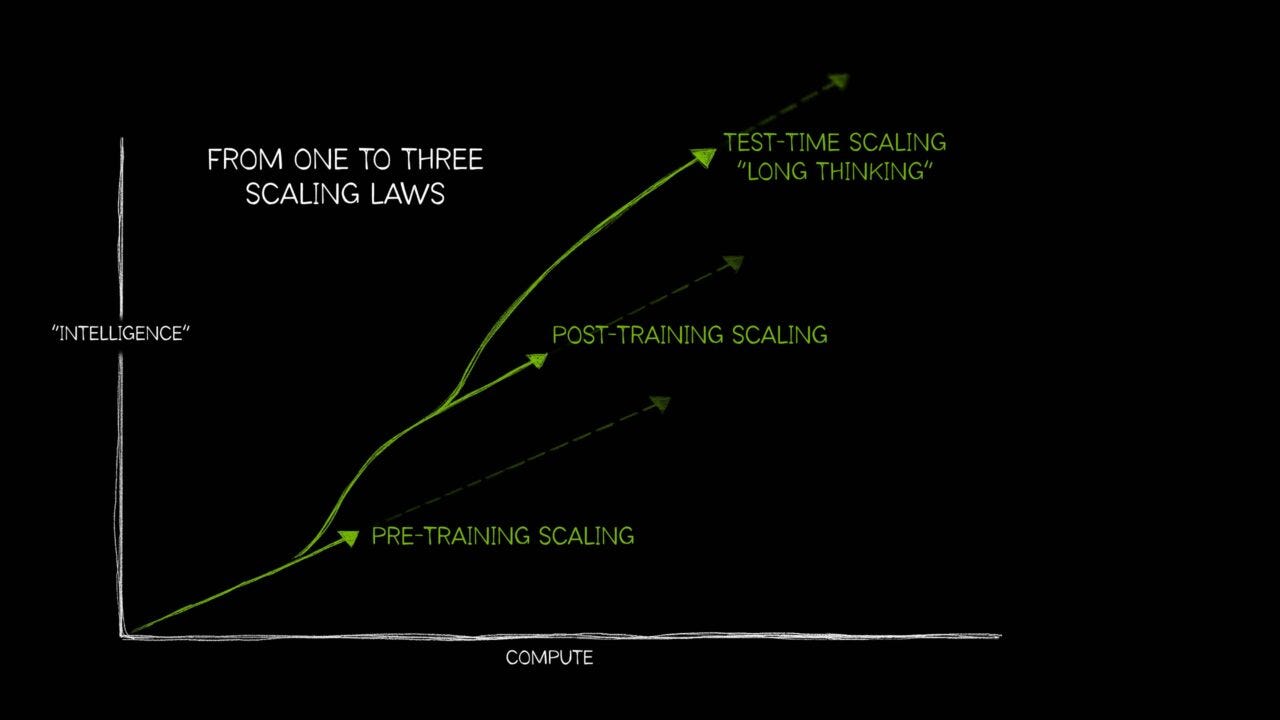

All in the race to scale AI across the trifurcating scaling curves I’ve discussed in detail, from training to inference, with extraordinary technical innovations like distillation, test-time compute, and many others.

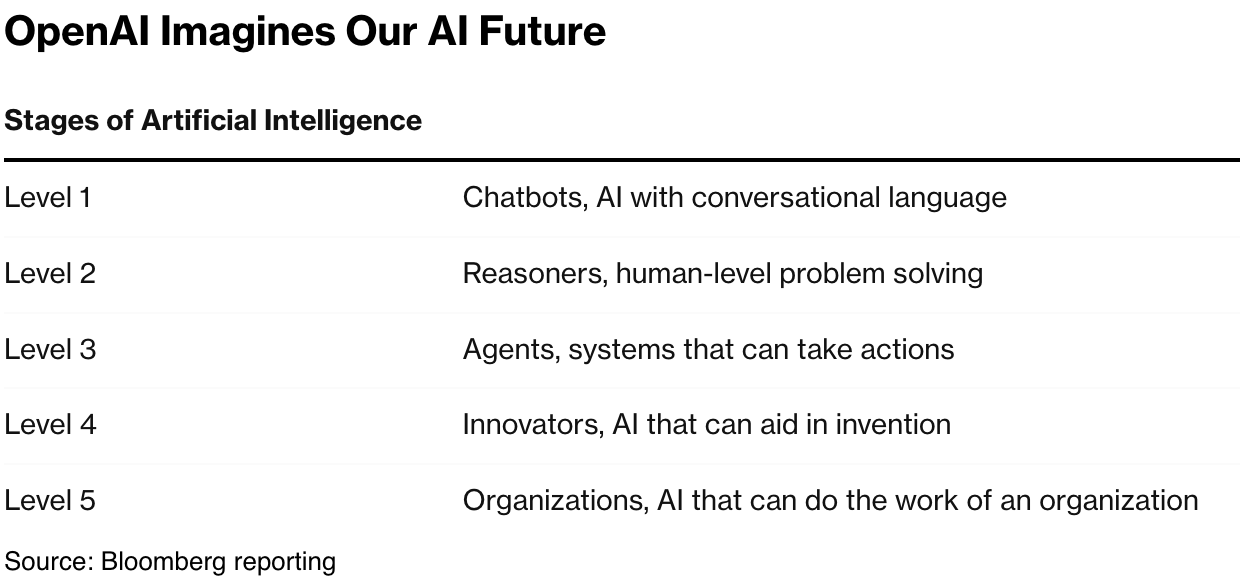

And racing away to AGI, whenever we hit it on the roadmap via AI reasoning, agents, and beyond.

For more details on all the impressive products, here are some useful links to scan.

Axios summarizes it well in “Google is putting more AI in more places”:

“Google used this week’s I/O developer conference to announce a slew of new AI features and experiences, along with a new $250-a-month subscription service for those who want to access the company’s latest tools.”

“Why it matters: Google is aiming to prove that it can make its core products better through AI without displacing its highly lucrative advertising and search businesses.”

“Key announcements Google made Tuesday:”

- “It debuted Flow, a new AI filmmaking tool that draws on the company’s latest Veo 3 engine and adds audio capabilities. The company also announced Imagen 4, its latest image generator, which Google says is better at rendering text, among other improvements.”

- “Google said it would soon make broadly available its latest Gemini 2.5 Flash and Pro models and add a new reasoning mode for the Pro model called Deep Think.”

- “Google will make its AI Mode, a chat-like version of search, broadly available in the U.S., and it’s updating the underlying model to use a custom version of Gemini 2.5.”

- “It’s also beginning to allow customers to give large language models access to their personal data — starting with the ability to generate personalized smart replies to Gmail messages that draw on a user’s email history. That feature will be available for paying subscribers this summer, Google CEO Sundar Pichai said.”

- “On the coding front, Google offered further details on Jules, its autonomous agent, which is now available in public beta. Microsoft and OpenAI have also announced new coding agents in recent days.”

“For those who want to make sure they can have the most access to Google’s AI models, the company is introducing Google AI Ultra, a $250-a-month service.”

- “The high-end subscription — an alternative to the standard $20-a-month basic service — includes access to a number of its most powerful AI agents, models and services, as well as YouTube Premium and 30TB of cloud storage.”

Even in new and upcoming product areas, the company delighted reviewers with products yet to come. Examples here include Google Beam, an AI powered corporate videoconferencing setup that impressed first-time testers.

And then there are their prototypes of AI Google XR Smart Glasses with a built-in display, all yet to be released.

These glasses would go up against Meta’s successful Ray-Ban partnered AI smart glasses, which have sold over 2 million units thus far. The industry is racing to perfect these going forward in various forms and iterations.

“Also in Google’s cavalcade of demos, the company showed an early prototype of its Android XR glasses searching, taking photos and performing live translation.”

- “The glasses have audio and camera features similar to Meta’s Ray-Ban smart glasses, but also offer an optional display. (Meta is also said to be working on a version of its glasses with a built-in display for later this year.)”

- “Partners include Samsung and eyewear makers Warby Parker and Gentle Monster.”

Again, a lot to take in here, especially in the details of the various Google AI products and services being offered.

This has been a week of AI-centric developer conferences that I’ve discussed, with more to come.

“The big picture: Google’s event comes a day after Microsoft made a slew of announcements at its Build conference in Seattle. Anthropic, meanwhile, is holding its first-ever developer conference on Thursday in San Francisco.”

But the big picture is that Google is hitting its AI stride this AI Tech Wave with Gemini and more, despite the concerns many have had to date. They have the ability to deliver the ‘intelligence tokens’ to drive it all at scale.

Even with the competition still coming on strong. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)