AI: Jensen shows off Nvidia roadmap at Computex 2025. RTZ #725

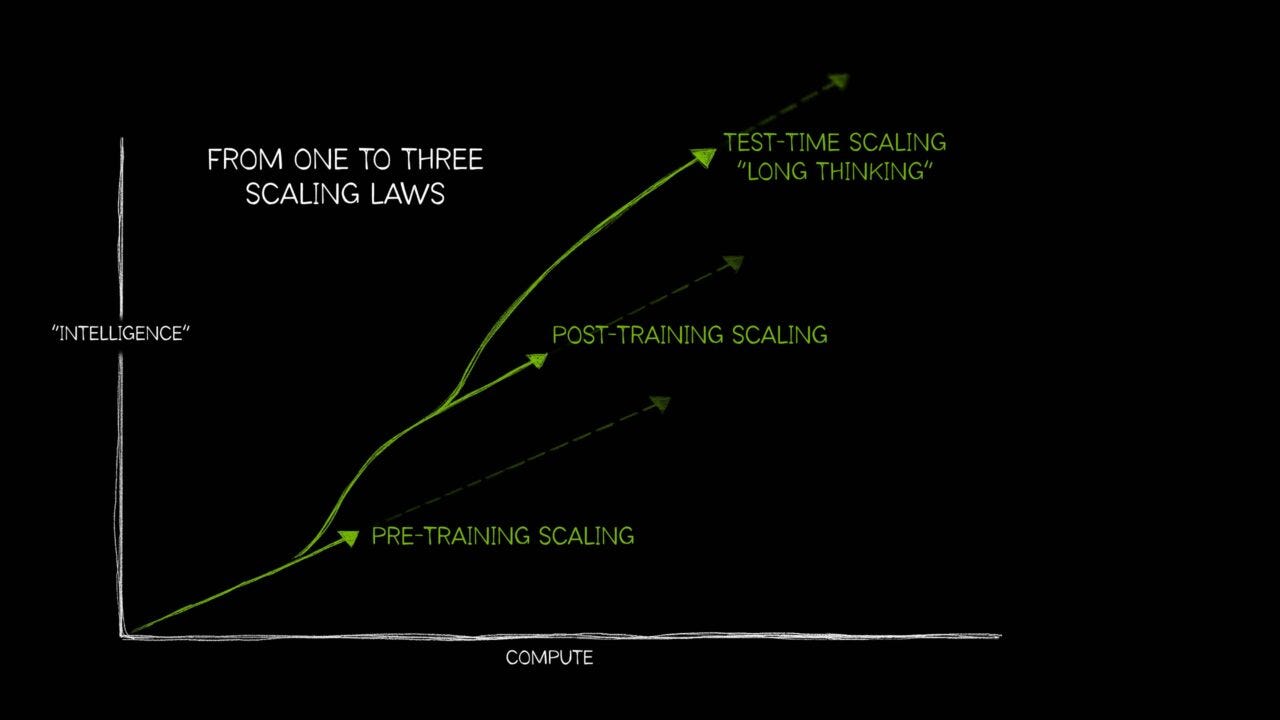

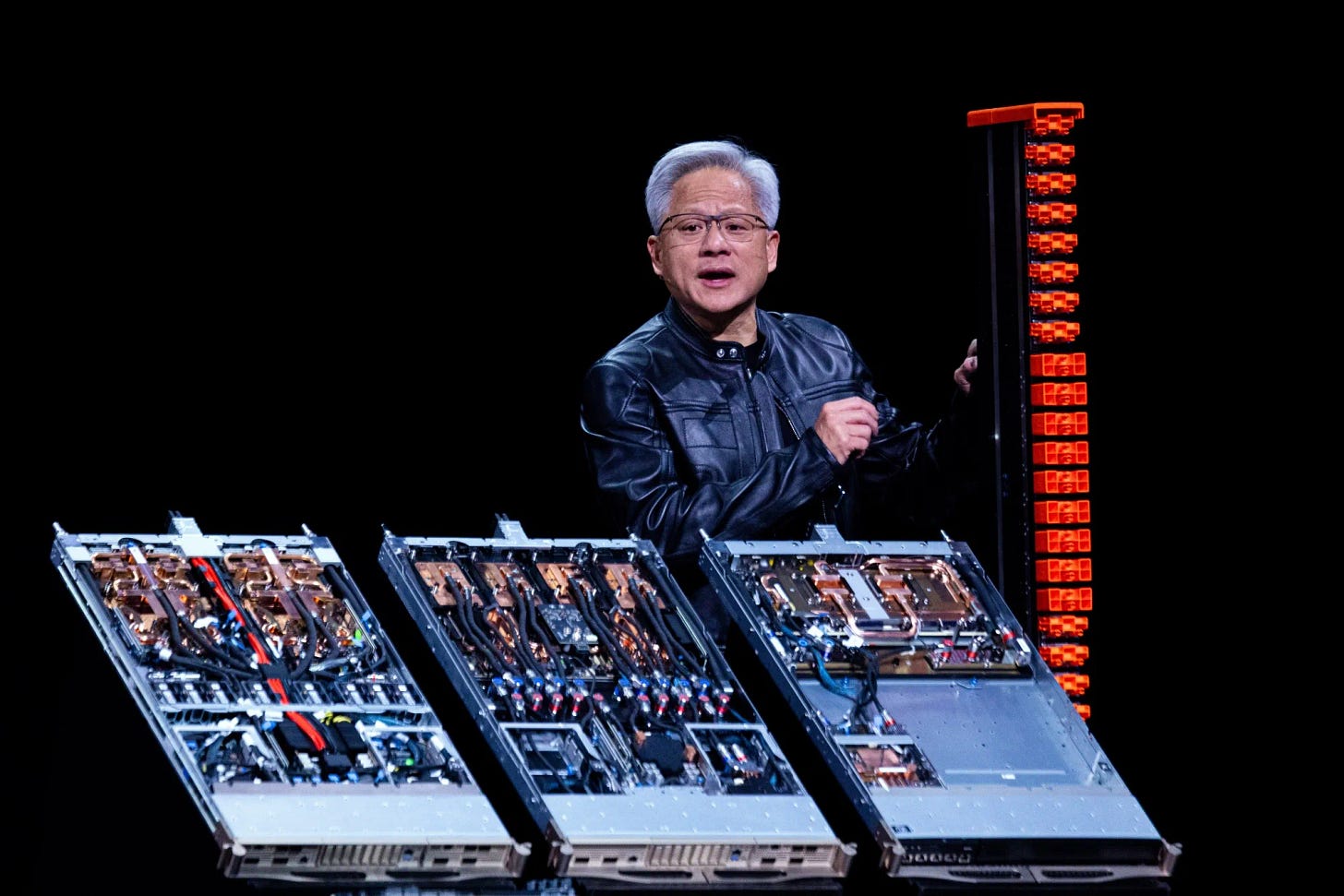

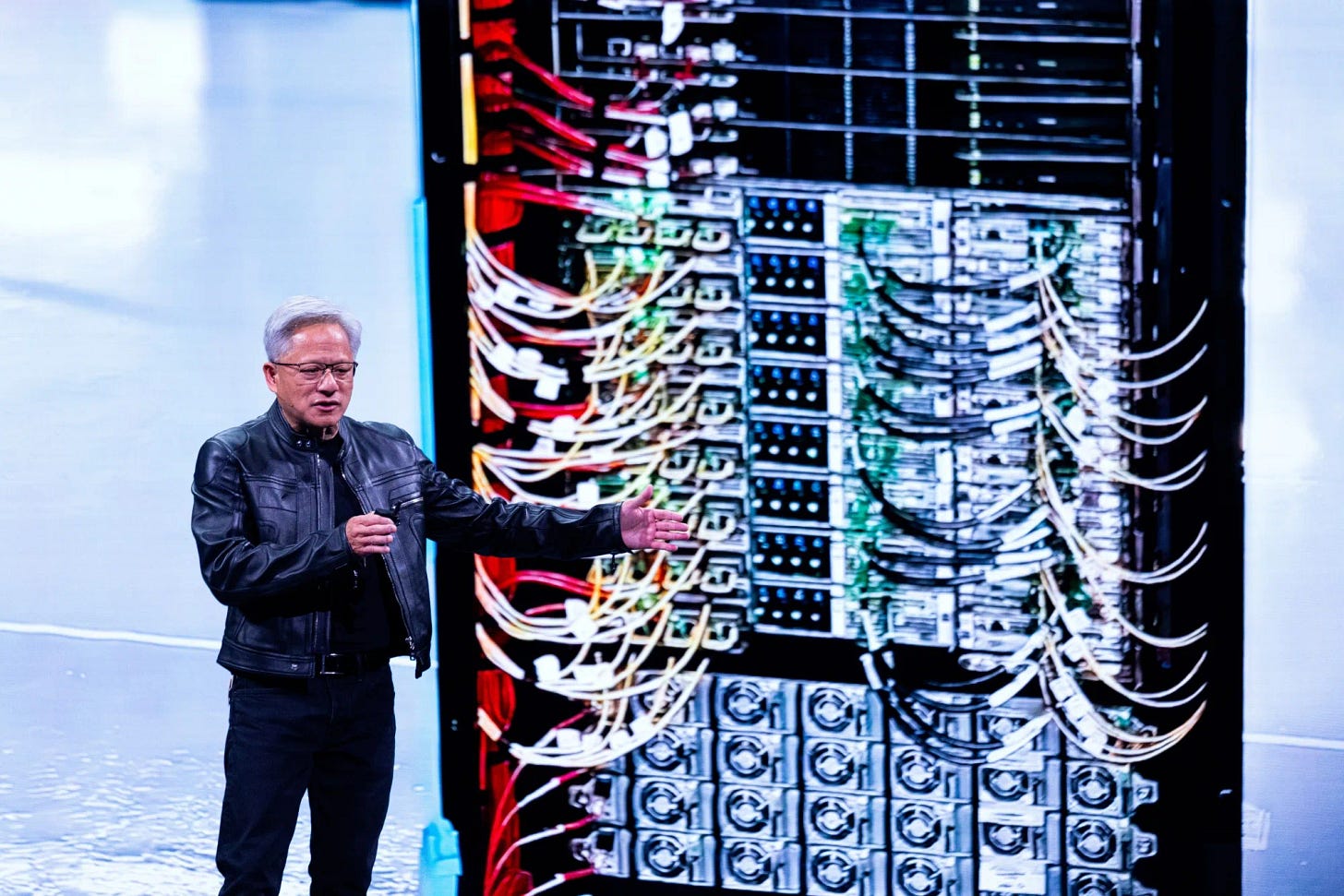

Nvidia laid out its updated roadmap, from AI data centers to AI data factories, at Nvidia GTC Taipei, held at Computex 2025 in Taiwan, a major annual conference for the technology industry. While the whole presentation is worth watching, I’d like to highlight some key points that are very relevant to where we are in this AI Tech Wave thus far.

This presentation by founder/CEO Jensen Huang builds on the beefy AI Infrastructure roadmap he rolled out in March 2025 at GTC (GPU Technology Conference), and of course the 2024 GTC as well. It all illustrates how the technologies, and the opportunities to build out infrastructure, are accelerating at scale for developers and end customers globally.

Bloomberg summarizes the rollouts well in “Nvidia Opens AI Ecosystem to Rival Chipmakers in Global Push”:

“Nvidia Corp. Chief Executive Officer Jensen Huang outlined plans to let customers deploy rivals’ chips in data centers built around its technology, a move that acknowledges the growth of in-house semiconductor development by major clients from Microsoft Corp. to Amazon.com Inc.”

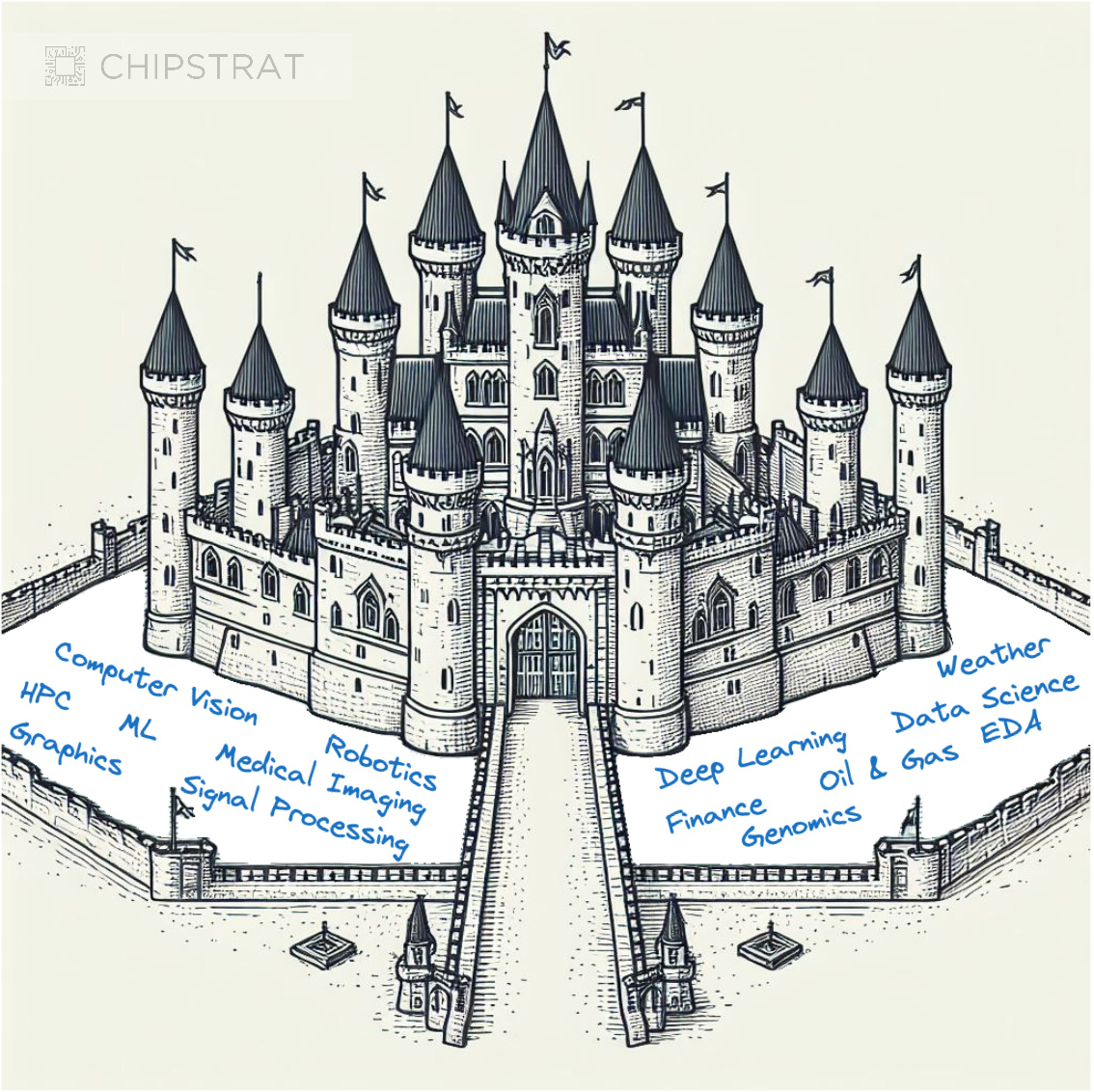

Remember that Nvidia’s top customers, the LLM AI companies (Microsoft/OpenAI, Google, Amazon, Meta, Tesla et al.), which are expected to account for almost half of its annual revenues over the next few years, are also building their own chips to compete with Nvidia down the road. And remember that Nvidia has many pieces to its ‘moat’, especially the CUDA software platform of kernels and libraries that most developers globally rely on to build AI applications and services.

And Nvidia has one of the densest arrays of hardware and software partners around its technologies, many of them in Taiwan and Asia:

“Huang on Monday kicked off Computex in Taiwan, Asia’s biggest electronics forum, dedicating much of his nearly two-hour presentation to celebrating the work of local supply chain partners. But his key announcement was a new NVLink Fusion system that allows the building of more customized artificial intelligence infrastructure, combining Nvidia’s high-speed links with semiconductors from other providers for the first time.”

The company announced ‘NVLink Fusion’ products that allow many of its vendor partners and customers to build their own customized AI infrastructure around semi-custom systems built with Nvidia components:

“To date, Nvidia has only offered complete computer systems built with its own components. This opening-up gives data center customers more flexibility and allows a measure of competition, while still keeping Nvidia technology at the center. NVLink Fusion products will give customers the option to use their own central processing units with Nvidia’s AI chips, or twin Nvidia silicon with another company’s AI accelerator.”

“Santa Clara, California-based Nvidia is keen to shore up its place at the heart of the AI boom, at a time investors and some executives express uncertainty whether spending on data centers is sustainable. The tech industry is also confronting profound questions about how the Trump administration’s tariffs regime will shake up global demand and manufacturing.”

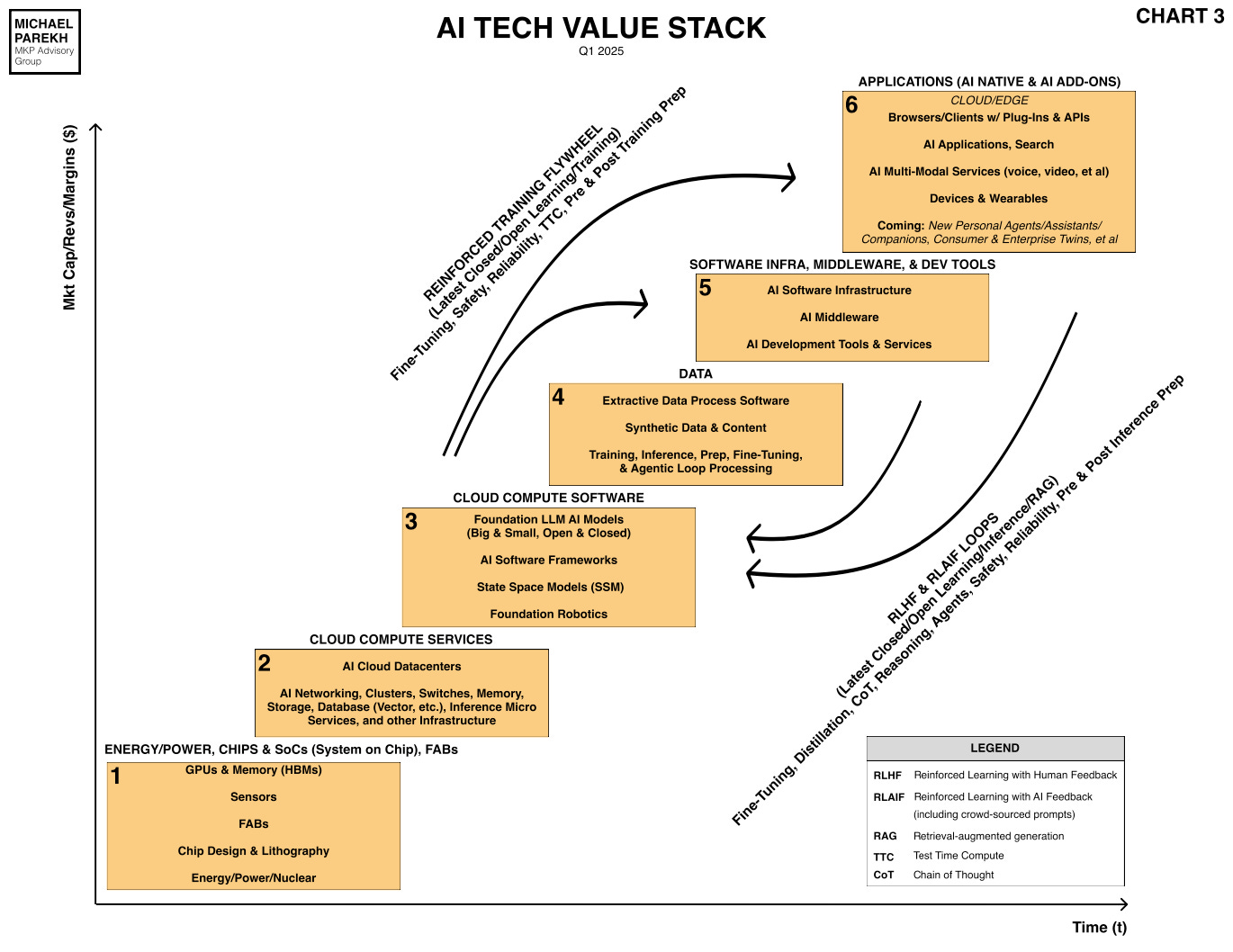

All this helps buttress Nvidia’s position in the AI Tech Stack from Box 2 all the way to Box 6 below:

“It gives an opportunity for hyperscalers to build custom silicon with NVLink built in,” said Ian Cutress, chief analyst at research firm More Than Moore. “Whether they do or not will depend on if the hyperscaler believes Nvidia will be here forever and be the keystone. I can see others shun it so they don’t fall into the Nvidia ecosystem any harder than they have to.”

“Apart from the data center opening, Huang on Monday touched on a series of product enhancements from faster software to chipset setups intended to speed up AI services. That’s a contrast with the 2024 edition, when the Nvidia CEO unveiled next-generation Rubin and Blackwell platforms, energizing a tech sector then searching for ways to ride the post-ChatGPT AI boom.”

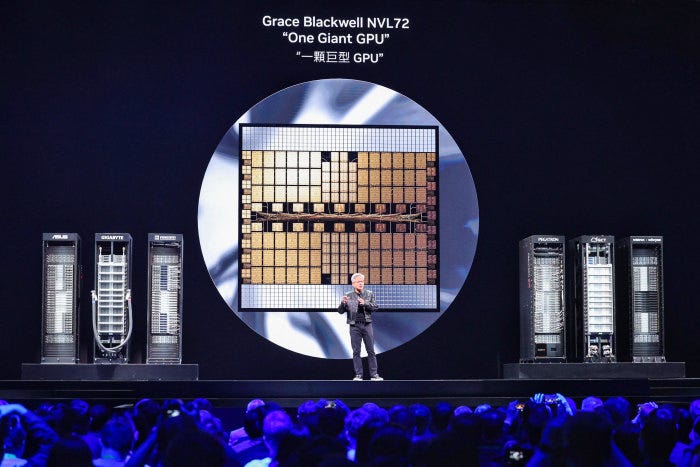

There was a modest upgrade to the current state-of-the-art Blackwell AI GPU infrastructure:

“Huang opened Computex with an update on timing for Nvidia’s next-generation GB300 systems, which he said are coming in the third quarter of this year. They’ll mark an upgrade on the current top-of-the-line Grace Blackwell systems, which are now being installed by cloud service providers.”

“At Computex, Huang also introduced a new RTX Pro Server system, which he said offered four times better performance than Nvidia’s former flagship H100 AI system with DeepSeek workloads. The RTX Pro Server is also 1.7 times as good with some of Meta Platforms Inc.’s Llama model jobs. That new product is in volume production now, Huang said.”

And some notable infrastructure investments in Taiwan as well:

“On Monday, he made sure to thank the scores of suppliers from TSMC to Foxconn that help build and distribute Nvidia’s tech around the world. Nvidia will partner with them and the Taiwanese government to build an AI supercomputer for the island, Huang said. It’s also going to build a large new office complex in Taipei.”

In many ways, Jensen highlighted Nvidia’s deep connection to the land where he was born. He had his parents in the audience.

“‘When new markets have to be created, they have to be created starting here, at the center of the computer ecosystem,’ Huang, 62, said about his native island.”

And of course all this is important in light of the ‘Frenemies’ nature of Nvidia’s core business:

“While Nvidia remains the clear leader in the most advanced AI chips, competitors and partners alike are racing to develop their own comparable semiconductors, whether to gain market share or widen the range of prospective suppliers for these pricey, high-margin components. Major customers such as Microsoft and Amazon are trying to design their own bespoke parts, and that risks making Nvidia less essential to data centers.”

The new offerings also expand commercial opportunities for Nvidia’s core partners:

“The move to open up the Nvidia AI server ecosystem comes with several partners already signed up. MediaTek Inc., Marvell Technology Inc. and Alchip Technologies Ltd. will create custom AI chips that work with Nvidia processor-based gear, Huang said. Qualcomm Inc. and Fujitsu Ltd. plan to make custom processors that will work with Nvidia accelerators in the computers.”

And Nvidia had offerings rolling out for local AI processing with the ‘Small AI’ models that I’ve discussed here before:

“Nvidia’s smaller-scale computers — the DGX Spark and DGX Station, which were announced this year — are going to be offered by a broader range of suppliers. Local partners Acer Inc., Gigabyte Technology Co. and others are joining the list of companies offering the portable and desktop devices starting this summer, Nvidia said. That group already includes Dell Technologies Inc. and HP Inc.”

And of course its offerings for the ‘Physical AI’ world of AI-driven robots:

“The company also discussed new software for robots that would help more rapidly train them [in] simulation scenarios. Huang talked up the potential and rapid growth of humanoid robots, which he sees as potentially the most exciting avenue for so-called physical AI.”

And its new ways to roll out ‘AI Factories’:

“Nvidia said it’s offering detailed blueprints that will help accelerate the process of building ‘AI factories’ by corporations. It will provide a service to allow companies that don’t have in-house expertise in the multistep process of building their own AI data centers to do so.”

“In addition, the company introduced a new piece of software called DGX Cloud Lepton. This will act as a service to help cloud computing providers, such as CoreWeave Inc. and SoftBank Group Corp., automate the process of hooking up AI developers with the computers they need to create and run their services.”

Overall reaction to the keynote was positive:

“The keynote was ‘packed with new product launches and developments for the Age of AI,’ Rosenblatt Securities analyst Kevin Cassidy wrote in a note following the event. ‘We view the announcement of the NVLink Fusion for custom silicon as the most important,’ he added, as this is ‘a strategic move by Nvidia to take part in upcoming custom silicon road maps from customers, including hyperscalers.’”

For this year, the Blackwell chips remain the heart of the offerings, followed by Rubin and Feynman down the road.

The WSJ also has a good piece on the conference for additional details.

For now, the presentation highlighted Nvidia’s core focus on its technology products and partnerships, even as the company navigates the global realities of US/China geopolitics, tariffs and other frictions.

The company under Jensen’s leadership is certainly walking and chewing gum at the same time in this AI Tech Wave. And doing it at double time, while accelerating. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)