AI: Latest AI releases have notable pros and cons. RTZ #699

This year has seen a relentless flow of LLM AI innovations, led by industry leaders OpenAI, Amazon/Anthropic, Meta, Google and others in the US, and by DeepSeek, Alibaba, and others in China.

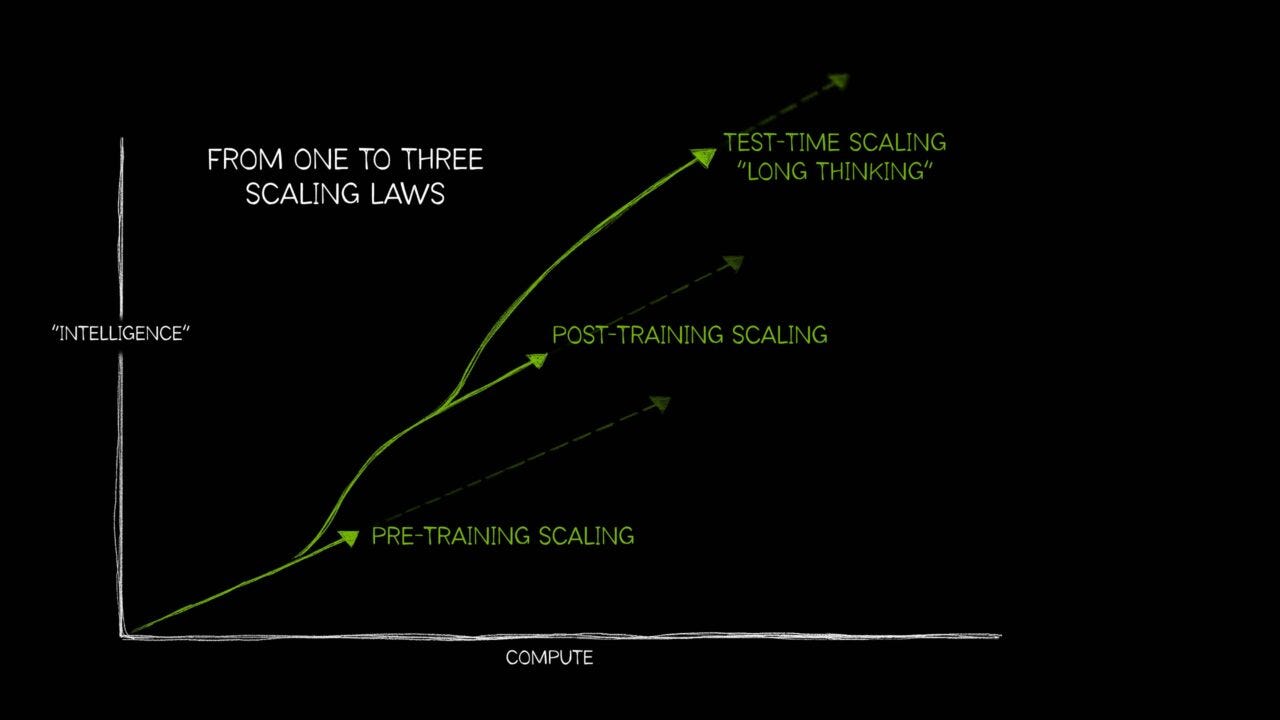

And as I’ve described, many of the product innovations and iterations in AI models, AI Reasoning, AI Agents, AI Tools and beyond on the way to AGI are barely constructed commercial products, even as the industry spends hundreds of billions on AI Capex. And that’s BEFORE the ‘trifurcation of AI Scaling’ I’ve discussed.

These are new concoctions barely out of the oven. Developers and users are finding that, as exciting as their capabilities are, there are often issues to be worked out. In many cases, the new products are LESS reliable in terms of hallucinations and outright ‘deceptions’. It’s worth a closer discussion.

Axios lays out the pros and cons well in “Advanced AI gets more unpredictable”, and it’s worth unpacking:

“The rave reviews OpenAI’s latest models have been winning come with an asterisk: Experts are also finding that they’re erratic — they break previous records for some tasks but backslide in other ways.”

“Why it matters: “Frontier AI models” keep pushing into new territory, but their progress hasn’t become any more scientific or predictable in the two-and-a-half years since ChatGPT took tech by storm.”

They then take OpenAI’s latest and greatest as a case in point:

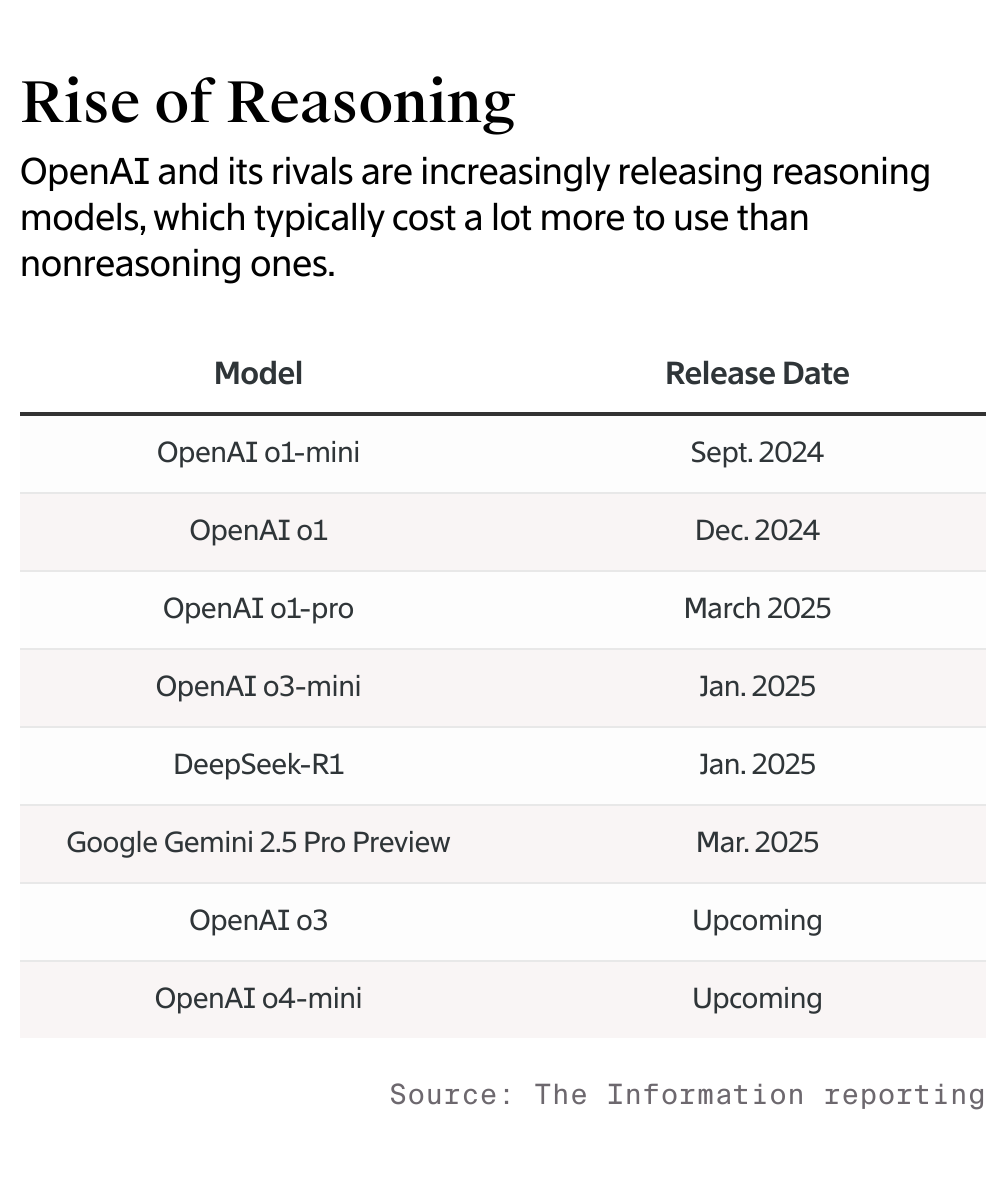

“Catch up quick: OpenAI released the o3 and smaller o4-mini models a week ago and called them “the smartest models we’ve released to date.”

- “The company and early testers lauded o3 for its overall reasoning prowess — its ability to respond to a user prompt by planning, executing and explaining a series of planned steps.”

- “They also highlighted o3’s reliability in conducting web searches and using other digital tools without constant user supervision or intervention.”

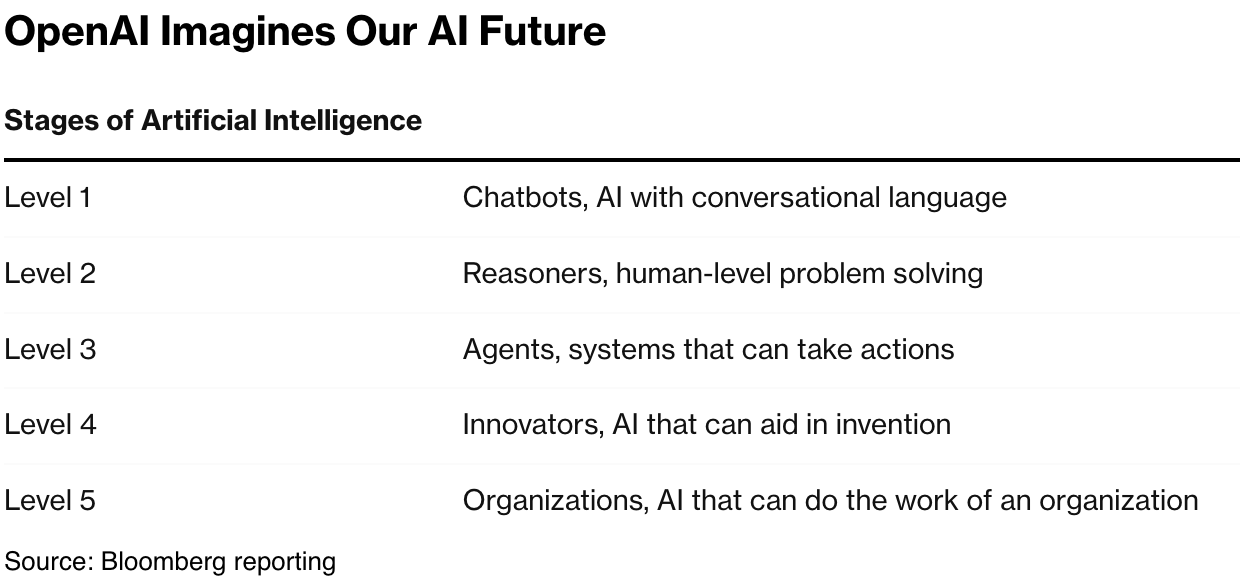

These OpenAI innovations supercharge product capabilities from Level 1 to 3 below:

The applause has been loud and wide:

“o3 won praise from reviewers not only for bread-and-butter AI work like writing, drawing, calculating and coding but also for advances in vision capabilities.”

- “One popular — and, for privacy experts, potentially alarming — trick that went viral: Using o3 to look at virtually any digital photo and identify where it was taken.”

The flurry of AI reasoning and agentic innovations this year has been breathtaking:

“What they’re saying: “These models can run searches as part of the chain-of-thought reasoning process they use before producing their final answer. This turns out to be a huge deal,” the developer Simon Willison wrote.”

- “This is the biggest ‘wow’ moment I’ve had with a new OpenAI model since GPT-4,” Every’s Dan Shipper reported.”

- “Economist-blogger Tyler Cowen declared that o3 heralded the advent of AGI: “I think it is AGI, seriously. … Benchmarks, benchmarks, blah blah blah. Maybe AGI is like porn — I know it when I see it. And I’ve seen it.”

But there has been just as much discussion of issues to address and fix:

“Yes, but: Plenty of reviewers found reasons to criticize o3, including math errors and deceptions.”

- “A study of models’ performance in financial analysis placed o3 at the top of the heap — but it still only provided accurate results 48.3% of the time, and its cost-per-query was the highest by far, at $3.69. (The Washington Post has more on the study.)”

“Between the lines: Intriguingly, OpenAI notes that despite o3’s impressive capabilities, it’s actually regressing in some areas — like its tendency to “hallucinate,” or make up incorrect answers.”

- “In one widely used accuracy benchmark test, OpenAI found that o3 hallucinates at more than twice the rate of its predecessor, o1.”

- “o3 also answers more questions — and gets more of them right — than o1. “More research is needed” to understand why o3’s error rate jumped, OpenAI says.”

So the glass is both half full and half empty, as the industry ‘keeps it coming’:

“Zoom out: AI analyst Ethan Mollick describes o3’s impressive but scattershot performance as an example of “the jagged frontier”: “In some tasks, AI is unreliable. In others, it is superhuman.”

- “Mollick argues that “the latest models represent something qualitatively different from what came before, whether or not we call it AGI. Their agentic properties, combined with their jagged capabilities, create a genuinely novel situation with few clear analogues.”

And the piece underlines that much remains to be done:

“Our thought bubble: Software makers and programmers have spent decades trying to make their work more reliable, scalable and flexible, and they’ve made plenty of progress.”

- “Making AI is newer, stranger and so far not well enough understood to be turned into a predictable discipline.”

“The bottom line: Designing, building and training AI models remains stubbornly resistant to developers’ efforts to impose scientific rigor on their field or duplicate their results.”

- “Apparently, this process is still more like raising a kid than building a bridge.”

- “That adds to the sense of mystery and possibility surrounding AI development — but also frustrates efforts to domesticate it or harness it for economic advantage.”

I think the whole piece is a balanced reminder of how much there is to celebrate, and of how much hard work remains ahead.

As I noted in a piece earlier this year titled “‘Miles to go before I Sleep’”:

“OpenAI and others’ work is just getting started, a decade in. The industry has some hard tasks ahead in a frenetically scaling AI industry”.

And that’s where we are in this AI Tech Wave. As exciting as the latest AI innovations are, so much heavy lifting is yet to be done. Stay tuned.

(NOTE: The discussions here are for information purposes only, and are not meant as investment advice at any time. Thanks for joining us here.)