AI: 'Making it up in Volume' this AI tech wave. RTZ #831

We’re going to have to turn up the volume in AI compute, even beyond 11, as the popular Spinal Tap clip goes. We’re seeing the reason in the current pricing environment for AI beyond the training of LLM AI models, on the ‘inference side’. And to mix our metaphors, the ‘Volume’ above also leads into the ‘making it up in volume’ side of things. Let’s unpack:

I’ve long discussed how Variable costs in AI are a critical difference in this AI Tech Wave vs prior tech waves. Not to mention a key challenge to manage pricing for AI products and services up and down the AI Tech Stack.

Not just an issue for the LLM AI companies like OpenAI in Boxes 3 on, but also for companies all the way up in the holy grail Box #6 in AI Applications and Services.

That complexity is coming through now as hundreds of companies above the LLM AI layer figure out how to charge for countless types of AI reasoning and agentic activities across countless applications, AI and otherwise.

The WSJ re-visits this key topic in “Cutting-Edge AI Was Supposed to Get Cheaper. It’s More Expensive Than Ever.”:

“With models doing more ‘thinking,’ the small companies that buy AI from the giants to create apps and services are feeling the pinch”

“As artificial intelligence got smarter, it was supposed to become too cheap to meter. It’s proving to be anything but.”

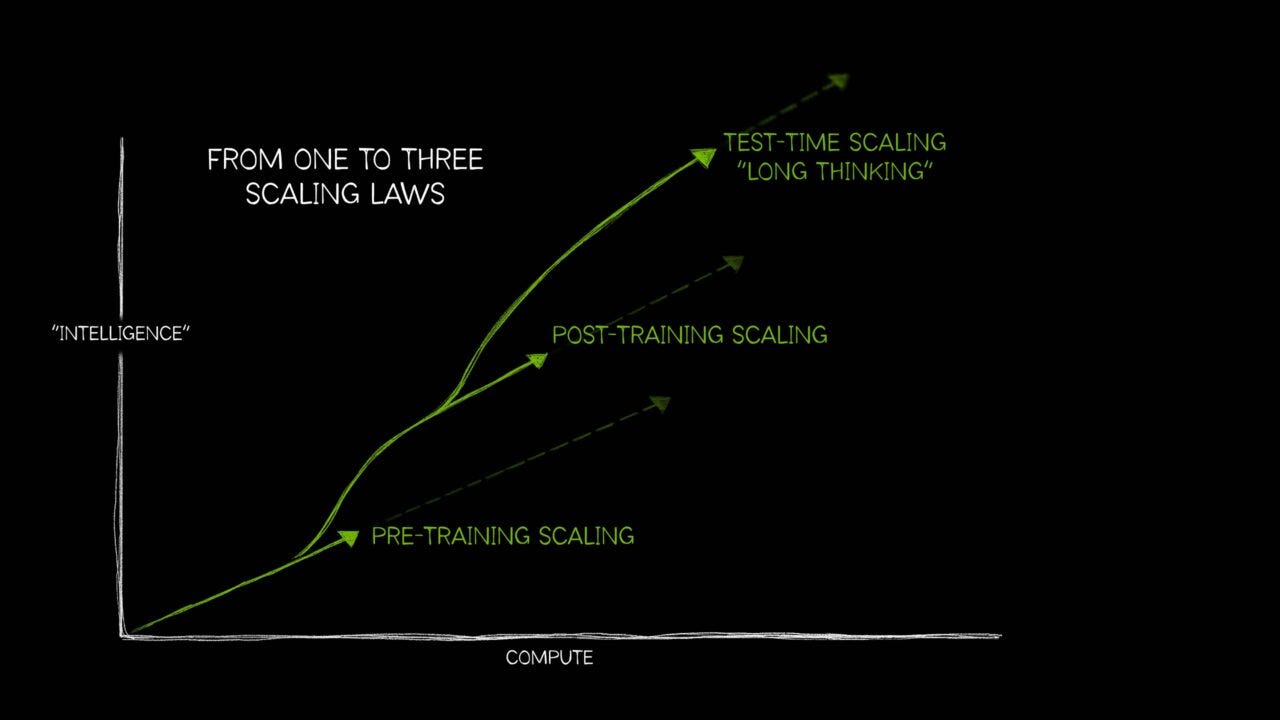

But that assumption presumes that it’s just one type of AI computation, not the infinite types of AI computation that AI Scaling opens up as it brings so many ways to apply AI. Another topic I’ve discussed.

“Developers who buy AI by the barrel, for apps that do things like make software or analyze documents, are discovering their bills are higher than expected—and growing.”

Let us count the ways:

“What’s driving up costs? The latest AI models are doing more “thinking,” especially when used for deep research, AI agents and coding. So while the price of a unit of AI, known as a token, continues to drop, the number of tokens needed to accomplish many tasks is skyrocketing.”

“It’s the opposite of what many analysts and experts predicted even a few months ago. That has set off a new debate in the tech world about who the AI winners and losers will be.”

And truly ‘smart’ AI applications and services are going to need a boatload of Compute…not just a ‘Bigger Boat’ in terms of trillion dollar AI Data Centers.

“The arms race for who can make the smartest thing has resulted in a race for who can make the most expensive thing,” says Theo Browne, chief executive of T3 Chat.”

“Browne should know. His service allows people to access dozens of different AI models in one place. He can calculate, across thousands of user queries, his relative costs for the various models.”

The answers are in the details. Something I covered recently in the ‘good news, bad news’ piece on OpenAI’s success with various AI applications.

“Remember, AI training and AI inference are different. Training those huge models continues to demand ever more costly processing, delivered by those AI supercomputers you’ve probably heard about. But getting answers out of existing models—inference—should be getting cheaper fast.”

The good news is that it is getting cheaper in unit cost terms, with a choice of AI models to use across the closed and open spectrum. The bad news is that it’s all going to need, collectively, a LOT more inference compute across ALL those models.

“Sure enough, the cost of inference is going down by a factor of 10 every year, says Ben Cottier, a former AI engineer who is now a researcher at Epoch AI, a not-for-profit research organization that has received funding from OpenAI in the past.”

Remember the AI costs mount up far beyond the basic chatbot layer, on ‘the road to AGI’:

“Despite that drop in cost per token, what’s driving up costs for many AI applications is so-called reasoning. Many new forms of AI re-run queries to double-check their answers, fan out to the web to gather extra intel, even write their own little programs to calculate things, all before returning with an answer that can be as short as a sentence. And AI agents will carry out a lengthy series of actions based on user prompts, potentially taking minutes or even hours.”

“As a result, they deliver meaningfully better responses, but can spend a lot more tokens in the process. Also, when you give them a hard problem, they may just keep going until they get the answer, or fail trying.”

Some granular examples of the costs of ‘Intelligence tokens’, both input and output, are helpful. Mostly to the benefit of Nvidia and other AI Infrastructure companies in the middle boxes above:

“Here are approximate amounts of tokens needed for tasks at different levels, based on a variety of sources:”

• “Basic chatbot Q&A: 50 to 500 tokens”

• “Short document summary: 200 to 6,000 tokens”

• “Basic code assistance: 500 to 2,000 tokens”

• “Writing complex code: 20,000 to 100,000+ tokens”

• “Legal document analysis: 75,000 to 250,000+ tokens”

• “Multi-step agent workflow: 100,000 to one million+ tokens”
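To see why falling token prices do not automatically mean falling bills, here is a rough back-of-the-envelope sketch. It uses the token ranges quoted above and the "factor of 10 every year" price decline cited earlier. The comparison itself is my own simplifying assumption: a basic chatbot answer priced a year ago versus a heavier task priced today, both at the high end of their quoted ranges, with every "+" upper bound treated as the stated figure.

```python
# Token ranges per task as quoted above: (low, high).
# Assumption: "+" upper bounds are treated as the stated number.
TOKEN_RANGES = {
    "Basic chatbot Q&A": (50, 500),
    "Short document summary": (200, 6_000),
    "Basic code assistance": (500, 2_000),
    "Writing complex code": (20_000, 100_000),
    "Legal document analysis": (75_000, 250_000),
    "Multi-step agent workflow": (100_000, 1_000_000),
}

# The "factor of 10 every year" decline in inference cost cited earlier.
PRICE_DROP_PER_YEAR = 10


def relative_cost(task, baseline="Basic chatbot Q&A",
                  price_drop=PRICE_DROP_PER_YEAR):
    """Cost of `task` at today's price, as a multiple of the baseline
    task at last year's price (high end of each token range)."""
    token_multiple = TOKEN_RANGES[task][1] / TOKEN_RANGES[baseline][1]
    return token_multiple / price_drop


if __name__ == "__main__":
    for task in TOKEN_RANGES:
        print(f"{task}: {relative_cost(task):,.1f}x last year's chatbot answer")
```

Under these assumptions, a multi-step agent workflow uses 2,000 times the tokens of a basic chatbot answer, so even after a 10x price drop the request costs about 200 times as much.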

Which brings the ultimate question to answer for the companies and their investors:

“Hence the debate: If new AI systems that use orders of magnitude more tokens just to answer a single request are driving much of the spike in demand for AI infrastructure, who will ultimately foot the bill?”

“Ivan Zhao, chief executive officer of productivity software company Notion, says that two years ago, his business had margins of around 90%, typical of cloud-based software companies. Now, around 10 percentage points of that profit go to the AI companies that underpin Notion’s latest offerings.”

So AI is bending the business model far beyond the AI Infrastructure layer in the tech stack chart above, as I discussed in detail here and here.

These trends are especially showing up in the pricing for the white hot area of AI Coding, a critical market for AI companies large and small. Especially with the current fashionable pursuit of ‘vibe coding’:

“The challenges are similar—but potentially more dire—for companies that use AI to write code for developers. These “vibecoding” startups, including Cursor and Replit, have recently adjusted their pricing. Some users of Cursor have, under the new plan, found themselves burning through a month’s worth of credits in just a few days. That’s led some to complain or switch to competitors.”

“And when Replit updated its pricing model with something it calls “effort-based pricing,” in which more complicated requests could cost more, the world’s complaint box, Reddit, filled up with posts by users declaring they were abandoning the vibecoding app.”

But customers still pay the higher prices:

“Despite protests from a noisy minority of users, “we didn’t see any significant churn or slowdown in revenue after updating the pricing model,” says Replit CEO Amjad Masad. The company’s plan for enterprise customers can still command margins of 80% to 90%, he adds.”

All this will of course settle down as markets ebb and flow, and find their levels:

“Some consolidation in the AI industry is inevitable. Hot markets eventually slow down, says Martin Casado, a general partner at venture-capital firm Andreessen Horowitz. But the fact that some AI startups are sacrificing profits in the short term to expand their customer bases isn’t evidence that they are at risk, he adds.”

“Casado sits on the boards of several of the AI startups now burning investor cash to rapidly expand, including Cursor. He says some of the companies he sits on the boards of are already pursuing healthy margins. For others, it makes sense to “just go for distribution,” he adds.”

Fundamentally, it all means that the LLM AI and the AI Infrastructure companies can continue to invest unprecedented amounts in AI capex, for now.

“The big companies creating cutting-edge AI models can, at least for now, afford to collectively spend more than $100 billion a year building out infrastructure to train and deliver AI. That includes well-funded startups OpenAI and Anthropic, as well as companies like Google and Meta that redirect profits in other lines of business to their AI ventures.”

“For all of that investment to pay off, businesses and individuals will eventually have to spend big on these AI-powered services and products. There is an alternative, says Browne: Consumers could just use cheaper, less-powerful models that require fewer resources.”

The market will also figure out a way to sort the booming inference demand for intelligence tokens and route it to the appropriate layers of less expensive AI models. This will be an accelerating trend:

“For T3, his many-model AI chatbot, Browne is beginning to explore ways to encourage this behavior. Most consumers are using AI chatbots for things that don’t require the most resource-intensive models, and could be nudged toward “dumber” AIs, he says.”
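As a purely illustrative sketch of what that kind of nudging could look like in code, the snippet below sends each request to the cheapest model tier that can handle its estimated size. The tier names, the token cutoffs, and the `route` function are all hypothetical. This is not T3 Chat's actual logic, which the article does not describe.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    max_tokens: int  # hypothetical cutoff: largest job this tier handles


# Hypothetical tiers, ordered cheapest first.
TIERS = [
    Tier("small", 2_000),
    Tier("mid", 100_000),
    Tier("frontier", 10**9),  # effectively an unbounded catch-all
]


def route(estimated_tokens: int) -> str:
    """Return the cheapest tier whose cutoff covers the estimate."""
    for tier in TIERS:
        if estimated_tokens <= tier.max_tokens:
            return tier.name
    return TIERS[-1].name


if __name__ == "__main__":
    for tokens in (300, 50_000, 500_000):
        print(f"{tokens:>7,} tokens -> {route(tokens)}")
```

A real router would need a better signal than a raw token estimate, such as task type or a quality check on the cheaper model's answer, but the cost logic is the same: default to the cheapest tier and escalate only when needed.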

And it’s a lesson being applied in spades by the leading LLM AI company, OpenAI, which is on the bleeding edge of these trends:

“This fits the profile of the average ChatGPT user. OpenAI’s CFO said in October that three-quarters of the company’s revenue came from regular Joes and Janes paying $20 a month. That means just a quarter of the company’s revenue comes from businesses and startups paying to use its models in their own processes and products.”

It applies across the slate of OpenAI’s current and future AI applications and services:

“And the difference in price between good-enough AI and cutting-edge AI isn’t small.”

“The cheapest AI models, including OpenAI’s new GPT-5 Nano, now cost around 10 cents per million tokens. Compare that with OpenAI’s full-fledged GPT-5, which costs about $3.44 per million tokens, when using an industry-standard weighted average for usage patterns, says Cottier.”
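To put that gap in per-task terms, here is a minimal sketch that multiplies the two blended prices just quoted by the high-end token counts from the task list earlier in the piece. Treating each task as a single flat token count at one blended price is my simplification. Real bills depend on the input/output mix and on each provider's current rates.

```python
# Blended prices in dollars per million tokens, as quoted above.
PRICE_PER_MILLION = {
    "GPT-5 Nano": 0.10,
    "GPT-5": 3.44,
}

# High-end token counts from the task list earlier in the piece.
TASK_TOKENS = {
    "Basic chatbot Q&A": 500,
    "Writing complex code": 100_000,
    "Multi-step agent workflow": 1_000_000,
}


def task_cost(tokens, price_per_million):
    """Dollar cost of one task at a flat blended per-million-token price."""
    return tokens / 1_000_000 * price_per_million


if __name__ == "__main__":
    for task, tokens in TASK_TOKENS.items():
        costs = ", ".join(
            f"{model}: ${task_cost(tokens, price):.5f}"
            for model, price in PRICE_PER_MILLION.items()
        )
        print(f"{task} ({tokens:,} tokens) -> {costs}")
```

On these figures, a one-million-token agent workflow costs about $3.44 on the full GPT-5 versus about 10 cents on GPT-5 Nano, a gap of roughly 34x on every request.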

“While rate limits and dumber AI could help some of these AI-using startups for a while, it puts them in a bind. Price hikes will drive customers away. And the really big players, which own their own monster models, can lose money while serving their customers directly. In late June, Google offered its own code-writing tool to developers, completely free of charge.”

“Which raises a thorny question about the state of the AI boom: How long can it last if the giants are competing with their own customers?”

That of course brings us to the ‘Frenemies’ question in AI, one we’ve seen in almost every other tech wave before this AI Tech Wave.

Just be assured that the numbers are likely to be bigger, up and down the AI tech stack above. Especially as those inference loops turn faster around the middle boxes above. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)