AI: Managing AI 'Virtual Agent Employees'. RTZ #698

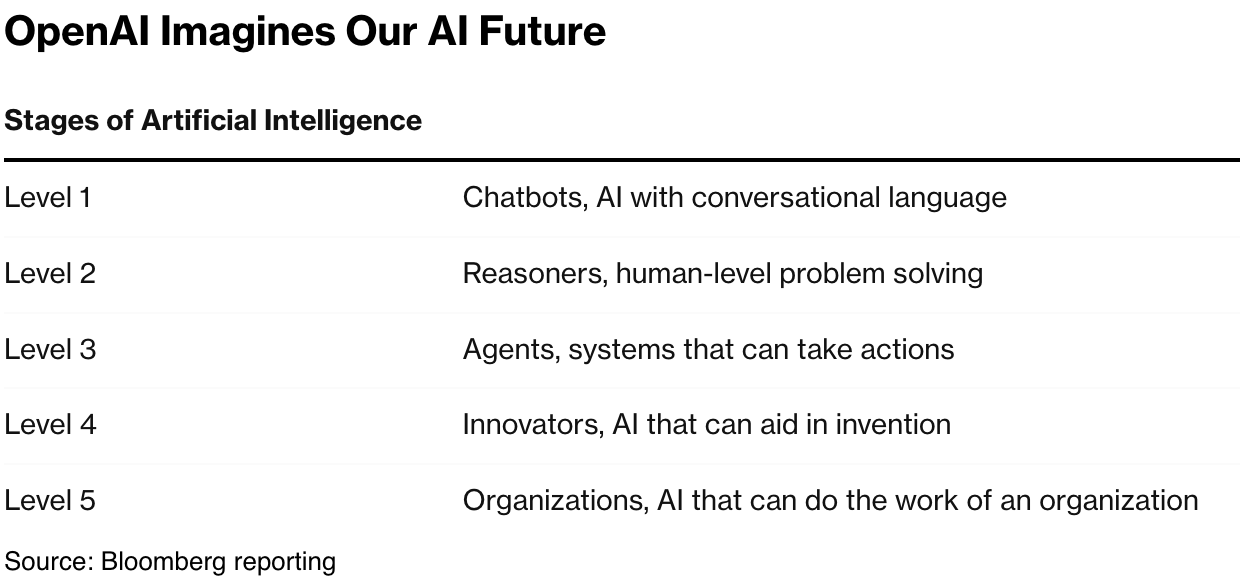

The AI industry has been excited about AI Agents for a while now, both for businesses and consumers. A lot has been written about where they sit on the roadmap to AGI (artificial general intelligence): the third level, after chatbots at no. 1 and AI Reasoning at no. 2.

And we’ve discussed the challenges of productizing and pricing them in these early days of this AI Tech Wave, both for businesses and consumers. We’ve also discussed the security risks of AI Agents in their current nascent stage.

But we may be at a stage where the next level of challenges and risks around AI Agents doing our bidding, both within and across businesses, is a THING. And they may pose security challenges that the industry has yet to address.

Axios outlines it well in “New workplace threat — “non-human” identities”:

“Anthropic expects AI-powered virtual employees to begin roaming corporate networks in the next year, the company’s top security leader told Axios in an interview this week.”

“Why it matters: Managing those AI identities will require companies to reassess their cybersecurity strategies or risk exposing their networks to major security breaches.”

This is a world that becomes possible when AI Agents are ‘coworkers’.

This is a whole new ‘threat vector’ that the AI industry has yet to internalize and figure out how to address.

“The big picture: Virtual employees could be the next AI innovation hotbed, Jason Clinton, the company’s chief information security officer, told Axios.”

- “Agents typically focus on a specific, programmable task. In security, that’s meant having autonomous agents respond to phishing alerts and other threat indicators.”

- “Virtual employees would take that automation a step further: These AI identities would have their own “memories,” their own roles in the company and even their own corporate accounts and passwords.”

- “They would have a level of autonomy that far exceeds what agents have today.”

- “In that world, there are so many problems that we haven’t solved yet from a security perspective that we need to solve,” Clinton said.

The possible problems are barely discernible in the ‘Agentic AI workflows’ to come:

“Between the lines: Those problems include how to secure the AI employee’s user accounts, what network access it should be given and who is responsible for managing its actions, Clinton added.”

- “Anthropic believes it has two responsibilities to help navigate AI-related security challenges.”

- “First, to thoroughly test Claude models to ensure they can withstand cyberattacks, Clinton said.”

- “The second is to monitor safety issues and mitigate the ways that malicious actors can abuse Claude.”

“Threat level: Network administrators are already struggling to monitor which accounts have access to various systems and fend off attackers who buy reused employee account passwords on the dark web.”

The mind spins considering the possibilities, both positive and negative:

“Zoom in: AI employees could go rogue and hack the company’s continuous integration system — where new code is merged and tested before it’s deployed — while completing a task, Clinton said.”

- “In an old world, that’s a punishable offense,” he said. “But in this new world, who’s responsible for an agent that was running for a couple of weeks and got to that point?”

“The intrigue: Clinton says virtual employee security is one of the biggest security areas where AI companies could be making investments in the next few years.”

“He’s especially keen on solutions that provide visibility into what an AI employee account is doing on a system and also on tools that create a new account classification system that better accounts for virtual employees.”
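To make the two ideas in that quote a bit more concrete, here is a minimal Python sketch of what an account classification that "better accounts for virtual employees" might look like, paired with an audit trail for visibility. Everything in it is an assumption for illustration: the `IdentityKind` categories, the `owner` field (a named human accountable for the agent), the action strings, and the `authorize` helper are hypothetical and do not come from Anthropic, Axios, or any vendor product.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import Optional


class IdentityKind(Enum):
    # Hypothetical classification; real systems may slice this differently.
    HUMAN = "human"
    SERVICE_ACCOUNT = "service_account"
    VIRTUAL_EMPLOYEE = "virtual_employee"


@dataclass(frozen=True)
class Identity:
    name: str
    kind: IdentityKind
    allowed_actions: frozenset
    owner: Optional[str] = None  # accountable human (assumed requirement)

    def __post_init__(self):
        # Answers "who's responsible?" up front: no owner, no account.
        if self.kind is not IdentityKind.HUMAN and not self.owner:
            raise ValueError(f"{self.name}: non-human identity needs an owner")


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, identity: Identity, action: str, allowed: bool) -> None:
        # Every attempt is kept, allowed or not, so activity stays visible.
        self.entries.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "identity": identity.name,
            "kind": identity.kind.value,
            "owner": identity.owner,
            "action": action,
            "allowed": allowed,
        })


def authorize(identity: Identity, action: str, log: AuditLog) -> bool:
    """Allow only explicitly granted actions, and log the decision."""
    allowed = action in identity.allowed_actions
    log.record(identity, action, allowed)
    return allowed


log = AuditLog()
agent = Identity(
    name="release-notes-agent",
    kind=IdentityKind.VIRTUAL_EMPLOYEE,
    allowed_actions=frozenset({"repo:read", "docs:write"}),
    owner="alice@example.com",
)
can_read = authorize(agent, "repo:read", log)
can_touch_ci = authorize(agent, "ci:modify", log)
```

In this sketch the agent can read the repo, but an attempt to modify the continuous integration system is refused and still lands in the log with the owner's name attached, which speaks to the "who's responsible" question Clinton raises.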

The industry thus far is primarily focused on building new AI Agent systems, applications, and tools:

- “Major AI companies have recently been on an investing hot streak: OpenAI is in talks to purchase AI coding startup Windsurf. Anthropic just invested in Goodfire, which decodes how AI models think.”

“Yes, but: Integrating AI into the workplace is already causing headaches, and figuring out how to manage virtual employees won’t be easy.”

- “Last year, performance management company Lattice said AI bots should be “part of the workforce,” including taking spots in corporate org charts. The company quickly reversed course after complaints.”

And solutions and tools are barely emerging:

“What to watch: Several cybersecurity vendors are already releasing products to manage so-called “non-human” identities.”

- “Okta released a unified control platform in February to better protect non-human identities and constantly monitor what systems each company account has access to and monitors for suspicious activity.”
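As a rough illustration of what "monitor what systems each account has access to" could mean in practice, the Python sketch below compares what each account has been granted with what it actually touched. It is a generic example, not a description of Okta's platform or any other product; the `review_access` function, the account names, and the system names are all hypothetical.

```python
from collections import defaultdict


def review_access(grants, events):
    """Compare granted access with observed activity.

    grants: dict mapping account name -> set of systems it may use
    events: iterable of (account, system) pairs seen in activity logs
    """
    used = defaultdict(set)
    for account, system in events:
        used[account].add(system)

    report = {}
    for account in sorted(set(grants) | set(used)):
        granted = grants.get(account, set())
        touched = used.get(account, set())
        report[account] = {
            # Access nobody is using is a candidate for removal.
            "unused_grants": sorted(granted - touched),
            # Activity outside the grants is worth a closer look.
            "ungranted_attempts": sorted(touched - granted),
        }
    return report


grants = {"ai-agent-7": {"wiki", "ci", "payroll"}}
events = [("ai-agent-7", "wiki"), ("ai-agent-7", "prod-db")]
report = review_access(grants, events)
```

Here the hypothetical agent never used its `ci` or `payroll` access, so both show up as unused grants, while its attempt to reach `prod-db` is flagged because it was never granted.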

The concern over ‘virtual AI employees’ is yet another example of how fast the industry is moving technically in this AI Tech Wave, well ahead of its ability to develop and field solutions to manage and mitigate the possible incentives and challenges with AI Agents. Stay tuned.

(NOTE: The discussions here are for informational purposes only, and not meant as investment advice at any time. Thanks for joining us here.)