AI: Meta's 'Behemoth' Llama LLM AI difficulties. RTZ #721

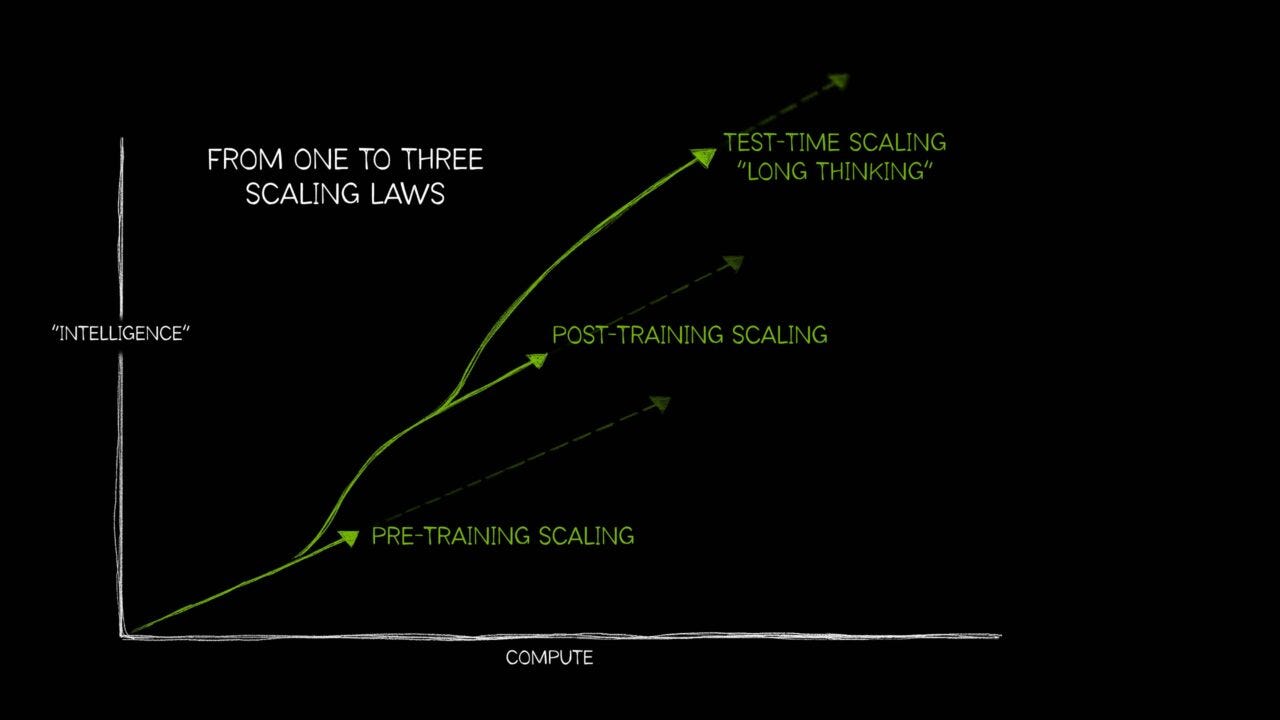

For almost two years in these pages I’ve chronicled daily the relentless progress of key tech and AI companies scaling LLM AIs, on an almost clockwork-like schedule, to newer and better capabilities. It doesn’t always go in a straight line, despite the scaling curves involved.

And I’ve discussed how these improvements in this AI Tech Wave have far outpaced the progression of Moore’s Law, which propelled the tech industry to global success over 70 years.

And yes, how this progress compelled the unprecedented scale of AI Capex investment in the hundreds of billions a year to build the Compute and Power necessary to create the ‘intelligence tokens’ that make AI model training and inference possible in the first place.

And relentless, iterative progress across these areas by leading AI companies like OpenAI, Anthropic, Google, and others here, and DeepSeek and others in China, has given the impression that this ongoing acceleration in model capabilities is inevitable and relatively easy.

It’s not. Like everything in life, including evolution, it all comes in fits and starts. And the latest from Meta, the leader in open source LLM AI with its Llama models, is a case in point, despite the progress I discussed from Anthropic just yesterday.

The WSJ highlights these issues in “Meta Is Delaying the Rollout of Its Flagship AI Model”:

“The company’s struggle to improve the capabilities of latest AI model mirrors issues at some top AI companies”.

“Meta is delaying the release of its “Behemoth” AI model due to concerns about its capabilities.”

“Meta engineers are struggling to improve “Behemoth,” leading to internal questions about its public release.”

“Meta is contemplating management changes to its AI product group due to the performance of the Llama 4 team.”

“Meta is delaying the rollout of a flagship AI model, prompting internal concerns about the direction of its multibillion-dollar AI investments, people familiar with the matter said.”

Remember that Meta is investing north of $64 billion a year on AI model and infrastructure development. So any development hiccup is notable.

“Company engineers are struggling to significantly improve the capabilities of its “Behemoth” large-language model, leading to staff questions about whether improvements over prior versions are significant enough to justify public release, the people said.”

“Early in its development, Behemoth was internally slated for an April release to coincide with Meta’s inaugural AI conference for developers. Meta put out two smaller models in its Llama AI model family ahead of the event, but later pushed an internal target for the larger Behemoth’s release to June. Now, it’s been delayed to fall or later.”

Meta’s success in open source Llama models to date has been nothing short of breathtaking, rivaled only by the open source innovations of DeepSeek from China just a few months ago.

“Meta has previously drawn praise for the speed with which it’s caught up to rivals in the global AI arms race—spending billions of dollars along the way to develop the technology that powers chatbots on WhatsApp, Instagram and Facebook. Meta plans to spend up to $72 billion in capital expenditures this year, much of which will be used to help realize Chief Executive Mark Zuckerberg’s grand ambitions for AI.”

“Zuckerberg and other Meta executives haven’t publicly committed to a timeline for Behemoth. The company could ultimately decide to release Behemoth sooner than expected, including by rolling out a more limited version. But Meta engineers and researchers are concerned its performance wouldn’t match public statements about its capabilities, the people said.”

The core issues behind the hiccups are opaque:

“Senior executives at the company are frustrated at the performance of the team that built the Llama 4 models and blame them for the failure to make progress on Behemoth, according to people familiar with their views. Meta is contemplating significant management changes to its AI product group as a result, the people said.”

“The Facebook-parent has publicly touted the capabilities of Behemoth, saying it already outperforms similar technology from OpenAI, Google and Anthropic on some tests. But internally, its performance has been hobbled by training challenges, the people said.”

And to be fair, Meta is not alone in the challenges of scaling next generation LLM AIs, especially on the training side of the ledger.

“Meta’s recent challenges mirror stumbles or delays at other top AI companies that are trying to release their next big state-of-the-art models. Some researchers see the pattern as evidence that future advances in AI models could come at a far slower pace than in the past, and at tremendous cost.”

“Right now, the progress is quite small across all the labs, all the models,” said Ravid Shwartz-Ziv, an assistant professor and faculty fellow at New York University’s Center for Data Science.”

OpenAI’s pace with its next gen GPT-5 is a case in point:

“GPT-5, one of OpenAI’s next big technological leaps forward, was initially expected around mid-2024, The Wall Street Journal previously reported. In December, The Journal reported that development on the model was running behind schedule.”

“In February, OpenAI Chief Sam Altman said the model would be released as GPT-4.5 and that GPT-5—the model they hoped would come with bigger technological breakthroughs—was still months away. ChatGPT currently runs on versions of GPT-4o. OpenAI declined to comment on the timing of GPT-5’s release.”

Anthropic as well, discussed yesterday, is a case in point:

“Anthropic last year said it was working on a new model called Claude 3.5 Opus, a larger version of the AI models it released last year and has continued to update. That heftier version still hasn’t been released. A spokeswoman says Opus is coming soon.”

And Meta has followed its own path in building its world class Llama LLM AI models, with development turbulence and turmoil along the way:

“Meta’s first version of Llama was produced by its Fundamental AI Research Team, which largely consists of academics and researchers with doctorate degrees. The team released the models and a research paper explaining them to the public in early 2023.”

And the progress has made it seem like Meta was surfing to success in a straight line:

“Since then, 11 of the 14 researchers on that original paper have left the company. The Llama models since the first ones have been developed by a different team. The Information earlier reported on problems Meta was having with some of its recent Llama models.”

“The two models that were released in April initially performed well on a popular AI chatbot leaderboard. However, it was later revealed that the model submitted to the leaderboard wasn’t the same model that was released to the public.”

“Representatives for the leaderboard said Meta should have made it clearer that it had submitted a customized model intended to do well on its benchmark test. Zuckerberg acknowledged that Meta submitted a version of its AI model to the leaderboard that was optimized to do well on the third-party performance test.”

Overall, this latest tale from Meta highlights that progress in LLM AI Scaling, while headed in the right direction, does not and will not come in a straight line. There will be lots of ups and downs, and even step retracements, all through this AI Tech Wave. We’ll just have to wait through it. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)