AI: Nvidia extends its AI Data Center strategy with Lepton DGX. RTZ #748

As expected from its ‘vertical AI Data Center Strategy’ described earlier, Nvidia has stepped up its business of offering AI GPU chips directly as a service, delivered via its AI Data Center Cloud customers. Dubbed DGX Cloud Lepton and outlined by founder/CEO Jensen Huang at earlier conferences, the service’s ramp was highlighted by Huang at an event in Paris this week. There he described the ‘Nvidia DGX Cloud Lepton’ service as connecting ‘Europe’s Developers to the Global Nvidia Compute Ecosystem’.

The move underlines the ongoing ‘Frenemies’ relationship between Nvidia and its prime Cloud services provider customers like Amazon AWS, Microsoft Azure, Google Cloud and others, which together make up almost half of the company’s overall revenues. It’s a dynamic discussed in detail earlier, especially as its core AI Data Center Cloud customers are all developing their own AI chips to diversify away from Nvidia’s AI Chip infrastructure roadmap, many of them with the help of AI Chip competitors like Broadcom and others.

Both opposing realities can be true and prevail at the same time. While Nvidia extends its moat and its reach in the global AI Cloud Services market, it’s also accelerating its customer-driven ‘AI Factories’ strategy, much of it built on its decade-plus of CUDA software frameworks and other initiatives.

The Information outlines this dynamic in detail in “Nvidia Muscles Into GPU Cloud Market, Rankling New Rivals”:

“Nvidia recently debuted a cloud service enabling artificial intelligence developers to rent server chips directly from the chip designer, part of its push into the cloud market dominated by Amazon and Microsoft.”

“In doing so, Nvidia is encroaching on the turf of CoreWeave and dozens of other up-and-coming firms that sell the same type of service. Nvidia executives told the firms they could make their AI servers available to customers of Nvidia’s new service, which it calls a “marketplace” of graphics processing units.”

Remember, Nvidia is an investor in leading NeoCloud CoreWeave, which, like others, is also a key customer in this AI Infrastructure ‘gold rush’.

“The move left these firms, known as AI or GPU cloud providers, with a difficult choice.”

“Executives at several of them privately said they view the new Nvidia service as competitive with their own because it gives Nvidia direct relationships with AI customers, who may therefore have fewer reasons to do business with the cloud providers.”

“But the executives said they also worried about losing business to rivals that had already agreed to participate in the new Nvidia service if they didn’t go along with the plan.”

But both realities are accelerating within Nvidia’s AI data center customer base:

“So far, more than a dozen GPU cloud providers have agreed to make servers available to the new Nvidia service, DGX Cloud Lepton. They include CoreWeave and Nebius, whose businesses are among the most mature in the field.”

“‘You’d rather play with [Nvidia] in the sandbox than not at all,’ said one executive of a GPU cloud provider that participates in the Lepton service.”

“Nvidia is also making its own cloud-based GPU inventory available through the marketplace, which for now is only available to select customers, according to the Nvidia website.”

The latest iteration in Europe was laid out this week:

“Jensen Huang announced at the Nvidia developer conference in Paris on Wednesday that Nvidia added new cloud providers to DGX Cloud Lepton. Nvidia said Amazon Web Services and Microsoft would be the first large cloud providers to participate in the marketplace.”

“Nvidia’s move to aggregate these cloud providers’ server inventory under its Nvidia-branded service could attract regulatory attention. The company has been under investigation by antitrust lawyers at the Department of Justice, who are looking at whether the company has abused its dominant position in chips and the specialized software that controls them.”

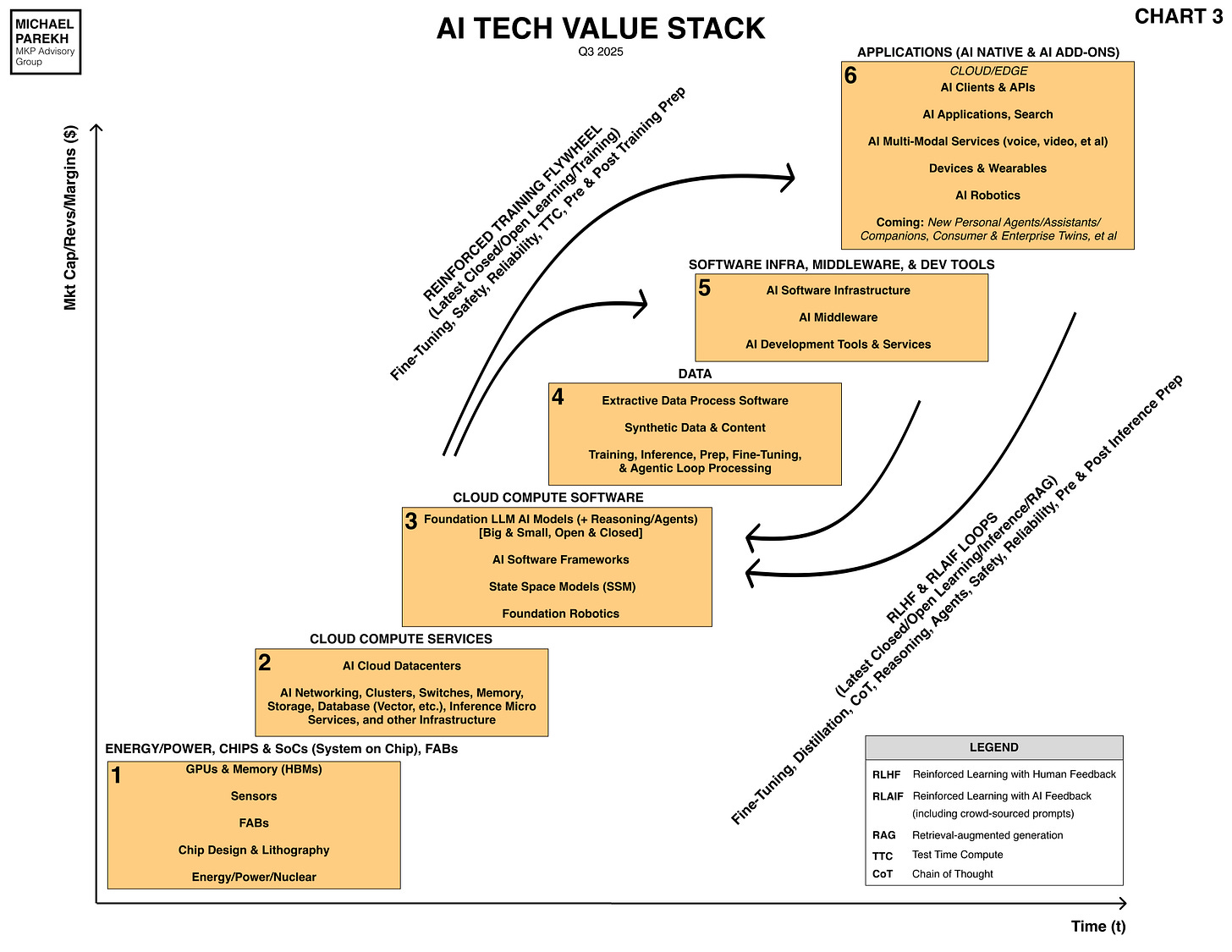

It’s all part of a long-term Nvidia strategy in this AI Tech Wave outlined earlier:

“Nvidia is in the midst of a multiyear effort to diversify from chip sales by also renting out servers powered by its chips to businesses directly. It also provides software to help companies develop AI models and applications as well as manage clusters of GPUs that train the AI. That puts the company in direct or nearly direct competition with everyone from Amazon Web Services to CoreWeave, which exclusively rents out Nvidia chip servers.”

“Huang has said he wants Nvidia to give AI developers “instant access” to chips to train and run AI models, and he said in May that the Lepton service is part of an effort to develop a “planetary-scale AI factory.” Nvidia says its cloud and software businesses could generate $150 billion in revenue someday, rivaling AWS.”

It’s a meaningful, fast-growing, global business for Nvidia:

“Nvidia’s AI-focused cloud and software business is nascent but is already one of the biggest of its kind, on pace to generate $2 billion in revenue annually, the company has said. That’s around the same amount of revenue CoreWeave, which has a market capitalization of more than $75 billion, generated last year from renting out Nvidia chips.”

“Last month, an executive at one of the GPU cloud providers went to Nvidia’s Santa Clara, Calif., headquarters to hear Nvidia’s pitch for Lepton. The executive said they asked why Nvidia wanted to launch a service that competes with the executive’s firm.”

“Huang’s move is understandable, given that AWS and other major cloud providers are developing AI server chips that compete with Nvidia’s and hope to convince their cloud customers to use the alternative chips.”

Again, a reality outlined earlier.

It started a while ago:

“Nvidia launched its first cloud service, DGX Cloud, in 2023, seeking to rent out GPU servers directly to large enterprises like SAP and Genentech to develop AI applications.”

“Nvidia didn’t launch the service from its own data centers. Instead, it asked major cloud providers such as Microsoft, AWS and Google—the biggest buyers of Nvidia GPUs—to make servers available for the Nvidia cloud service from within their data centers.”

“Nvidia for years has been pitching its DGX Cloud service as a “premium AI training service” that is less expensive than renting GPUs from AWS, according to a presentation viewed by The Information. However, Nvidia’s prices often appear to be more expensive than those of AWS, when factoring in the cost of using Nvidia software to operate the chips.”

“Nvidia’s move into the cloud rankled Amazon AWS, the largest cloud services provider, which initially rejected the request to take part in DGX Cloud but later reversed course.”

Amazon AWS is of course the largest of the Cloud providers.

“The new DGX Cloud Lepton marketplace is a similar idea, but geared toward attracting smaller AI developers rather than larger enterprises. The service is powered by technology from Lepton, a GPU cloud provider Nvidia acquired earlier this year for several hundred million dollars.”

The core proposition is as follows:

“Nvidia’s Pitch”

“Lepton competed in a fast-moving GPU cloud market that has emerged in the past three years, spawning dozens of companies. Some of these firms purchase Nvidia chips directly and operate their own data centers, while others rent out GPU servers that other companies host in data centers. These firms often develop their own software to help customers—typically startups or software firms—develop and manage their AI apps in the cloud.”

“Customers of the new Nvidia cloud marketplace have to make an Nvidia Cloud Account in order to rent chips, closely mirroring the types of accounts customers create to use AWS or Microsoft Azure. If they rent compute from the marketplace, they will manage it through their Nvidia account.”

That narrative got repeated in Europe this week:

“Huang’s pitch to developers in Europe was that Nvidia simplifies the process of getting reliable GPUs by aggregating its customers’ GPUs into a single platform. One benefit for Nvidia in operating a marketplace is that it can offer companies incentives to rent chips.”

“Nvidia said it is working with European venture capital firms to offer their portfolio companies up to $100,000 in credits to rent GPUs from the marketplace, which comes with access to Nvidia software for managing servers.”

“Inside Nvidia, executive Tony Zheng has been pitching GPU cloud providers to join the new Lepton service, according to people familiar with the meetings.”

It’s a narrative that will continue for the next few years, as Nvidia and its customers both ramp up in the ‘AI Table Stakes’ infrastructure markets, especially for Sovereign AI opportunities worldwide.

This dynamic will accelerate as Nvidia extends its core reign over the AI Factories market globally this year and beyond, including the huge emerging market in the Middle East, especially after the recent trip there with the President.

This is the dual reality for Nvidia in this AI Tech Wave for now. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)