AI: Nvidia vs its Top Customers for AI GPUs. RTZ #554

One of the questions I get most often about Nvidia in this AI Tech Wave is about the fact that almost half of its total revenues come from its top five customers, all of whom are investing billions to build their own AI GPU chips. They are, of course, hoping to replace Nvidia’s $30,000+ chips with less expensive versions, and ones that are more customized for their AI use cases at scale. A valid set of concerns.

But as I’ve explained in this piece, Nvidia’s software moat is wide and deep, with millions of worldwide developers using their CUDA frameworks and AI libraries for their exploding number of AI applications and services.

The issues are more nuanced, though, with far more complexity on both sides. Top cloud companies like Amazon AWS, Microsoft Azure and Google Cloud, as well as Meta, Tesla and others, are all developing various next generation AI chips for training and inference purposes. But the time to market, and a range of non-obvious issues, mean that their ‘Frenemies’ relationship with Nvidia likely remains the norm for more than a few quarters.

This closer look by Bloomberg at some of these efforts, particularly those of the largest cloud data center company, Amazon AWS, is worth a full read for this context. As they explain in “Amazon’s Moonshot Plan to Rival Nvidia in AI Chips”:

“The cloud computing giant won’t dislodge the incumbent anytime soon but is hoping to reduce its reliance on the chipmaker.”

“In a bland north Austin neighborhood dominated by anonymous corporate office towers, Amazon.com Inc. engineers are toiling away on one of the tech industry’s most ambitious moonshots: loosening Nvidia Corp.’s grip on the $100-billion-plus market for artificial intelligence chips.”

Amazon has been developing its AI training and inference chips here for several generations, dubbed ‘Trainium’ and ‘Inferentia’ of course.

“Trainium2 is the company’s third generation of artificial intelligence chip. By industry reckoning, this is a make-or-break moment. Either the third attempt sells in sufficient volume to make the investment worthwhile, or it flops and the company finds a new path.”

“Amazon AWS SVP James Hamilton holding the Trainium2 chip.”

“For Trainium2, which Amazon says has four times the performance and three times the memory of the prior generation.”

And it’s getting some traction with important partners:

“Databricks in October agreed to use Trainium as part of a broad agreement with AWS. At the moment, the company’s AI tools primarily run on Nvidia. The plan is to displace some of that work with Trainium, which Amazon has said can offer 30% better performance for the price.”
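
A quick back-of-the-envelope on that “30% better performance for the price” figure: a minimal sketch, assuming the claim means 1.3x the work per dollar compared with a baseline. The function name and the interpretation are my own assumptions, not Amazon’s or Bloomberg’s methodology. Under that reading, the same workload costs about 1/1.3 of the baseline, which is a savings of roughly 23%, not 30%.

```python
def relative_cost_for_same_work(price_performance_gain: float) -> float:
    """Cost of running the same workload, relative to a baseline of 1.0.

    price_performance_gain is a fraction (0.30 means 30% more work per dollar).
    This interpretation of the quoted figure is an assumption for illustration.
    """
    if price_performance_gain <= -1.0:
        raise ValueError("gain must be greater than -100%")
    return 1.0 / (1.0 + price_performance_gain)


gain = 0.30  # the quoted "30% better performance for the price"
relative_cost = relative_cost_for_same_work(gain)
savings = 1.0 - relative_cost

print(f"Relative cost for the same work: {relative_cost:.3f}")
print(f"Implied savings: {savings:.1%}")
```

The point is simply that a price-performance gain and a cost reduction are not the same number, which matters when comparing vendor claims.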

Of course, like all the fabless semiconductor companies including Nvidia, Amazon gets its chips made by TSMC of Taiwan, discussed yesterday:

“Amazon is one of the 10 biggest customers of Taiwan Semiconductor Manufacturing Co., (TSMC of Taiwan), the titan that produces chips for much of the industry.”

And Amazon AWS is also in the 100,000 GPU AI data center race I discussed a few days ago:

“Amazon has started shipping Trainium2, which it aims to string together in clusters of up to 100,000 chips, to data centers in Ohio and elsewhere. A broader rollout is coming for Amazon’s main data center hubs.”

The AI data center ‘super-sizing’ continues here with Amazon’s own chips.

And they’re on a fast iteration plan for updating their AI chips:

“The company aims to bring a new chip to market about every 18 months, in part by reducing the number of trips hardware has to make to outside vendors.”

And Nvidia and others are also not standing still:

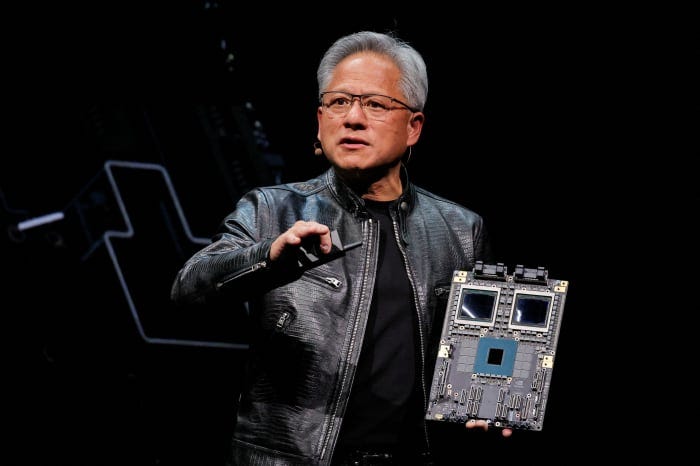

“Other companies are pushing the limits, too. Nvidia, which has characterized demand for its chips as “insane,” is pushing to bring a new chip to market every year, a cadence that caused production issues with its upcoming Blackwell product but will put more pressure on the rest of the industry to keep up. Meanwhile, Amazon’s two biggest cloud rivals are accelerating their own chip initiatives.”

“Google began building an AI chip about 10 years ago to speed up the machine learning work behind its search products. Later on, the company offered the product to cloud customers, including AI startups like Anthropic, Cohere and Midjourney. The latest edition of the chip is expected to be widely available next year. In April, Google introduced its first central processing unit, a product similar to Amazon’s Graviton. “General purpose compute is a really big opportunity,” says Amin Vahdat, a Google vice president who leads engineering teams working on chips and other infrastructure. The ultimate aim, he says, is getting the AI and general computing chips working together seamlessly.”

“Microsoft got into the data center chip game later than AWS and Google, announcing an AI accelerator called Maia and a CPU named Cobalt only late last year. Like Amazon, the company had realized it could offer customers better performance with hardware tailored to its data centers.”

But the ‘Frenemies’ dynamic prevails for now:

“Despite their competitive efforts, all three cloud giants sing Nvidia’s praises and jockey for position when new chips, like Blackwell, hit the market.”

They all want the maximum allocation of Blackwell chips from Nvidia founder/CEO Jensen Huang, while of course developing their own less expensive wares.

For most of them, the hope is that their own chips will take on more of their internal ‘meat and potatoes’ AI/ML processing loads, while their precious allocations of Nvidia AI GPU chips remain available for customers using their AI Cloud services:

“Amazon’s Trainium2 will likely be deemed a success if it can take on more of the company’s internal AI work, along with the occasional project from big AWS customers. That would help free up Amazon’s precious supply of high-end Nvidia chips for specialized AI outfits. For Trainium2 to become an unqualified hit, engineers will have to get the software right — no small feat. Nvidia derives much of its strength from the comprehensiveness of its suite of tools, which let customers get machine-learning projects online with little customization. Amazon’s software, called Neuron SDK, is in its infancy by comparison.”

But they have broader ambitions of course, which Amazon is pursuing with its latest follow-on investment in Anthropic, the #2 LLM AI company after OpenAI:

“Anthropic, the AI startup and OpenAI rival, agreed to use Trainium chips for future development after accepting $4 billion of Amazon’s money last year, though it also uses Nvidia and Google products. On Friday, Anthropic announced another $4 billion infusion from Amazon and deepened the partnership.”

“We’re particularly impressed by the price-performance of Amazon Trainium chips,” says Tom Brown, Anthropic’s chief compute officer. “We’ve been steadily expanding their use across an increasingly wide range of workloads.”

Amazon, Google, Microsoft and Tesla in particular are developing their own AI GPU/TPU chips AND their own specialized LLM AI models, to lessen their dependence not just on Nvidia, but also on the leading AI software company, OpenAI.

This level of long-term ‘Frenemies’ dynamic is relatively unique to this AI Tech Wave vs prior tech waves. It makes things challenging for investors, to say the least. But it is a state of affairs that is more the norm for now. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here.)