AI: Nvidia's growing chops in self-driving cars & Vera Rubin AI GPU chip metrics. RTZ #959

Well, as expected, Nvidia founder/CEO Jensen Huang’s keynote at CES 2026 in Las Vegas was worth the watch, just like last year’s. One gets the sense that Nvidia, for all its accomplishments of late, is kinda just ‘getting warmed up’.

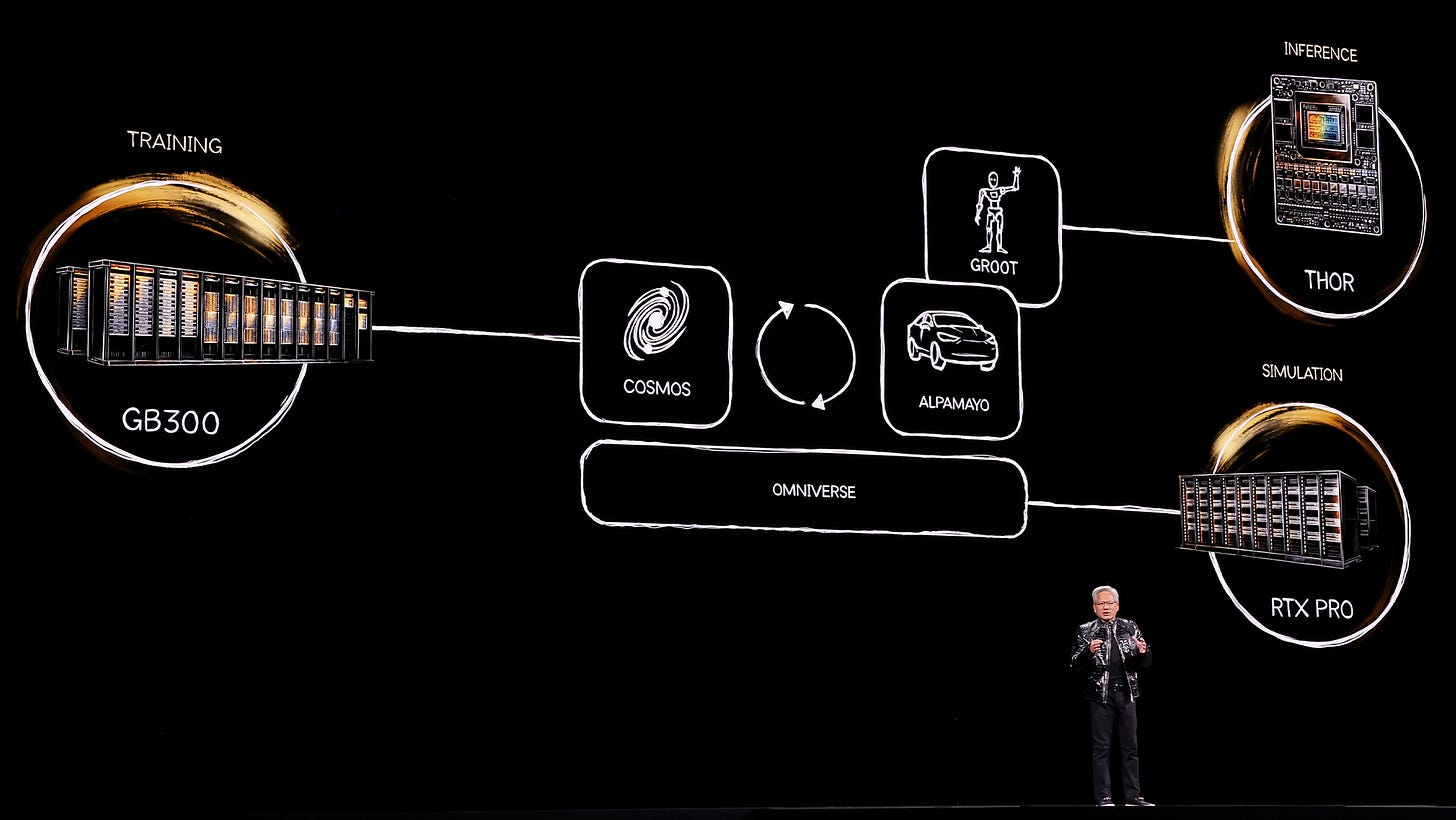

In particular, Nvidia made some announcements that have real implications for this AI Tech Wave, especially as it moves to ‘Physical AI’ in the real world.

That’s true both in terms of its market-leading AI GPU ‘Accelerated Computing’ architecture, with the next-gen ‘Vera Rubin’ platform following the current Blackwell chips that are selling in the millions.

It’s all just getting started after over 30 years, as I wrote about a few days ago.

And in terms of Nvidia’s not-so-well-understood plans to leverage its core competencies in the ‘Physical AI’ worlds of AI Robotics and self-driving cars. The latter in particular had announcements worth paying attention to, given the current market fixation on Tesla and Waymo as the champions in this area.

Let me use Axios’s summary of the Nvidia CES 2026 announcements in “‘ChatGPT moment for physical AI’: Nvidia CEO launches new AI models and chips”:

“Nvidia CEO Jensen Huang announced the launch of AI models for autonomous vehicles and new chips during a presentation at the Consumer Electronics Show on Monday.”

“Why it matters: The strategy underscores where the next wave of AI and computing is headed, given Nvidia’s dominance in the chip market.”

“Driving the news: “The ChatGPT moment for physical AI is here — when machines begin to understand, reason and act in the real world,” Huang said in a statement. “Robotaxis are among the first to benefit.”

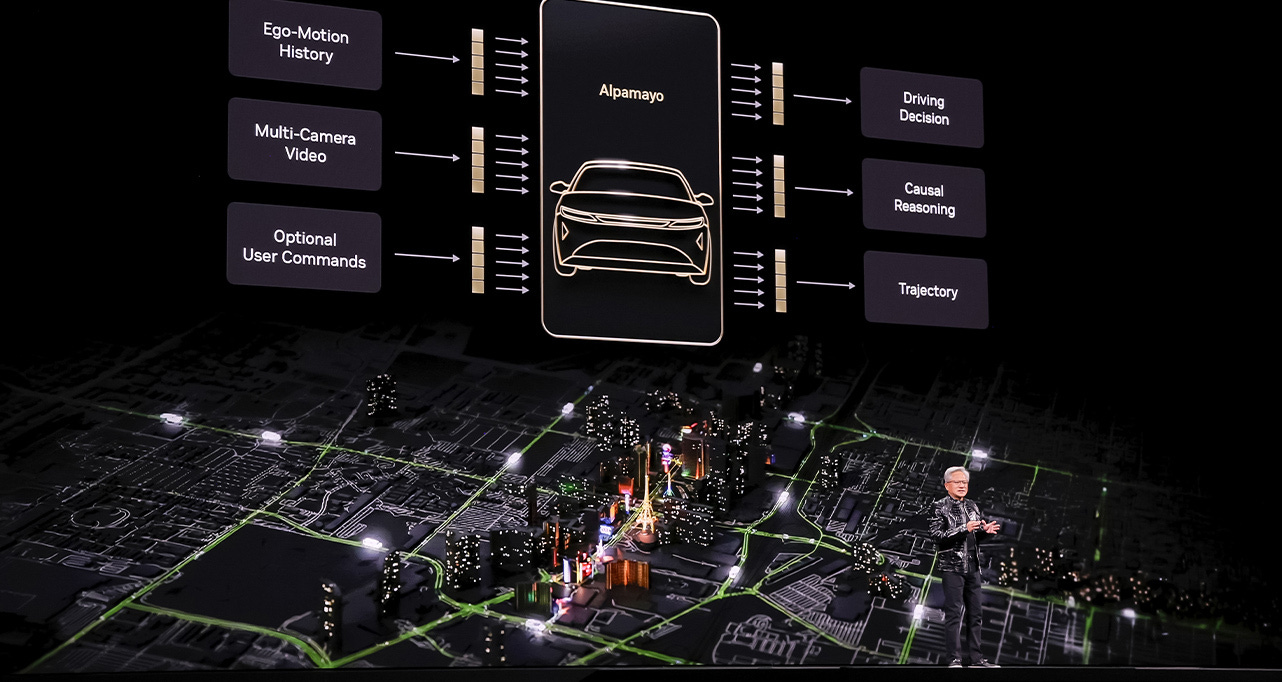

“Speaking onstage in Las Vegas, Huang said Alpamayo is ‘the world’s first thinking, reasoning autonomous vehicle AI. Alpamayo is trained end-to-end, literally from camera-in to actuation-out.’”

“Huang also said the new Mercedes-Benz CLA will feature Nvidia’s driver assistance software in what he said is the company’s ‘first entire stack endeavor.’ He then showed a demo of the car driving in San Francisco, avoiding pedestrians and taking turns.”

“Huang also announced the launch of the Rubin platform, composed of six chips. The products will be available for Nvidia partners in the second half of 2026, the company said.”

“There’s no question in my mind now that this is going to be one of the largest robotics industries, and I’m so happy that we worked on it,” Huang said. “Our vision is that someday every single car, every single truck will be autonomous.”

Those two announcements were the key highlights of the show: Alpamayo, Nvidia’s ‘thinking, reasoning’ AI models for self-driving cars, and the launch of its Vera Rubin AI Data Center computing generation into mass production.

While they’ll take a year or two to really get going, they’re both key ways Nvidia continues to peel away from the competition.

“Reality check: It will be a while until every car is autonomous. Nvidia’s own plans to test a robotaxi service with a partner are slated for 2027.”

Of course, the presentation touched on Nvidia accelerating its AI Inference capabilities, soon to be helped by its $20 billion ‘licensing’ acqui-hire deal for AI Inference company Groq (no relation to Elon Musk’s Grok of xAI). Other analysts agree it’s a smart deal.

“Catch up quick: The presentation follows Nvidia entering into a nonexclusive tech licensing agreement with Groq, a startup producing chips to support real-time chatbot queries.”

“That deal helps strengthen Nvidia in inference, the stage at which AI models use what they’ve learned in the training process to produce real-world results, Axios’ Megan Morrone writes.”

“This phase is essential for AI to scale.”

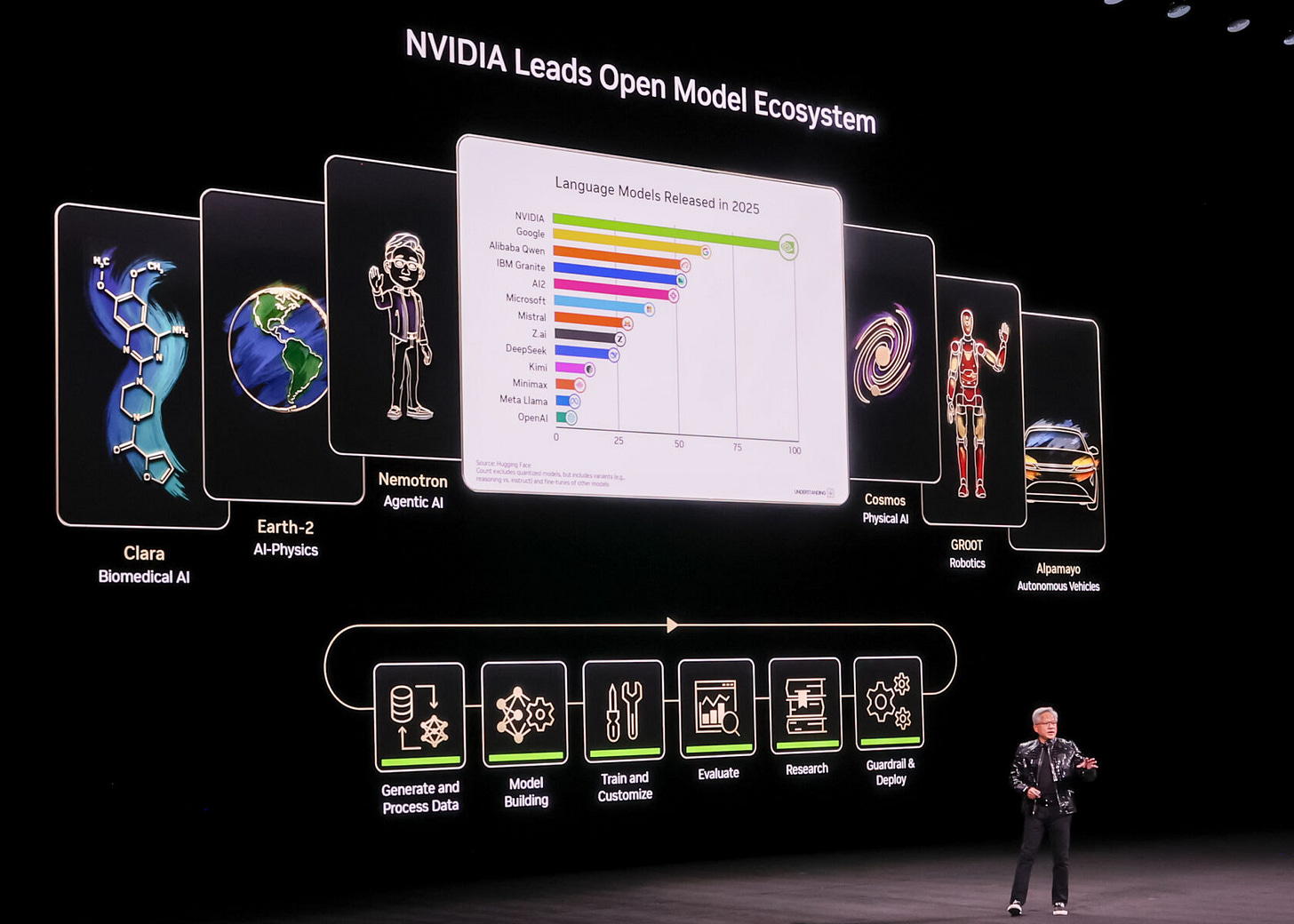

Physical AI is a key push for Nvidia, involving billions in investments and thousands of Nvidia engineers working on the hardware and software. And the AI software is all open source, a point Jensen highlighted over and over again.

“The big picture: Nvidia has long been investing in physical AI, meaning AI interfacing with the world and not just software.”

And of course like last year, there was a lot of focus on AI Robotics, humanoid and otherwise.

“Last year, Nvidia stole the show at CES with a series of announcements, including its work in robotics and autonomous vehicles alongside new gaming chips and a smaller AI processing unit called DIGITS.”

“Of course, robotics extends beyond cars. Huang was joined onstage by two BD-1 units, droids from the Star Wars universe, as he displayed and discussed an image of other robots that rely on Nvidia’s tech such as Caterpillar’s construction equipment and Agibot’s humanoid robots.”

Self-driving cars are of course a current market obsession, especially the robotaxi fleets of Google’s Waymo and Tesla. On the SAE’s Level 0 to 5 scale, Tesla’s FSD is still a supervised Level 2 system, while Waymo’s robotaxis run at Level 4 in limited areas. All are making progress, but full autonomy will take a few more years in my view.

The Verge in particular had a relevant review of Nvidia’s self-driving car demos in collaboration with Mercedes that’s worth both a read and a watch. They lay it out in “I tested Nvidia’s Tesla Full Self-Driving competitor — Tesla should be worried”:

“The chipmaker is making a big bet on self-driving cars. And it’s making quick progress too.”

“The vehicle is using Nvidia’s new point-to-point Level 2 (L2) driver-assist system that is getting ready to roll out to more automakers in 2026. This is the chipmaker’s big bet on driving automation, one it thinks can help grow its tiny automotive business into something more substantial and more profitable. Think of it as Nvidia’s answer to Tesla’s Full Self-Driving.”

Nvidia’s self-driving car efforts have surprised many in the media, as shown by the many reviews of the test drives Nvidia offered at CES 2026, including this one. The Verge continues:

“The invitation to test out Nvidia’s new system came a bit as a surprise. After all, the company isn’t exactly known as a self-driving leader. And while Nvidia has long supplied major automakers with chips and software for driver-assist systems, its automotive business is still relatively tiny compared to the billions it rakes in on AI. Its third quarter revenues were $51.2 billion, but its automotive division only made $592 million, or 1.2 percent of the total haul.”

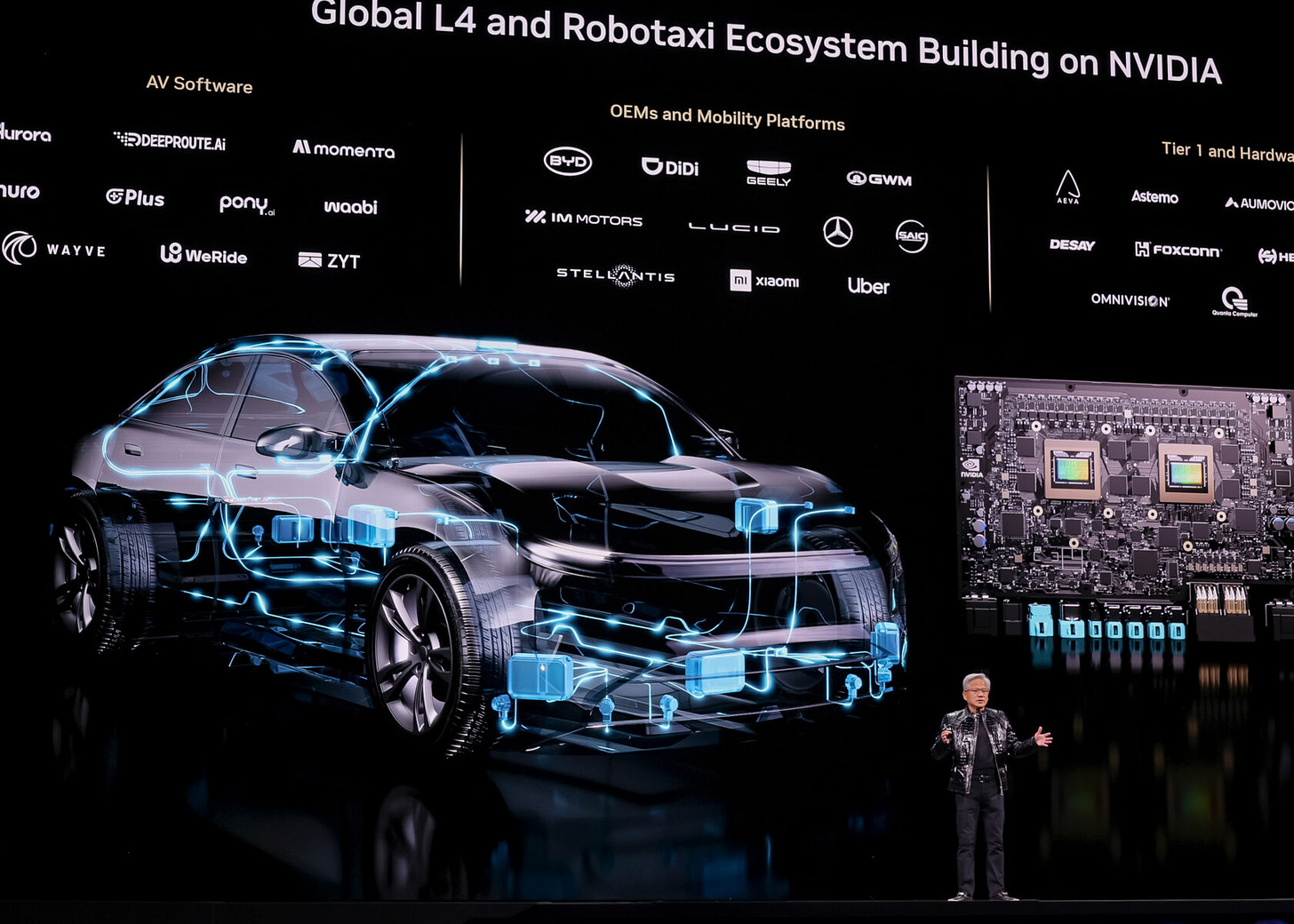

“That could change soon, as Nvidia seeks to challenge Tesla and Waymo in the race to Level 4 (L4) autonomy — cars that can fully drive themselves under specific conditions. Nvidia has invested billions of dollars over more than a decade to build a full-stack solution, says Xinzhou Wu, the head of the company’s automotive division. This includes system-on-chip (SoC) hardware along with operating systems, software, and silicon. And Wu says that Nvidia is keeping safety at the forefront, claiming to be one of the few companies that meets high automotive safety requirements at both the silicon and the software levels.”

“That includes the company’s Drive AGX system-on-a-chip (SoC), similar to Tesla’s Full Self-Driving chip or Intel’s Mobileye EyeQ. The SoC runs the safety-certified DriveOS operating system, built on the Blackwell GPU architecture that’s capable of delivering 1,000 trillions of operations per second (TOPS) of high-performance compute, the company says.”

“‘Jensen always says, the mission for me and for my team is really to make everything that moves autonomous,’ Wu says.”

Although self-driving cars seem a small focus for Nvidia, the company is clearly building out a hardware and software self-driving platform all the way to Levels 4 and 5 over the next few years, especially as Nvidia moves from its Orin to its Thor chips in its AI GPU modules.

They’re investing billions, with thousands of engineers focused on the effort. And they are in a way uniquely positioned to provide a general-purpose self-driving platform for car markets around the world whose automakers don’t want to roll their own, as Google and Tesla do. That potentially includes auto companies in China down the road.

Nvidia has a systematic plan for the road ahead:

“Nvidia’s Wu outlines a roadmap in which Nvidia will release Level 2 highway and urban driving capabilities, including automated lane changes, and stop sign and traffic signal recognition, in the first half of 2026. This includes an L2++ system, in which the vehicle will be able to navigate point-to-point autonomously under driver supervision. In the second half of the year, urban capabilities will expand to include autonomous parking. And by the end of the year, Nvidia’s L2++ system will encompass the entirety of the United States, Wu said.”

“For L2 and L3 vehicles, Nvidia plans on using its Drive AGX Orin-based SoC. For fully autonomous L4 vehicles, the company will transition to the new Thor generation. Software redundancy becomes critical at this level, so the architecture will use two electronic control units (ECUs): a main ECU and a separate redundant ECU.”

“A ‘small scale’ Level 4 trial, similar to Waymo’s robotaxis, is also planned for 2026, followed by partner-based robotaxi deployments in 2027, Wu says. And by 2028, Nvidia predicts its self-driving tech will be in personally owned autonomous vehicles. Also in 2028, Nvidia plans on supplying systems that can enable Level 3 highway driving, in which drivers can take their hands off the wheel and eyes off the road under certain conditions. (Safety experts are highly skeptical about L3 systems.)”

“Ambitious stuff, to say the least. And some of it will obviously be dictated by Nvidia’s automotive partners, including Mercedes, Jaguar Land Rover, and Lucid Motors, and whether or not they have the necessary confidence (and legal certainty) to include the tech in cars they sell to their customers. A bad crash, or even an ambiguous one where the tech could have been at fault, could jeopardize Nvidia’s ambitions to become a Tier 1 supplier to the global auto industry.”

A key differentiator in Nvidia’s approach, especially vs. Tesla, is its use of both cameras and lidar sensors, whereas Tesla’s Elon Musk insists on cameras alone. The multi-sensor approach provides more flexibility and safety in low-visibility situations.

Nvidia also runs traditional ‘classical’ machine learning and generative AI-driven driving technologies concurrently, with each helping the other. This is a differentiated approach vs. its competitors.

“The system is based on reinforcement learning, meaning it can continue to improve over time as it gains more experience, Kani says. When asked how it compares to Tesla’s Full Self-Driving, he says it’s very close. In head-to-head city driving tests over long routes, the number of driver takeovers for Nvidia’s system is comparable, sometimes favoring one system, sometimes the other.”

“What makes this particularly notable is how quickly progress has been made. Tesla took roughly eight years to enable urban driving with FSD, whereas Nvidia is expected to do the same within about a year. No other passenger-car system besides Tesla’s has achieved this, Kani boasts.”

“‘We’re coming fast,’ he says, as the Mercedes slows itself down at another intersection. ‘I’d say [we’re] very close [to FSD].’”

So overall, I view this Nvidia keynote as more noteworthy than it seems at first glance, especially for the emerging global market for self-driving cars and other vehicles.

It isn’t just a horse race between Tesla and Google’s Waymo, as the prevailing view tends to assume.

Perceived ‘latecomers’ like Nvidia may have approaches worth keeping a close eye on in this AI Tech Wave. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)