AI: OpenAI GPT-5 in August? RTZ #795

The Bigger Picture, Sunday, July 27, 2025

After more than a year of speculation and expectations, it looks like OpenAI’s GPT-5 LLM AI may launch in August. Given OpenAI’s pole position in this AI Tech Wave, it’s the Bigger Picture to unpack this Sunday.

The Verge summarized the imminent launch in this way:

“Altman referred to GPT-5 as “a system that integrates a lot of our technology” earlier this year, because it will include the o3 reasoning capabilities instead of shipping those in a separate model. It’s part of OpenAI’s ongoing efforts to simplify and combine its large language models to make a more capable system that can eventually be declared artificial general intelligence, or AGI.”

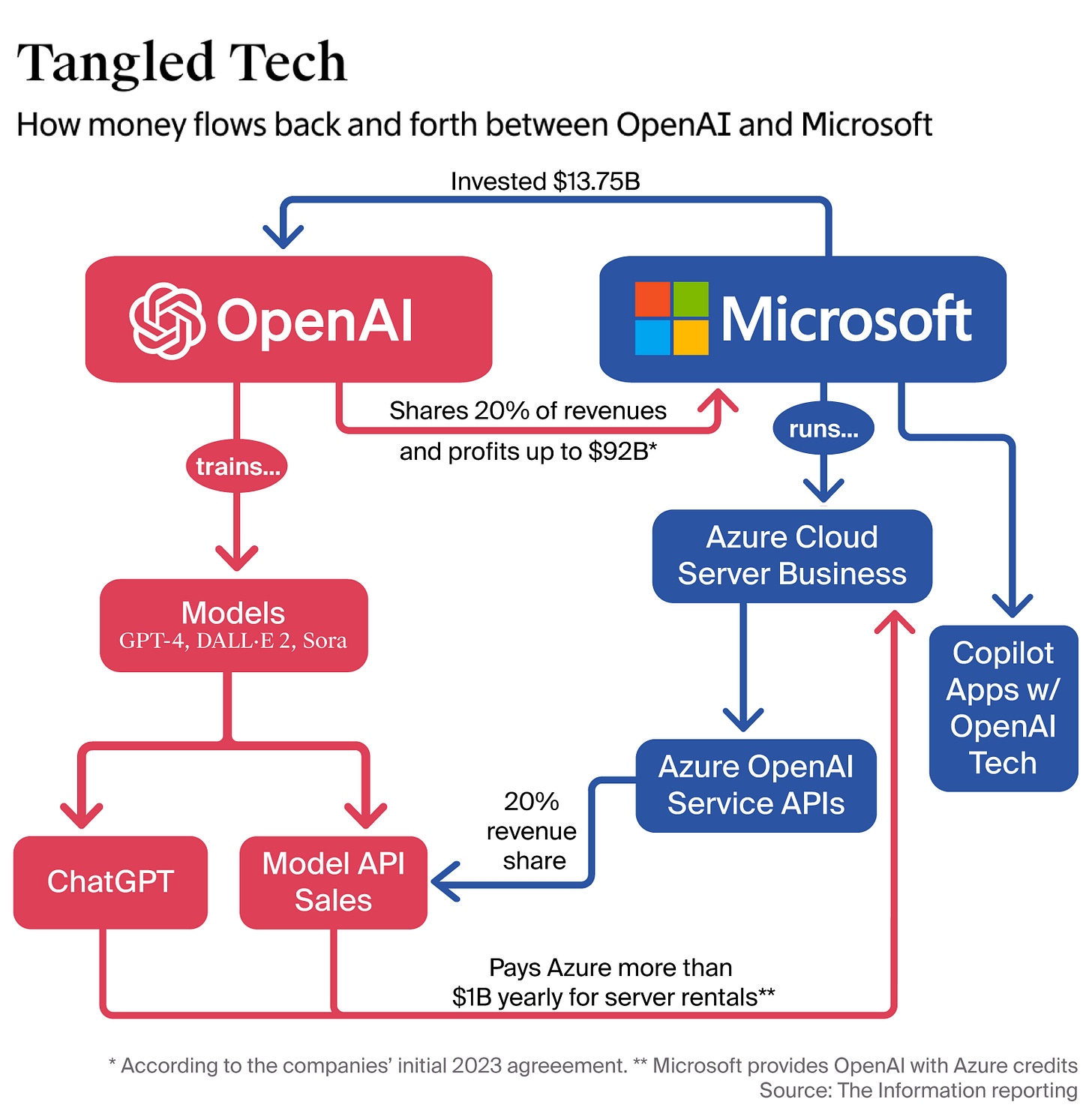

And there are tactical and strategic imperatives on the timing of ‘AGI’ for both OpenAI and Microsoft:

“The declaration of AGI is particularly important to OpenAI, because achieving it will force Microsoft to relinquish its rights to OpenAI revenue and its future AI models. Microsoft and OpenAI have been renegotiating their partnership recently, as OpenAI needs Microsoft’s approval to convert part of its business to a for-profit company. It’s unlikely that GPT-5 will meet the AGI threshold that’s reportedly linked to OpenAI’s profits. Altman previously said that GPT-5 won’t have a “gold level of capability for many months” after launch.”

As mentioned above, the other imperative seems to be product simplification, kind of:

“Unifying its o-series and GPT-series models will also reduce the friction of having to know which model to pick for each task in ChatGPT. I understand that the main combined reasoning version of GPT-5 will be available through ChatGPT and OpenAI’s API, and the mini version will also be available on ChatGPT and the API. The nano version of GPT-5 is expected to only be available through the API.”

Readers here have seen my discussions of OpenAI’s GPT journey for months, from project names like ‘Orion’ and ‘Strawberry’ to OpenAI’s steps on its way to AGI (and ASI/Superintelligence).

Its roadmap has moved from Level 1 to Level 2 AI Reasoning, and on to Level 3 AI Agents today.

There is still a long way to go to the next two levels and beyond, and to the cornucopia of AI Applications (products and services) eagerly sought at the end of the AI rainbow. The AI Holy Grail, as it were (Box 6 below).

For now, GPT-5 is a step to the next bigger thing. Currently it looks like it is beefed up particularly for AI Coding. This is the white-hot area as the industry races to recruit AI Researchers and Developers to create the next Cambrian explosion of AI applications ahead.

And OpenAI is laser-focused on it, despite having just lost out to Google and Cognition AI on its $3 billion bid to buy AI Coding startup Windsurf. The root of that development was apparently the IP and Governance transition discussions long underway with its core partner Microsoft.

But it looks like GPT-5 will have deep AI coding capabilities nevertheless.

The Information lays out the latest in “OpenAI’s GPT-5 Shines in Coding Tasks”:

“GPT-5 is almost here, and we’re hearing good things. The early reaction from at least one person who’s used the unreleased version was extremely positive.”

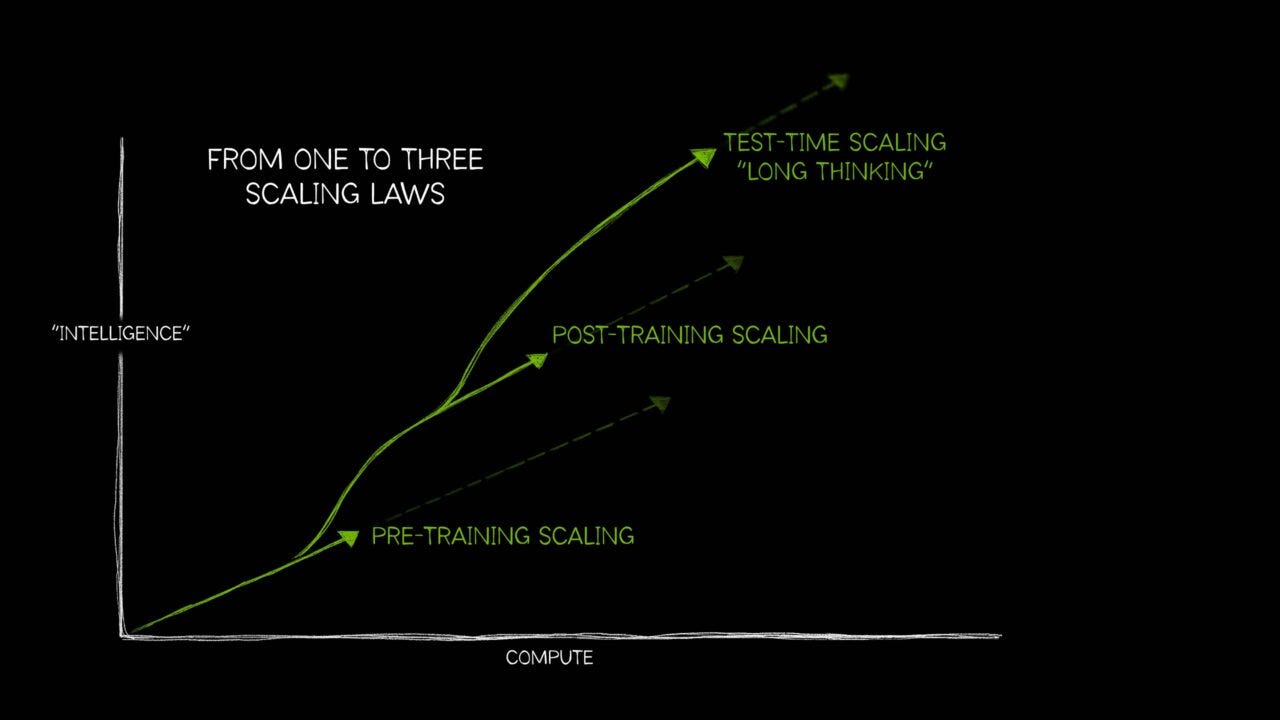

“That’s good news for OpenAI. The ChatGPT creator has been under pressure to show major gains from its next big artificial intelligence model since we first broke the news last November that it had seen diminishing returns from using more computational resources and data during “pretraining.” That’s part of the initial process of developing a model, when it processes tons of data from the web and other sources so it can learn the relationships between different concepts.”

There is organized and unorganized chatter building around the upcoming release:

“OpenAI hasn’t specified when GPT-5 will be released inside ChatGPT and for app developer customers. But CEO Sam Altman has started publicly discussing how much he’s enjoying using the unreleased version.”

And a key part of the evolution seems to be fusing regular LLM AI in ChatGPT with the deeper ‘thinking’ Reasoning and agentic capabilities.

“The model aims to fulfill Altman’s plan of integrating traditional “GPT”-branded large language models with the company’s “o” line of reasoning models into one model or chat interface, according to a person who has used it.”

Of course LLM AI peers like Anthropic, Google Gemini and others are also hot on this innovation and evolution trail.

“Similar to Anthropic’s hybrid Claude models, users will likely be able to control how much GPT-5 “thinks” about a certain problem, and the model will also turn its reasoning capabilities off and on depending on how difficult a problem is, the person said. (So if you ask it how many r’s are in the word “strawberry,” it won’t spend gobs of compute thinking about it, even if you’ve instructed it to.)”

And the capabilities of course extend beyond AI Coding for OpenAI and its peers:

“GPT-5 shows improved performance in a number of domains, including the hard sciences, completing tasks for users on their browsers and creative writing, compared to previous generations of models. But the most notable improvement comes in software engineering, an increasingly lucrative application of LLMs, the person who’s used it said.”

“GPT-5 is not only better at academic and competitive programming problems, but also at more practical programming tasks that real-life engineers might handle, like making changes in a large, complicated codebase full of old code, the person said.”

The anecdotal buzz is high relative to the competition, especially vs Anthropic:

“That nuance has been something OpenAI’s models have struggled with in the past, and is one reason why rival Anthropic has been able to keep its lead with many app-developer customers. But, as we’ve reported, OpenAI is more than aware of this issue and has been working in recent months to improve the coding capabilities of its models.”

“One person who has used GPT-5 says it performs better than Anthropic’s Claude Sonnet 4 in head-to-head comparisons they’ve tested. But that’s just one person’s opinion. (And Anthropic also has a more advanced model called Claude Opus 4.)”

Coding, though, is the near-term area of intense competition, as the near-term prize is the attention and habits of tens of millions of Developers globally:

“Whether or not OpenAI is able to automate harder coding tasks and win over the hearts of software developer customers has implications for both its business and that of its competitors. Cursor and other popular coding assistants are on pace to pay Anthropic hundreds of millions of dollars annually, or more, to use its Claude models to power their coding apps. That’s money that could be going to OpenAI.”

“We also previously reported how OpenAI’s leaders also view automated coding, especially of practical programming tasks, as a critical component of developing artificial general intelligence.”

And OpenAI’s AI Infrastructure providers, Nvidia and more recently Google amongst many others, are also beneficiaries, despite the Herculean challenges and investments:

“Overall, GPT-5’s strong performance seems like good news for OpenAI’s chip supplier Nvidia, firms building data centers, and any equity or debt investors who have wondered about the trajectory of AI development in the face of reports about periodically troubled AI model-development efforts at OpenAI, Google and other firms.”

A lot is of course not known about GPT-5 and OpenAI’s directions:

“We’re still not sure what GPT-5 actually is. It’s possible that the model is a kind of a router that directs queries to an LLM or to reasoning model, depending on the question, rather than using a single, newly developed model that can handle both types.”

“In that case, looking at GPT-5’s performance might not be able to help us answer the question of whether we’ll keep seeing significant improvements from scaling up compute and data during the pretraining process. In fact, we already know that earlier LLMs OpenAI wanted to eventually brand as GPT-5 weren’t good enough, and one of them was downgraded to GPT-4.5 and faded from relevance.”
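To make the ‘router’ possibility concrete, here is a minimal sketch in Python of how a front-end could send easy questions to a fast LLM and harder ones to a reasoning model. Everything in it is an assumption for illustration only: the function names, the keyword hints, and the threshold are hypothetical, and nothing here reflects how OpenAI actually builds GPT-5.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Route:
    name: str                       # which backend was picked
    handler: Callable[[str], str]   # function that would answer the query


def fast_llm(query: str) -> str:
    # Hypothetical stand-in for a quick, low-compute LLM call.
    return f"[fast] {query}"


def reasoning_model(query: str) -> str:
    # Hypothetical stand-in for a slower, 'thinking' reasoning model call.
    return f"[reasoning] {query}"


# Illustrative keywords only; a real router would likely use a learned classifier.
HARD_HINTS = ("debug", "refactor", "step by step", "optimize")


def estimate_difficulty(query: str) -> float:
    """Crude difficulty score in [0, 1] from keyword hits and query length."""
    text = query.lower()
    hint_score = sum(hint in text for hint in HARD_HINTS)
    length_score = min(len(text.split()) / 50, 1.0)
    return min(1.0, 0.4 * hint_score + 0.6 * length_score)


def route(query: str, threshold: float = 0.3) -> Route:
    """Pick the reasoning model only when the query looks hard enough."""
    if estimate_difficulty(query) >= threshold:
        return Route("reasoning", reasoning_model)
    return Route("fast", fast_llm)


if __name__ == "__main__":
    for q in (
        "How many r's are in the word strawberry?",
        "Refactor this legacy module and debug the failing tests",
    ):
        chosen = route(q)
        print(chosen.name, "->", chosen.handler(q))
```

In a setup like this, headline performance depends as much on the routing decision as on either underlying model, which is why GPT-5’s results alone might say little about pretraining scaling.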

But it’s clear that the milestones will track the roadmap chart above, focusing next on applications driven by AI Reasoning and Agents:

“It could be that most of the improvements are going to come from gains in reasoning models, not traditional LLMs, meaning they will happen in the post-training phase when human experts get involved to teach models new tricks.”

“Even if that’s true, many researchers say they’ve been expecting a slowdown in improvements in pretrained models for a while.”

And a lot will come from the newer AI Scaling innovations and vectors that are emerging in force:

“They say the real opportunity to improve AI models will come from reinforcement learning in the post-training phase. That involves “synthetic data,” a fancy term to describe how models produce lots of possible answers to hard questions and the human experts who guide them through those problems. (For more on RL and some of the more experimental approaches researchers have been trying, check out this newsletter.)”

And the company is already defining the next AI Milestones to look ahead to:

“For what it’s worth, OpenAI executives have told investors that they believe the company can reach “GPT-8” by using the current structures powering its models, more or less, according to an investor.”

And OpenAI’s peers, both here and abroad, most certainly will be racing along these routes as well.

And that is the Bigger Picture to keep in mind at this mid-point in the 2025 AI Tech Wave. Stay tuned.

(NOTE: The discussions here are for informational purposes only, and are not meant as investment advice at any time. Thanks for joining us here.)