AI: OpenAI GPT-5 tweaking 'Simplicity'. RTZ #812

It’s been almost a week since OpenAI’s long-awaited GPT-5 launch. And as expected, the reactions are coming in fast and furious from all quarters. While the reviews are generally positive on the model’s ‘Deep Thinking’ and AI Coding capabilities, the jury is still out on its regular, non-reasoning capabilities. And OpenAI has already made some important tweaks, including bringing back older models.

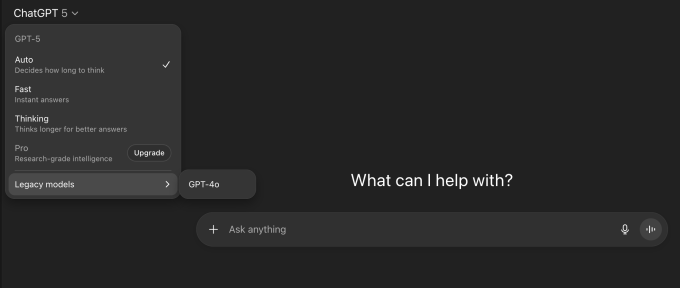

A core issue seems to be the simplified model picker. For its flagship mainstream user app, ChatGPT-5, OpenAI initially did away with the top-down model picker that made users navigate half a dozen or more OpenAI models of various types.

In its place, they put in a ‘router’ that decides which model to route to based on its reading of the user’s query.

It seems both consumers and businesses wanted a bit more choice between ‘fast results’, which means smaller, quicker models, and ‘thinking’, which means slower models that take their time (and more costly compute) to figure out a more ‘reasoned’ answer.

And that runs into the other major issue for OpenAI and other LLM AI companies: they all live in a supply-constrained AI compute world, despite the billions being invested to build more AI data centers. Those just take time (think months and years) to bring online.

So within a few days, OpenAI decided to change up the ‘simplified’ approach. As TechCrunch notes in “ChatGPT’s model picker is back, and it’s complicated”:

“When OpenAI launched GPT-5 last week, the company said the model would simplify the ChatGPT experience. OpenAI hoped GPT-5 would act as a sort of “one size fits all” AI model with a router that would automatically decide how to best answer user questions. The company said this unified approach would eliminate the need for users to navigate its model picker — a long, complicated menu of AI options that OpenAI CEO Sam Altman has publicly said he hates.”

“But it looks like GPT-5 is not the unified AI model OpenAI hoped it would be.”

So here is the new, new approach to GPT-5 on ChatGPT-5 in particular:

“Altman said in a post on X Tuesday that the company introduced new “Auto”, “Fast”, and “Thinking” settings for GPT-5 that all ChatGPT users can select from the model picker. The Auto setting seems to work like GPT-5’s model router that OpenAI initially announced; however, the company is also giving users options to circumnavigate it, allowing them to access fast and slow responding AI models directly.”

And GPT-4o is back as an option, a mini ‘New Coke/Coke Classic’ moment for OpenAI:

“Alongside GPT-5’s new modes, Altman said that paid users can once again access several legacy AI models — including GPT-4o, GPT-4.1, and o3 — which were deprecated just last week. GPT-4o is now in the model picker by default, while other AI models can be added from ChatGPT’s settings.”

The other issue that has come up, and that OpenAI is addressing in real time, is ‘model personality’:

“We are working on an update to GPT-5’s personality which should feel warmer than the current personality but not as annoying (to most users) as GPT-4o,” Altman wrote in the post on X. “However, one learning for us from the past few days is we really just need to get to a world with more per-user customization of model personality.”

“ChatGPT’s model picker now seems to be as complicated as ever, suggesting that GPT-5’s model router has not universally satisfied users as the company hoped. The expectations for GPT-5 were sky high, with many hoping that OpenAI would push the limits of AI models like it had with the launch of GPT-4. However, GPT-5’s rollout has been rougher than expected.”

So there are teething problems in getting the tweaks right, both front and back: on the mainstream, user-focused ChatGPT-5 front end, and in the GPT-5 APIs that developers and businesses use to access OpenAI’s models.

This all matters because this time, GPT-5 is a collection of models, unified from a UI/UX point of view, that automagically routes mainstream queries to the best model to deliver relevant and, hopefully, more accurate answers. And as we see above, there is a lot to optimize here, in terms of both user costs and compute availability.

It’s a set of issues that OpenAI’s peers are iterating on quickly as well, all being assessed in real time by the user community at large.

SemiAnalysis sums up OpenAI’s challenge with GPT-5 well here:

“To many power users (Pro and Plus), GPT5 was a disappointing release. But with closer inspection, the real release is focused on the vast majority of ChatGPT’s users, which is the 700m+ free userbase that is growing rapidly. Power users should be disappointed; this release wasn’t for them. The real consumer opportunity for OpenAI lies with the largest user base, and getting the unmonetized users who currently use ChatGPT infrequently in their day-to-day to indirectly pay is their largest opportunity.”

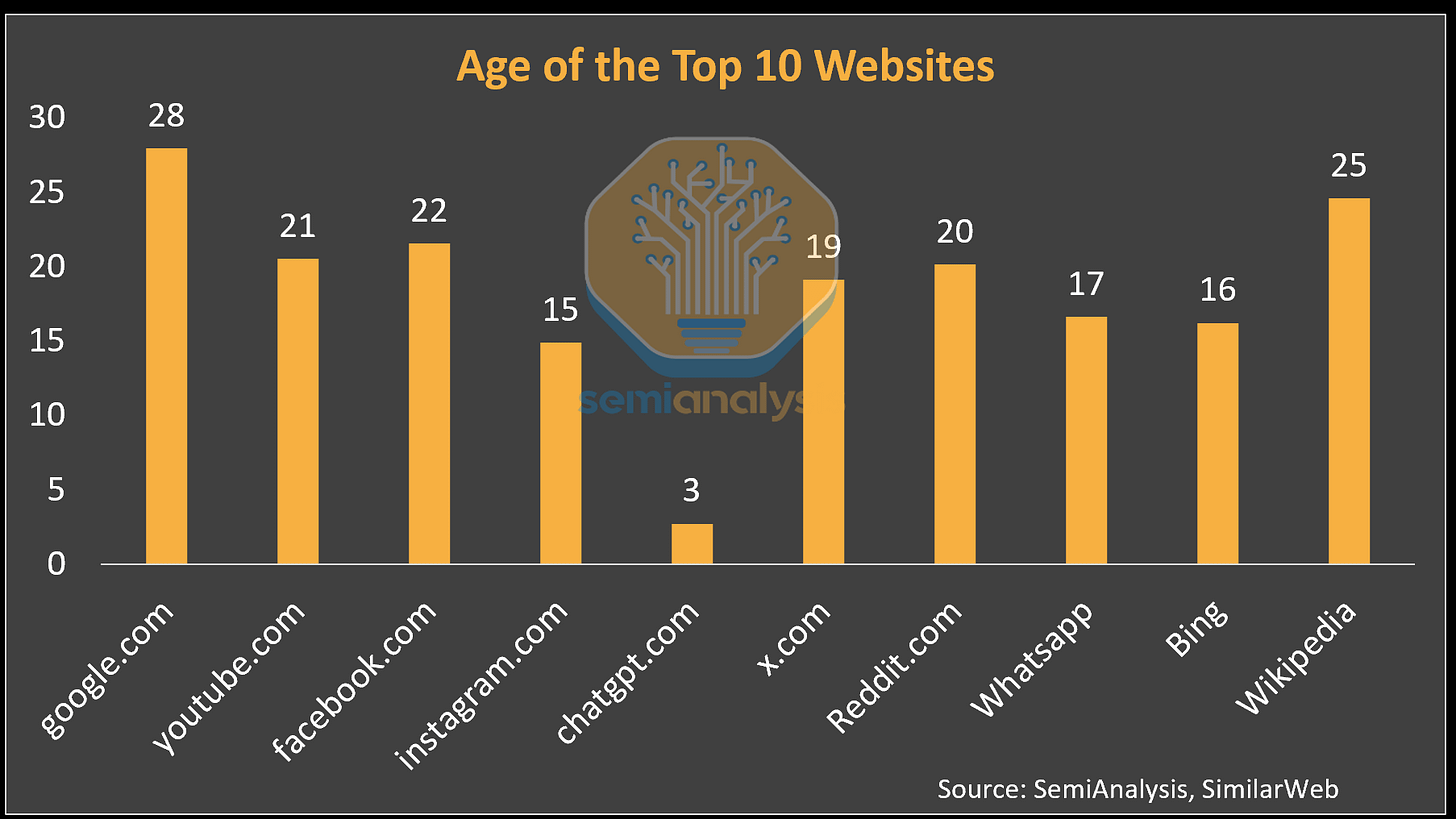

“Analysts focused on the model capabilities are missing the much larger context of network effects that ChatGPT is gaining quickly. In November of 2023, ChatGPT wasn’t even in the top 100 websites; now it is number 5. Larger than X/Twitter, Reddit, Whatsapp, Wikipedia, and quickly approaching Instagram, Facebook, Youtube and Google. On the top 10 list, every single property is much older than ChatGPT.com, and the sheer number of unmonetized users is staggering.”

“But that changes with GPT-5. OpenAI is laying the groundwork to monetize one of the largest and fastest-growing web properties in the world, and it all begins with the router.”

That sums up OpenAI’s long game for its core business well.

Finally, Axios offers a useful overall take on current sentiment around the GPT-5 launch in ‘OpenAI’s big GPT-5 launch gets bumpy’:

“OpenAI’s GPT-5 has landed with a thud despite strong benchmark scores and praise from early testers.”

“Why it matters: A lot rides on every launch of a major new large language model, since training these programs is a massive endeavor that can require months or years and billions of dollars.”

Part of it, of course, is the high expectations that were set pre-launch:

“Driving the news: When OpenAI released GPT-5 last week, CEO Sam Altman promised the new model would give even free users of ChatGPT access to the equivalent of Ph.D.-level intelligence.”

“But users quickly complained that the new model was struggling with basic tasks and lamented that they couldn’t just stick with older models, such as GPT-4o.”

And the world online likes to run with narratives/memes of the moment:

“Unhappy ChatGPTers took to social media, posting examples of GPT-5 making simple mistakes in math and geography and mocking the new model.”

“Altman went into damage-control mode, acknowledging some early glitches, restoring the availability of earlier models and promising to increase access to the higher-level “reasoning” mode that allows GPT-5 to produce its best results.”

Axios does a good job laying out the potential problems:

“Between the lines: There are several likely reasons for the underwhelming reaction to GPT-5.”

“GPT-5 isn’t one model, but a collection of models, including one that answers very quickly and others that use “reasoning” — taking additional computing time to answer better. The non-reasoning model doesn’t appear to be nearly as much of a leap as the reasoning part.”

“As Altman explained in a series of posts, early glitches in the model’s rollout meant some queries weren’t being properly routed to the reasoning model.”

“GPT-5 appears to shine brightest at coding — particularly at taking an idea and turning it into a website or app. That’s not a use case that generates examples tailor-made to go viral the way previous OpenAI releases, like its recent improved image generator, did.”

All the links and resources are worth perusing for those wanting additional color and nuance. And most of these issues could be viewed as short-term teething problems, to be ironed out shortly.

The issue is the constant raising and lowering of expectations in all things AI:

“Zoom out: GPT-5 took a lot longer to arrive than OpenAI originally expected and promised. In the meantime the company’s leaders — like their competitors — kept upping the ante on just how golden the AI age is going to be.”

“The more they have promised the moon, the greater the public disappointment when a milestone release proves more down-to-earth.”

But the good thing is that OpenAI is trying to be responsive in real time, from the top down:

“What they’re saying: In posts on X and in a Reddit AMA on Friday, Altman promised that users’ complaints were being addressed.”

“The autoswitcher broke and was out of commission for a chunk of the day, and the result was GPT-5 seemed way dumber,” Altman said on Friday. “Also, we are making some interventions to how the decision boundary works that should help you get the right model more often.”

“Altman pledged to increase access to reasoning capabilities and to restore the option of using older models.”

“OpenAI also plans to change ChatGPT’s interface to make it clearer which model is being used in any given response.”

Part of the issue is the rate of change, as users get comfortable with how to get things done with the technology ‘as is’. That often clashes with the technology ‘as it will be’, once it’s improved in any way.

“Altman also acknowledged in a later post recent stories about people becoming overly attached to AI models and said the company has been studying this trend over the past year.”

“It feels different and stronger than the kinds of attachment people have had to previous kinds of technology,” he said, adding that “if a user is in a mentally fragile state and prone to delusion, we do not want the AI to reinforce that.”

Of course, the folks with a more ‘glass half empty’ view of AI are going to take a short-term victory lap.

“Meanwhile, critics seized on the disappointments as vindication for their long-standing skepticism that generative AI is a precursor to greater-than-human intelligence.”

“My work here is truly done,” longtime genAI critic Gary Marcus wrote on X. “Nobody with intellectual integrity can still believe that pure scaling will get us to AGI.”

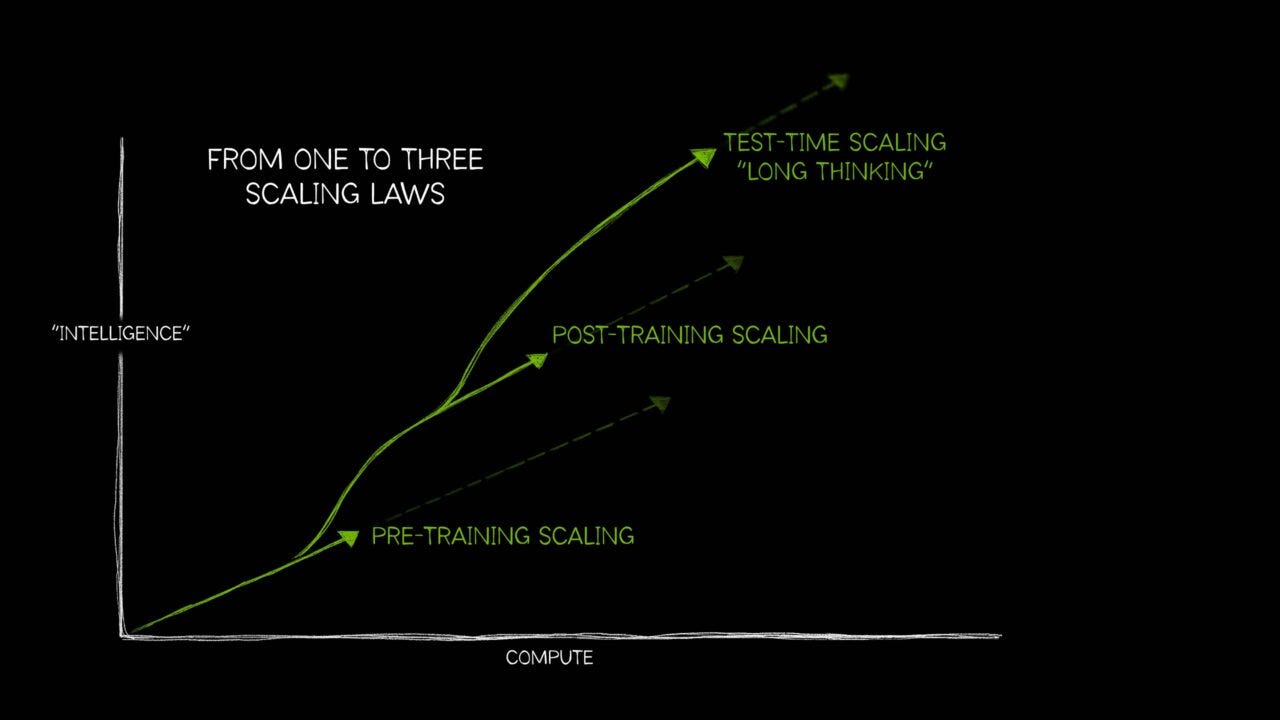

The core intellectual debate is over how far AI can scale, something discussed here at length.

“Yes, but: OpenAI’s leaders argue that their scaling strategy is still reaping big dividends.”

“Our scaling laws still hold,” the company’s COO, Brad Lightcap, told Big Technology’s Alex Kantrowitz.

“Empirically, there’s no reason to believe that there’s any kind of diminishing return on pre-training. And on post-training” — the technique that supports models’ newer “reasoning” capabilities — “we’re really just starting to scratch the surface of that new paradigm.”

It’s too early to get the real, long-term picture on this or any new major launch by AI companies.

Especially given the complexity and opacity of the underlying LLM AIs. But spot checks along the way do help piece together a shifting picture in this AI Tech Wave. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)