AI: OpenAI shows Growth Scaling with AI Compute. RTZ #972 (OpenAI's 'AOL Moment', part 2)

In the iconic words from ‘Jaws’ (1975), ‘You’re gonna need a bigger boat’.

That’s been the non-stop message from the LLM AI companies over the last few years. The refrain has been led by OpenAI in particular, whose founder/CEO Sam Altman has been saying it repeatedly for a few years now. Replace ‘bigger boat’ with ‘bigger compute’.

As I highlighted in “OpenAI’s ‘AOL Moment’” this Sunday (part 1), OpenAI’s trajectory with growth and infrastructure scaling rhymes with America Online’s Internet Wave trajectory in the nineties. And there are notable takeaways that can be helpful to better understand the OpenAI challenges and opportunities. That’s what I’d like to discuss in ‘part 2’ of this discussion.

OpenAI just laid out its extraordinary journey in the growth of its financial metrics today, as it kicks off the fourth year since its ‘ChatGPT Moment’ on 11/30/22. The piece is meant to lay out the financial rewards, and the IMPERATIVES, of extraordinary amounts of AI Scaling.

Mostly accepted by the investment world today as hundreds of billions, nay, trillions of dollars of scaling AI Compute Infrastructure and Power investments.

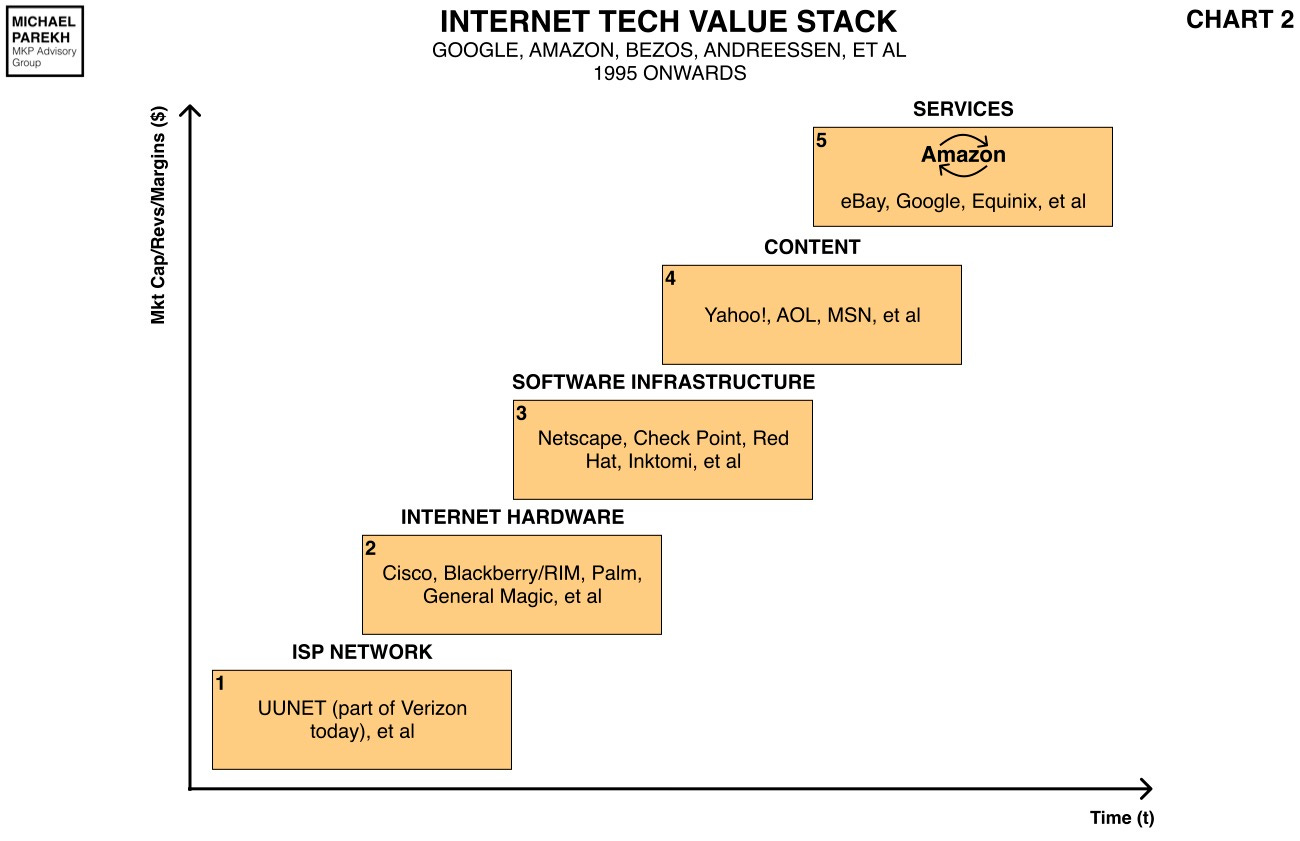

Driven by immense variable costs that grow with both mainstream users and their usage of AI. WAY BEFORE tangible evidence of financial rewards like revenues, margins and profits. The left axis in my staircase AI Tech Stack below.

And it’s worth highlighting some of OpenAI’s metrics. Followed by the larger picture against history. To understand what may lie ahead. Heads AND/or Tails.

OpenAI CFO Sarah Friar (also a Goldman Sachs alum) makes the argument for Scale well in “A business that scales with the value of intelligence”:

“We launched ChatGPT as a research preview to understand what would happen if we put frontier intelligence directly in people’s hands.”

“What followed was broad adoption and deep usage on a scale that no one predicted.”

She then goes into the consumer and commercial uses for ChatGPT discovered to date in some pithy anecdotes. Across OpenAI’s roadmap to AGI through AI Reasoning, Agents and more:

“More than experimenting with AI, people folded ChatGPT into their lives. Students started using it to untangle homework they were stuck on late at night. Parents started using it to plan trips and manage budgets. Writers used it to break through blank pages. More and more, people used it to understand their lives. People used ChatGPT to help make sense of health symptoms, prepare for doctor visits, and navigate complex decisions. People used it to think more clearly when they were tired, stressed, or unsure.”

“Then they brought that leverage to work.”

“At first, it showed up in small ways. A draft refined before a meeting. A spreadsheet checked one more time. A customer email rewritten to land the right tone. Very quickly, it became part of daily workflows. Engineers reasoned through code faster. Marketers shaped campaigns with sharper insight. Finance teams modeled scenarios with greater clarity. Managers prepared for hard conversations with better context.”

“What began as a tool for curiosity became infrastructure that helps people create more, decide faster, and operate at a higher level.”

Then a step back to how this trajectory continues to define and drive OpenAI:

“That transition sits at the heart of how we build OpenAI. We are a research and deployment company. Our job is to close the distance between where intelligence is advancing and how individuals, companies, and countries actually adopt and use it.”

“As ChatGPT became a tool people rely on every day to get real work done, we followed a simple and enduring principle: our business model should scale with the value intelligence delivers.”

Going on to the flexibility of its evolving business models spanning subscriptions, transactions and soon ads. The three rivers of online success across tech waves for decades.

“We have applied that principle deliberately. As people demanded more capability and reliability, we introduced consumer subscriptions. As AI moved into teams and workflows, we created workplace subscriptions and added usage-based pricing so costs scale with real work getting done. We also built a platform business, enabling developers and enterprises to embed intelligence through our APIs, where spend grows in direct proportion to outcomes delivered.”

“More recently, we have applied the same principle to commerce. People come to ChatGPT not just to ask questions, but to decide what to do next. What to buy. Where to go. Which option to choose. Helping people move from exploration to action creates value for users and for the partners who serve them. Advertising follows the same arc. When people are close to a decision, relevant options have real value, as long as they are clearly labeled and genuinely useful.”

And then an on-ramp to the user query flywheel rewarding the above approach. While feeding new data into the underlying models to make them better with each user prompt:

And of course drive the financial flywheels as well:

“Across every path, we apply the same standard. Monetization should feel native to the experience. If it does not add value, it does not belong.”

“Both our Weekly Active User (WAU) and Daily Active User (DAU) figures continue to produce all-time highs. This growth is driven by a flywheel across compute, frontier research, products, and monetization. Investment in compute powers leading-edge research and step-change gains in model capability. Stronger models unlock better products and broader adoption of the OpenAI platform. Adoption drives revenue, and revenue funds the next wave of compute and innovation. The cycle compounds.”

Note no hard numbers above, beyond the 900 million weekly users of ChatGPT that OpenAI has cited for a while now. With about 5% being paid subscribers at $20 a month and above. Soon to be supplemented with a Netflix-style $8/month lower-priced subscription, to attract more mainstream users, supported by some ads.
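As a back-of-the-envelope check on those subscriber figures, here is a minimal sketch of the arithmetic. It assumes, purely for illustration, that every paid subscriber sits on the $20/month tier, so the result is a floor and not an estimate of actual subscription revenue. The variable names are mine, not OpenAI’s.

```python
# Rough figures cited in the text above; treated here as round numbers.
weekly_users = 900_000_000      # ~900 million weekly ChatGPT users
paid_share_percent = 5          # "about 5%" are paid subscribers
monthly_price_usd = 20          # ASSUMPTION: everyone pays the lowest $20 tier

# Integer arithmetic keeps the result exact.
paid_subscribers = weekly_users * paid_share_percent // 100
annual_floor_usd = paid_subscribers * monthly_price_usd * 12

print(f"Paid subscribers: {paid_subscribers:,}")
print(f"Annualized subscription floor: ${annual_floor_usd / 1e9:.1f}B")
```

Under those assumptions, roughly 45 million paid subscribers work out to a floor of about $10.8 billion a year, before counting any higher-priced tiers, API usage, or the planned lower-priced subscription.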

But the growth to date over three years of ChatGPT is worth pausing over:

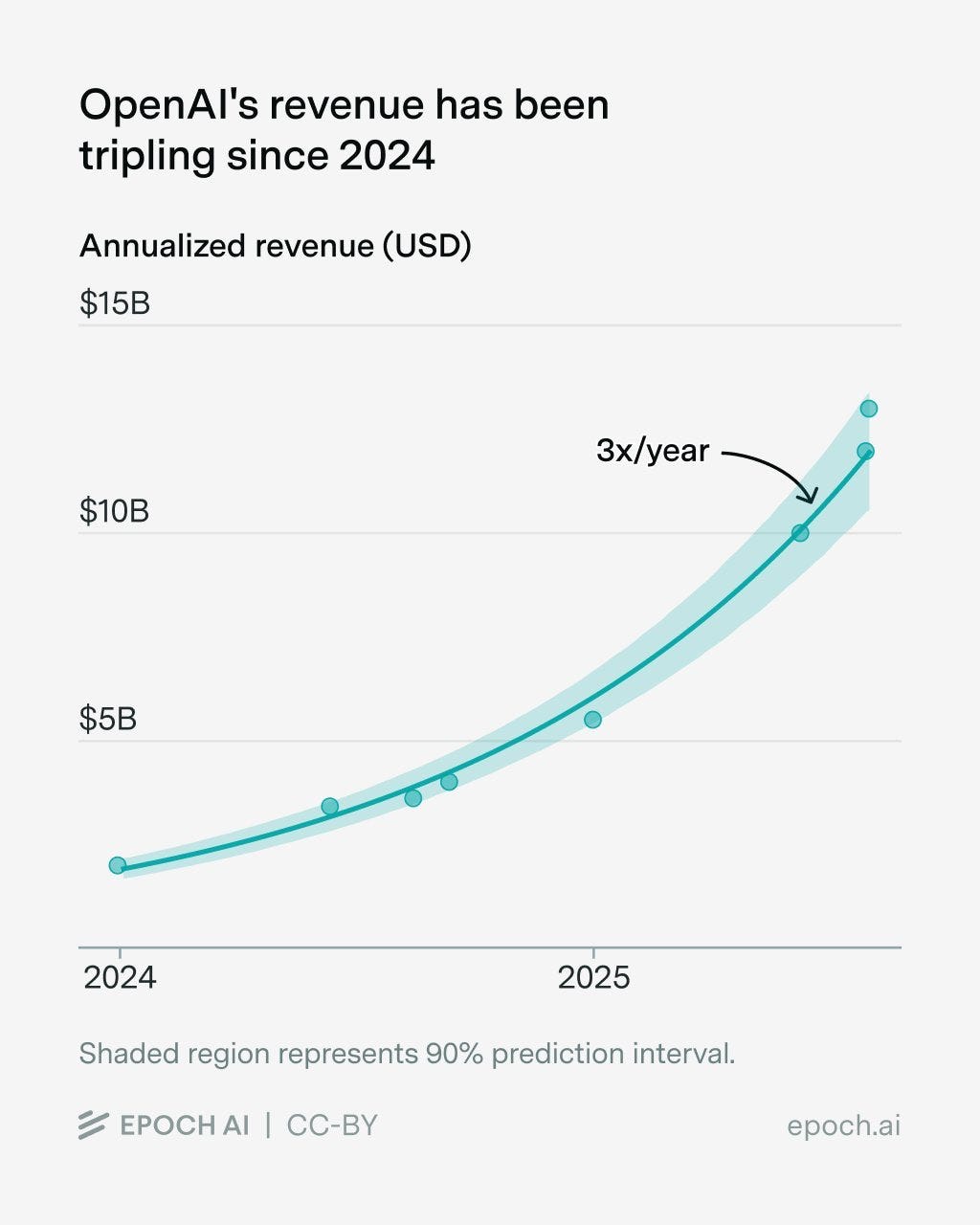

“Looking back on the past three years, our ability to serve customers—as measured by revenue—directly tracks available compute: Compute grew 3X year over year or 9.5X from 2023 to 2025: 0.2 GW in 2023, 0.6 GW in 2024, and ~1.9 GW in 2025. While revenue followed the same curve growing 3X year over year, or 10X from 2023 to 2025: $2B ARR in 2023, $6B in 2024, and $20B+ in 2025. This is never-before-seen growth at such scale. And we firmly believe that more compute in these periods would have led to faster customer adoption and monetization.”
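The multiples in that quote can be checked directly from the six figures it gives. The sketch below is my own arithmetic, not OpenAI’s. It treats ‘~1.9 GW’ as exactly 1.9 and ‘$20B+’ as exactly 20, so the 2025 results are approximate.

```python
# Figures as quoted: year -> (compute in GW, revenue in $B ARR).
# ASSUMPTION: "~1.9 GW" is taken as 1.9 and "$20B+" as 20.0.
figures = {2023: (0.2, 2.0), 2024: (0.6, 6.0), 2025: (1.9, 20.0)}

def growth_multiples(series):
    """Return the multiple between each consecutive pair of values."""
    return [later / earlier for earlier, later in zip(series, series[1:])]

years = sorted(figures)
compute = [figures[y][0] for y in years]
revenue = [figures[y][1] for y in years]

compute_yoy = growth_multiples(compute)      # 2023->2024, 2024->2025
revenue_yoy = growth_multiples(revenue)
compute_total = compute[-1] / compute[0]     # 2023->2025
revenue_total = revenue[-1] / revenue[0]
revenue_per_gw = [r / c for r, c in zip(revenue, compute)]

print("Compute YoY:", [f"{m:.1f}X" for m in compute_yoy], f"total {compute_total:.1f}X")
print("Revenue YoY:", [f"{m:.1f}X" for m in revenue_yoy], f"total {revenue_total:.1f}X")
print("Revenue per GW ($B):", [f"{v:.1f}" for v in revenue_per_gw])
```

On these inputs the quoted 9.5X and 10X totals hold up, with each annual step landing a little above or right at 3X. The ratio of revenue to compute also stays at roughly $10 billion of ARR per gigawatt in each of the three years, which is the ‘directly tracks’ claim expressed as a single number.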

What’s new above is the massive amount of Powered AI Data Center capacity behind the Compute needed to scale the metrics and the financials. Super-sized almost annually.

“Compute is the scarcest resource in AI. Three years ago, we relied on a single compute provider. Today, we are working with providers across a diversified ecosystem. That shift gives us resilience and, critically, compute certainty. We can plan, finance, and deploy capacity with confidence in a market where access to compute defines who can scale.”

“This turns compute from a fixed constraint into an actively managed portfolio. We train frontier models on premium hardware when capability matters most. We serve high-volume workloads on lower-cost infrastructure when efficiency matters more than raw scale. Latency drops. Throughput improves. And we can deliver useful intelligence at costs measured in cents per million tokens. That is what makes AI viable for everyday workflows, not just elite use cases.”

It lays out the company’s position from Box 3 to Holy Grail Box 6 for AI Applications/Services in the AI Tech Stack above. All on a growing mountain of AI Compute that excels at unimaginable amounts of training and inference matrix multiplication by oodles of teraflops.

Probabilistic computing vs the deterministic computing of the last 70+ years. And hybrids thereof going forward as LLM AIs evolve into World Models and beyond. To whatever and whenever the current ‘AGI’ quest leads:

“On top of this compute layer sits a product platform that spans text, images, voice, code, and APIs. Individuals and organizations use it to think, create, and operate more effectively. The next phase is agents and workflow automation that run continuously, carry context over time, and take action across tools. For individuals, that means AI that manages projects, coordinates plans, and executes tasks. For organizations, it becomes an operating layer for knowledge work.”

Note that the ‘operating layer for knowledge work’ above itself rhymes with Microsoft’s Bill Gates in a different tech wave, when he was focusing on ‘Knowledge Workers’ for Microsoft DOS/Windows and its suite of Microsoft Office Applications. That went on to make him one of the then richest people on Earth.

All centered around changing mainstream user habits both at work and at home. And everywhere in between. With all its risks and opportunities, the toothpaste being out of the AI tube.

“As these systems move from novelty to habit, usage becomes deeper and more persistent. That predictability strengthens the economics of the platform and supports long-term investment.”

“The business model closes the loop. We began with subscriptions. Today we operate a multi-tier system that includes consumer and team subscriptions, a free ad- and commerce-supported tier that drives broad adoption, and usage-based APIs tied to production workloads. Where this goes next will extend beyond what we already sell. As intelligence moves into scientific research, drug discovery, energy systems, and financial modeling, new economic models will emerge. Licensing, IP-based agreements, and outcome-based pricing will share in the value created. That is how the internet evolved. Intelligence will follow the same path.”

Implicit here is an acknowledgement of the excruciatingly exquisite Execution required.

As OpenAI itself grew from 500 people when it almost imploded over a Board Governance Scuffle three years ago to over 4000 today. And growing. With two of the four senior founders remaining at OpenAI.

“This system requires discipline. Securing world-class compute requires commitments made years in advance, and growth does not move in a perfectly smooth line. At times, capacity leads usage. At other times, usage leads capacity. We manage that by keeping the balance sheet light, partnering rather than owning, and structuring contracts with flexibility across providers and hardware types. Capital is committed in tranches against real demand signals. That lets us lean forward when growth is there without locking in more of the future than the market has earned.”

This is of course where all the ‘circular deals’ adding up to over a trillion and a half for OpenAI alone come in. Along with all the concurrent admiration and skepticism generated. Yes, two opposite things can exist at the same time. Heads AND/or Tails.

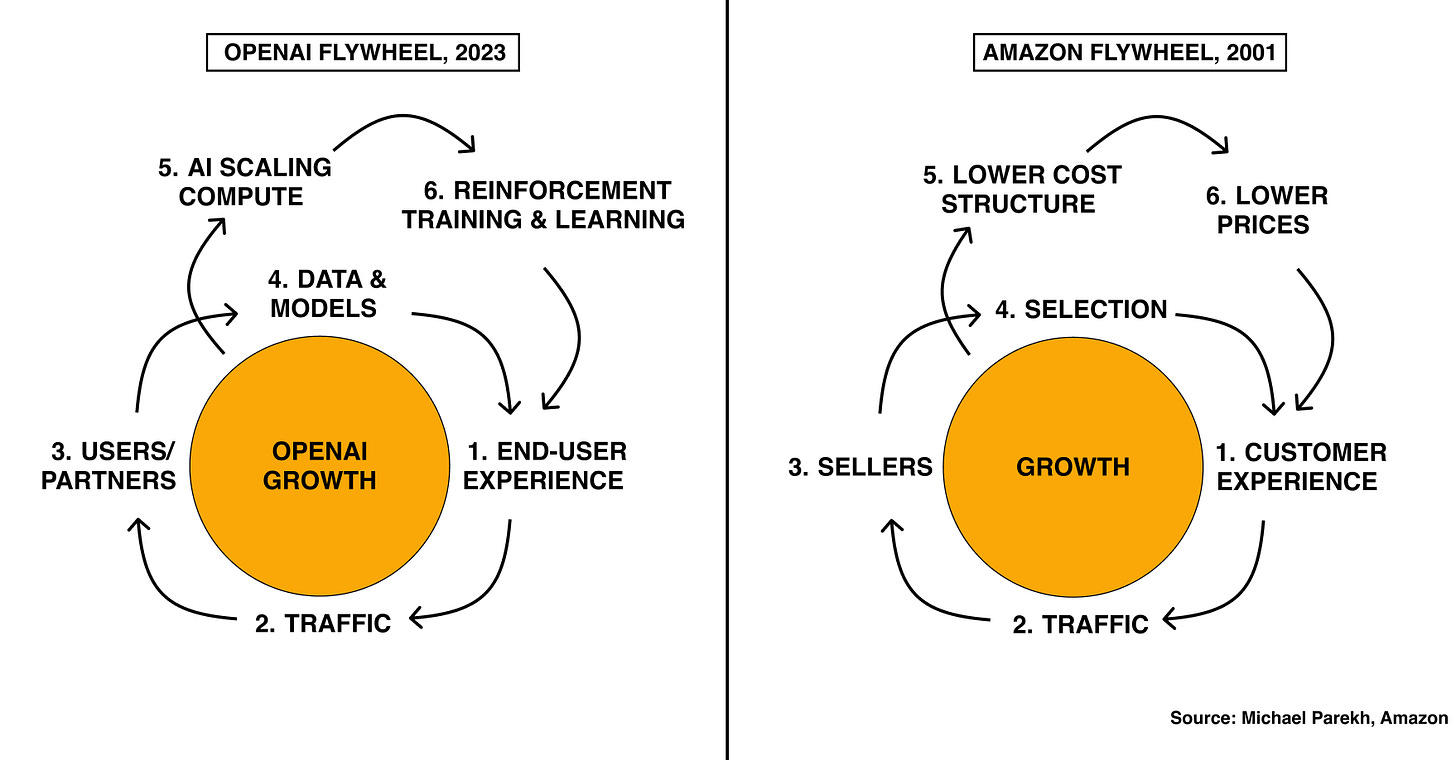

But as I described it in 2023, OpenAI continues to need to accelerate ever faster around these loops. And grow AI Compute (step 5 below), as users and usage grow around the flywheel (steps 1-4 and 6) below. As compared to Amazon’s original flywheel from 25 years ago. That is what OpenAI CFO Sarah Friar is detailing above.

Rhyming historically against Amazon’s flywheel loop from the nineties as well.

OpenAI’s CFO goes on to highlight the key element for the flywheel above to sustainably stick and scale, ‘practical adoption’:

“That discipline sets up our focus for 2026: practical adoption. The priority is closing the gap between what AI now makes possible and how people, companies, and countries are using it day to day. The opportunity is large and immediate, especially in health, science, and enterprise, where better intelligence translates directly into better outcomes.”

I’ve discussed OpenAI’s initiatives and opportunities in Healthcare alone, just a few days ago.

CFO Friar closes as follows:

“Infrastructure expands what we can deliver. Innovation expands what intelligence can do. Adoption expands who can use it. Revenue funds the next leap. This is how intelligence scales and becomes a foundation for the global economy.”

Not to mention an ‘AI Compute’ driven flywheel to create a business as large as Amazon did over the last quarter century. If not larger. Over the next quarter century.

The whole piece is worth a closer read. On the method in Sam Altman’s seeming AI ‘madness’.

As mentioned above, this Sunday I penned a piece “OpenAI’s ‘AOL Moment’”.

It wasn’t meant to be a positive or negative frame on OpenAI’s success to date. Nor to put a thumb on the probabilities of various opportunities ahead.

It was rooted in my seat at the table as the Internet Analyst at Goldman Sachs covering America Online from its earliest days as a public company in the mid 1990s. And through its apex when it merged with Time Warner, the largest media company in the world, in 2000. At pre-merger peak valuations approaching half a trillion in today’s dollars. Close to OpenAI’s private market valuation today at the last mark.

The historical rhyme that made AOL a big winner for over the first half-decade of the Internet wave below.

Despite not outlasting some of the other winners in the holy grail Box 6. Amazon and Google to name a few.

A key element through that extraordinary journey was the operational and financial growth of its users in a market that was going mainstream for online access through dial-up modems. Scaling that up was the clearest path to taming the rising variable costs of supporting more mainstream users and their usage. Just like for OpenAI today with AI Compute.

Building the most scaled-up network of dial-up modems was the biggest driver and imperative for AOL’s early years of mainstream user growth. Built with a little help from Internet Service Provider (ISP) UUNET, which Goldman Sachs helped via IPO and secondary offerings, with yours truly as the Internet Research analyst. (Just like OpenAI is leveraging Oracle and others today to build out their AI Compute at Scale.)

Side note as another historical rhyme, Microsoft ended up taking an early equity interest in UUNET to build out its own competing MSN service vs AOL. Again rhyming with Microsoft’s early investment in OpenAI.

And the numbers through that journey for AOL were breathtaking. In users, revenues and costs. Growing AOL to capture over 30% of the available market of mainstream online users in that tech wave.

It was an unusual time where both bulls and bears could make concurrent, strident arguments on both sides of AOL’s business model and prospects.

OpenAI today is going through a similar early trajectory and debate over its model and prospects. In users, applications, revenues, and costs.

The last due to a variable cost model that requires OpenAI to execute unrelenting ‘circular’ deals to drive growth in AI Infrastructure, Data Centers, Power and more, to build unimagined amounts of AI Compute. To then generate a flood of AI Intelligence tokens. Going in and out of the Data Box no. 4 above in the AI Tech Stack.

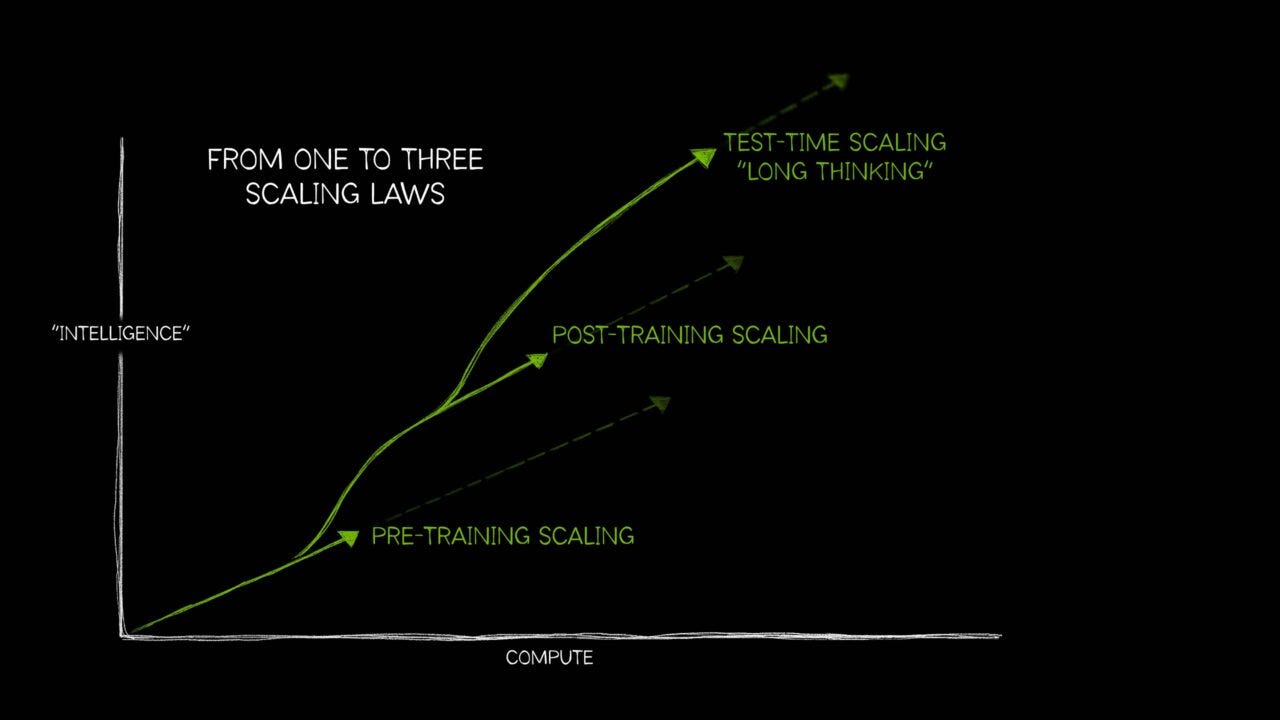

I’ve written about AI Scaling, and how it is spinning up into new curves of scale above the AI Data Centers of just three years ago.

And that scaling will take new shapes not yet fully understood by the markets. Many investors currently think of it as just the separate training and inference loops from a couple of years ago (the loops in the AI Tech Stack chart above). Those distinctions are increasingly blurring and blending.

OpenAI is in the early part of its execution journey. And even for a half trillion dollar startup over a decade old now, it’s a journey still just ramping up.

And while its trajectory rhymes with AOL from almost three decades ago, its destiny is not yet written in this AI Tech Wave.

‘Nothing is Written’ as the iconic line goes in ‘Lawrence of Arabia’, 1962. For now, it applies to OpenAI as well. Despite historical rhymes with America Online.

Heads AND/or Tails. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)