AI: OpenAI's Infrastructure headwinds are par for the course. RTZ #792

It’s a tech truism that things always take longer to execute and deploy even when the project has ample resources and focus behind it. It’s something I’ve repeated often in these pages. It applies even in these extraordinary times of ‘flashing green light’ enthusiasm for all things AI in this AI Tech Wave.

The topic comes up as recent reports highlight how OpenAI’s ambitious $100 Billion+ AI Infrastructure build-out, dubbed ‘Stargate’, is taking longer than expected to deploy. This is despite deep-pocketed partners like SoftBank, led by founder/CEO Masayoshi Son, and neocloud AI data center partners like Oracle, CoreWeave, Crusoe, and others.

OpenAI also has ample access to the latest Blackwell AI GPUs and infrastructure from core partners like Nvidia.

Axios lays out the headwinds in “OpenAI’s data center ambitions collide with reality”:

“OpenAI is finding that financing and building the massive data centers it needs to meet its ambitions is easier said than done.”

“Why it matters: There’s no limit on AI builders’ hunger for computing capacity.”

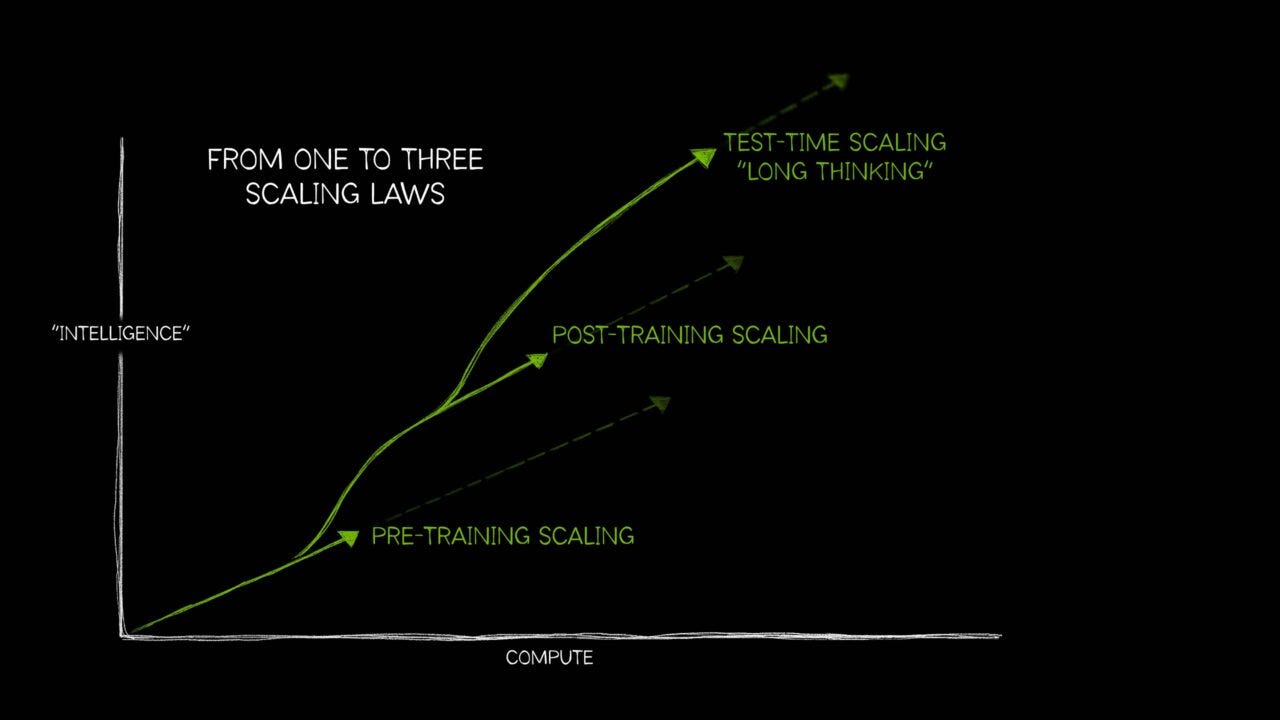

“Many advances in generative AI have come from dramatically boosting the computing power applied to model training, while new approaches and increased adoption mean that running those models demands more and more data centers, too.”

“Driving the news: The Wall Street Journal reported Monday that OpenAI has run into conflicts and stumbles in its relationship with SoftBank, a key partner in its effort to dramatically scale up its access to computing capacity.”

“The companies pledged in January that they would immediately invest $100 billion.”

“But no specific deals have yet been inked and near-term ambitions have been scaled back, with this year likely to yield only work on a single small U.S. data center, likely in Ohio, per the WSJ.”

“The Journal said other differences have cropped up in the six months since OpenAI CEO Sam Altman, SoftBank CEO Masayoshi Son and Oracle Chairman Larry Ellison announced their plans with President Trump in the Roosevelt Room of the White House.”

But there is progress despite the headwinds framing, particularly with Oracle’s help:

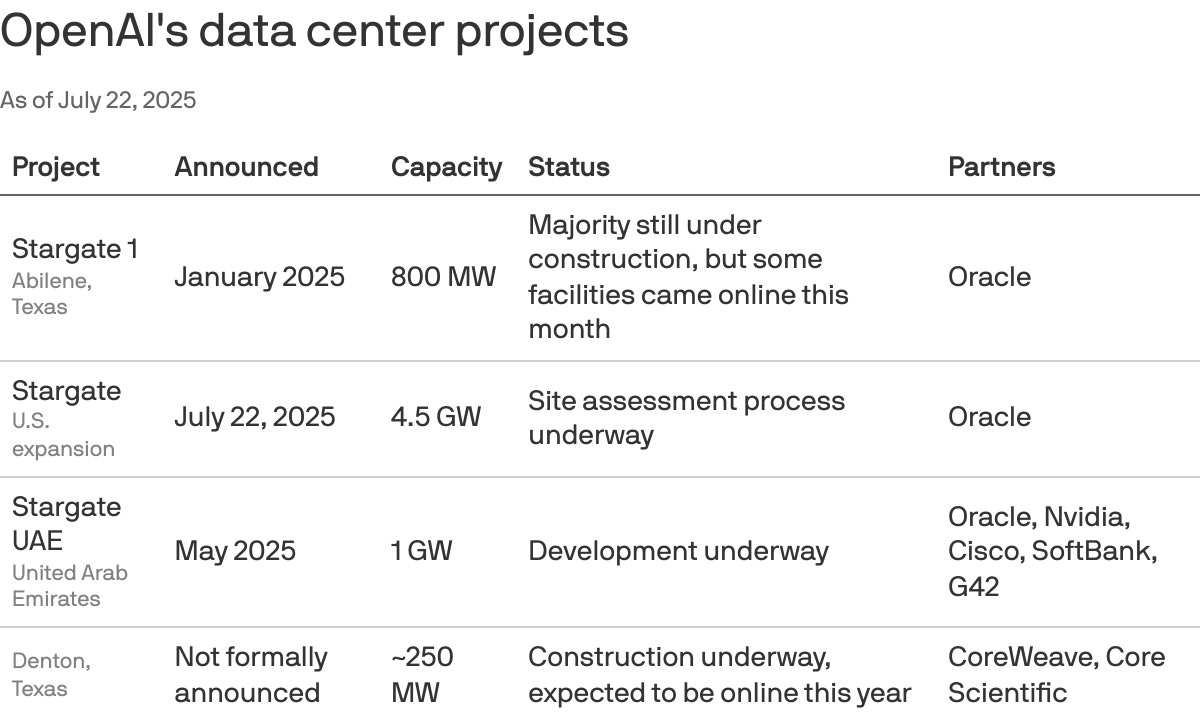

“Yes, but: OpenAI announced Tuesday an expansion of its work with Oracle that will see the pair build a further 4.5 gigawatts of capacity on top of an 800-megawatt project already under development in Abilene, Texas.”

“‘With this deal, we expect we will exceed the $500 billion commitment we announced at the White House 6 months ago; we are now working to expand that with Oracle, SoftBank, Microsoft, and others,’ Altman told OpenAI employees Monday in a memo seen by Axios.”

Part of the headwinds seems to stem from current geopolitical trade and tariff realities weighing on the global supply chain:

“What they’re saying: Altman acknowledged in the employee memo that the company’s thirst for computing is ‘starting to strain the supply chain’ and will ‘require some real creativity.’”

And the recent reshaping of OpenAI’s iconic partnership with Microsoft:

“Zoom in: OpenAI, which once relied solely on partner and investor Microsoft, has been partnering with an array of different companies and governments to get more processing power.”

“Its OpenAI for Countries project, announced in May, aims to bring various nations into the mix as partners. It signed its first such deal in May with the United Arab Emirates.”

“As part of OpenAI for Countries, partners will also agree to invest in U.S. projects — helping the company fund yet more data centers here.”

“OpenAI’s chief strategy officer Jason Kwon traveled across Asia for three weeks meeting with governments and potential investors in India, Singapore, Korea and other countries, though no additional deals have yet been announced.”

“OpenAI is even turning to rival Google for compute capacity.”

I discussed the significance of that last point separately.

Key here is access to Nvidia-driven AI Compute. And on that front, OpenAI is on an impressive scaling plan relative to peers like Meta, Elon’s X/xAI (Grok), and others.

“Altman reassured employees in his memo that additional capacity is coming sooner rather than later.”

“He noted that the company expects to have the equivalent of 2 million Nvidia A100 processors available for use by the end of August and then roughly double that capacity by the end of the year.”

Part of the issue seems to be what exactly the ‘Stargate’ name covers:

“Between the lines: It’s not easy to keep tabs on all the different data center projects OpenAI has announced in recent months.”

“The company has used the Stargate name to describe a number of next-generation data center efforts, but various projects under that banner involve different partners.”

“For example, its Middle East project is dubbed Stargate UAE and involves Oracle, Nvidia, Cisco, SoftBank and G42, a Middle East-based AI startup backed by Microsoft and others.”

“The new project announced Tuesday is also dubbed Stargate, though SoftBank (which actually registered the Stargate trademark) isn’t a part of the effort.”

And of course, OpenAI is not alone in this race towards super-sized AI Data Centers:

“The big picture: OpenAI isn’t the only company with big needs.”

“Global data center capacity is seen growing by 23% per year through 2030, according to a Morgan Stanley report.”
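For a rough sense of scale, here is the compounding arithmetic behind that 23% figure. This is a back-of-envelope sketch, not Morgan Stanley’s own math: it assumes (my assumption, since the report’s baseline year isn’t given here) five years of growth from 2025 through 2030, and C is just a hypothetical label for global capacity in a given year.

$$
C_{2030} \approx C_{2025} \times (1 + 0.23)^{5} \approx 2.8 \times C_{2025}
$$

In other words, at that pace global data center capacity would nearly triple by 2030.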

“Much of that growth is being spurred by a handful of big players, including Meta, Google, Microsoft and xAI.”

“Elon Musk said Tuesday that his xAI company has 230,000 GPUs, including 30,000 of Nvidia’s GB200, up and running for training Grok in its first Colossus supercluster.”

“Musk said the first batch of a planned 550,000 high-end Nvidia chips, also for training models, will come online in a few weeks. xAI relies on cloud providers to handle running Grok.”

And others are hot on the same trail:

“Anthropic, meanwhile, is acknowledging that meeting its data center needs will require the company to show more flexibility in choosing its partners, including taking money from Middle East governments.”

“‘Unfortunately, I think “No bad person should ever benefit from our success” is a pretty difficult principle to run a business on,’ Amodei said in a memo to staff, per Wired.”

So OpenAI’s ‘headwinds’ are the same ones facing the industry at large, from infrastructure to AI Applications:

“The bottom line: All the companies chasing AI with superhuman capabilities say they will need ever more money, more electricity and more processing power.”

“We must continue to get more ambitious about compute; we want to bring lots of AI to lots of people,” Altman said in his memo. “It’s very clear to me how powerful it’s going to be to have AI working for you all of the time and helping you accomplish your goals in all sorts of ways.”

“One instance of GPT-5 running 24/7 will be powerful,” he wrote, “but personally I think 100 instances sounds better.”

So we need to be prepared for more ‘AI headwinds’ for companies beyond OpenAI in this AI Tech Wave. OpenAI’s are being discussed today primarily because it is in a very visible pole position right now. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)