AI: Smaller 'Small Language Models' (SLMs) Emerging. RTZ #870

Regular AI: RTZ readers know I’ve long been highlighting the importance of both Large AND Small Language Models (LLMs and SLMs) in these early days of the AI Tech Wave.

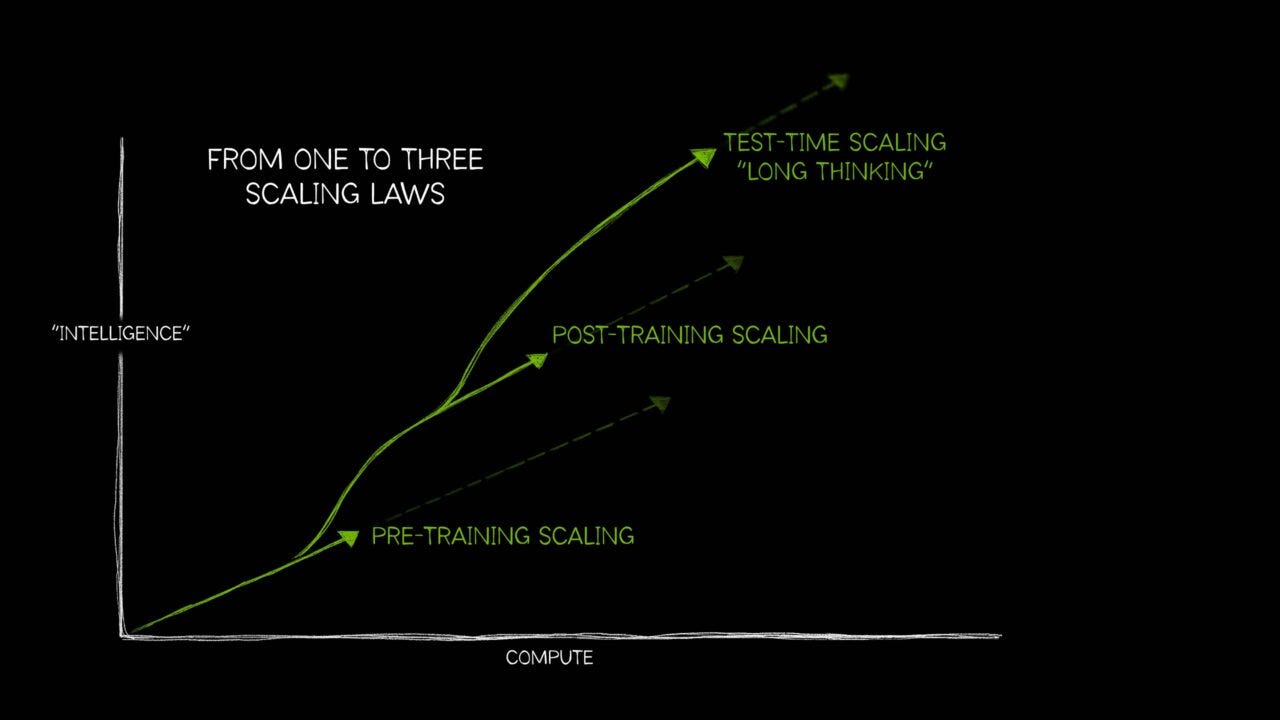

The major LLM AI companies have been focused on both ends for a while. But the majority of the industry’s AI Research attention, and more importantly its AI capex dollars, have been skewed toward the massive demands of ever-scaling LLM AI technologies.

This week saw some notable developments on the SLM side that are worth highlighting.

VentureBeat explains in “Samsung AI researcher’s new, open reasoning model TRM outperforms models 10,000X larger — on specific problems”:

“The trend of AI researchers developing new, small open source generative models that outperform far larger, proprietary peers continued this week with yet another staggering advancement.”

“Alexia Jolicoeur-Martineau, Senior AI Researcher at Samsung’s Advanced Institute of Technology (SAIT) in Montreal, Canada, has introduced the Tiny Recursion Model (TRM) — a neural network so small it contains just 7 million parameters (internal model settings), yet it competes with or surpasses cutting-edge language models 10,000 times larger in terms of their parameter count, including OpenAI’s o3-mini and Google’s Gemini 2.5 Pro, on some of the toughest reasoning benchmarks in AI research.”

This is a smaller Small Language Model (SLM) than we’ve seen to date.

“The goal is to show that very highly performant new AI models can be created affordably without massive investments in the graphics processing units (GPUs) and power needed to train the larger, multi-trillion parameter flagship models powering many LLM chatbots today. The results were described in a research paper published on open access website arxiv.org, entitled ‘Less is More: Recursive Reasoning with Tiny Networks.’”

“The idea that one must rely on massive foundational models trained for millions of dollars by some big corporation in order to solve hard tasks is a trap,” wrote Jolicoeur-Martineau on the social network X. “Currently, there is too much focus on exploiting LLMs rather than devising and expanding new lines of direction.”

“Jolicoeur-Martineau also added: ‘With recursive reasoning, it turns out that “less is more”. A tiny model pretrained from scratch, recursing on itself and updating its answers over time, can achieve a lot without breaking the bank.’”

And the code is available to examine and tinker with under a generous open source license:

“TRM’s code is available now on Github under an enterprise-friendly, commercially viable MIT License — meaning anyone from researchers to companies can take, modify it, and deploy it for their own purposes, even commercial applications.”

BUT, there is an important set of BUTs:

“However, readers should be aware that TRM was designed specifically to perform well on structured, visual, grid-based problems like Sudoku, mazes, and puzzles on the ARC (Abstraction and Reasoning Corpus)-AGI benchmark, the latter of which offers tasks that should be easy for humans but difficult for AI models, such as sorting colors on a grid based on a prior, but not identical, solution.”

The innovation here is useful to understand, as it affects the many emerging ways to Scale AIs:

“The TRM architecture represents a radical simplification.”

“It builds upon a technique called Hierarchical Reasoning Model (HRM) introduced earlier this year, which showed that small networks could tackle logical puzzles like Sudoku and mazes.”

“HRM relied on two cooperating networks—one operating at high frequency, the other at low—supported by biologically inspired arguments and mathematical justifications involving fixed-point theorems. Jolicoeur-Martineau found this unnecessarily complicated.”

At the risk of dipping our toes into somewhat deeper technical waters, here’s more on the novel technical approach, which matters especially given the rapid evolution of AI Reasoning and Agentic technologies:

“TRM strips these elements away. Instead of two networks, it uses a single two-layer model that recursively refines its own predictions.”

“The model begins with an embedded question and an initial answer, represented by variables x, y, and z. Through a series of reasoning steps, it updates its internal latent representation z and refines the answer y until it converges on a stable output. Each iteration corrects potential errors from the previous step, yielding a self-improving reasoning process without extra hierarchy or mathematical overhead.”
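For readers who want to see the shape of that loop, here is a minimal toy sketch in Python, based only on the description quoted above and not on the author’s released code. It assumes a single tiny two-layer network shared by both updates, combines inputs by simple element-wise addition, and uses hypothetical loop counts `n` (latent updates per round) and `T` (rounds). The weights are random and untrained, so it shows the data flow, not learned reasoning.

```python
import math
import random

# Toy sketch of TRM-style recursive refinement, based only on the quoted description.
# Assumptions (not from the paper's code): inputs are combined by element-wise addition,
# the width, hidden size, and loop counts n and T are illustrative, and the weights are
# random and untrained, so this shows the data flow rather than learned reasoning.

DIM = 4  # hypothetical width of the x, y and z vectors


def make_tiny_net(dim: int, hidden: int, rng: random.Random):
    """Build one shared two-layer network (tanh activations) with random weights."""
    w1 = [[rng.uniform(-0.3, 0.3) for _ in range(dim)] for _ in range(hidden)]
    w2 = [[rng.uniform(-0.3, 0.3) for _ in range(hidden)] for _ in range(dim)]

    def net(v: list[float]) -> list[float]:
        h = [math.tanh(sum(w * a for w, a in zip(row, v))) for row in w1]
        return [math.tanh(sum(w * a for w, a in zip(row, h))) for row in w2]

    return net


def add(*vecs: list[float]) -> list[float]:
    """Element-wise sum of equal-length vectors."""
    return [sum(vals) for vals in zip(*vecs)]


def recursive_refine(net, x, y, z, n: int = 6, T: int = 3):
    """Alternate n latent updates of z with one answer update of y, for T rounds."""
    calls = 0
    for _ in range(T):
        for _ in range(n):
            z = net(add(x, y, z))  # refine the latent state from question, answer, latent
            calls += 1
        y = net(add(y, z))  # revise the answer using the updated latent state
        calls += 1
    return y, z, calls


if __name__ == "__main__":
    rng = random.Random(0)
    net = make_tiny_net(DIM, hidden=8, rng=rng)
    x = [rng.uniform(-1, 1) for _ in range(DIM)]  # stand-in for an embedded question
    y, z, calls = recursive_refine(net, x, [0.0] * DIM, [0.0] * DIM)
    print(len(y), len(z), calls)  # 4 4 21
```

The point of the design is that the same `net` is called 21 times here, so the “depth” of the computation comes from repetition rather than from extra layers or extra parameters.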

“The core idea behind TRM is that recursion can substitute for depth and size.”
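A back-of-the-envelope way to see that trade-off: reusing the same weights raises the number of layers a signal passes through without adding a single parameter. The sketch below assumes a plain fully connected two-layer stack of hypothetical width 512 and illustrative `n` and `T` loop counts; these are not presented as TRM’s published settings.

```python
# Back-of-the-envelope sketch: reusing one network's weights increases the number of
# layers a signal passes through while the parameter count stays fixed.
# The widths and loop counts are illustrative assumptions, not TRM's published settings.


def mlp_params(widths: list[int]) -> int:
    """Weights plus biases of a fully connected stack, e.g. [512, 512, 512] = two layers."""
    return sum(a * b + b for a, b in zip(widths, widths[1:]))


def effective_depth(layers: int, n: int, T: int) -> int:
    """Layers traversed when a `layers`-deep net is called T * (n + 1) times."""
    return layers * T * (n + 1)


if __name__ == "__main__":
    widths = [512, 512, 512]  # hypothetical two-layer network
    print(mlp_params(widths))  # 525312, whatever the loop counts
    print(effective_depth(layers=2, n=1, T=1))  # 4
    print(effective_depth(layers=2, n=6, T=3))  # 42
```

Same 525,312 parameters in both cases; only the number of passes through them changes.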

All this means a tiny language model that punches way above its weight:

“Despite its small footprint, TRM delivers benchmark results that rival or exceed models millions of times larger. In testing, the model achieved:”

“87.4% accuracy on Sudoku-Extreme (up from 55% for HRM)”

“85% accuracy on Maze-Hard puzzles”

“45% accuracy on ARC-AGI-1”

“8% accuracy on ARC-AGI-2”

“These results surpass or closely match performance from several high-end large language models, including DeepSeek R1, Gemini 2.5 Pro, and o3-mini, despite TRM using less than 0.01% of their parameters.”
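For a sense of scale, the arithmetic behind “10,000 times larger” and “less than 0.01% of their parameters” is simple. The sketch below takes TRM’s stated 7 million parameters and compares it with hypothetical round-number model sizes, since the parameter counts of several of the named models are not public.

```python
# Sanity check of the "10,000x larger" and "less than 0.01% of their parameters" figures,
# using TRM's stated 7 million parameters. The comparison sizes (70B, 700B) are
# hypothetical round numbers, not the actual sizes of any named model.

TRM_PARAMS = 7_000_000


def share_of_params(small: int, large: int) -> float:
    """Return the small model's parameter count as a percentage of the large model's."""
    return 100.0 * small / large


if __name__ == "__main__":
    for large in (70_000_000_000, 700_000_000_000):  # hypothetical 70B and 700B models
        ratio = large / TRM_PARAMS
        share = share_of_params(TRM_PARAMS, large)
        print(f"{large:,} params: {ratio:,.0f}x larger, TRM is {share:.4f}% of its size")
```

In other words, a model exactly 10,000 times larger than TRM would have about 70 billion parameters, and anything bigger pushes TRM below the 0.01% mark.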

Yes, that DeepSeek from China, which changed the trajectory of the US/China AI race in a couple of hundred days. Today, most of the leading open source AI models are from China, with the prior global leader, Meta and its Llama models, surpassed on a number of fronts.

Now we’re seeing SLM innovation accelerate relative to LLM AI capabilities.

“Such results suggest that recursive reasoning, not scale, may be the key to handling abstract and combinatorial reasoning problems — domains where even top-tier generative models often stumble.”

At the very least, it attracts more AI Researcher attention to this end of the model spectrum:

“Supporters hailed TRM as proof that small models can outperform giants, calling it “10,000× smaller yet smarter” and a potential step toward architectures that think rather than merely scale.”

“Critics countered that TRM’s domain is narrow — focused on bounded, grid-based puzzles — and that its compute savings come mainly from size, not total runtime.”

“Researcher Yunmin Cha noted that TRM’s training depends on heavy augmentation and recursive passes: ‘more compute, same model.’”
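To make the critics’ point concrete, here is a rough sketch using the common rule of thumb that a forward pass costs about 2 FLOPs per parameter per token. The grid size, the number of recursive passes, and the 70B comparison model are all hypothetical values chosen for illustration, not figures from the paper or the article.

```python
# Rough illustration of "more compute, same model", using the common approximation that
# a forward pass costs about 2 FLOPs per parameter per token.
# The token count, pass count, and 70B comparison size are hypothetical.


def forward_flops(params: int, tokens: int, passes: int = 1) -> int:
    """Approximate forward-pass FLOPs: 2 * parameters * tokens * passes."""
    return 2 * params * tokens * passes


if __name__ == "__main__":
    tokens = 81  # hypothetical: one token per cell of a 9x9 Sudoku grid
    passes = 336  # hypothetical: e.g. 21 network calls x 16 refinement rounds
    tiny_one_pass = forward_flops(7_000_000, tokens)
    tiny_recursive = forward_flops(7_000_000, tokens, passes=passes)
    large_one_pass = forward_flops(70_000_000_000, tokens)
    print(tiny_recursive // tiny_one_pass)  # 336: recursion multiplies the tiny model's cost
    print(large_one_pass // tiny_recursive)  # 29: one 70B pass still costs more
```

Under these assumptions, recursion multiplies the tiny model’s cost several hundred times, yet a single pass of the much larger model still costs more. How that balance plays out on real workloads depends on details the article doesn’t quantify.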

“The consensus emerging online is that TRM may be narrow, but its message is broad: careful recursion, not constant expansion, could drive the next wave of reasoning research.”

The whole piece is worth a full read for much more detail, including the model’s limitations as well as its longer-term potential.

But the key point remains. In this AI Tech Wave, it’s important to concurrently pay attention to scaling BOTH Large and Small Language Model technology innovations and development.

More likely than not, the shifts that took decades in the move from mainframes to PCs to smartphones will play out in this AI Tech Wave in less than a decade.

We’re likely to see meaningful AI inference loads start to ramp on local computers, smartphones and AI devices, as well as in massive AI data centers in the cloud that draw huge amounts of Power. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)