AI: Weekly Summary. RTZ #458

-

OpenAI’s GPT-4o Fine-Tuning for businesses: OpenAI launched a feature long requested by developers: ‘fine-tuning’ for its state-of-the-art GPT-4o (Omni) LLM AI models. For now, it comes with a million free training tokens as an early trial for businesses. The release lets businesses use custom datasets to get higher performance, allowing them to customize the ‘structure and tone of the responses’. That means the models can better handle more specific use cases at lower cost. These capabilities complement the company’s push toward better AI Reasoning (code-named ‘Strawberry’) and Agentic workflows, using its current and future models. More on the ‘Strawberry’ Reasoning AI efforts here.

-

Meta’s AI Opportunities: As Meta continues to invest billions in its open-source, state-of-the-art Llama LLM AI models, data centers, technologies, and tools, questions abound about its AI strategy versus peers with big AI cloud businesses. Meanwhile, Meta continues to lead in using AI tools and technologies to help its massive base of advertisers better reach targeted users across its multi-billion-user Meta/Facebook base. But the question remains whether Meta can leverage its partnerships with leading cloud providers and share in the revenues from business use of its open-source models and technologies. More here.

-

AI Acqui-Hires and Investors: Tech companies and investors are anticipating more AI acqui-hires along the lines of the recent Microsoft/Inflection, Amazon/Adept, and Google/Character.ai deals. Lists of other AI tech companies that may be potential ‘acqui-hire’ targets are being drawn up. Regulators, of course, are also starting to focus on these transactions, with concerns that they circumvent normal tech M&A rules and regulations. Part of the impetus for these deals is the exponentially rising annual AI capex needed to keep Scaling AI models, data center Compute, and applications. More here.

-

AI Photos going from ‘deep-fakes’ to ‘far-and-wide fakes’: Google released its latest Pixel 9 smartphones, with better AI chips and memory, which offer cloud and on-device AI capabilities across a range of apps. In particular, these include computational, software-driven photo editing. Putting AI text-prompt-driven photo editing in the hands of billions of smartphone users is raising the industry question, ‘What is a photo?’ This in turn extends current concerns over AI deep-fake photos into the broader realm of photos that can be altered as easily as applying an Instagram filter a few years ago. Add this to the list of concerns over AI technologies as they barrel toward mainstream applications and uses. More here.

-

Elon’s xAI Grok-2 expands Guardrails: Elon Musk’s xAI released its latest Grok-2 LLM AI models with expanded text-to-image capabilities. Notable here are Grok-2’s more flexible guardrails compared with competing models. Media reports are already documenting some of the ‘noxious images’ possible with this release, along with concerns around copyright and other issues. Elon’s approach with Grok-2 widens the broader debates around ‘Freedom of Information’ and expression, among other concerns. And it raises questions about how the larger tech companies with competing LLM AI products may choose to respond. More here.

Other AI Readings for the weekend:

-

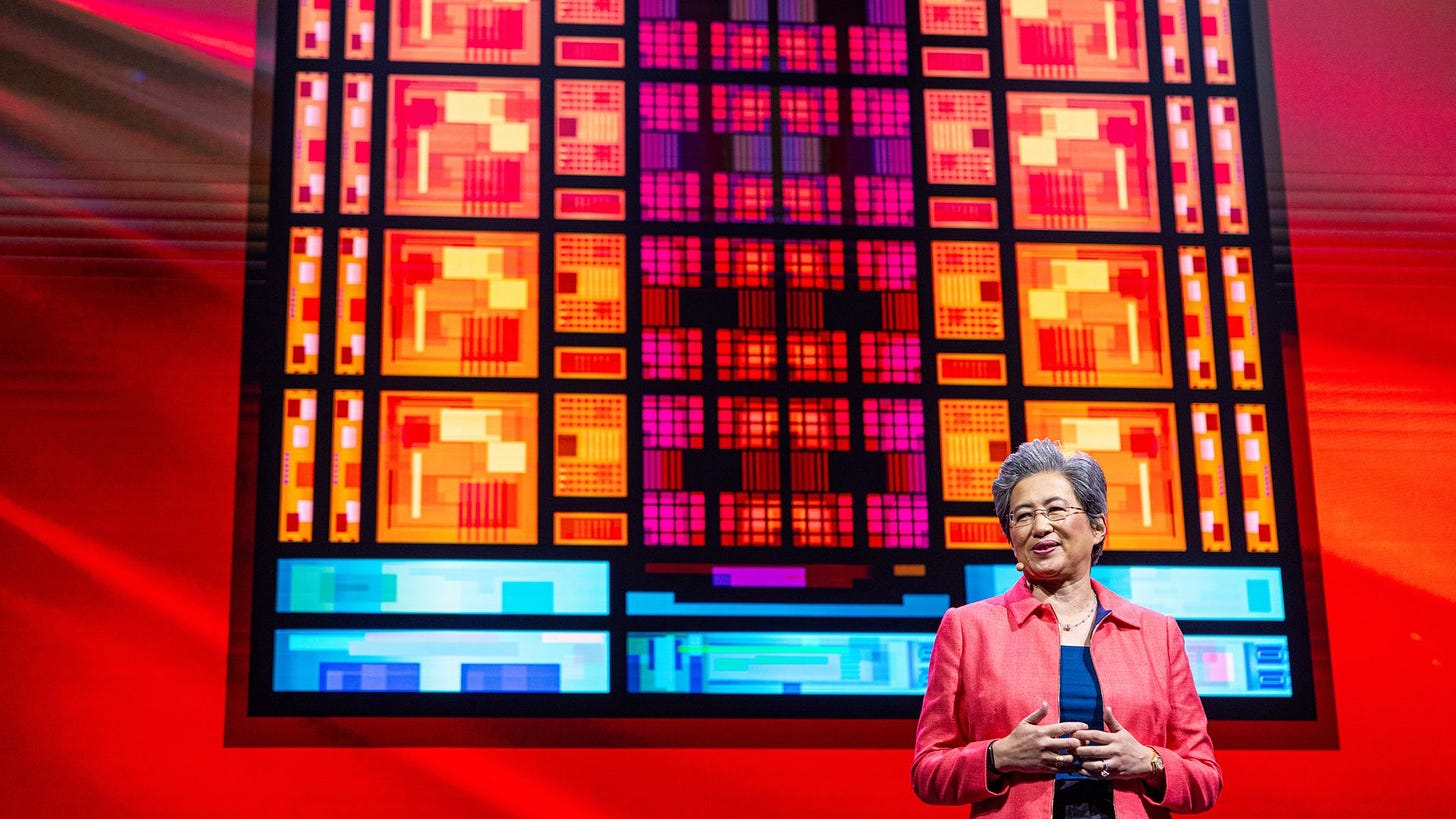

AMD’s $5 billion ZT Systems acquisition points to increased competition with Nvidia beyond ‘AI GPU chips’.

-

Anthropic’s prompt caching for its latest Claude LLM AI models continues to advance AI technologies toward better reasoning and agentic personalization.

Thanks for joining this Saturday with your beverage of choice.

Up next, the Sunday ‘The Bigger Picture’ tomorrow. Stay tuned.

(NOTE: The discussions here are for information purposes only, and not meant as investment advice at any time. Thanks for joining us here)