AI: Weekly Summary. RTZ #913

-

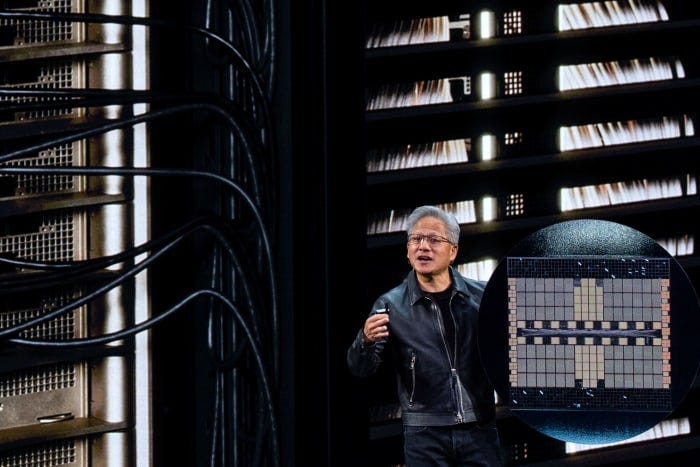

Nvidia’s Solid Q3 Results: Nvidia again beat investor expectations for Q3, with above-consensus guidance for Q4. Founder/CEO Jensen Huang emphatically stated that an ‘AI Bubble’ is not visible from his vantage point. The market, while briefly encouraged by the results, continues to worry about the rising number of ‘circular’ AI deals and a possible ‘AI Bubble’. Nvidia’s results underlined solid underlying demand for its Blackwell and upcoming Vera Rubin chips, with a backlog exceeding $500 billion over the coming years. This comes despite accelerating efforts by top customers to build their own AI GPUs, initially to supplement the top chips from Nvidia. It’s notable that Nvidia’s strong earnings results do not assume any further sales into China, the second-largest AI market in the world. More here.

-

Google Wows with Gemini 3: Google is, for now, taking the LLM AI crown amongst its peers with the well-received launch of the long-awaited Gemini 3. The model outperforms the competition on most benchmarks, while offering competitive AI token input and output pricing. Gemini comes in a variety of tiers from free to paid, with the highest capabilities available on the top tiers. Gemini leverages Google’s unique, vertically integrated AI infrastructure stack, with its in-house TPUs. Additionally, Gemini offers notable enhancements in end-user UI/UX capabilities. Overall, Gemini 3 puts Google in more of an AI leadership role than ever before. This is apparently being acknowledged within OpenAI for now, especially as Google is starting to roll out ads in AI Mode, addressing one of investors’ core, long-standing concerns. More here.

-

OpenAI ChatGPT 5.1 gets ‘Warmer’: OpenAI released a ‘warmer’ and ‘smarter’ version of ChatGPT in version 5.1. The new release is designed to be more conversational, adjusting the behavioral parameters that implicitly connect emotionally with mainstream users. It’s a strategy OpenAI has been leaning into with success, particularly with the text-to-video Sora 2 application recently. OpenAI learned about that user preference when it moved from ChatGPT 4 to 5, which had less of an emotional ‘personality’, and then had to roll the change back after user pushback. ChatGPT 5.1 also gets ‘instant’ and ‘thinking’ versions, the latter for complex AI reasoning, with better capabilities. All this is meant to push these models into more daily mainstream activities, as the new CEO of Applications, Fidji Simo, explained online. Humanizing AIs (aka anthropomorphizing) continues to be the winning strategy for now. More here.

-

OpenAI’s Fidji Simo Close-up: A new profile of OpenAI’s ‘CEO of Applications’ Fidji Simo describes how she is accelerating the company’s portfolio of AI applications and services. The piece provides additional context on the changing organization at OpenAI, with many new arrivals from Meta, one of her former companies. All this is accelerating after the company’s redrawn organizational structure, and amid the herculean efforts to build out trillions of dollars’ worth of AI Compute infrastructure. Fidji stresses the need for more AI Compute to deliver on the many AI application and service innovations underway at the company, and to do it while expanding markets. She also stresses that AI will require more people, not fewer. Also useful is more detail on how she plans to work closely with CEO Sam Altman, as he focuses on AI Research and Compute. More here.

-

From LLM AIs to ‘World Models’: This week saw more AI companies forging a path from LLM AIs to ‘World Models’ that have more intrinsic knowledge and data on how the physical world works. Cases in point were a new AI startup planned by Meta’s Chief AI Scientist Yann LeCun, and uber AI Researcher Fei-Fei Li’s new world model startup, along with similar efforts by Google and others. We’re also seeing efforts underway on this front in China, with Tencent and others focused on the opportunity. These new models will require a different approach to acquiring data at scale, for both training and inference purposes. Much of it will likely have to be produced in synthetic form via simulations, which is a different requirement than the data for current LLM AIs. These efforts are particularly critical for the faster evolution of humanoid robots and better self-driving cars. More here.

Other AI Readings for the weekend:

-

Google manages the technical feat of bringing Apple AirDrop to Android smartphones with Quick Share. More here.

-

Google improves ‘Nano Banana’ AI image editor, accelerating AI video race. More here.

(Additional Note: For more weekend AI listening, I have a new podcast series on AI, from a Gen Z to Boomer perspective. It’s called AI Ramblings. Now 30 weekly Episodes and counting. More with the latest AI Ramblings Episode 30 on AI issues of the day, as well as our latest ‘Reads’ and ‘Obsessions’ of the Week. Co-hosted with my Gen Z nephew Neal Makwana.)

Up next, the Sunday ‘The Bigger Picture’ tomorrow. Stay tuned.

(NOTE: The discussions here are for informational purposes only, and are not meant as investment advice at any time. Thanks for joining us here.)